@AIBot please continue

With that in place, you can import the PySpark library directly in your Python environment and use it. For example:

from pyspark.sql import SparkSession

spark = SparkSession.builder.appName('example-app').getOrCreate()

df = spark.read.option('header',True).csv('/path/to/data.csv')

df.show()

You can also put the jar in a directory that the Python environment can read, and then specify the jar's path when creating the SparkContext. For example:

from pyspark import SparkContext, SparkConf

conf = SparkConf().setAppName('app_name').setMaster('local').set('spark.jars', '/path/to/xxx.jar')

sc = SparkContext(conf=conf)

The --jars command-line option of spark-submit and pyspark corresponds to this same spark.jars property, so the configuration above already covers it.

If you need it in a SparkSession, add the setting to a SparkConf and pass that to the SparkSession:

from pyspark.sql import SparkSession

from pyspark import SparkConf

conf = SparkConf().setAppName('app_name').setMaster('local').set('spark.jars', '/path/to/xxx.jar')

spark = SparkSession.builder.config(conf=conf).getOrCreate()

Or set spark.jars (the property behind --jars) directly on the builder:

from pyspark.sql import SparkSession

spark = SparkSession.builder.appName('app_name').config('spark.jars', '/path/to/xxx.jar').getOrCreate()

This way, when using PySpark you can call the SparkSession API directly and get the SparkContext through spark.sparkContext, with the specified jar available.
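A minimal sketch of passing more than one jar through spark.jars, assuming the property takes a comma-separated list as Spark's configuration documentation describes. The helper names `join_jars` and `build_session` and the paths are hypothetical; PySpark is imported lazily so the joining logic can be tried without a cluster.

```python
def join_jars(paths):
    """Join jar paths into the comma-separated form used by spark.jars."""
    cleaned = [p.strip() for p in paths if p and p.strip()]
    return ",".join(cleaned)


def build_session(app_name, jar_paths):
    """Create a SparkSession with the given jars (requires PySpark)."""
    from pyspark.sql import SparkSession  # imported lazily on purpose

    return (
        SparkSession.builder
        .appName(app_name)
        .config("spark.jars", join_jars(jar_paths))
        .getOrCreate()
    )
```

After `spark = build_session("app_name", ["/path/to/xxx.jar"])`, calling `spark.sparkContext.getConf().get("spark.jars")` shows the value that was actually applied.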

Is this what you mean? I tried this approach and still get the original error.

from pyspark import SparkConf, SparkContext

conf = SparkConf().setAppName("MyApp").setMaster('spark://192.168.1.230:7077')\

.set("--driver-class-path", "/root/download/nebula-spark-connector.jar")\

.set("--driver-class-path", r"D:\PycharmProjects\pythonProject2\download\nebula-spark-connector.jar")\

.set("--driver-class-path", "/root/download/nebula-algo.jar")\

.set("--jars", "/root/download/nebula-spark-connector.jar")\

.set("--jars", r"D:\PycharmProjects\pythonProject2\download\nebula-spark-connector.jar")\

.set("--jars", "/root/download/nebula-algo.jar")

# sc = SparkContext(conf=conf)

spark = SparkSession.builder.config(conf=conf).getOrCreate()

# print(sc)

df = spark.read.format(

"com.vesoft.nebula.connector.NebulaDataSource").option(

"type", "vertex").option(

"spaceName", "demo").option(

"label", "player").option(

"returnCols", "name,age").option(

"metaAddress", "192.168.1.230:33473").option(

"partitionNumber", 1).load()

df.show(n=2)

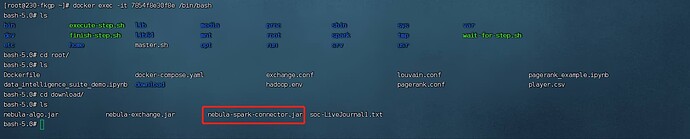

Run result

E:\anaconda\envs\successful\python.exe D:/PycharmProjects/pythonProject2/1.py

Warning: Ignoring non-Spark config property: --jars

Warning: Ignoring non-Spark config property: --driver-class-path

Using Spark's default log4j profile: org/apache/spark/log4j-defaults.properties

Setting default log level to "WARN".

To adjust logging level use sc.setLogLevel(newLevel). For SparkR, use setLogLevel(newLevel).

Traceback (most recent call last):

File "D:/PycharmProjects/pythonProject2/1.py", line 61, in <module>

"partitionNumber", 1).load()

File "D:\spark\spark-2.4.5-bin-hadoop2.7\spark-2.4.5-bin-hadoop2.7\python\pyspark\sql\readwriter.py", line 172, in load

return self._df(self._jreader.load())

File "E:\anaconda\envs\successful\lib\site-packages\py4j\java_gateway.py", line 1257, in __call__

answer, self.gateway_client, self.target_id, self.name)

File "D:\spark\spark-2.4.5-bin-hadoop2.7\spark-2.4.5-bin-hadoop2.7\python\pyspark\sql\utils.py", line 63, in deco

return f(*a, **kw)

File "E:\anaconda\envs\successful\lib\site-packages\py4j\protocol.py", line 328, in get_return_value

format(target_id, ".", name), value)

py4j.protocol.Py4JJavaError: An error occurred while calling o51.load.

: java.lang.ClassNotFoundException: Failed to find data source: com.vesoft.nebula.connector.NebulaDataSource. Please find packages at http://spark.apache.org/third-party-projects.html

at org.apache.spark.sql.execution.datasources.DataSource$.lookupDataSource(DataSource.scala:657)

at org.apache.spark.sql.DataFrameReader.load(DataFrameReader.scala:194)

at org.apache.spark.sql.DataFrameReader.load(DataFrameReader.scala:167)

at sun.reflect.NativeMethodAccessorImpl.invoke0(Native Method)

at sun.reflect.NativeMethodAccessorImpl.invoke(NativeMethodAccessorImpl.java:62)

at sun.reflect.DelegatingMethodAccessorImpl.invoke(DelegatingMethodAccessorImpl.java:43)

at java.lang.reflect.Method.invoke(Method.java:498)

at py4j.reflection.MethodInvoker.invoke(MethodInvoker.java:244)

at py4j.reflection.ReflectionEngine.invoke(ReflectionEngine.java:357)

at py4j.Gateway.invoke(Gateway.java:282)

at py4j.commands.AbstractCommand.invokeMethod(AbstractCommand.java:132)

at py4j.commands.CallCommand.execute(CallCommand.java:79)

at py4j.GatewayConnection.run(GatewayConnection.java:238)

at java.lang.Thread.run(Thread.java:748)

Caused by: java.lang.ClassNotFoundException: com.vesoft.nebula.connector.NebulaDataSource.DefaultSource

at java.net.URLClassLoader.findClass(URLClassLoader.java:381)

at java.lang.ClassLoader.loadClass(ClassLoader.java:424)

at java.lang.ClassLoader.loadClass(ClassLoader.java:357)

at org.apache.spark.sql.execution.datasources.DataSource$$anonfun$20$$anonfun$apply$12.apply(DataSource.scala:634)

at org.apache.spark.sql.execution.datasources.DataSource$$anonfun$20$$anonfun$apply$12.apply(DataSource.scala:634)

at scala.util.Try$.apply(Try.scala:192)

at org.apache.spark.sql.execution.datasources.DataSource$$anonfun$20.apply(DataSource.scala:634)

at org.apache.spark.sql.execution.datasources.DataSource$$anonfun$20.apply(DataSource.scala:634)

at scala.util.Try.orElse(Try.scala:84)

at org.apache.spark.sql.execution.datasources.DataSource$.lookupDataSource(DataSource.scala:634)

... 13 more

Process finished with exit code 1

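This approach means the same thing as the one above and has the same effect. The only difference is how the configuration is supplied. If you only need to set a single option, you can use the config method; if you need to set several, you can create a SparkConf object first and pass it to the SparkSession with SparkSession.builder.config(conf=conf).

The error says that the class com.vesoft.nebula.connector.NebulaDataSource cannot be found. This may be because the path for spark.jars (or --jars) was not set correctly on the SparkConf, so the program cannot find the dependency.

First check that the path is correct; using an absolute path avoids path problems. If the path is correct, it may be a version incompatibility, so try the jar that matches your versions.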
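The log above prints `Warning: Ignoring non-Spark config property: --jars` (and the same for `--driver-class-path`), which suggests the command-line flags were passed as SparkConf keys and then dropped. A sketch under that assumption: SparkConf keys need to be `spark.*` properties, `--jars` corresponds to `spark.jars`, and `--driver-class-path` corresponds to `spark.driver.extraClassPath`. The helper name `translate_flags` is hypothetical. It also merges repeated keys, since calling `.set()` several times with the same key keeps only the last value.

```python
import os

# Command-line flags, the Spark properties they correspond to,
# and the separator each property uses for multiple entries.
FLAG_TO_PROPERTY = {
    "--jars": ("spark.jars", ","),
    "--driver-class-path": ("spark.driver.extraClassPath", os.pathsep),
}


def translate_flags(pairs):
    """Map (key, value) pairs to spark.* properties, merging repeated flags."""
    props = {}
    for key, value in pairs:
        if key in FLAG_TO_PROPERTY:
            prop, sep = FLAG_TO_PROPERTY[key]
            props[prop] = props[prop] + sep + value if prop in props else value
        elif key.startswith("spark."):
            props[key] = value
        else:
            # Spark would warn about this key and ignore it.
            raise ValueError("not a Spark property: %r" % key)
    return props
```

The resulting dict can be applied with `SparkConf().setAll(list(props.items()))`. Only paths that exist on the machine running the driver can be resolved there, so a driver started from Windows would presumably need the `D:\...` copy of the jar rather than the `/root/...` one.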

spark: 2.4.7, 2.4.8

nebula Connector 3.x

Should my local Spark match the versions above as well, including Hadoop?

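Yes, it's 2.4.4. I misremembered; the actual version number is one lower than the number on the container. And of course they need to match.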

./nebula-pyspark.sh

Python 2.7.16 (default, Jan 14 2020, 07:22:06)

[GCC 8.3.0] on linux2

Type "help", "copyright", "credits" or "license" for more information.

SLF4J: Class path contains multiple SLF4J bindings.

SLF4J: Found binding in [jar:file:/root/download/nebula-spark-connector.jar!/org/slf4j/impl/StaticLoggerBinder.class]

SLF4J: Found binding in [jar:file:/spark/jars/slf4j-log4j12-1.7.16.jar!/org/slf4j/impl/StaticLoggerBinder.class]

SLF4J: See http://www.slf4j.org/codes.html#multiple_bindings for an explanation.

SLF4J: Actual binding is of type [org.slf4j.impl.Log4jLoggerFactory]

23/03/08 06:37:25 WARN NativeCodeLoader: Unable to load native-hadoop library for your platform... using builtin-java classes where applicable

Using Spark's default log4j profile: org/apache/spark/log4j-defaults.properties

Setting default log level to "WARN".

To adjust logging level use sc.setLogLevel(newLevel). For SparkR, use setLogLevel(newLevel).

Welcome to

      ____              __
     / __/__  ___ _____/ /__
    _\ \/ _ \/ _ `/ __/  '_/
   /__ / .__/\_,_/_/ /_/\_\   version 2.4.4
      /_/

Using Python version 2.7.16 (default, Jan 14 2020 07:22:06)

SparkSession available as 'spark'.

>>>
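The banner above reports Spark 2.4.4 on the server, while the earlier traceback paths point at a local spark-2.4.5-bin-hadoop2.7. A small sketch for comparing two such version strings; the helper names `parse_version` and `versions_match` are hypothetical, and whether a patch-level difference actually matters for the connector is not something this check can tell you.

```python
def parse_version(text):
    """Parse '2.4.4' into (2, 4, 4), ignoring a suffix such as '-SNAPSHOT'."""
    core = text.strip().split("-")[0]
    return tuple(int(part) for part in core.split("."))


def versions_match(local, cluster, parts=3):
    """Compare the first `parts` components of two version strings."""
    return parse_version(local)[:parts] == parse_version(cluster)[:parts]
```

The local value is available as `pyspark.__version__`, and `spark.version` on a running session reports the Spark version of that session.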

The Python version needs to match the server's too, right?

That doesn't seem to be strictly required, but pyspark does have a supported range. Mine is Python 2.7; after export PYSPARK_PYTHON=python3 it can use python3 as well. See my jupyter notebook for reference.
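When the script is started from an IDE rather than a shell, the same variable can be set from Python. A minimal sketch, assuming it runs before the SparkContext or SparkSession is created; the helper names are hypothetical, while PYSPARK_PYTHON is the variable named above. PySpark refuses to run when the driver and the workers use different Python minor versions, so the second helper prints what the driver side is using.

```python
import os
import sys


def use_python_for_pyspark(executable="python3"):
    """Tell PySpark which interpreter to launch for its Python workers.

    Call this before creating the SparkContext / SparkSession.
    """
    os.environ["PYSPARK_PYTHON"] = executable
    return executable


def driver_python_version():
    """Return the major.minor version of the interpreter running this script."""
    return "%d.%d" % sys.version_info[:2]
```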

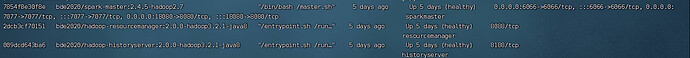

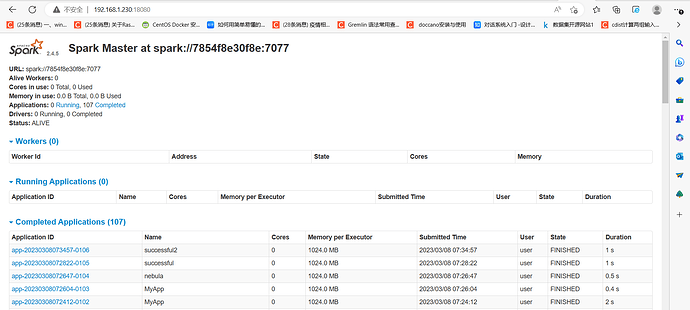

I can see the job I submitted in the Spark UI, but it just cannot reach meta.

from pyspark import SparkConf, SparkContext

conf = SparkConf().setAppName("successful2").setMaster('spark://192.168.1.230:7077')\

.set("--driver-class-path", "/root/download/nebula-spark-connector.jar")\

.set("--driver-class-path", r"D:\PycharmProjects\pythonProject2\path_to\nebula-spark-connector-3.0.0.jar")\

.set("--driver-class-path", "/root/download/nebula-algo.jar")\

.set("--jars", "/root/download/nebula-spark-connector.jar")\

.set("--jars", r"D:\PycharmProjects\pythonProject2\path_to\nebula-spark-connector-3.0.0.jar")\

.set("--jars", "/root/download/nebula-algo.jar")

# spark = SparkContext(conf=conf)

# print(spark)

spark = SparkSession.builder.config(conf=conf).getOrCreate()

print(spark)

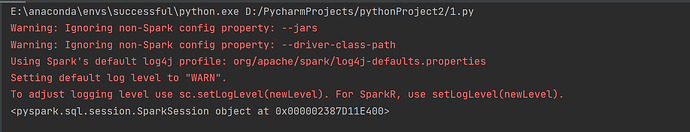

Run result

It also shows up in the Spark UI

But when I try to read from meta, I get an error

from pyspark import SparkConf, SparkContext

conf = SparkConf().setAppName("successful2").setMaster('spark://192.168.1.230:7077')\

.set("--driver-class-path", "/root/download/nebula-spark-connector.jar")\

.set("--driver-class-path", r"D:\PycharmProjects\pythonProject2\path_to\nebula-spark-connector-3.0.0.jar")\

.set("--driver-class-path", "/root/download/nebula-algo.jar")\

.set("--jars", "/root/download/nebula-spark-connector.jar")\

.set("--jars", r"D:\PycharmProjects\pythonProject2\path_to\nebula-spark-connector-3.0.0.jar")\

.set("--jars", "/root/download/nebula-algo.jar")

# spark = SparkContext(conf=conf)

# print(spark)

spark = SparkSession.builder.config(conf=conf).getOrCreate()

print(spark)

df = spark.read.format(

"com.vesoft.nebula.connector.NebulaDataSource").option(

"type", "vertex").option(

"spaceName", "demo").option(

"label", "player").option(

"returnCols", "name,age").option(

"metaAddress", "192.168.1.230:33473").option(

"partitionNumber", 1).load()

df.show(n=2)

Error message

E:\anaconda\envs\successful\python.exe D:/PycharmProjects/pythonProject2/1.py

Warning: Ignoring non-Spark config property: --jars

Warning: Ignoring non-Spark config property: --driver-class-path

Using Spark's default log4j profile: org/apache/spark/log4j-defaults.properties

Setting default log level to "WARN".

To adjust logging level use sc.setLogLevel(newLevel). For SparkR, use setLogLevel(newLevel).

<pyspark.sql.session.SparkSession object at 0x00000298EA03E4E0>

Traceback (most recent call last):

File "D:/PycharmProjects/pythonProject2/1.py", line 64, in <module>

"partitionNumber", 1).load()

File "D:\spark\spark-2.4.5-bin-hadoop2.7\spark-2.4.5-bin-hadoop2.7\python\pyspark\sql\readwriter.py", line 172, in load

return self._df(self._jreader.load())

File "E:\anaconda\envs\successful\lib\site-packages\py4j\java_gateway.py", line 1257, in __call__

answer, self.gateway_client, self.target_id, self.name)

File "D:\spark\spark-2.4.5-bin-hadoop2.7\spark-2.4.5-bin-hadoop2.7\python\pyspark\sql\utils.py", line 63, in deco

return f(*a, **kw)

File "E:\anaconda\envs\successful\lib\site-packages\py4j\protocol.py", line 328, in get_return_value

format(target_id, ".", name), value)

py4j.protocol.Py4JJavaError: An error occurred while calling o51.load.

: java.lang.ClassNotFoundException: Failed to find data source: com.vesoft.nebula.connector.NebulaDataSource. Please find packages at http://spark.apache.org/third-party-projects.html

at org.apache.spark.sql.execution.datasources.DataSource$.lookupDataSource(DataSource.scala:657)

at org.apache.spark.sql.DataFrameReader.load(DataFrameReader.scala:194)

at org.apache.spark.sql.DataFrameReader.load(DataFrameReader.scala:167)

at sun.reflect.NativeMethodAccessorImpl.invoke0(Native Method)

at sun.reflect.NativeMethodAccessorImpl.invoke(NativeMethodAccessorImpl.java:62)

at sun.reflect.DelegatingMethodAccessorImpl.invoke(DelegatingMethodAccessorImpl.java:43)

at java.lang.reflect.Method.invoke(Method.java:498)

at py4j.reflection.MethodInvoker.invoke(MethodInvoker.java:244)

at py4j.reflection.ReflectionEngine.invoke(ReflectionEngine.java:357)

at py4j.Gateway.invoke(Gateway.java:282)

at py4j.commands.AbstractCommand.invokeMethod(AbstractCommand.java:132)

at py4j.commands.CallCommand.execute(CallCommand.java:79)

at py4j.GatewayConnection.run(GatewayConnection.java:238)

at java.lang.Thread.run(Thread.java:748)

Caused by: java.lang.ClassNotFoundException: com.vesoft.nebula.connector.NebulaDataSource.DefaultSource

at java.net.URLClassLoader.findClass(URLClassLoader.java:381)

at java.lang.ClassLoader.loadClass(ClassLoader.java:424)

at java.lang.ClassLoader.loadClass(ClassLoader.java:357)

at org.apache.spark.sql.execution.datasources.DataSource$$anonfun$20$$anonfun$apply$12.apply(DataSource.scala:634)

at org.apache.spark.sql.execution.datasources.DataSource$$anonfun$20$$anonfun$apply$12.apply(DataSource.scala:634)

at scala.util.Try$.apply(Try.scala:192)

at org.apache.spark.sql.execution.datasources.DataSource$$anonfun$20.apply(DataSource.scala:634)

at org.apache.spark.sql.execution.datasources.DataSource$$anonfun$20.apply(DataSource.scala:634)

at scala.util.Try.orElse(Try.scala:84)

at org.apache.spark.sql.execution.datasources.DataSource$.lookupDataSource(DataSource.scala:634)

... 13 more

Process finished with exit code 1

My local Spark now matches the server's version as well, and pyspark is used directly from spark/python/pyspark.
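The traceback above is a ClassNotFoundException rather than a connection error, so the read seems to fail before anything contacts meta. To rule out the network separately, here is a sketch of a plain TCP check against the metaAddress value from the script. The helper names are hypothetical, and the check only covers the machine it runs on; the Spark executors would need to reach the same address too.

```python
import socket


def split_address(address):
    """Split 'host:port' into (host, port)."""
    host, _, port = address.rpartition(":")
    return host, int(port)


def can_connect(address, timeout=3.0):
    """Return True if a TCP connection to 'host:port' can be opened."""
    host, port = split_address(address)
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        # Covers refused connections, timeouts and unreachable hosts.
        return False
```

For example, `can_connect("192.168.1.230:33473")` run from the Windows machine would show whether that port is reachable from there.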
