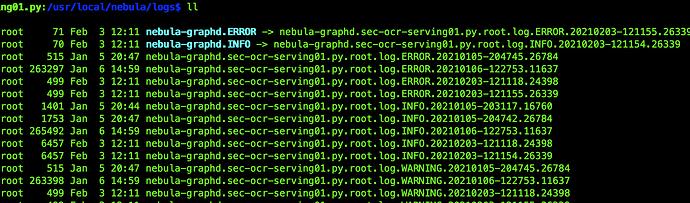

The server-side logs are in the `logs` directory under the installation directory.

Well, that's an awkward one.

Please open a separate post for the problem where your graph service starts up and then crashes again.

P.S. The logs are in the directory you configured yourself. You set `--log_dir=/logs`.
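Since `--log_dir` is a path on the local filesystem, each daemon writes its logs on the machine where it runs, so storaged logs should be looked for on the storage hosts, not only on the node running metad. A minimal sketch for listing them on one host, assuming glog-style file names such as `nebula-storaged.INFO` (the naming pattern and the helper names `is_service_log` / `find_service_logs` are hypothetical; adjust the match to what you actually see on disk):

```python
from pathlib import Path
from typing import List

def is_service_log(file_name: str, service: str = "storaged") -> bool:
    # Assumption: glog-style names such as "nebula-storaged.INFO" or
    # "nebula-storaged.ERROR"; change this test if your files differ.
    return service in file_name

def find_service_logs(log_dir: str, service: str = "storaged") -> List[str]:
    """Return sorted paths of files under log_dir that look like `service` logs."""
    root = Path(log_dir)
    if not root.is_dir():
        return []  # directory missing on this host (e.g. no storaged here)
    return sorted(
        str(p) for p in root.rglob("*")
        if p.is_file() and is_service_log(p.name, service)
    )
```

For the setup in this thread you would call `find_service_logs("/logs")` on every host that runs storaged.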

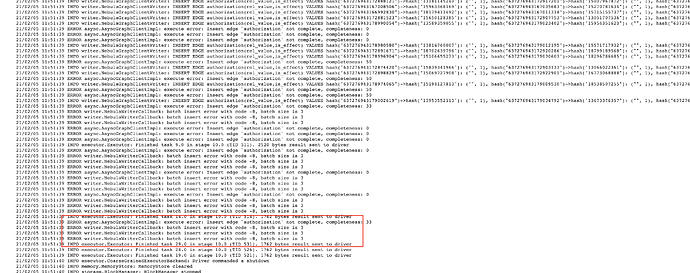

21/02/04 23:28:17 ERROR async.AsyncGraphClientImpl: execute error: Insert vertex not complete, completeness: 0

What's the problem this time?

21/02/04 23:28:16 INFO storage.ShuffleBlockFetcherIterator: Started 1 remote fetches in 17 ms

21/02/04 23:28:16 INFO codegen.CodeGenerator: Code generated in 14.938376 ms

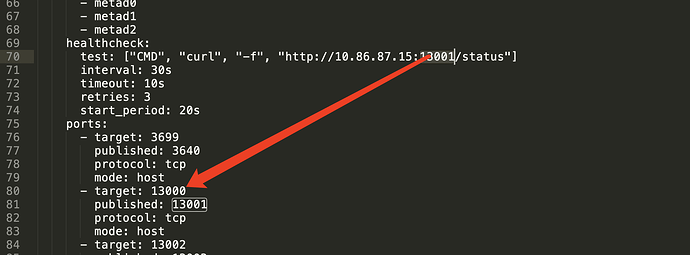

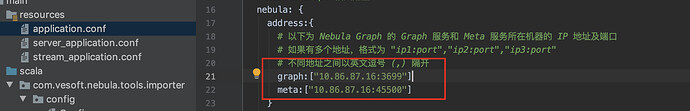

21/02/04 23:28:16 INFO writer.NebulaGraphClientWriter: Connection to List(10.86.87.15:3699)

21/02/04 23:28:16 INFO writer.NebulaGraphClientWriter: Connection to List(10.86.87.15:3699)

21/02/04 23:28:16 INFO writer.NebulaGraphClientWriter: Connection to List(10.86.87.15:3699)

21/02/04 23:28:16 INFO writer.NebulaGraphClientWriter: Connection to List(10.86.87.15:3699)

21/02/04 23:28:16 INFO writer.NebulaGraphClientWriter: Connection to List(10.86.87.15:3699)

21/02/04 23:28:16 INFO writer.NebulaGraphClientWriter: INSERT VERTEX user(user_id,create_time) VALUES hash("637294531820027474"): ("637294531820027474", "2020-12-18 18:01:27")

21/02/04 23:28:16 INFO writer.NebulaGraphClientWriter: INSERT VERTEX user(user_id,create_time) VALUES hash("637294531820052593"): ("637294531820052593", "2020-12-18 18:16:40")

21/02/04 23:28:16 INFO writer.NebulaGraphClientWriter: INSERT VERTEX user(user_id,create_time) VALUES hash("637294531820039679"): ("637294531820039679", "2020-12-18 18:08:25")

21/02/04 23:28:16 INFO writer.NebulaGraphClientWriter: INSERT VERTEX user(user_id,create_time) VALUES hash("637294531820049752"): ("637294531820049752", "2020-12-18 18:09:57")

21/02/04 23:28:16 INFO writer.NebulaGraphClientWriter: INSERT VERTEX user(user_id,create_time) VALUES hash("637294531820032771"): ("637294531820032771", "2020-12-18 18:14:04")

21/02/04 23:28:17 ERROR async.AsyncGraphClientImpl: execute error: Insert vertex not complete, completeness: 0

21/02/04 23:28:17 ERROR async.AsyncGraphClientImpl: execute error: Insert vertex not complete, completeness: 0

21/02/04 23:28:17 ERROR writer.NebulaWriterCallback: batch insert error with code -8, batch size is 1

21/02/04 23:28:17 ERROR writer.NebulaWriterCallback: batch insert error with code -8, batch size is 1

21/02/04 23:28:17 ERROR async.AsyncGraphClientImpl: execute error: Insert vertex not complete, completeness: 0

21/02/04 23:28:17 ERROR writer.NebulaWriterCallback: batch insert error with code -8, batch size is 1

21/02/04 23:28:17 INFO executor.Executor: Finished task 29.0 in stage 2.0 (TID 392). 1725 bytes result sent to driver

21/02/04 23:28:17 INFO executor.Executor: Finished task 1.0 in stage 2.0 (TID 376). 1725 bytes result sent to driver

21/02/04 23:28:17 INFO executor.Executor: Finished task 9.0 in stage 2.0 (TID 384). 1725 bytes result sent to driver

21/02/04 23:28:17 INFO executor.Executor: Finished task 5.0 in stage 2.0 (TID 380). 2483 bytes result sent to driver

21/02/04 23:28:17 INFO executor.Executor: Finished task 25.0 in stage 2.0 (TID 388). 1725 bytes result sent to driver

21/02/04 23:29:18 ERROR executor.CoarseGrainedExecutorBackend: RECEIVED SIGNAL TERM

21/02/04 23:29:18 INFO storage.DiskBlockManager: Shutdown hook called

21/02/04 23:29:18 INFO util.ShutdownHookManager: Shutdown hook called
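To see how much data was actually rejected in a log like the one above, a small sketch that tallies the `batch insert error with code N, batch size is M` lines. The regex is written against the exact lines quoted here; the thread does not say what code `-8` means, so the sketch only counts it. The function name `failed_rows_by_code` is hypothetical.

```python
import re
from collections import Counter
from typing import Dict, Iterable, Tuple

# Matches lines such as:
# "... ERROR writer.NebulaWriterCallback: batch insert error with code -8, batch size is 1"
BATCH_ERR = re.compile(r"batch insert error with code (-?\d+), batch size is (\d+)")

def failed_rows_by_code(lines: Iterable[str]) -> Tuple[Dict[int, int], int]:
    """Return ({error_code: number_of_failed_batches}, total_failed_rows)."""
    batches: Counter = Counter()
    rows = 0
    for line in lines:
        m = BATCH_ERR.search(line)
        if m:
            code, size = int(m.group(1)), int(m.group(2))
            batches[code] += 1
            rows += size
    return dict(batches), rows
```

Passing an open executor log file works too, e.g. `failed_rows_by_code(open(path))`. On the excerpt above it reports three failed batches of one row each, all with code `-8`.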

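Check the storaged service logs. One possible cause is that the batch used when importing data is too large, which puts excessive pressure on the partitions.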
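To make the batch-size point concrete, a sketch of how rows can be grouped into multi-row `INSERT VERTEX` statements. It reuses the statement shape from the log above (`user(user_id,create_time)` with `hash(...)` VIDs); the helpers and the `batch_size` parameter are hypothetical and are not the importer's actual code. A smaller `batch_size` yields more statements with fewer rows each, so each request carries less data.

```python
from typing import Iterator, List, Sequence, Tuple

Row = Tuple[str, str]  # (user_id, create_time), as in the log lines above

def chunks(rows: Sequence[Row], batch_size: int) -> Iterator[Sequence[Row]]:
    """Split rows into consecutive slices of at most batch_size rows."""
    if batch_size <= 0:
        raise ValueError("batch_size must be positive")
    for start in range(0, len(rows), batch_size):
        yield rows[start:start + batch_size]

def build_insert(batch: Sequence[Row]) -> str:
    # Assumes values contain no double quotes; real data would need escaping.
    values = ", ".join(
        f'hash("{uid}"): ("{uid}", "{ts}")' for uid, ts in batch
    )
    return f"INSERT VERTEX user(user_id,create_time) VALUES {values}"

def build_statements(rows: Sequence[Row], batch_size: int) -> List[str]:
    """One INSERT statement per batch."""
    return [build_insert(b) for b in chunks(rows, batch_size)]
```

With the five rows from the log, `batch_size=2` gives three statements and `batch_size=5` gives one; `batch_size=1` reproduces the single-row statements that were logged.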

1. Importing a large volume of data reports a timeout:

21/02/04 19:48:32 ERROR YarnScheduler: Lost executor 55 on bigdata-nmg-hdp1343.nmg01.diditaxi.com: Container marked as failed: container_e21_1612237606203_1613145_01_000065 on host: bigdata-nmg-hdp1343.nmg01.diditaxi.com. Exit status: 50. Diagnostics: Exception from container-launch.

Container id: container_e21_1612237606203_1613145_01_000065

Exit code: 50

Stack trace: ExitCodeException exitCode=50:

at org.apache.hadoop.util.Shell.runCommand(Shell.java:545)

at org.apache.hadoop.util.Shell.run(Shell.java:456)

at org.apache.hadoop.util.Shell$ShellCommandExecutor.execute(Shell.java:722)

at org.apache.hadoop.yarn.server.nodemanager.LinuxContainerExecutor.launchContainer(LinuxContainerExecutor.java:372)

at org.apache.hadoop.yarn.server.nodemanager.containermanager.launcher.ContainerLaunch.call(ContainerLaunch.java:310)

at org.apache.hadoop.yarn.server.nodemanager.containermanager.launcher.ContainerLaunch.call(ContainerLaunch.java:85)

at java.util.concurrent.FutureTask.run(FutureTask.java:266)

at java.util.concurrent.ThreadPoolExecutor.runWorker(ThreadPoolExecutor.java:1142)

at java.util.concurrent.ThreadPoolExecutor$Worker.run(ThreadPoolExecutor.java:617)

at java.lang.Thread.run(Thread.java:745)

Shell output: main : command provided 1

main : user is yarn

main : requested yarn user is prod_jc_antispam

Container exited with a non-zero exit code 50. Last 4096 bytes of stderr :

ete, completeness: 33

21/02/04 19:48:19 ERROR entry.AbstractNebulaCallback: onError: java.util.concurrent.TimeoutException: Operation class com.vesoft.nebula.graph.GraphService$AsyncClient$execute_call timed out after 3015 ms.

java.util.concurrent.TimeoutException: Operation class com.vesoft.nebula.graph.GraphService$AsyncClient$execute_call timed out after 3015 ms.

at com.facebook.thrift.async.TAsyncClientManager$SelectThread.timeoutMethods(TAsyncClientManager.java:157)

at com.facebook.thrift.async.TAsyncClientManager$SelectThread.run(TAsyncClientManager.java:114)

21/02/04 19:48:19 ERROR util.SparkUncaughtExceptionHandler: Uncaught exception in thread Thread[pool-15-thread-1,5,main]

java.lang.Error: com.vesoft.nebula.tools.importer.TooManyErrorsException: too many errors

at java.util.concurrent.ThreadPoolExecutor.runWorker(ThreadPoolExecutor.java:1148)

at java.util.concurrent.ThreadPoolExecutor$Worker.run(ThreadPoolExecutor.java:617)

at java.lang.Thread.run(Thread.java:745)

Caused by: com.vesoft.nebula.tools.importer.TooManyErrorsException: too many errors

at com.vesoft.nebula.tools.importer.writer.NebulaWriterCallback.onFailure(ServerBaseWriter.scala:214)

at com.google.common.util.concurrent.Futures$4.run(Futures.java:1140)

at java.util.concurrent.ThreadPoolExecutor.runWorker(ThreadPoolExecutor.java:1142)

... 2 more
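The trace above ends with `TooManyErrorsException: too many errors` after repeated `execute_call timed out after 3015 ms` failures, which suggests the importer stops once failures pile up. A simplified model of that behaviour, assuming it keeps a running failure count and aborts once the count exceeds a configured maximum; `ErrorBudget`, `TooManyErrors` and `max_errors` are hypothetical names, and the real threshold and its config key should be checked in the importer's own documentation.

```python
import threading

class TooManyErrors(Exception):
    """Hypothetical stand-in for the importer's TooManyErrorsException."""

class ErrorBudget:
    """Count failed writes and abort once the count exceeds max_errors."""

    def __init__(self, max_errors: int):
        self.max_errors = max_errors
        self.failures = 0
        self._lock = threading.Lock()  # async callbacks may fire from several threads

    def on_failure(self) -> None:
        with self._lock:
            self.failures += 1
            if self.failures > self.max_errors:
                raise TooManyErrors("too many errors")
```

Under this model, raising the limit only delays the abort; the timeouts themselves still need to be addressed.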

- With `limit 20000` added, the job runs to completion, but the Spark log then reports:

21/02/04 23:28:17 ERROR async.AsyncGraphClientImpl: execute error: Insert vertex not complete, completeness: 0

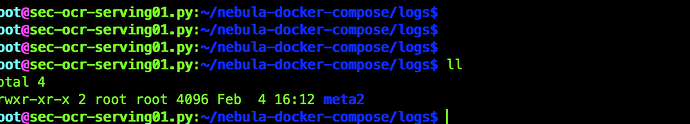

1) Where are the storaged service logs you mentioned? Only the master node has a `logs` directory, and it contains only meta logs.

- "The batch is too large, putting excessive pressure on the partitions": how should I tune this?
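One empirical way to approach the tuning question is to start from the current batch size and halve it until a trial write succeeds. A sketch, assuming a caller-supplied `write(batch) -> bool` that submits one batch and reports success; `probe_batch_size` and `write` are hypothetical, this is a generic halving strategy rather than an importer feature, and in practice you would then set the found value in the importer's batch setting and rerun. It rewrites the same leading rows on each attempt, which is only harmless if re-inserting them is acceptable for your data.

```python
from typing import Callable, Optional, Sequence, TypeVar

T = TypeVar("T")

def probe_batch_size(rows: Sequence[T], start: int,
                     write: Callable[[Sequence[T]], bool]) -> Optional[int]:
    """Halve the batch size until one trial batch writes successfully.

    Returns the first size that worked, or None if even a single-row batch
    failed, which points away from batch size as the cause.
    """
    size = start
    while size >= 1:
        if write(rows[:size]):  # trial write of the first `size` rows
            return size
        size //= 2
    return None
```

Note that the failing batches in the log above already have `batch size is 1`, so if this probe returns `None` the storaged logs are the next place to look.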