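Can the graph and storage containers ping each other? Also, from the deployment server, can you ping the IPs of the 3 meta service containers?

OK, I'll try them all.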

- The storage containers cannot ping each other.

- The graph and storage containers cannot ping each other.

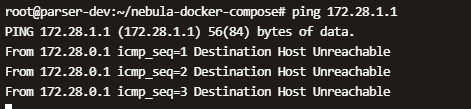

- The deployment server cannot ping the IPs of the 3 meta service containers.

- The graph nodes are healthy; the meta and storage nodes are all unhealthy.

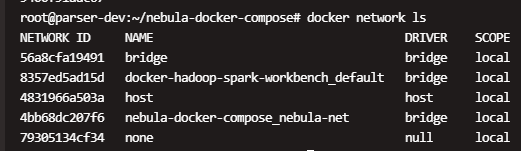
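To repeat these ping checks systematically, a minimal Python sketch can generate one `docker exec <src> ping -c 1 <dst_ip>` command per ordered container pair, plus one plain `ping` per container IP to run from the deployment server itself. The container names and IPs are copied from the `docker network inspect` output later in this thread; the helper names `ping_commands` and `run_all` are hypothetical, and the sketch assumes each container image ships a `ping` binary.

```python
import subprocess
from itertools import permutations

# Container names and IPs copied from the `docker network inspect` output in
# this thread (assumption: adjust them for your own deployment).
CONTAINERS = {
    "nebula-docker-compose_metad0_1": "172.28.1.1",
    "nebula-docker-compose_metad1_1": "172.28.1.2",
    "nebula-docker-compose_metad2_1": "172.28.1.3",
    "nebula-docker-compose_storaged0_1": "172.28.2.1",
    "nebula-docker-compose_storaged1_1": "172.28.2.2",
    "nebula-docker-compose_storaged2_1": "172.28.2.3",
    "nebula-docker-compose_graphd0_1": "172.28.3.1",
    "nebula-docker-compose_graphd1_1": "172.28.3.2",
    "nebula-docker-compose_graphd2_1": "172.28.3.3",
}


def ping_commands(containers, count=1):
    """Build container-to-container pings, then host-to-container pings."""
    # Every ordered pair (src, dst) with src != dst: ping dst's IP from inside src.
    cmds = [
        ["docker", "exec", src, "ping", "-c", str(count), containers[dst]]
        for src, dst in permutations(containers, 2)
    ]
    # Pings issued directly on the deployment server.
    cmds += [["ping", "-c", str(count), ip] for ip in containers.values()]
    return cmds


def run_all(cmds):
    """Run each command and print OK/FAIL based on its exit status."""
    for cmd in cmds:
        result = subprocess.run(cmd, stdout=subprocess.DEVNULL, stderr=subprocess.DEVNULL)
        print("OK  " if result.returncode == 0 else "FAIL", " ".join(cmd))
```

On the deployment server, `run_all(ping_commands(CONTAINERS))` prints one line per check, which makes it easy to see whether failures are limited to certain services or affect every pair.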

Could you paste the output of `docker network ls` and `ip link`?

`ip link` output:

```
1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN mode DEFAULT group default qlen 1000
    link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00
2: eth0: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc fq_codel state UP mode DEFAULT group default qlen 1000
    link/ether 00:16:3e:08:15:1f brd ff:ff:ff:ff:ff:ff
3: docker0: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc noqueue state UP mode DEFAULT group default
    link/ether 02:42:f9:cd:ca:c2 brd ff:ff:ff:ff:ff:ff
8: br-607eb19c74b6: <NO-CARRIER,BROADCAST,MULTICAST,UP> mtu 1500 qdisc noqueue state DOWN mode DEFAULT group default
    link/ether 02:42:a0:4d:4f:96 brd ff:ff:ff:ff:ff:ff
10: br-8357ed5ad15d: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc noqueue state UP mode DEFAULT group default
    link/ether 02:42:5a:2f:4f:ae brd ff:ff:ff:ff:ff:ff
286: veth36a548c@if285: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc noqueue master br-8357ed5ad15d state UP mode DEFAULT group default
    link/ether 5e:71:f5:d7:5f:29 brd ff:ff:ff:ff:ff:ff link-netnsid 10
288: veth732a779@if287: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc noqueue master br-8357ed5ad15d state UP mode DEFAULT group default
    link/ether b6:0a:00:b3:ff:c9 brd ff:ff:ff:ff:ff:ff link-netnsid 11
290: vetha675506@if289: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc noqueue master br-8357ed5ad15d state UP mode DEFAULT group default
    link/ether 2e:13:86:b9:39:34 brd ff:ff:ff:ff:ff:ff link-netnsid 12
292: vethf64d409@if291: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc noqueue master br-8357ed5ad15d state UP mode DEFAULT group default
    link/ether 8e:28:d4:a4:2c:7a brd ff:ff:ff:ff:ff:ff link-netnsid 17
294: veth9df0f02@if293: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc noqueue master br-8357ed5ad15d state UP mode DEFAULT group default
    link/ether 22:f6:ef:71:71:8b brd ff:ff:ff:ff:ff:ff link-netnsid 16
296: veth879667f@if295: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc noqueue master br-8357ed5ad15d state UP mode DEFAULT group default
    link/ether b6:15:95:d4:8f:26 brd ff:ff:ff:ff:ff:ff link-netnsid 18
298: veth25c1a66@if297: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc noqueue master docker0 state UP mode DEFAULT group default
    link/ether 96:53:2c:32:ea:d4 brd ff:ff:ff:ff:ff:ff link-netnsid 19
357: br-4bb68dc207f6: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc noqueue state UP mode DEFAULT group default
    link/ether 02:42:bc:8e:62:9f brd ff:ff:ff:ff:ff:ff
451: veth028d969@if450: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc noqueue master br-4bb68dc207f6 state UP mode DEFAULT group default
    link/ether 0e:f8:80:26:b6:d1 brd ff:ff:ff:ff:ff:ff link-netnsid 13
453: veth42a89b6@if452: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc noqueue master br-4bb68dc207f6 state UP mode DEFAULT group default
    link/ether 32:65:16:f0:8b:66 brd ff:ff:ff:ff:ff:ff link-netnsid 22
455: veth7e39f21@if454: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc noqueue master br-4bb68dc207f6 state UP mode DEFAULT group default
    link/ether 7e:0a:d1:d4:85:17 brd ff:ff:ff:ff:ff:ff link-netnsid 15
457: veth087c877@if456: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc noqueue master br-4bb68dc207f6 state UP mode DEFAULT group default
    link/ether 72:10:99:9f:51:79 brd ff:ff:ff:ff:ff:ff link-netnsid 14
459: veth1143012@if458: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc noqueue master br-4bb68dc207f6 state UP mode DEFAULT group default
    link/ether 5e:7e:80:0b:55:cc brd ff:ff:ff:ff:ff:ff link-netnsid 20
204: vethd41e2a3@if203: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc noqueue master docker0 state UP mode DEFAULT group default
    link/ether 5a:c2:35:17:54:63 brd ff:ff:ff:ff:ff:ff link-netnsid 0
461: veth6402b96@if460: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc noqueue master br-4bb68dc207f6 state UP mode DEFAULT group default
    link/ether 1e:dd:8b:0e:20:f4 brd ff:ff:ff:ff:ff:ff link-netnsid 21
206: vethfa64e26@if205: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc noqueue master docker0 state UP mode DEFAULT group default
    link/ether ea:f4:fa:75:cf:3f brd ff:ff:ff:ff:ff:ff link-netnsid 1
208: vethf34842c@if207: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc noqueue master docker0 state UP mode DEFAULT group default
    link/ether 5a:16:98:ea:6b:3e brd ff:ff:ff:ff:ff:ff link-netnsid 2
210: vethab73159@if209: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc noqueue master docker0 state UP mode DEFAULT group default
    link/ether d2:77:94:19:d7:d1 brd ff:ff:ff:ff:ff:ff link-netnsid 3
212: vethe7cd5e4@if211: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc noqueue master docker0 state UP mode DEFAULT group default
    link/ether f2:53:b3:c4:b3:19 brd ff:ff:ff:ff:ff:ff link-netnsid 4
214: vethc6b8d97@if213: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc noqueue master docker0 state UP mode DEFAULT group default
    link/ether 5e:7a:b5:af:15:68 brd ff:ff:ff:ff:ff:ff link-netnsid 5
216: vetha782e79@if215: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc noqueue master docker0 state UP mode DEFAULT group default
    link/ether 62:5d:73:3d:65:55 brd ff:ff:ff:ff:ff:ff link-netnsid 6
```

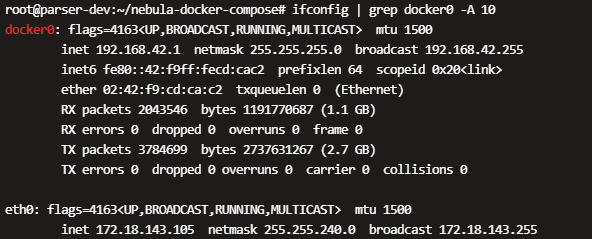

The veth interfaces look normal. Please also paste the output of `docker network inspect nebula-docker-compose_nebula-net`, and check whether both 172.28.0.1 and the IP of the docker0 interface can be pinged.
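To double-check the veth interfaces in the `ip link` output above, one can group them by the bridge they are attached to (the `master` field) and compare each bridge's count with the number of containers on the corresponding Docker network. A minimal Python sketch, assuming the text is pasted exactly as `ip link` prints it; the function name `veths_by_bridge` is hypothetical.

```python
from collections import defaultdict


def veths_by_bridge(ip_link_text):
    """Map each bridge name to the veth interfaces whose `master` is that bridge."""
    groups = defaultdict(list)
    for line in ip_link_text.splitlines():
        fields = line.split()
        # Interface header lines start with "<index>:"; this skips blank lines
        # and the indented "link/ether ..." lines.
        if not fields or not fields[0].endswith(":") or not fields[0][:-1].isdigit():
            continue
        # "veth028d969@if450:" -> "veth028d969"
        name = fields[1].rstrip(":").split("@")[0]
        if not name.startswith("veth") or "master" not in fields:
            continue
        groups[fields[fields.index("master") + 1]].append(name)
    return dict(groups)
```

For example, `{bridge: len(v) for bridge, v in veths_by_bridge(text).items()}` gives the number of veth endpoints on each bridge, such as `br-4bb68dc207f6` (the bridge whose name matches the start of the network `Id`).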

Output of `docker network inspect nebula-docker-compose_nebula-net`:

```json
[
    {
        "Name": "nebula-docker-compose_nebula-net",
        "Id": "4bb68dc207f6661a2fd2474c3ce627d3dbecd7b99f078baac54f04fd142ab670",
        "Created": "2021-02-24T20:05:26.748596345+08:00",
        "Scope": "local",
        "Driver": "bridge",
        "EnableIPv6": false,
        "IPAM": {
            "Driver": "default",
            "Options": null,
            "Config": [
                {
                    "Subnet": "172.28.0.0/16"
                }
            ]
        },
        "Internal": false,
        "Attachable": true,
        "Ingress": false,
        "ConfigFrom": {
            "Network": ""
        },
        "ConfigOnly": false,
        "Containers": {
            "3c665930dce2f0873ae718d29454b01d260379b738cd7a09a0fdc0d8b91c3c4e": {
                "Name": "nebula-docker-compose_graphd1_1",
                "EndpointID": "470d56f3b9407add974f1cddd609ca627ccbcfb7ee74af6b800e3fcf020a753e",
                "MacAddress": "02:42:ac:1c:03:02",
                "IPv4Address": "172.28.3.2/16",
                "IPv6Address": ""
            },
            "3e107de042b67351408046d1ab490908b7e779e77cdd7927bd1ec210bc66986e": {
                "Name": "nebula-docker-compose_graphd2_1",
                "EndpointID": "1fd0c1a9b2caedf85466a6cb0a0c2ad16d57e5012558f5329c22d99f9a8309b0",
                "MacAddress": "02:42:ac:1c:03:03",
                "IPv4Address": "172.28.3.3/16",
                "IPv6Address": ""
            },
            "5525cbb18814a226504ff0393758a8afda0f9c27ed811442f9b8ddaf57ee4a56": {
                "Name": "nebula-docker-compose_storaged0_1",
                "EndpointID": "ecaa166f22b474508deaa0c5d234f88fbbc6722663283e76bfa7c01b0946c4f5",
                "MacAddress": "02:42:ac:1c:02:01",
                "IPv4Address": "172.28.2.1/16",
                "IPv6Address": ""
            },
            "555fe45e20c2c370084966aa00a93e8fc303940245ba6023a1dc828473511ec7": {
                "Name": "nebula-docker-compose_storaged2_1",
                "EndpointID": "c4ce653da31a62a7e6eddbd69398754f581f61c7956bd5b54d3683d40eda9b07",
                "MacAddress": "02:42:ac:1c:02:03",
                "IPv4Address": "172.28.2.3/16",
                "IPv6Address": ""
            },
            "5cea80f0e4084b4841be95c6db200baab07c92a061a9b5160152bb1153d6ea9d": {
                "Name": "nebula-docker-compose_storaged1_1",
                "EndpointID": "f92891b84fd8a63ea2721498a8f7e9ffaa6d7572544c5b361c3bb6b930725c22",
                "MacAddress": "02:42:ac:1c:02:02",
                "IPv4Address": "172.28.2.2/16",
                "IPv6Address": ""
            },
            "76f853785690382b73a25a832855b025b4e9ee7c817be025626f9aa8cae7c55c": {
                "Name": "nebula-docker-compose_graphd0_1",
                "EndpointID": "576847c1925e9ce5583946cb2cc211c704e54339f1d6623f03fd6f7d41bb7f75",
                "MacAddress": "02:42:ac:1c:03:01",
                "IPv4Address": "172.28.3.1/16",
                "IPv6Address": ""
            },
            "9400f91aac67247f657ef83e130542169bd0e5c4d6d1d002ef0ab5a9ea185194": {
                "Name": "nebula-docker-compose_metad0_1",
                "EndpointID": "08eebf08ce0dd3c59e6322a93a246bafaea8124e151b631edf8a778068131e2a",
                "MacAddress": "02:42:ac:1c:01:01",
                "IPv4Address": "172.28.1.1/16",
                "IPv6Address": ""
            },
            "c306eadf7f87c30172a8b55a4a15d47e472ed1c189aee6fd05645a9a27758b54": {
                "Name": "nebula-docker-compose_metad2_1",
                "EndpointID": "74cdee255f60c8ef55dac3fd4030c1a9e99dc63dcfce9597d1a0bb7b653bc4ca",
                "MacAddress": "02:42:ac:1c:01:03",
                "IPv4Address": "172.28.1.3/16",
                "IPv6Address": ""
            },
            "e68b5716cb8119779e954c69c2db814598e1a96c7a5edfe99bc82bfb776b5522": {
                "Name": "nebula-docker-compose_metad1_1",
                "EndpointID": "a73dd31ba8dd0b6d55df2fe19a83c737ef384bbbe77b5389e0a21e422c1a4608",
                "MacAddress": "02:42:ac:1c:01:02",
                "IPv4Address": "172.28.1.2/16",
                "IPv6Address": ""
            }
        },
        "Options": {},
        "Labels": {
            "com.docker.compose.network": "nebula-net",
            "com.docker.compose.project": "nebula-docker-compose",
            "com.docker.compose.version": "1.27.4"
        }
    }
]
```

`ping 172.28.0.1` succeeds.

The docker0 IP, 192.168.42.1, can also be pinged.

Do you know what the cause might be? I can't figure out why the meta containers can't ping each other.

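I don't see a `Gateway` field here. You can enter any of the graphd or storaged containers and run `ip route` to check whether the outgoing route is correct; the default route should point to 172.28.0.1.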
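The missing `Gateway` key and the container addresses can also be checked mechanically from the `docker network inspect` JSON. A minimal Python sketch using only the standard library, assuming the JSON was saved with `docker network inspect nebula-docker-compose_nebula-net > net.json`; the function name `check_network` is hypothetical. A `None` entry in `gateways` means that `IPAM.Config` has no `Gateway` key, as in the output above.

```python
import ipaddress
import json


def check_network(inspect_json):
    """Summarise the first network in `docker network inspect` output."""
    net = json.loads(inspect_json)[0]  # inspect prints a JSON array
    configs = net["IPAM"]["Config"] or []
    subnets = [ipaddress.ip_network(c["Subnet"]) for c in configs]
    # None means the Gateway key is absent from that IPAM config entry.
    gateways = [c.get("Gateway") for c in configs]
    containers = {}
    for info in (net.get("Containers") or {}).values():
        iface = ipaddress.ip_interface(info["IPv4Address"])  # e.g. "172.28.1.1/16"
        in_subnet = any(iface.ip in s for s in subnets)
        containers[info["Name"]] = (str(iface.ip), in_subnet)
    return {"gateways": gateways, "containers": containers}
```

Usage: `check_network(open("net.json").read())` returns the gateway entries and, for each container, its IPv4 address and whether it falls inside the configured subnet.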

There's no `ip route` command inside the container, and `yum install` doesn't work either...

How do I add the `Gateway` field?
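Since `ip route` is missing, one possible workaround is to read the kernel routing table directly with `docker exec <container> cat /proc/net/route`, which usually works in Linux containers because it only needs `cat` and procfs. The addresses there are hex-encoded, so below is a minimal Python sketch (run on the host against the pasted text, since the container may not have Python) that extracts the default route's gateway. It assumes a little-endian host such as x86_64; the function name `default_gateway` is hypothetical.

```python
import socket
import struct


def default_gateway(proc_net_route_text):
    """Return (iface, gateway_ip) for the default route, or None if absent."""
    lines = proc_net_route_text.strip().splitlines()
    for line in lines[1:]:  # the first line is the column header
        fields = line.split()
        if len(fields) < 3:
            continue
        iface, destination, gateway = fields[0], fields[1], fields[2]
        if destination == "00000000":  # destination 0.0.0.0 = default route
            # The kernel prints the address as a native 32-bit integer, so on a
            # little-endian host the bytes must be unpacked with "<I".
            return iface, socket.inet_ntoa(struct.pack("<I", int(gateway, 16)))
    return None
```

If the default route is correct, the result for a container on this network should be its interface paired with `172.28.0.1` (hex `01001CAC` in the file).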

The `Gateway` field isn't added manually; Docker generates it.

Containers have an isolation mechanism, so they can't ping each other in the first place.