Question template:

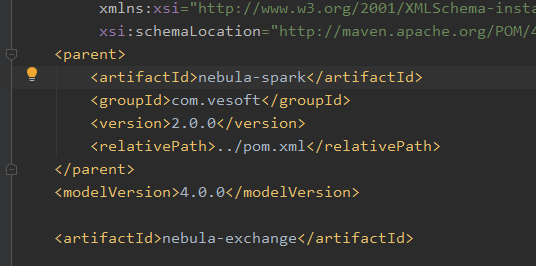

- Nebula version: 2.0.0

- Deployment: distributed

- Hardware information

  - Disk (SSD recommended)

  - CPU and memory information

- Detailed description of the problem

- Relevant meta / storage / graph info logs

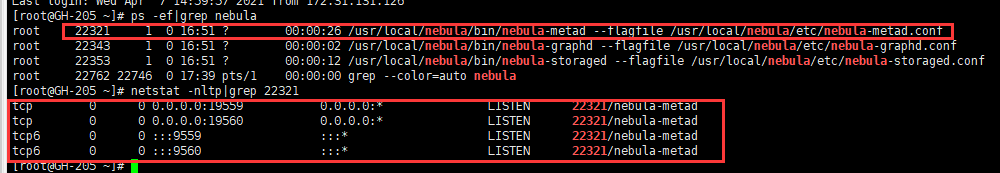

Meta service IP

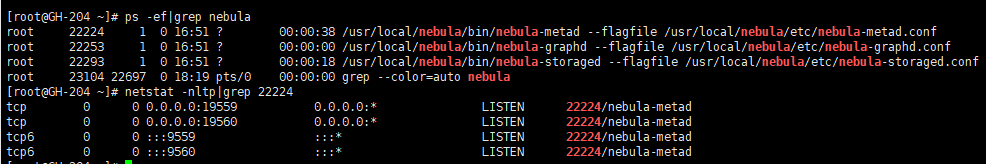

Graph server IP

Error log

    ERROR [Executor task launch worker for task 2] - Exception in task 0.0 in stage 2.0 (TID 2)
    com.facebook.thrift.protocol.TProtocolException: Expected protocol id ffffff82 but got 0
        at com.facebook.thrift.protocol.TCompactProtocol.readMessageBegin(TCompactProtocol.java:475)
        at com.vesoft.nebula.meta.MetaService$Client.recv_getSpace(MetaService.java:511)
        at com.vesoft.nebula.meta.MetaService$Client.getSpace(MetaService.java:488)
        at com.vesoft.nebula.client.meta.MetaClient.getSpace(MetaClient.java:131)
        at com.vesoft.nebula.connector.nebula.MetaProvider.getVidType(MetaProvider.scala:29)
        at com.vesoft.nebula.connector.writer.NebulaWriter.<init>(NebulaWriter.scala:25)
        at com.vesoft.nebula.connector.writer.NebulaVertexWriter.<init>(NebulaVertexWriter.scala:19)
        at com.vesoft.nebula.connector.writer.NebulaVertexWriterFactory.createDataWriter(NebulaSourceWriter.scala:28)
        at org.apache.spark.sql.execution.datasources.v2.DataWritingSparkTask$.run(WriteToDataSourceV2Exec.scala:113)
        at org.apache.spark.sql.execution.datasources.v2.WriteToDataSourceV2Exec$$anonfun$doExecute$2.apply(WriteToDataSourceV2Exec.scala:67)
        at org.apache.spark.sql.execution.datasources.v2.WriteToDataSourceV2Exec$$anonfun$doExecute$2.apply(WriteToDataSourceV2Exec.scala:66)
        at org.apache.spark.scheduler.ResultTask.runTask(ResultTask.scala:90)
        at org.apache.spark.scheduler.Task.run(Task.scala:123)
        at org.apache.spark.executor.Executor$TaskRunner$$anonfun$10.apply(Executor.scala:408)
        at org.apache.spark.util.Utils$.tryWithSafeFinally(Utils.scala:1360)
        at org.apache.spark.executor.Executor$TaskRunner.run(Executor.scala:414)
        at java.util.concurrent.ThreadPoolExecutor.runWorker(ThreadPoolExecutor.java:1142)
        at java.util.concurrent.ThreadPoolExecutor$Worker.run(ThreadPoolExecutor.java:617)
        at java.lang.Thread.run(Thread.java:748)
    WARN [task-result-getter-2] - Lost task 0.0 in stage 2.0 (TID 2, localhost, executor driver): com.facebook.thrift.protocol.TProtocolException: Expected protocol id ffffff82 but got 0
        at com.facebook.thrift.protocol.TCompactProtocol.readMessageBegin(TCompactProtocol.java:475)
        at com.vesoft.nebula.meta.MetaService$Client.recv_getSpace(MetaService.java:511)
        at com.vesoft.nebula.meta.MetaService$Client.getSpace(MetaService.java:488)
        at com.vesoft.nebula.client.meta.MetaClient.getSpace(MetaClient.java:131)
        at com.vesoft.nebula.connector.nebula.MetaProvider.getVidType(MetaProvider.scala:29)
        at com.vesoft.nebula.connector.writer.NebulaWriter.<init>(NebulaWriter.scala:25)
        at com.vesoft.nebula.connector.writer.NebulaVertexWriter.<init>(NebulaVertexWriter.scala:19)
        at com.vesoft.nebula.connector.writer.NebulaVertexWriterFactory.createDataWriter(NebulaSourceWriter.scala:28)
        at org.apache.spark.sql.execution.datasources.v2.DataWritingSparkTask$.run(WriteToDataSourceV2Exec.scala:113)
        at org.apache.spark.sql.execution.datasources.v2.WriteToDataSourceV2Exec$$anonfun$doExecute$2.apply(WriteToDataSourceV2Exec.scala:67)
        at org.apache.spark.sql.execution.datasources.v2.WriteToDataSourceV2Exec$$anonfun$doExecute$2.apply(WriteToDataSourceV2Exec.scala:66)
        at org.apache.spark.scheduler.ResultTask.runTask(ResultTask.scala:90)
        at org.apache.spark.scheduler.Task.run(Task.scala:123)
        at org.apache.spark.executor.Executor$TaskRunner$$anonfun$10.apply(Executor.scala:408)
        at org.apache.spark.util.Utils$.tryWithSafeFinally(Utils.scala:1360)
        at org.apache.spark.executor.Executor$TaskRunner.run(Executor.scala:414)
        at java.util.concurrent.ThreadPoolExecutor.runWorker(ThreadPoolExecutor.java:1142)
        at java.util.concurrent.ThreadPoolExecutor$Worker.run(ThreadPoolExecutor.java:617)
        at java.lang.Thread.run(Thread.java:748)
    ERROR [task-result-getter-2] - Task 0 in stage 2.0 failed 1 times; aborting job
    ERROR [main] - Data source writer com.vesoft.nebula.connector.writer.NebulaDataSourceVertexWriter@7e2a76be is aborting.
    ERROR [main] - NebulaDataSourceVertexWriter abort
    ERROR [main] - Data source writer com.vesoft.nebula.connector.writer.NebulaDataSourceVertexWriter@7e2a76be aborted.

Server IP configuration in the code

    def writeVertex(spark: SparkSession): Unit = {
      LOG.info("start to write nebula vertices")
      val df = spark.read.json("nebula-spark-utils/example/src/main/resources/vertex")
      df.show()
      val config =
        NebulaConnectionConfig
          .builder()
          .withMetaAddress("172.31.137.205:19560")
          .withGraphAddress("172.31.137.204:9669")
          .withConenctionRetry(2)
          .build()
      val nebulaWriteVertexConfig: WriteNebulaVertexConfig = WriteNebulaVertexConfig
        .builder()
        .withSpace("test")
        .withTag("person")
        .withVidField("id")
        .withVidAsProp(true)
        .withBatch(1000)
        .build()
      df.write.nebula(config, nebulaWriteVertexConfig).writeVertices()
    }