- Nebula version: 2.0

- Deployment: distributed

JAR download link:

https://repo1.maven.org/maven2/com/vesoft/nebula-spark-connector/2.0.0/

Code snippet:

    public static void main(String[] args) {
        SparkSession session = SparkSession
                .builder()
                .appName("test")
                .master("local[4]")
                .config("hive.metastore.uris", "thrift://172.31.134.62:9083")
                .enableHiveSupport()
                .getOrCreate();

        final Dataset<Row> dataset = session.sql("select qsip as qsip_long,zzip as zzip_long,sheng,isp as yys from isdms.ip_ipip_netx_long where shi = '武汉'");
        dataset.show();

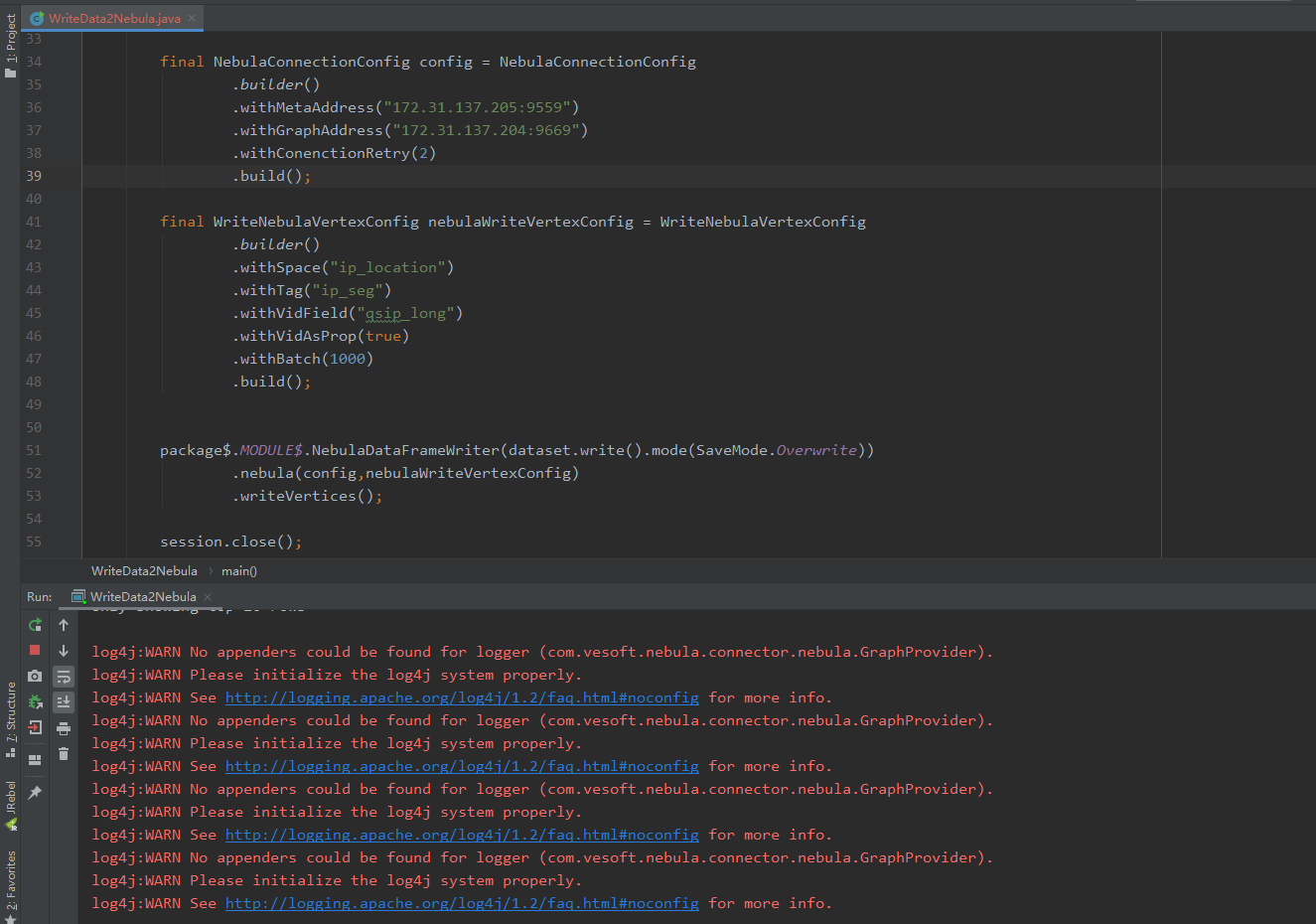

        final NebulaConnectionConfig config = NebulaConnectionConfig
                .builder()
                .withMetaAddress("172.31.137.205:9559")
                .withGraphAddress("172.31.137.204:9669")
                .withConenctionRetry(2)
                .build();

        final WriteNebulaVertexConfig nebulaWriteVertexConfig = WriteNebulaVertexConfig
                .builder()
                .withSpace("ip_location")
                .withTag("ip_seg")
                .withVidField("qsip_long")
                .withVidAsProp(true)
                .withBatch(1000)
                .build();

        package$.MODULE$.NebulaDataFrameWriter(dataset.write().mode(SaveMode.Overwrite))
                .nebula(config, nebulaWriteVertexConfig)
                .writeVertices();

        session.close();
    }

Problem description:

After the data has been written, the program stays in a running state and never exits.
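A JVM only exits on its own once every non-daemon thread has finished, so one way to narrow this down might be to list the non-daemon threads that are still alive after `session.close()` returns. The following is only a diagnostic sketch using the standard `java.lang.Thread` API; the class name `NonDaemonThreadDump` and the method `liveNonDaemonThreads` are hypothetical names introduced here, and nothing in it assumes which thread (if any) is actually the cause.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.Map;

// Hypothetical helper class, not part of Spark or nebula-spark-connector.
public class NonDaemonThreadDump {

    // Returns the names of live non-daemon threads, excluding the calling thread.
    static List<String> liveNonDaemonThreads() {
        List<String> names = new ArrayList<>();
        for (Map.Entry<Thread, StackTraceElement[]> entry : Thread.getAllStackTraces().entrySet()) {
            Thread t = entry.getKey();
            if (t.isAlive() && !t.isDaemon() && t != Thread.currentThread()) {
                names.add(t.getName());
            }
        }
        return names;
    }

    public static void main(String[] args) {
        // Assumption: in the job above, this loop would run right after session.close().
        for (String name : liveNonDaemonThreads()) {
            System.out.println("still running (non-daemon): " + name);
        }
    }
}
```

The thread names printed this way should hint at which component is keeping the process alive. A thread dump taken with `jstack <pid>` on the hanging process shows the same information together with stack traces.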