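Question template:

- Nebula version: v2.0 GA

- Deployment (distributed / standalone / Docker / DBaaS): distributed

- Production environment: Y

- Hardware

  - Disk (SSD recommended): Alibaba Cloud SSD cloud disk

  - CPU and memory: Alibaba Cloud, 8 cores / 32 GB, 3 nodes

- Problem description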

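While upgrading from 1.2.0 to 2.0.0, the metad copy step completed without errors. After I created the data path for the 2.0.0 storaged, running db_upgrader to migrate the data failed.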

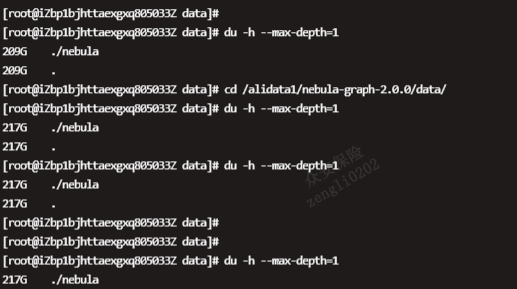

The original 1.2.0 storaged data on disk is 209 GB.

I ran the upgrade command:

```shell
/opt/nebula-graph-2.0.0/bin/db_upgrader \
  --src_db_path=/alidata1/nebula-graph/data/ \
  --dst_db_path=/alidata1/nebula-graph-2.0.0/data/ \
  --upgrade_meta_server=172.26.18.168:45500,172.26.25.76:45500,172.26.25.77:45500 \
  --upgrade_version=1
```

The log output was as follows:

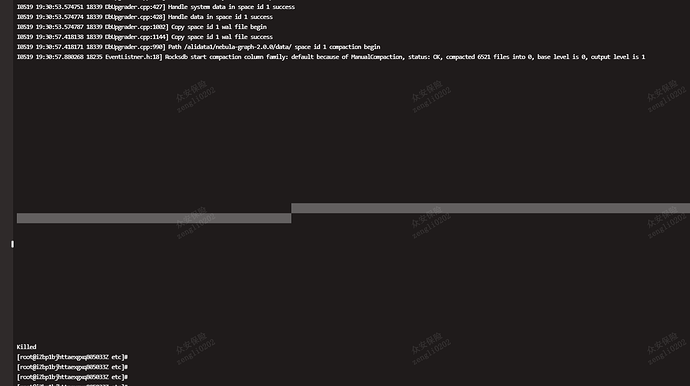

```text
[root@iZbp1bjhttaexgxq805033Z etc]# /opt/nebula-graph-2.0.0/bin/db_upgrader \
> --src_db_path=/alidata1/nebula-graph/data/ \
> --dst_db_path=/alidata1/nebula-graph-2.0.0/data/ \
> --upgrade_meta_server=172.26.18.168:45500,172.26.25.76:45500,172.26.25.77:45500 \
> --upgrade_version=1 \
> ;
===========================PARAMS============================
meta server: 172.26.18.168:45500,172.26.25.76:45500,172.26.25.77:45500
source data path: /alidata1/nebula-graph/data/
destination data path: /alidata1/nebula-graph-2.0.0/data/
The size of the batch written: 100
upgrade data from version: 1
whether to compact all data: true
maximum number of concurrent parts allowed:10
maximum number of concurrent spaces allowed: 5
===========================PARAMS============================
I0519 17:40:05.628006 18228 DbUpgraderTool.cpp:116] Prepare phase begin
I0519 17:40:05.629070 18228 MetaClient.cpp:50] Create meta client to "172.26.25.77":45500
I0519 17:40:06.642655 18228 MetaClient.cpp:99] Register time task for heartbeat!
I0519 17:40:06.642704 18228 DbUpgraderTool.cpp:176] Prepare phase end
I0519 17:40:06.642727 18228 DbUpgraderTool.cpp:179] Upgrade phase bengin
I0519 17:40:06.642836 18232 DbUpgraderTool.cpp:190] Upgrade from path /alidata1/nebula-graph/data/ to path /alidata1/nebula-graph-2.0.0/data/ begin
I0519 17:40:06.642962 18232 DbUpgrader.cpp:1085] Upgrade from path /alidata1/nebula-graph/data/ to path /alidata1/nebula-graph-2.0.0/data/ in DbUpgrader run begin
I0519 17:40:06.643085 18232 RocksEngineConfig.cpp:254] Emplace rocksdb option max_subcompactions=10
I0519 17:40:06.643096 18232 RocksEngineConfig.cpp:254] Emplace rocksdb option max_background_jobs=10
I0519 17:40:06.643131 18232 RocksEngineConfig.cpp:254] Emplace rocksdb option hard_pending_compaction_bytes_limit=274877906944
I0519 17:40:06.643137 18232 RocksEngineConfig.cpp:254] Emplace rocksdb option level0_slowdown_writes_trigger=999999
I0519 17:40:06.643141 18232 RocksEngineConfig.cpp:254] Emplace rocksdb option soft_pending_compaction_bytes_limit=137438953472
I0519 17:40:06.643146 18232 RocksEngineConfig.cpp:254] Emplace rocksdb option disable_auto_compactions=true
I0519 17:40:06.643149 18232 RocksEngineConfig.cpp:254] Emplace rocksdb option level0_stop_writes_trigger=999999
I0519 17:40:06.643153 18232 RocksEngineConfig.cpp:254] Emplace rocksdb option write_buffer_size=134217728
I0519 17:40:06.643157 18232 RocksEngineConfig.cpp:254] Emplace rocksdb option max_write_buffer_number=12
I0519 17:40:06.643162 18232 RocksEngineConfig.cpp:254] Emplace rocksdb option max_bytes_for_level_base=268435456
I0519 17:40:43.437387 18232 RocksEngine.cpp:119] open rocksdb on /alidata1/nebula-graph/data//nebula/1/data
I0519 17:40:43.437530 18232 RocksEngineConfig.cpp:254] Emplace rocksdb option max_subcompactions=10
I0519 17:40:43.437539 18232 RocksEngineConfig.cpp:254] Emplace rocksdb option max_background_jobs=10
I0519 17:40:43.437556 18232 RocksEngineConfig.cpp:254] Emplace rocksdb option hard_pending_compaction_bytes_limit=274877906944
I0519 17:40:43.437559 18232 RocksEngineConfig.cpp:254] Emplace rocksdb option level0_slowdown_writes_trigger=999999
I0519 17:40:43.437563 18232 RocksEngineConfig.cpp:254] Emplace rocksdb option soft_pending_compaction_bytes_limit=137438953472
I0519 17:40:43.437567 18232 RocksEngineConfig.cpp:254] Emplace rocksdb option disable_auto_compactions=true
I0519 17:40:43.437572 18232 RocksEngineConfig.cpp:254] Emplace rocksdb option level0_stop_writes_trigger=999999
I0519 17:40:43.437575 18232 RocksEngineConfig.cpp:254] Emplace rocksdb option write_buffer_size=134217728
I0519 17:40:43.437579 18232 RocksEngineConfig.cpp:254] Emplace rocksdb option max_write_buffer_number=12
I0519 17:40:43.437583 18232 RocksEngineConfig.cpp:254] Emplace rocksdb option max_bytes_for_level_base=268435456
I0519 17:40:43.443280 18232 RocksEngine.cpp:119] open rocksdb on /alidata1/nebula-graph-2.0.0/data//nebula/1/data
I0519 17:40:43.443537 18232 DbUpgrader.cpp:81] Src data path: /alidata1/nebula-graph/data/ space id 1 has 30 parts
I0519 17:40:43.443562 18232 DbUpgrader.cpp:125] Tag id 3 has 16 fields!
I0519 17:40:43.443567 18232 DbUpgrader.cpp:125] Tag id 2 has 16 fields!
I0519 17:40:43.443581 18232 DbUpgrader.cpp:145] Tag id 2 has 1 indexes
I0519 17:40:43.443595 18232 DbUpgrader.cpp:145] Tag id 3 has 1 indexes
I0519 17:40:43.443603 18232 DbUpgrader.cpp:167] Edgetype 11 has 4 fields!
I0519 17:40:43.443608 18232 DbUpgrader.cpp:167] Edgetype 6 has 4 fields!
I0519 17:40:43.443612 18232 DbUpgrader.cpp:167] Edgetype 4 has 4 fields!
I0519 17:40:43.443616 18232 DbUpgrader.cpp:167] Edgetype 5 has 4 fields!
I0519 17:40:43.443640 18232 DbUpgrader.cpp:1112] Max concurrenct spaces: 1
I0519 17:40:43.443815 18339 DbUpgrader.cpp:1130] Upgrade from path /alidata1/nebula-graph/data/ space id 1 to path /alidata1/nebula-graph-2.0.0/data/ begin
I0519 17:40:43.443835 18339 DbUpgrader.cpp:378] Start to handle data in space id 1
I0519 17:40:43.443838 18339 DbUpgrader.cpp:383] Max concurrenct parts: 10
I0519 18:17:19.674314 18347 DbUpgrader.cpp:360] Handle vertex/edge/index data in space id 1 part id 8 finished
I0519 18:17:19.684294 18347 DbUpgrader.cpp:207] Start to handle vertex/edge/index data in space id 1 part id 11
I0519 18:17:20.814790 18345 DbUpgrader.cpp:360] Handle vertex/edge/index data in space id 1 part id 6 finished
I0519 18:17:20.814853 18345 DbUpgrader.cpp:207] Start to handle vertex/edge/index data in space id 1 part id 12
I0519 18:17:21.596624 18340 DbUpgrader.cpp:360] Handle vertex/edge/index data in space id 1 part id 1 finished
I0519 18:17:21.596678 18340 DbUpgrader.cpp:207] Start to handle vertex/edge/index data in space id 1 part id 13
I0519 18:17:21.787756 18349 DbUpgrader.cpp:360] Handle vertex/edge/index data in space id 1 part id 10 finished
I0519 18:17:21.787822 18349 DbUpgrader.cpp:207] Start to handle vertex/edge/index data in space id 1 part id 14
I0519 18:17:21.856604 18342 DbUpgrader.cpp:360] Handle vertex/edge/index data in space id 1 part id 3 finished
I0519 18:17:21.856667 18342 DbUpgrader.cpp:207] Start to handle vertex/edge/index data in space id 1 part id 15
I0519 18:17:22.870478 18348 DbUpgrader.cpp:360] Handle vertex/edge/index data in space id 1 part id 9 finished
I0519 18:17:22.870541 18348 DbUpgrader.cpp:207] Start to handle vertex/edge/index data in space id 1 part id 16
I0519 18:17:23.340703 18346 DbUpgrader.cpp:360] Handle vertex/edge/index data in space id 1 part id 7 finished
I0519 18:17:23.340764 18346 DbUpgrader.cpp:207] Start to handle vertex/edge/index data in space id 1 part id 17
I0519 18:17:23.507486 18344 DbUpgrader.cpp:360] Handle vertex/edge/index data in space id 1 part id 5 finished
I0519 18:17:23.507551 18344 DbUpgrader.cpp:207] Start to handle vertex/edge/index data in space id 1 part id 18
I0519 18:17:23.672267 18343 DbUpgrader.cpp:360] Handle vertex/edge/index data in space id 1 part id 4 finished
I0519 18:17:23.672322 18343 DbUpgrader.cpp:207] Start to handle vertex/edge/index data in space id 1 part id 19
I0519 18:17:25.285491 18341 DbUpgrader.cpp:360] Handle vertex/edge/index data in space id 1 part id 2 finished
I0519 18:17:25.285555 18341 DbUpgrader.cpp:207] Start to handle vertex/edge/index data in space id 1 part id 20
I0519 18:54:00.664706 18347 DbUpgrader.cpp:360] Handle vertex/edge/index data in space id 1 part id 11 finished
I0519 18:54:00.674669 18347 DbUpgrader.cpp:207] Start to handle vertex/edge/index data in space id 1 part id 21
I0519 18:54:01.541780 18345 DbUpgrader.cpp:360] Handle vertex/edge/index data in space id 1 part id 12 finished
I0519 18:54:01.541837 18345 DbUpgrader.cpp:207] Start to handle vertex/edge/index data in space id 1 part id 22
I0519 18:54:02.421280 18340 DbUpgrader.cpp:360] Handle vertex/edge/index data in space id 1 part id 13 finished
I0519 18:54:02.421351 18340 DbUpgrader.cpp:207] Start to handle vertex/edge/index data in space id 1 part id 23
I0519 18:54:04.422369 18344 DbUpgrader.cpp:360] Handle vertex/edge/index data in space id 1 part id 18 finished
I0519 18:54:04.422427 18344 DbUpgrader.cpp:207] Start to handle vertex/edge/index data in space id 1 part id 24
I0519 18:54:06.524277 18346 DbUpgrader.cpp:360] Handle vertex/edge/index data in space id 1 part id 17 finished
I0519 18:54:06.524348 18346 DbUpgrader.cpp:207] Start to handle vertex/edge/index data in space id 1 part id 25
I0519 18:54:06.806751 18349 DbUpgrader.cpp:360] Handle vertex/edge/index data in space id 1 part id 14 finished
I0519 18:54:06.806816 18349 DbUpgrader.cpp:207] Start to handle vertex/edge/index data in space id 1 part id 26
I0519 18:54:06.866108 18342 DbUpgrader.cpp:360] Handle vertex/edge/index data in space id 1 part id 15 finished
I0519 18:54:06.866164 18342 DbUpgrader.cpp:207] Start to handle vertex/edge/index data in space id 1 part id 27
I0519 18:54:08.052470 18348 DbUpgrader.cpp:360] Handle vertex/edge/index data in space id 1 part id 16 finished
I0519 18:54:08.052536 18348 DbUpgrader.cpp:207] Start to handle vertex/edge/index data in space id 1 part id 28
I0519 18:54:09.693222 18341 DbUpgrader.cpp:360] Handle vertex/edge/index data in space id 1 part id 20 finished
I0519 18:54:09.693279 18341 DbUpgrader.cpp:207] Start to handle vertex/edge/index data in space id 1 part id 29
I0519 18:54:09.890674 18343 DbUpgrader.cpp:360] Handle vertex/edge/index data in space id 1 part id 19 finished
I0519 18:54:09.890744 18343 DbUpgrader.cpp:207] Start to handle vertex/edge/index data in space id 1 part id 30
I0519 19:30:53.572106 18339 DbUpgrader.cpp:398] Start to handle system data in space id 1
I0519 19:30:53.574751 18339 DbUpgrader.cpp:427] Handle system data in space id 1 success
I0519 19:30:53.574774 18339 DbUpgrader.cpp:428] Handle data in space id 1 success
I0519 19:30:53.574787 18339 DbUpgrader.cpp:1002] Copy space id 1 wal file begin
I0519 19:30:57.418138 18339 DbUpgrader.cpp:1144] Copy space id 1 wal file success
I0519 19:30:57.418171 18339 DbUpgrader.cpp:990] Path /alidata1/nebula-graph-2.0.0/data/ space id 1 compaction begin
I0519 19:30:57.880268 18235 EventListner.h:18] Rocksdb start compaction column family: default because of ManualCompaction, status: OK, compacted 6521 files into 0, base level is 0, output level is 1
```
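To read the per-part progress in the log above more easily, the sketch below pairs each "Start to handle vertex/edge/index data ... part id N" line with the matching "part id N finished" line and prints the elapsed seconds. `part_durations` is a hypothetical helper, not part of Nebula. It assumes the glog line layout seen above (`I<MMDD> <HH:MM:SS.micro> <thread> <file:line>] <message>`) and a run that does not cross midnight. In the excerpt above, parts 1 to 10 have no "Start" line and parts 21 to 30 have no "finished" line, so only parts 11 to 20 would be reported (each taking about 36 to 37 minutes).

```shell
#!/usr/bin/env bash
# Hypothetical helper (not part of Nebula): print how long each part took,
# based on the "Start to handle ..." / "... finished" pairs in a db_upgrader log.
# Assumes glog lines "I<MMDD> <HH:MM:SS.micro> <tid> <file:line>] <msg>"
# and a run that does not cross midnight. Reads files given as args, else stdin.
part_durations() {
  awk '
    # Convert "HH:MM:SS.micro" into seconds since midnight.
    function secs(t,  a) { split(t, a, ":"); return a[1] * 3600 + a[2] * 60 + a[3] }
    # e.g. "... Start to handle vertex/edge/index data in space id 1 part id 11"
    /Start to handle vertex\/edge\/index data/ { start[$NF] = secs($2) }
    # e.g. "... Handle vertex/edge/index data in space id 1 part id 11 finished"
    /part id [0-9]+ finished/ {
      p = $(NF - 1)
      if (p in start) printf "part %s: %.0f s\n", p, secs($2) - start[p]
    }
  ' "$@"
}

# Usage (the log file name is an assumption):
#   part_durations db_upgrader.log
```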

However, at around 20:40 that evening, when the new-version data on disk had grown to 455 GB, the process was KILLED.

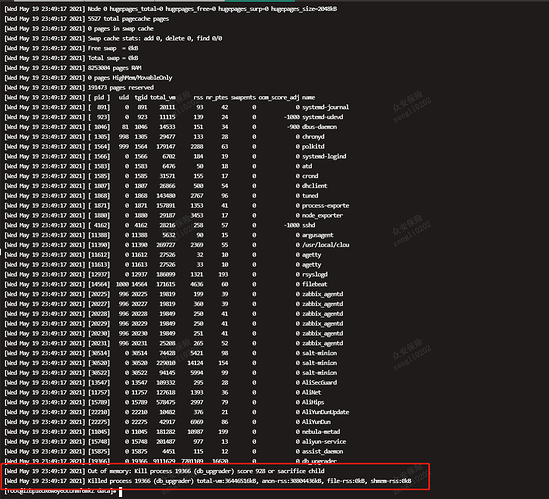

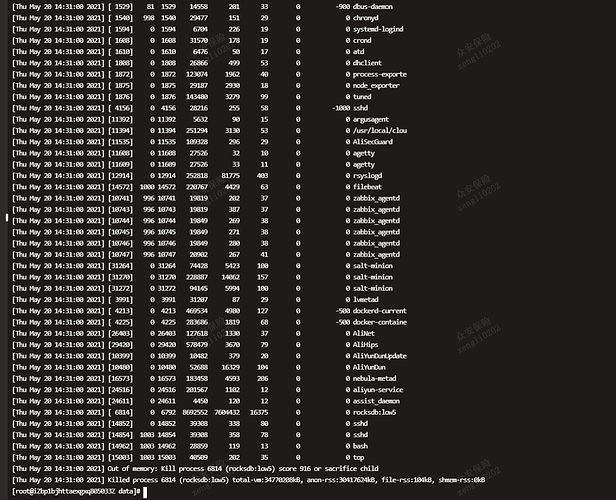
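A shell usually prints `Killed` when a foreground job is terminated by SIGKILL. By shell convention, an exit status above 128 means "terminated by signal (status - 128)", so 137 corresponds to signal 9. The sketch below is a hypothetical helper (not part of Nebula) that turns the exit status of the upgrade command into a readable message. It only interprets the status; it does not tell you who sent the signal.

```shell
#!/usr/bin/env bash
# Hypothetical helper (not part of Nebula): describe a shell exit status.
# By shell convention, a status above 128 means the process was terminated
# by signal number (status - 128); e.g. 137 = 128 + 9, i.e. SIGKILL.
describe_exit() {
  code=$1
  if [ "$code" -gt 128 ]; then
    sig=$((code - 128))
    # "kill -l <number>" prints the signal name, e.g. KILL for 9
    echo "killed by signal $sig ($(kill -l "$sig"))"
  else
    echo "exited with status $code"
  fi
}

# Usage sketch: run the same upgrade command as above, then inspect $?
#   /opt/nebula-graph-2.0.0/bin/db_upgrader \
#     --src_db_path=/alidata1/nebula-graph/data/ \
#     --dst_db_path=/alidata1/nebula-graph-2.0.0/data/ \
#     --upgrade_meta_server=172.26.18.168:45500,172.26.25.76:45500,172.26.25.77:45500 \
#     --upgrade_version=1
#   describe_exit $?
```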

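To avoid the error, I relaunched the upgrade with nohup at around 21:00, but at about 23:45 the same thing happened again: the process got stuck in compaction, with the new-version data on disk at 622 to 655 GB.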

Checking with `dmesg -T` showed that db_upgrader had been killed.
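The post does not say what killed db_upgrader. If it was the kernel's OOM killer, the kernel log normally contains lines such as `Out of memory: ...` and `Killed process <pid> (<name>) ...`. The exact wording varies across kernel versions, so the patterns below are an assumption, and `oom_kills` is a hypothetical helper name.

```shell
#!/usr/bin/env bash
# Hypothetical helper: keep only kernel-log lines that look like OOM-killer
# activity for a given process name (default: db_upgrader).
# The patterns are an assumption; message wording differs between kernels.
oom_kills() {
  grep -iE 'out of memory|killed process' | grep -F -- "${1:-db_upgrader}"
}

# Usage (reading the kernel log usually requires root):
#   dmesg -T | oom_kills db_upgrader
```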

The configuration files (nebula-metad.conf and nebula-storaged.conf) are as follows:

```text
[root@iZbp10ox6w6y6oconmf6mkZ etc]# more nebula-metad.conf
########## basics ##########
# Whether to run as a daemon process
--daemonize=true
# The file to host the process id
--pid_file=pids/nebula-metad.pid

########## logging ##########
# The directory to host logging files, which must already exists
--log_dir=/alidata1/nebula-graph-2.0.0/log
# Log level, 0, 1, 2, 3 for INFO, WARNING, ERROR, FATAL respectively
--minloglevel=0
# Verbose log level, 1, 2, 3, 4, the higher of the level, the more verbose of the logging
--v=0
# Maximum seconds to buffer the log messages
--logbufsecs=0

########## networking ##########
# Meta Server Address
--meta_server_addrs=172.26.18.168:45500,172.26.25.76:45500,172.26.25.77:45500
# Local ip
--local_ip=172.26.25.77
# Meta daemon listening port
--port=45500
# HTTP service ip
--ws_ip=172.26.25.77
# HTTP service port
--ws_http_port=11000
# HTTP2 service port
--ws_h2_port=11002
--heartbeat_interval_secs=60

########## storage ##########
# Root data path, here should be only single path for metad
--data_path=/alidata1/nebula-graph-2.0.0/meta

########## other #########
# wether support null type
--null_type=true
```

```text
[root@iZbp10ox6w6y6oconmf6mkZ etc]# more nebula-storaged.conf
########## basics ##########
# Whether to run as a daemon process
--daemonize=true
# The file to host the process id
--pid_file=pids/nebula-storaged.pid

########## logging ##########
# The directory to host logging files, which must already exists
--log_dir=/alidata1/nebula-graph-2.0.0/log
# Log level, 0, 1, 2, 3 for INFO, WARNING, ERROR, FATAL respectively
--minloglevel=0
# Verbose log level, 1, 2, 3, 4, the higher of the level, the more verbose of the logging
--v=0
# Maximum seconds to buffer the log messages
--logbufsecs=0

########## networking ##########
# Meta server address
--meta_server_addrs=172.26.18.168:45500,172.26.25.76:45500,172.26.25.77:45500
# Local ip
--local_ip=172.26.25.77
# Storage daemon listening port
--port=44500
# HTTP service ip
--ws_ip=172.26.25.77
# HTTP service port
--ws_http_port=12000
# HTTP2 service port
--ws_h2_port=12002
# heartbeat with meta service
--heartbeat_interval_secs=60

######### Raft #########
# Raft election timeout
--raft_heartbeat_interval_secs=30
# RPC timeout for raft client (ms)
--raft_rpc_timeout_ms=10000
## recycle Raft WAL
--wal_ttl=3600

########## Disk ##########
# Root data path. Split by comma. e.g. --data_path=/disk1/path1/,/disk2/path2/
# One path per Rocksdb instance.
--data_path=/alidata1/nebula-graph-2.0.0/data

############## Rocksdb Options ##############
# The default reserved bytes for one batch operation
--rocksdb_batch_size=4096
# The default block cache size used in BlockBasedTable. (MB)
# recommend: 1/3 of all memory
--rocksdb_block_cache=10240

# Compression algorithm, options: no,snappy,lz4,lz4hc,zlib,bzip2,zstd
# For the sake of binary compatibility, the default value is snappy.
# Recommend to use:
# * lz4 to gain more CPU performance, with the same compression ratio with snappy
# * zstd to occupy less disk space
# * lz4hc for the read-heavy write-light scenario
--rocksdb_compression=snappy

# Set different compressions for different levels
# For example, if --rocksdb_compression is snappy,
# "no:no:lz4:lz4::zstd" is identical to "no:no:lz4:lz4:snappy:zstd:snappy"
# In order to disable compression for level 0/1, set it to "no:no"
--rocksdb_compression_per_level=

# Whether or not to enable rocksdb's statistics, disabled by default
--enable_rocksdb_statistics=false

# Statslevel used by rocksdb to collection statistics, optional values are
# * kExceptHistogramOrTimers, disable timer stats, and skip histogram stats
# * kExceptTimers, Skip timer stats
# * kExceptDetailedTimers, Collect all stats except time inside mutex lock AND time spent on compression.
# * kExceptTimeForMutex, Collect all stats except the counters requiring to get time inside the mutex lock.
# * kAll, Collect all stats
--rocksdb_stats_level=kExceptHistogramOrTimers

# Whether or not to enable rocksdb's prefix bloom filter, disabled by default.
--enable_rocksdb_prefix_filtering=false
# Whether or not to enable the whole key filtering.
--enable_rocksdb_whole_key_filtering=true
# The prefix length for each key to use as the filter value.
# can be 12 bytes(PartitionId + VertexID), or 16 bytes(PartitionId + VertexID + TagID/EdgeType).
--rocksdb_filtering_prefix_length=12

############## rocksdb Options ##############
# rocksdb DBOptions in json, each name and value of option is a string, given as "option_name":"option_value" separated by comma
--rocksdb_db_options={"max_subcompactions":"1","max_background_jobs":"1"}
# rocksdb ColumnFamilyOptions in json, each name and value of option is string, given as "option_name":"option_value" separated by comma
--rocksdb_column_family_options={"disable_auto_compactions":"false","write_buffer_size":"67108864","max_write_buffer_number":"4","max_bytes_for_level_base":"268435456"}
# rocksdb BlockBasedTableOptions in json, each name and value of option is string, given as "option_name":"option_value" separated by comma
--rocksdb_block_based_table_options={"block_size":"8192"}

############# edge samplings ##############
# --enable_reservoir_sampling=false
```
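The upgrader log earlier in the post shows db_upgrader applying its own RocksDB options to both instances it opens (source and destination), and these differ from `--rocksdb_column_family_options` in nebula-storaged.conf. A rough per-column-family ceiling on memtable memory is `write_buffer_size * max_write_buffer_number`. The worked arithmetic below compares the two settings. `memtable_mib` is a hypothetical helper. The figure ignores block cache, index/filter blocks and compaction buffers, so on its own it does not show what caused the kill.

```shell
#!/usr/bin/env bash
# Worked arithmetic (hypothetical helper, not a Nebula tool):
# approximate memtable ceiling per RocksDB column family
#   = write_buffer_size * max_write_buffer_number, reported in MiB.
memtable_mib() {
  # $1 = write_buffer_size in bytes, $2 = max_write_buffer_number
  echo $(( $1 * $2 / 1024 / 1024 ))
}

# Values logged by db_upgrader ("Emplace rocksdb option ..."), per instance:
echo "db_upgrader:     $(memtable_mib 134217728 12) MiB"
# Values from --rocksdb_column_family_options in nebula-storaged.conf:
echo "nebula-storaged: $(memtable_mib 67108864 4) MiB"
```

With these inputs the upgrader's memtable ceiling per instance is six times the one configured for storaged.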