- nebula version: 2.5.0
- Deployment (distributed / standalone / Docker / DBaaS): k8s
- Production environment: Y
- Problem description:

I upgraded the resources with Helm:

```shell
--namespace database --create-namespace \
--set nebula.storaged.resources.limits.cpu="2" \
--set nebula.storaged.resources.limits.memory="2Gi"
```
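Helm's `--set` treats each dot in the key as one level of nesting, so both flags above land in the same `nebula.storaged.resources.limits` map of the chart values (the shell strips the double quotes before Helm sees them). The minimal Python model below illustrates that merge. `merge_set_flags` is a hypothetical helper, not Helm code: it keeps every value as a string (Helm may coerce types), ignores list indexes and escaped dots, and says nothing about how the chart then uses these values.

```python
from typing import Any, Dict, List


def merge_set_flags(flags: List[str]) -> Dict[str, Any]:
    """Simplified model of `helm --set a.b.c=v`: each dot adds a nesting level.

    Hypothetical helper for illustration only. Unlike Helm it keeps every value
    as a string and does not handle list indexes (a[0]), escaped dots or commas.
    """
    values: Dict[str, Any] = {}
    for flag in flags:
        key, _, value = flag.partition("=")
        *parents, leaf = key.split(".")
        node = values
        for part in parents:
            # Reuse an existing sub-map so later flags merge into it.
            node = node.setdefault(part, {})
        node[leaf] = value
    return values


# The shell has already removed the quotes around "2" and "2Gi".
flags = [
    "nebula.storaged.resources.limits.cpu=2",
    "nebula.storaged.resources.limits.memory=2Gi",
]
print(merge_set_flags(flags))
# {'nebula': {'storaged': {'resources': {'limits': {'cpu': '2', 'memory': '2Gi'}}}}}
```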

After that, the StatefulSet YAML was updated, but the pods were not redeployed and their CPU and memory did not change.

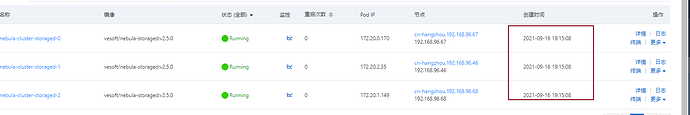

The screenshot shows the pods still have yesterday's creation time, so they were not redeployed.
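With the StatefulSet `RollingUpdate` strategy, Kubernetes only replaces pods whose ordinal is greater than or equal to `spec.updateStrategy.rollingUpdate.partition`; pods below the partition keep their current revision even after the template changes. The StatefulSet shown below has `replicas: 3` and `partition: 3`, so by that rule none of the three pods would be recreated. The short Python sketch below (hypothetical helper `ordinals_to_update`) only spells out that rule; it does not say which component is expected to lower the partition in a nebula-operator deployment.

```python
from typing import List


def ordinals_to_update(replicas: int, partition: int) -> List[int]:
    """Pod ordinals a StatefulSet RollingUpdate moves to the update revision.

    Only pods with ordinal >= partition are updated; the rest stay on the
    current revision even if the pod template has changed.
    """
    return [ordinal for ordinal in range(replicas) if ordinal >= partition]


print(ordinals_to_update(3, 3))  # [] -> no pod is recreated
print(ordinals_to_update(3, 0))  # [0, 1, 2]
```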

Full YAML of the StatefulSet after the change:

```yaml
apiVersion: apps/v1
kind: StatefulSet
metadata:
  annotations:
    nebula-graph.io/last-applied-configuration: >-
      {"podManagementPolicy":"Parallel","replicas":3,"revisionHistoryLimit":10,"selector":{"matchLabels":{"app.kubernetes.io/cluster":"nebula-cluster","app.kubernetes.io/component":"storaged","app.kubernetes.io/managed-by":"nebula-operator","app.kubernetes.io/name":"nebula-graph"}},"serviceName":"nebula-cluster-storaged-headless","template":{"metadata":{"annotations":{"nebula-graph.io/cm-hash":"a55d2afe43d67e31"},"creationTimestamp":null,"labels":{"app.kubernetes.io/cluster":"nebula-cluster","app.kubernetes.io/component":"storaged","app.kubernetes.io/managed-by":"nebula-operator","app.kubernetes.io/name":"nebula-graph"}},"spec":{"containers":[{"command":["/bin/bash","-ecx","exec
      /usr/local/nebula/bin/nebula-storaged
      --flagfile=/usr/local/nebula/etc/nebula-storaged.conf
      --meta_server_addrs=nebula-cluster-metad-0.nebula-cluster-metad-headless.database.svc.cluster.local:9559,nebula-cluster-metad-1.nebula-cluster-metad-headless.database.svc.cluster.local:9559,nebula-cluster-metad-2.nebula-cluster-metad-headless.database.svc.cluster.local:9559
      --local_ip=$(hostname).nebula-cluster-storaged-headless.database.svc.cluster.local
      --ws_ip=$(hostname).nebula-cluster-storaged-headless.database.svc.cluster.local
      --daemonize=false"],"image":"vesoft/nebula-storaged:v2.5.0","imagePullPolicy":"IfNotPresent","name":"storaged","ports":[{"containerPort":9779,"name":"thrift"},{"containerPort":19779,"name":"http"},{"containerPort":19780,"name":"http2"},{"containerPort":9778,"name":"admin"}],"readinessProbe":{"httpGet":{"path":"/status","port":19779,"scheme":"HTTP"},"initialDelaySeconds":20,"periodSeconds":10,"timeoutSeconds":5},"resources":{"limits":{"cpu":"1","memory":"2Gi"},"requests":{"cpu":"1","memory":"2Gi"}},"volumeMounts":[{"mountPath":"/usr/local/nebula/logs","name":"storaged","subPath":"logs"},{"mountPath":"/usr/local/nebula/data","name":"storaged","subPath":"data"},{"mountPath":"/usr/local/nebula/etc","name":"nebula-cluster-storaged"}]}],"schedulerName":"default-scheduler","topologySpreadConstraints":[{"labelSelector":{"matchLabels":{"app.kubernetes.io/cluster":"nebula-cluster","app.kubernetes.io/component":"storaged","app.kubernetes.io/managed-by":"nebula-operator","app.kubernetes.io/name":"nebula-graph"}},"maxSkew":1,"topologyKey":"kubernetes.io/hostname","whenUnsatisfiable":"ScheduleAnyway"}],"volumes":[{"name":"storaged","persistentVolumeClaim":{"claimName":"storaged"}},{"configMap":{"items":[{"key":"nebula-storaged.conf","path":"nebula-storaged.conf"}],"name":"nebula-cluster-storaged"},"name":"nebula-cluster-storaged"}]}},"updateStrategy":{"rollingUpdate":{"partition":3},"type":"RollingUpdate"},"volumeClaimTemplates":[{"apiVersion":"v1","kind":"PersistentVolumeClaim","metadata":{"creationTimestamp":null,"name":"storaged"},"spec":{"accessModes":["ReadWriteOnce"],"resources":{"requests":{"storage":"50Gi"}},"storageClassName":"alicloud-nas-subpath-prod","volumeMode":"Filesystem"},"status":{"phase":"Pending"}}]}
  creationTimestamp: '2021-09-16T11:14:56Z'
  generation: 108
  labels:
    app.kubernetes.io/cluster: nebula-cluster
    app.kubernetes.io/component: storaged
    app.kubernetes.io/managed-by: nebula-operator
    app.kubernetes.io/name: nebula-graph
  managedFields:
    - apiVersion: apps/v1
      fieldsType: FieldsV1
      fieldsV1:
        'f:metadata':
          'f:annotations':
            .: {}
            'f:nebula-graph.io/last-applied-configuration': {}
          'f:labels':
            .: {}
            'f:app.kubernetes.io/cluster': {}
            'f:app.kubernetes.io/component': {}
            'f:app.kubernetes.io/managed-by': {}
            'f:app.kubernetes.io/name': {}
          'f:ownerReferences':
            .: {}
            'k:{"uid":"1f2c9e33-74dc-4999-b238-8d33436d39d4"}':
              .: {}
              'f:apiVersion': {}
              'f:blockOwnerDeletion': {}
              'f:controller': {}
              'f:kind': {}
              'f:name': {}
              'f:uid': {}
        'f:spec':
          'f:podManagementPolicy': {}
          'f:replicas': {}
          'f:revisionHistoryLimit': {}
          'f:selector': {}
          'f:serviceName': {}
          'f:template':
            'f:metadata':
              'f:annotations':
                .: {}
                'f:nebula-graph.io/cm-hash': {}
              'f:labels':
                .: {}
                'f:app.kubernetes.io/cluster': {}
                'f:app.kubernetes.io/component': {}
                'f:app.kubernetes.io/managed-by': {}
                'f:app.kubernetes.io/name': {}
            'f:spec':
              'f:containers':
                'k:{"name":"storaged"}':
                  .: {}
                  'f:command': {}
                  'f:image': {}
                  'f:imagePullPolicy': {}
                  'f:name': {}
                  'f:ports':
                    .: {}
                    'k:{"containerPort":19779,"protocol":"TCP"}':
                      .: {}
                      'f:containerPort': {}
                      'f:name': {}
                      'f:protocol': {}
                    'k:{"containerPort":19780,"protocol":"TCP"}':
                      .: {}
                      'f:containerPort': {}
                      'f:name': {}
                      'f:protocol': {}
                    'k:{"containerPort":9778,"protocol":"TCP"}':
                      .: {}
                      'f:containerPort': {}
                      'f:name': {}
                      'f:protocol': {}
                    'k:{"containerPort":9779,"protocol":"TCP"}':
                      .: {}
                      'f:containerPort': {}
                      'f:name': {}
                      'f:protocol': {}
                  'f:readinessProbe':
                    .: {}
                    'f:failureThreshold': {}
                    'f:httpGet':
                      .: {}
                      'f:path': {}
                      'f:port': {}
                      'f:scheme': {}
                    'f:initialDelaySeconds': {}
                    'f:periodSeconds': {}
                    'f:successThreshold': {}
                    'f:timeoutSeconds': {}
                  'f:resources':
                    .: {}
                    'f:limits':
                      .: {}
                      'f:memory': {}
                    'f:requests':
                      .: {}
                      'f:cpu': {}
                      'f:memory': {}
                  'f:terminationMessagePath': {}
                  'f:terminationMessagePolicy': {}
                  'f:volumeMounts':
                    .: {}
                    'k:{"mountPath":"/usr/local/nebula/data"}':
                      .: {}
                      'f:mountPath': {}
                      'f:name': {}
                      'f:subPath': {}
                    'k:{"mountPath":"/usr/local/nebula/etc"}':
                      .: {}
                      'f:mountPath': {}
                      'f:name': {}
                    'k:{"mountPath":"/usr/local/nebula/logs"}':
                      .: {}
                      'f:mountPath': {}
                      'f:name': {}
                      'f:subPath': {}
              'f:dnsPolicy': {}
              'f:restartPolicy': {}
              'f:schedulerName': {}
              'f:securityContext': {}
              'f:terminationGracePeriodSeconds': {}
              'f:topologySpreadConstraints':
                .: {}
                'k:{"topologyKey":"kubernetes.io/hostname","whenUnsatisfiable":"ScheduleAnyway"}':
                  .: {}
                  'f:labelSelector': {}
                  'f:maxSkew': {}
                  'f:topologyKey': {}
                  'f:whenUnsatisfiable': {}
              'f:volumes':
                .: {}
                'k:{"name":"nebula-cluster-storaged"}':
                  .: {}
                  'f:configMap':
                    .: {}
                    'f:defaultMode': {}
                    'f:items': {}
                    'f:name': {}
                  'f:name': {}
                'k:{"name":"storaged"}':
                  .: {}
                  'f:name': {}
                  'f:persistentVolumeClaim':
                    .: {}
                    'f:claimName': {}
          'f:updateStrategy':
            'f:rollingUpdate':
              .: {}
              'f:partition': {}
            'f:type': {}
          'f:volumeClaimTemplates': {}
      manager: controller-manager
      operation: Update
      time: '2021-09-16T11:14:56Z'
    - apiVersion: apps/v1
      fieldsType: FieldsV1
      fieldsV1:
        'f:status':
          'f:collisionCount': {}
          'f:currentReplicas': {}
          'f:currentRevision': {}
          'f:observedGeneration': {}
          'f:readyReplicas': {}
          'f:replicas': {}
          'f:updateRevision': {}
      manager: kube-controller-manager
      operation: Update
      time: '2021-09-16T13:30:43Z'
    - apiVersion: apps/v1
      fieldsType: FieldsV1
      fieldsV1:
        'f:spec':
          'f:template':
            'f:spec':
              'f:containers':
                'k:{"name":"storaged"}':
                  'f:resources':
                    'f:limits':
                      'f:cpu': {}
      manager: Apache-HttpClient
      operation: Update
      time: '2021-09-17T01:45:11Z'
  name: nebula-cluster-storaged
  namespace: database
  ownerReferences:
    - apiVersion: apps.nebula-graph.io/v1alpha1
      blockOwnerDeletion: true
      controller: true
      kind: NebulaCluster
      name: nebula-cluster
      uid: 1f2c9e33-74dc-4999-b238-8d33436d39d4
  resourceVersion: '1821771619'
  uid: 8a47f25e-f0db-4c1a-8b3a-4027521448f9
spec:
  podManagementPolicy: Parallel
  replicas: 3
  revisionHistoryLimit: 10
  selector:
    matchLabels:
      app.kubernetes.io/cluster: nebula-cluster
      app.kubernetes.io/component: storaged
      app.kubernetes.io/managed-by: nebula-operator
      app.kubernetes.io/name: nebula-graph
  serviceName: nebula-cluster-storaged-headless
  template:
    metadata:
      annotations:
        nebula-graph.io/cm-hash: a55d2afe43d67e31
      labels:
        app.kubernetes.io/cluster: nebula-cluster
        app.kubernetes.io/component: storaged
        app.kubernetes.io/managed-by: nebula-operator
        app.kubernetes.io/name: nebula-graph
    spec:
      containers:
        - command:
            - /bin/bash
            - '-ecx'
            - >-
              exec /usr/local/nebula/bin/nebula-storaged
              --flagfile=/usr/local/nebula/etc/nebula-storaged.conf
              --meta_server_addrs=nebula-cluster-metad-0.nebula-cluster-metad-headless.database.svc.cluster.local:9559,nebula-cluster-metad-1.nebula-cluster-metad-headless.database.svc.cluster.local:9559,nebula-cluster-metad-2.nebula-cluster-metad-headless.database.svc.cluster.local:9559
              --local_ip=$(hostname).nebula-cluster-storaged-headless.database.svc.cluster.local
              --ws_ip=$(hostname).nebula-cluster-storaged-headless.database.svc.cluster.local
              --daemonize=false
          image: 'vesoft/nebula-storaged:v2.5.0'
          imagePullPolicy: IfNotPresent
          name: storaged
          ports:
            - containerPort: 9779
              name: thrift
              protocol: TCP
            - containerPort: 19779
              name: http
              protocol: TCP
            - containerPort: 19780
              name: http2
              protocol: TCP
            - containerPort: 9778
              name: admin
              protocol: TCP
          readinessProbe:
            failureThreshold: 3
            httpGet:
              path: /status
              port: 19779
              scheme: HTTP
            initialDelaySeconds: 20
            periodSeconds: 10
            successThreshold: 1
            timeoutSeconds: 5
          resources:
            limits:
              cpu: '2'
              memory: 2Gi
            requests:
              cpu: '1'
              memory: 2Gi
          terminationMessagePath: /dev/termination-log
          terminationMessagePolicy: File
          volumeMounts:
            - mountPath: /usr/local/nebula/logs
              name: storaged
              subPath: logs
            - mountPath: /usr/local/nebula/data
              name: storaged
              subPath: data
            - mountPath: /usr/local/nebula/etc
              name: nebula-cluster-storaged
      dnsPolicy: ClusterFirst
      restartPolicy: Always
      schedulerName: default-scheduler
      securityContext: {}
      terminationGracePeriodSeconds: 30
      topologySpreadConstraints:
        - labelSelector:
            matchLabels:
              app.kubernetes.io/cluster: nebula-cluster
              app.kubernetes.io/component: storaged
              app.kubernetes.io/managed-by: nebula-operator
              app.kubernetes.io/name: nebula-graph
          maxSkew: 1
          topologyKey: kubernetes.io/hostname
          whenUnsatisfiable: ScheduleAnyway
      volumes:
        - name: storaged
          persistentVolumeClaim:
            claimName: storaged
        - configMap:
            defaultMode: 420
            items:
              - key: nebula-storaged.conf
                path: nebula-storaged.conf
            name: nebula-cluster-storaged
          name: nebula-cluster-storaged
  updateStrategy:
    rollingUpdate:
      partition: 3
    type: RollingUpdate
  volumeClaimTemplates:
    - apiVersion: v1
      kind: PersistentVolumeClaim
      metadata:
        name: storaged
      spec:
        accessModes:
          - ReadWriteOnce
        resources:
          requests:
            storage: 50Gi
        storageClassName: alicloud-nas-subpath-prod
        volumeMode: Filesystem
      status:
        phase: Pending
status:
  collisionCount: 0
  currentReplicas: 3
  currentRevision: nebula-cluster-storaged-75747d5877
  observedGeneration: 108
  readyReplicas: 3
  replicas: 3
  updateRevision: nebula-cluster-storaged-666654f58b
```
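In the YAML above, `status.currentRevision` (`nebula-cluster-storaged-75747d5877`) differs from `status.updateRevision` (`nebula-cluster-storaged-666654f58b`), and the `nebula-graph.io/last-applied-configuration` annotation still records a CPU limit of `"1"` while the live template has `cpu: '2'`; `managedFields` also lists `Apache-HttpClient`, not `controller-manager`, as the manager of `limits.cpu`. The Python sketch below makes the first two checks explicit on values copied by hand (abridged) from that YAML. `rollout_pending` and `limits_drift` are hypothetical helper names, and reading the annotation as "what the operator last applied" is an assumption based on its name.

```python
import json
from typing import Dict, Tuple

# Values copied by hand from the StatefulSet YAML above (abridged). Parsing the
# full document would need a third-party YAML library, so they are inlined.
status = {
    "currentRevision": "nebula-cluster-storaged-75747d5877",
    "updateRevision": "nebula-cluster-storaged-666654f58b",
}
live_limits = {"cpu": "2", "memory": "2Gi"}

# Abridged excerpt of the nebula-graph.io/last-applied-configuration annotation.
last_applied = json.loads(
    '{"template": {"spec": {"containers": [{"name": "storaged",'
    ' "resources": {"limits": {"cpu": "1", "memory": "2Gi"}}}]}}}'
)


def rollout_pending(sts_status: Dict[str, str]) -> bool:
    """True when pods still run an older revision than the latest template."""
    return sts_status["currentRevision"] != sts_status["updateRevision"]


def limits_drift(applied: dict, live: Dict[str, str]) -> Dict[str, Tuple[str, str]]:
    """Map each limit that differs to (annotation value, live template value)."""
    containers = applied["template"]["spec"]["containers"]
    storaged = next(c for c in containers if c["name"] == "storaged")
    recorded = storaged["resources"]["limits"]
    return {k: (recorded.get(k), v) for k, v in live.items() if recorded.get(k) != v}


print(rollout_pending(status))                  # True
print(limits_drift(last_applied, live_limits))  # {'cpu': ('1', '2')}
```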