nebula version: v3.2.0

Deployment: distributed

Installation method: RPM

Production environment: Y

Hardware information

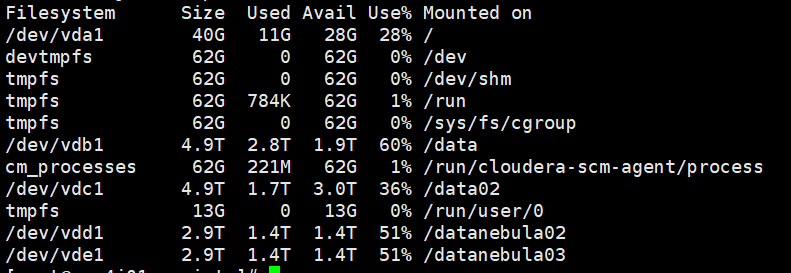

Disk (SSD recommended): SSD

CPU and memory information

32 cores and 128 GB of memory (32c128g), five servers in total

202 metad:9559 graphd:9669 storaged:9779

203 metad:9559 graphd:9669 storaged:9779

204 metad:9559 graphd:9669 storaged:9779

205 graphd:9669 storaged:9779

206 graphd:9669 storaged:9779

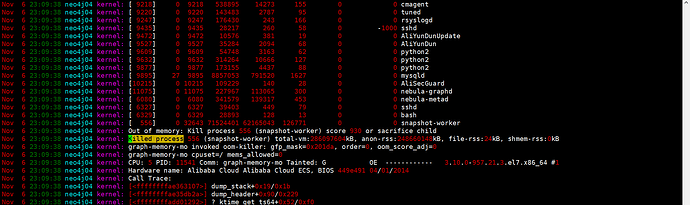

Problem description: the Storage service fails to start. When it occasionally does start, it crashes afterwards.

storaged-stderr.log

*** Signal 6 (SIGABRT) (0xa2e) received by PID 2606 (pthread TID 0x7feece3ff700) (linux TID 2636) (maybe from PID 2606, UID 0) (code: -6), stack trace: ***

(error retrieving stack trace)

ExceptionHandler::GenerateDump sys_pipe failed:Too many open files

ExceptionHandler::WaitForContinueSignal sys_read failed:ExceptionHandler::SendContinueSignalToChild sys_write failed:Bad file descriptor

Bad file descriptor

*** Aborted at 1666921888 (Unix time, try 'date -d @1666921888') ***

*** Signal 6 (SIGABRT) (0xa2e) received by PID 2606 (pthread TID 0x7feecd7ff700) (linux TID 2638) (maybe from PID 2606, UID 0) (code: -6), stack trace: ***

(error retrieving stack trace)

*** Aborted at 1666921888 (Unix time, try 'date -d @1666921888') ***

*** Signal 6 (SIGABRT) (0xa2e) received by PID 2606 (pthread TID 0x7feed17ff700) (linux TID 2628) (maybe from PID 2606, UID 0) (code: -6), stack trace: ***

(error retrieving stack trace)

Could not create logging file: Too many open files

COULD NOT CREATE A LOGGINGFILE 20221028-102847.23727!Could not create logging file: Too many open files

COULD NOT CREATE A LOGGINGFILE 20221028-102847.23727!F20221028 10:28:47.280041 23744 RaftPart.cpp:1073] [Port: 9780, Space: 120, Part: 32] Failed to commit logs

*** Check failure stack trace: ***

*** Aborted at 1666924127 (Unix time, try 'date -d @1666924127') ***

*** Signal 6 (SIGABRT) (0x5caf) received by PID 23727 (pthread TID 0x7fb910bff700) (linux TID 23744) (maybe from PID 23727, UID 0) (code: -6), stack trace: ***

(error retrieving stack trace)

ExceptionHandler::GenerateDump sys_pipe failed:Too many open files

ExceptionHandler::WaitForContinueSignal sys_read failed:ExceptionHandler::SendContinueSignalToChild sys_write failed:Bad file descriptor

Bad file descriptor

Could not create logging file: Too many open files

COULD NOT CREATE A LOGGINGFILE 20221101-033954.16674!F20221101 03:39:54.263427 16687 RaftPart.cpp:1073] [Port: 9780, Space: 120, Part: 4] Failed to commit logs

*** Check failure stack trace: ***

F20221101 03:39:54.265478 16710 RaftPart.cpp:1073] [Port: 9780, Space: 120, Part: 2] Failed to commit logsF20221101 03:39:54.265703 16704 RaftPart.cpp:1073] [Port: 9780, Space: 120, Part: 17] Failed to commit logs

*** Check failure stack trace: ***

*** Aborted at 1667245194 (Unix time, try 'date -d @1667245194') ***

F20221101 03:39:54.265478 16710 RaftPart.cpp:1073] [Port: 9780, Space: 120, Part: 2] Failed to commit logsF20221101 03:39:54.265703 16704 RaftPart.cpp:1073] [Port: 9780, Space: 120, Part: 17] Failed to commit logs

*** Check failure stack trace: ***

*** Signal 6 (SIGABRT) (0x4122) received by PID 16674 (pthread TID 0x7f952acfe700) (linux TID 16687) (maybe from PID 16674, UID 0) (code: -6), stack trace: ***

(error retrieving stack trace)

ExceptionHandler::GenerateDump sys_pipe failed:Too many open files

ExceptionHandler::WaitForContinueSignal sys_read failed:ExceptionHandler::SendContinueSignalToChild sys_write failed:Bad file descriptorBad file descriptor

*** Aborted at 1667245194 (Unix time, try 'date -d @1667245194') ***

*** Signal 6 (SIGABRT) (0x4122) received by PID 16674 (pthread TID 0x7f9521dff700) (linux TID 16710) (maybe from PID 16674, UID 0) (code: -6), stack trace: ***

(error retrieving stack trace)

*** Aborted at 1667245194 (Unix time, try 'date -d @1667245194') ***

*** Signal 6 (SIGABRT) (0x4122) received by PID 16674 (pthread TID 0x7f95241ff700) (linux TID 16704) (maybe from PID 16674, UID 0) (code: -6), stack trace: ***

(error retrieving stack trace)

Could not create logging file: Too many open files

COULD NOT CREATE A LOGGINGFILE 20221101-103402.9782!Could not create logging file: Too many open files

COULD NOT CREATE A LOGGINGFILE 20221101-103402.9782!Could not create logging file: Too many open files

COULD NOT CREATE A LOGGINGFILE 20221101-103402.9782!F20221101 10:34:02.414801 9797 RaftPart.cpp:1073] [Port: 9780, Space: 120, Part: 4] Failed to commit logs

*** Check failure stack trace: ***

*** Aborted at 1667270042 (Unix time, try 'date -d @1667270042') ***

*** Signal 6 (SIGABRT) (0x2636) received by PID 9782 (pthread TID 0x7f7c135ff700) (linux TID 9797) (maybe from PID 9782, UID 0) (code: -6), stack trace: ***

(error retrieving stack trace)

ExceptionHandler::GenerateDump sys_pipe failed:Too many open files

ExceptionHandler::WaitForContinueSignal sys_read failed:ExceptionHandler::SendContinueSignalToChild sys_write failed:Bad file descriptorBad file descriptor

F20221101 10:34:02.691797 9818 RaftPart.cpp:1073] [Port: 9780, Space: 120, Part: 2] Failed to commit logs

*** Check failure stack trace: ***

Could not create logging file: Too many open files

COULD NOT CREATE A LOGGINGFILE 20221101-105248.15484!Could not create logging file: Too many open files

COULD NOT CREATE A LOGGINGFILE 20221101-105248.15484!Could not create logging file: Too many open files

COULD NOT CREATE A LOGGINGFILE 20221101-105249.15484!F20221101 10:52:49.521068 15507 RaftPart.cpp:1073] [Port: 9780, Space: 120, Part: 2] Failed to commit logs

*** Check failure stack trace: ***

F20221101 10:52:49.521129 15517 RaftPart.cpp:1073] [Port: 9780, Space: 120, Part: 4] Failed to commit logs

*** Check failure stack trace: ***

*** Aborted at 1667271169 (Unix time, try 'date -d @1667271169') ***

*** Signal 6 (SIGABRT) (0x3c7c) received by PID 15484 (pthread TID 0x7f03c4dff700) (linux TID 15507) (maybe from PID 15484, UID 0) (code: -6), stack trace: ***

(error retrieving stack trace)

ExceptionHandler::GenerateDump sys_pipe failed:Too many open files

ExceptionHandler::WaitForContinueSignal sys_read failed:ExceptionHandler::SendContinueSignalToChild sys_write failed:Bad file descriptorBad file descriptor

*** Aborted at 1667271169 (Unix time, try 'date -d @1667271169') ***

*** Signal 6 (SIGABRT) (0x3c7c) received by PID 15484 (pthread TID 0x7f03c12ff700) (linux TID 15517) (maybe from PID 15484, UID 0) (code: -6), stack trace: ***

(error retrieving stack trace)
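Because several fatal lines in the stderr output above are fused onto one line, it can be hard to see which partitions are affected. The following is a minimal sketch, not part of NebulaGraph, that tallies the `Failed to commit logs` entries per space and part. The function name `tally_failed_commits` and the regular expression are assumptions based only on the log format shown in this post.

```python
import re
from collections import Counter

# Matches the bracketed part of lines such as:
#   RaftPart.cpp:1073] [Port: 9780, Space: 120, Part: 32] Failed to commit logs
FAILED_COMMIT = re.compile(
    r"\[Port: (\d+), Space: (\d+), Part: (\d+)\] Failed to commit logs"
)


def tally_failed_commits(log_text):
    """Count 'Failed to commit logs' occurrences per (space, part).

    findall() scans the whole text, so entries fused onto a single
    line are still counted separately.
    """
    return Counter(
        (int(space), int(part))
        for _port, space, part in FAILED_COMMIT.findall(log_text)
    )
```

Passing the contents of `storaged-stderr.log` to `tally_failed_commits` returns a `Counter` keyed by `(space, part)`, which makes it easier to report whether the failures concentrate on a few parts.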

nebula-storaged.INFO

I20221104 11:51:12.150789 18255 NebulaStore.cpp:430] [Space: 120, Part: 34] has existed!

I20221104 11:51:12.150794 18255 NebulaStore.cpp:430] [Space: 120, Part: 37] has existed!

I20221104 11:51:12.150804 18255 NebulaStore.cpp:430] [Space: 120, Part: 39] has existed!

I20221104 11:51:12.150810 18255 NebulaStore.cpp:430] [Space: 120, Part: 42] has existed!

I20221104 11:51:12.150815 18255 NebulaStore.cpp:430] [Space: 120, Part: 44] has existed!

I20221104 11:51:12.150818 18255 NebulaStore.cpp:430] [Space: 120, Part: 47] has existed!

I20221104 11:51:12.150823 18255 NebulaStore.cpp:430] [Space: 120, Part: 49] has existed!

I20221104 11:51:12.150827 18255 NebulaStore.cpp:430] [Space: 120, Part: 52] has existed!

I20221104 11:51:12.150832 18255 NebulaStore.cpp:430] [Space: 120, Part: 54] has existed!

I20221104 11:51:12.150836 18255 NebulaStore.cpp:430] [Space: 120, Part: 57] has existed!

I20221104 11:51:12.150840 18255 NebulaStore.cpp:430] [Space: 120, Part: 59] has existed!

I20221104 11:51:12.150859 18255 NebulaStore.cpp:78] Register handler...

I20221104 11:51:12.150868 18255 StorageServer.cpp:228] Init LogMonitor

I20221104 11:51:12.172487 18255 StorageServer.cpp:96] Starting Storage HTTP Service

I20221104 11:51:12.174451 18255 StorageServer.cpp:100] Http Thread Pool started

I20221104 11:51:12.194942 22044 WebService.cpp:124] Web service started on HTTP[19779]

I20221104 11:51:12.194989 18255 TransactionManager.cpp:24] TransactionManager ctor()

I20221104 11:51:17.803148 18322 MetaClient.cpp:3094] Load leader of "172.17.126.202":9779 in 1 space

I20221104 11:51:17.803186 18322 MetaClient.cpp:3094] Load leader of "172.17.126.203":9779 in 1 space

I20221104 11:51:17.803193 18322 MetaClient.cpp:3094] Load leader of "172.17.126.204":9779 in 1 space

I20221104 11:51:17.803201 18322 MetaClient.cpp:3094] Load leader of "172.17.126.205":9779 in 1 space

I20221104 11:51:17.803210 18322 MetaClient.cpp:3094] Load leader of "172.17.126.206":9779 in 1 space

I20221104 11:51:17.803215 18322 MetaClient.cpp:3100] Load leader ok

I20221104 11:51:27.813603 18322 MetaClient.cpp:3094] Load leader of "172.17.126.202":9779 in 1 space

I20221104 11:51:27.813668 18322 MetaClient.cpp:3094] Load leader of "172.17.126.203":9779 in 1 space

I20221104 11:51:27.813681 18322 MetaClient.cpp:3094] Load leader of "172.17.126.204":9779 in 1 space

I20221104 11:51:27.813694 18322 MetaClient.cpp:3094] Load leader of "172.17.126.205":9779 in 1 space

I20221104 11:51:27.813707 18322 MetaClient.cpp:3094] Load leader of "172.17.126.206":9779 in 1 space

I20221104 11:51:27.813715 18322 MetaClient.cpp:3100] Load leader ok

I20221104 11:51:48.197736 18322 MetaClient.cpp:3094] Load leader of "172.17.126.202":9779 in 1 space

I20221104 11:51:48.198194 18322 MetaClient.cpp:3094] Load leader of "172.17.126.203":9779 in 1 space

I20221104 11:51:48.198215 18322 MetaClient.cpp:3094] Load leader of "172.17.126.204":9779 in 1 space

I20221104 11:51:48.198225 18322 MetaClient.cpp:3094] Load leader of "172.17.126.205":9779 in 1 space

I20221104 11:51:48.198231 18322 MetaClient.cpp:3094] Load leader of "172.17.126.206":9779 in 1 space

I20221104 11:51:48.198236 18322 MetaClient.cpp:3100] Load leader ok

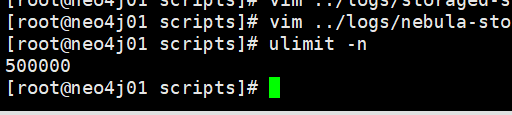

I tried raising the ulimit setting to 500000, but the service still does not start normally.
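A limit changed with `ulimit` in a shell applies only to processes started from that shell, so it may be worth confirming that the running storaged process actually picked up the new value. The sketch below is Linux-only and reads the standard `/proc/<pid>/limits` and `/proc/<pid>/fd` entries. The helper names are hypothetical, and the PID has to be supplied by whoever runs it.

```python
import os


def parse_max_open_files(limits_text):
    """Return (soft, hard) from the 'Max open files' row of /proc/<pid>/limits.

    None stands for 'unlimited'.
    """
    def to_int(value):
        return None if value == "unlimited" else int(value)

    for line in limits_text.splitlines():
        if line.startswith("Max open files"):
            soft, hard = line[len("Max open files"):].split()[:2]
            return to_int(soft), to_int(hard)
    raise ValueError("no 'Max open files' row found")


def describe_fd_usage(pid):
    """Compare the live descriptor count of `pid` with its limits (Linux only)."""
    with open(f"/proc/{pid}/limits") as f:
        soft, hard = parse_max_open_files(f.read())
    # /proc/<pid>/fd holds one entry per open file descriptor.
    in_use = len(os.listdir(f"/proc/{pid}/fd"))
    return {"soft": soft, "hard": hard, "in_use": in_use}
```

Calling `describe_fd_usage(pid)` with the PID of the storaged process (as root or as the user that owns the process) shows the limit that is really in effect next to the number of descriptors currently open. If `soft` is still a small value rather than 500000, the new setting did not reach the service.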