Nebula version: 3.2.1

Studio version: 3.2.3

Deployment: distributed

Installation method: RPM

Production environment: Y

Hardware

Disk: SSD

CPU and memory: 4 cores / 8 GB per node

Problem description

My project pulls in the following version of nebula spark connector:

```xml
<dependency>
    <groupId>com.vesoft</groupId>
    <artifactId>nebula-spark-connector</artifactId>
    <version>3.0.0</version>
</dependency>
```

Then I built my project (Maven-based) with:

```shell
mvn clean package -U -Dmaven.test.skip=true
```

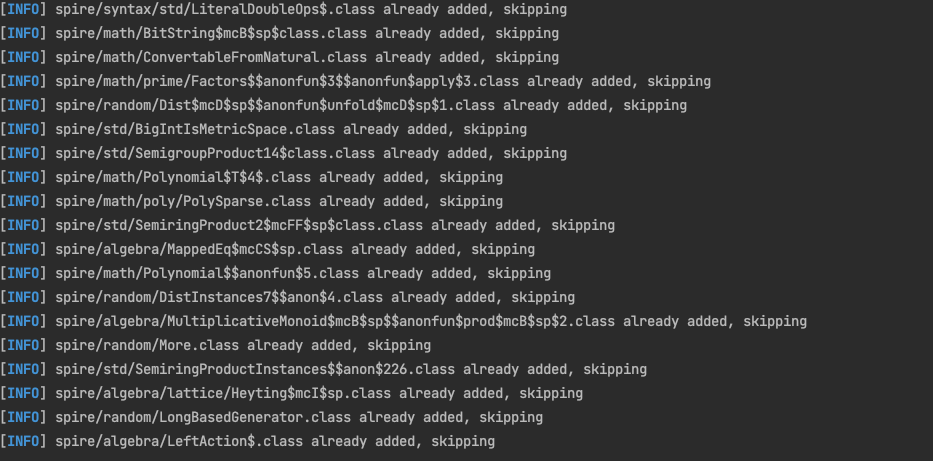

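Before I added the nebula spark connector dependency, the build printed none of the following log lines.

After a quick look at the package structure inside the nebula spark connector jar, my guess is that all of these log lines come from the nebula spark connector jar.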

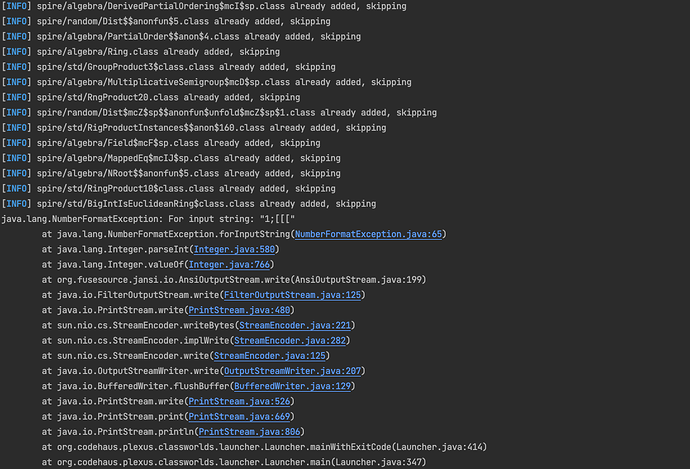

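At the end of the build log, the error shown in the screenshot appears, and building the jar fails.

My questions: why does adding the nebula spark connector dependency produce these log lines? Can their output be turned off? And how do I fix the build failure?

Thanks in advance!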

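- Whether these log lines are printed depends on the plugin configuration in your pom. I compiled and packaged the example module of the spark-connector project (with the shade plugin removed from its pom) and got none of these log lines. The packaging command and build log are below; you could also post your pom.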

  ```text
  nicole@nicoledeMacBook-Pro ~/workspace/nebula/spark-utils/nebula-spark-connector/example v3.0.0 ± mvn clean package -Dgpg.skip -Dmaven.javadoc.skip=true -Dmaven.test.skip=true
  [INFO] Scanning for projects...
  [INFO] Inspecting build with total of 1 modules...
  [INFO] Not installing Nexus Staging features:
  [INFO] * Preexisting staging related goal bindings found in 1 modules.
  [INFO]
  [INFO] -------------------------< com.vesoft:example >-------------------------
  [INFO] Building example 3.0.0
  [INFO] --------------------------------[ jar ]---------------------------------
  [INFO]
  [INFO] --- maven-clean-plugin:2.5:clean (default-clean) @ example ---
  [INFO] Deleting /Users/nicole/workspace/nebula/spark-utils/nebula-spark-connector/example/target
  [INFO]
  [INFO] --- maven-resources-plugin:2.6:resources (default-resources) @ example ---
  [INFO] Using 'UTF-8' encoding to copy filtered resources.
  [INFO] Copying 10 resources
  [INFO]
  [INFO] --- maven-compiler-plugin:3.8.1:compile (default-compile) @ example ---
  [INFO] No sources to compile
  [INFO]
  [INFO] --- maven-scala-plugin:2.15.2:compile (scala-compile) @ example ---
  [INFO] Checking for multiple versions of scala
  [WARNING] Expected all dependencies to require Scala version: 2.11.12
  [WARNING] com.twitter:chill_2.11:0.9.3 requires scala version: 2.11.12
  [WARNING] org.apache.spark:spark-core_2.11:2.4.4 requires scala version: 2.11.12
  [WARNING] org.json4s:json4s-jackson_2.11:3.5.3 requires scala version: 2.11.11
  [WARNING] Multiple versions of scala libraries detected!
  [INFO] includes = [**/*.java,**/*.scala,]
  [INFO] excludes = [META-INF/*.DSA,com/vesoft/tools/**,META-INF/*.SF,META-INF/*.RSA,]
  [INFO] /Users/nicole/workspace/nebula/spark-utils/nebula-spark-connector/example/src/main/scala:-1: info: compiling
  [INFO] Compiling 2 source files to /Users/nicole/workspace/nebula/spark-utils/nebula-spark-connector/example/target/classes at 1677487638434
  [INFO] prepare-compile in 0 s
  [INFO] compile in 4 s
  [INFO]
  [INFO] --- maven-resources-plugin:2.6:testResources (default-testResources) @ example ---
  [INFO] Not copying test resources
  [INFO]
  [INFO] --- maven-compiler-plugin:3.8.1:testCompile (default-testCompile) @ example ---
  [INFO] Not compiling test sources
  [INFO]
  [INFO] --- maven-scala-plugin:2.15.2:testCompile (scala-test-compile) @ example ---
  [INFO]
  [INFO] --- maven-surefire-plugin:2.12.4:test (default-test) @ example ---
  [INFO] Tests are skipped.
  [INFO]
  [INFO] --- maven-jar-plugin:3.2.0:jar (default-jar) @ example ---
  [INFO] Building jar: /Users/nicole/workspace/nebula/spark-utils/nebula-spark-connector/example/target/example-3.0.0.jar
  [INFO]
  [INFO] --- maven-jar-plugin:3.2.0:test-jar (default) @ example ---
  [INFO] Skipping packaging of the test-jar
  [INFO] ------------------------------------------------------------------------
  [INFO] BUILD SUCCESS
  [INFO] ------------------------------------------------------------------------
  [INFO] Total time: 8.225 s
  [INFO] Finished at: 2023-02-27T16:47:22+08:00
  [INFO] ------------------------------------------------------------------------
  ```

- You can run `mvn dependency:tree` to see where the algebra package, which is what prints the spire-related log lines, gets pulled into your build.

- For your NumberFormatException, there are related posts online; see the Stack Overflow question "java - Maven NumberFormatException when packaging".
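One way to run the `mvn dependency:tree` check is sketched below. It assumes the relevant artifacts have artifactIds starting with `algebra` or `spire`; the thread does not give their exact groupIds or versions, so the patterns use wildcards and are an assumption. The `includes` filter of the maven-dependency-plugin `tree` goal prints only the paths from your project down to the matching artifacts, which shows which direct dependency brings them in.

```shell
# Run from the directory that contains your project's pom.xml.
# The patterns below are assumptions: they match any groupId and any
# artifactId beginning with "algebra" or "spire" (e.g. Scala-suffixed names).
# Single quotes stop the shell from expanding the * wildcards itself.
mvn dependency:tree -Dincludes='*:algebra*,*:spire*'
```

If the printed path runs through `com.vesoft:nebula-spark-connector`, that confirms the guess in the question about where these log lines originate.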
