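- nebula version: 3.4.1

- Deployment: distributed

- Installation method: RPM

- In production: N

- Problem description

I deployed the full-text index following the official procedure without any errors, but LOOKUP queries always return an empty result. Could someone help me pinpoint where the problem is?

Did you rebuild the index after creating it?

The rebuild job failed with the following error: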

+----------------+--------------------------+------------+----------------------------+----------------------------+------------------+

| Job Id(TaskId) | Command(Dest) | Status | Start Time | Stop Time | Error Code |

+----------------+--------------------------+------------+----------------------------+----------------------------+------------------+

| 141 | "REBUILD_FULLTEXT_INDEX" | "FAILED" | 2023-03-13T02:39:25.000000 | 2023-03-13T02:39:25.000000 | "E_INVALID_HOST" |

| "Total:0" | "Succeeded:0" | "Failed:0" | "In Progress:0" | "" | "" |

+----------------+--------------------------+------------+----------------------------+----------------------------+------------------+

However, both the ES client and the listener are configured:

es:

+-----------------+----------------+------+

| Type | Host | Port |

+-----------------+----------------+------+

| "ELASTICSEARCH" | "192.168.1.35" | 9200 |

+-----------------+----------------+------+

listener:

+--------+-----------------+-----------------------+-----------+

| PartId | Type | Host | Status |

+--------+-----------------+-----------------------+-----------+

| 1 | "ELASTICSEARCH" | ""192.168.1.35":9789" | "OFFLINE" |

| 2 | "ELASTICSEARCH" | ""192.168.1.35":9789" | "OFFLINE" |

| 3 | "ELASTICSEARCH" | ""192.168.1.35":9789" | "OFFLINE" |

+--------+-----------------+-----------------------+-----------+

How can I troubleshoot this further?

Your listener nodes are all offline.

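I see it now, that was careless of me. It turns out my listener had gone down. Restarting it reports no error, but it still does not come up. The error log nebula-storaged.ERROR → nebula-storaged.node10.root.log.ERROR.20230315-202148.187325 contains the following: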

Log file created at: 2023/03/15 20:21:48

Running on machine: node10

Running duration (h:mm:ss): 0:00:00

Log line format: [IWEF]yyyymmdd hh:mm:ss.uuuuuu threadid file:line] msg

E20230315 20:21:48.294158 187325 StorageDaemon.cpp:167] Invalid timezone file `share/resources/date_time_zonespec.csv', exception: `Unable to locate or access the required datafile. Filespec: share/resources/date_time_zonespec.csv'.

nebula-storaged.INFO → nebula-storaged.node10.root.log.INFO.20230315-202148.187325 contains the following:

Log file created at: 2023/03/15 20:21:48

Running on machine: node10

Running duration (h:mm:ss): 0:00:00

Log line format: [IWEF]yyyymmdd hh:mm:ss.uuuuuu threadid file:line] msg

I20230315 20:21:48.293408 187325 StorageDaemon.cpp:132] localhost = "192.168.1.129":9789

I20230315 20:21:48.293905 187325 StorageDaemon.cpp:147] data path= /usr/local/nebula/bin/data

E20230315 20:21:48.294158 187325 StorageDaemon.cpp:167] Invalid timezone file `share/resources/date_time_zonespec.csv', exception: `Unable to locate or access the required datafile. Filespec: share/resources/date_time_zonespec.csv'.

The listener configuration file is as follows:

########## nebula-storaged-listener ###########

########## basics ##########

# Whether to run as a daemon process

--daemonize=true

# The file to host the process id

--pid_file=pids/nebula-storaged-listener.pid

# Whether to use the configuration obtained from the configuration file

--local_config=true

########## logging ##########

# The directory to host logging files

--log_dir=logs_storaged_listener

# Log level, 0, 1, 2, 3 for INFO, WARNING, ERROR, FATAL respectively

--minloglevel=0

# Verbose log level, 1, 2, 3, 4, the higher of the level, the more verbose of the logging

--v=0

# Maximum seconds to buffer the log messages

--logbufsecs=0

# Whether to redirect stdout and stderr to separate output files

--redirect_stdout=true

# Destination filename of stdout and stderr, which will also reside in log_dir.

--stdout_log_file=storaged-listener-stdout.log

--stderr_log_file=storaged-listener-stderr.log

# Copy log messages at or above this level to stderr in addition to logfiles. The numbers of severity levels INFO, WARNING, ERROR, and FATAL are 0, 1, 2, and 3, respectively.

--stderrthreshold=3

# Whether log file names contain a timestamp.

--timestamp_in_logfile_name=true

########## networking ##########

# Meta server address

--meta_server_addrs=192.168.1.35:9559,192.168.1.36:9559,192.168.1.37:9559

# Local ip

--local_ip=192.168.1.129

# Storage daemon listening port

--port=9789

--ws_ip=0.0.0.0

# HTTP service port

--ws_http_port=19789

# heartbeat with meta service

--heartbeat_interval_secs=10

########## storage ##########

# Listener wal directory. only one path is allowed.

--listener_path=data/listener

# This parameter can be ignored for compatibility. Let's fill in a default value of "data"

--data_path=data

# The type of part manager, [memory | meta]

--part_man_type=memory

# The default reserved bytes for one batch operation

--rocksdb_batch_size=4096

# The default block cache size used in BlockBasedTable.

# The unit is MB.

--rocksdb_block_cache=4

# The type of storage engine, `rocksdb', `memory', etc.

--engine_type=rocksdb

# The type of part, `simple', `consensus'...

--part_type=simple

Could you help take a look at this latest problem? Thanks.

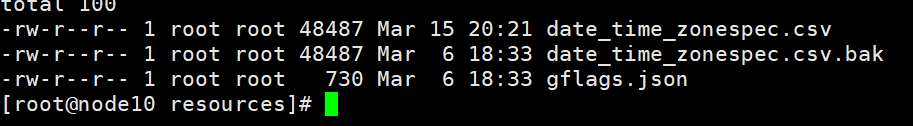

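Your share/resources/date_time_zonespec.csv is missing. Can you find it in the installation package?

Are the ownership and permissions all normal?

Does this file need any permissions granted? The tutorial does not mention anything about permissions.

You can compare the permissions against a cleanly installed environment.

Having the same permissions as the JSON file in the same directory is enough.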

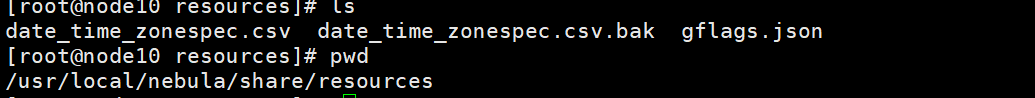

ll /usr/local/nebula/share/resources/

total 60

drwxr-xr-x 2 root root 4096 Mar 15 11:37 ./

drwxr-xr-x 3 root root 4096 Mar 15 11:37 ../

-rw-r--r-- 1 root root 48487 Jun 10 2022 date_time_zonespec.csv

-rw-r--r-- 1 root root 730 Oct 19 10:26 gflags.json
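
The listing above shows both files with the same mode and owner. For anyone who wants to script that comparison, here is a minimal Python sketch. The helper name `same_permissions` is hypothetical and not part of NebulaGraph; it only compares mode bits, owner, and group, and does not prove that the service user can actually read the file.

```python
import os
import stat


def same_permissions(path_a: str, path_b: str) -> bool:
    """Hypothetical helper: True if both files share mode bits, owner, and group."""
    a, b = os.stat(path_a), os.stat(path_b)
    return (stat.S_IMODE(a.st_mode), a.st_uid, a.st_gid) == (
        stat.S_IMODE(b.st_mode), b.st_uid, b.st_gid)
```

It could be called with the two paths from the listing, for example `/usr/local/nebula/share/resources/date_time_zonespec.csv` and `/usr/local/nebula/share/resources/gflags.json`.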

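I suspect the relative path is wrong.

You can use the timezone_file option in the configuration file to set the path as an absolute path.

Problem solved. Starting the listener with the startup script in the scripts directory works fine; starting it with the parameters from the tutorial does not work, and I am not sure why yet.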

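Most likely it is a relative-path problem. When you start the process from the command line, relative paths resolve against your current working directory, which may differ from the working directory used when starting via scripts.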
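
To illustrate, here is a minimal Python sketch of how the same relative path lands in different places depending on the working directory. The two working directories are assumptions read off the thread: the INFO log prints the data path as /usr/local/nebula/bin/data for `--data_path=data`, which suggests the failing process was started from /usr/local/nebula/bin, and the install root /usr/local/nebula comes from the directory listing. The helper `resolve` is hypothetical.

```python
import posixpath

# Relative path shown in the error log.
TIMEZONE_FILE = "share/resources/date_time_zonespec.csv"


def resolve(relative_path: str, working_dir: str) -> str:
    """Hypothetical helper: resolve a relative path against a working directory."""
    return posixpath.normpath(posixpath.join(working_dir, relative_path))


# Assumed working directories (see the note above).
from_install_root = resolve(TIMEZONE_FILE, "/usr/local/nebula")
from_bin_dir = resolve(TIMEZONE_FILE, "/usr/local/nebula/bin")

print(from_install_root)
print(from_bin_dir)
```

Only the first result matches the file shown in the listing. Under these assumptions, setting `--timezone_file=/usr/local/nebula/share/resources/date_time_zonespec.csv` in the listener configuration, as suggested earlier in the thread, should make startup independent of the working directory.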