还是没有正常跑起来,后来删了空间,重新导入数据了![]()

不是吧, 你之前贴的日志就是已经开始compaction了啊, 怎么看出来没跑的?

貌似社区版没啥办法了, 因为之前社区版放出来的有丢数据风险, 后来关掉了, 后面可能会再做.

就一直卡在第一句话那里, 后面log 也没数据,磁盘IO 也没拉起来,硬盘df -h 也没减少。

CompactionFilter.h:82] Do full/manual compaction!

能否帮忙看看这是不是一个bug,现在在考虑是否升级?

compact job 一直fail, 其中节点2, 看到listen log too many file error, 调整ulimit -n 由65535 → 100000 之后还是failed,

现在重新又跑了一次,但是看log 节点2没有触发compact job, 其他都正常触发再跑。

(root@nebula) [SupplyChain]> show jobs

+--------+------------------+------------+----------------------------+----------------------------+

| Job Id | Command | Status | Start Time | Stop Time |

+--------+------------------+------------+----------------------------+----------------------------+

| 40 | "LEADER_BALANCE" | "FINISHED" | 2023-03-27T11:55:05.000000 | 2023-03-27T11:55:10.000000 |

| 39 | "COMPACT" | "FAILED" | 2023-03-27T08:59:19.000000 | 2023-03-27T11:01:37.000000 |

| 38 | "LEADER_BALANCE" | "FINISHED" | 2023-03-27T08:51:57.000000 | 2023-03-27T08:52:22.000000 |

| 37 | "LEADER_BALANCE" | "FINISHED" | 2023-03-27T07:48:44.000000 | 2023-03-27T07:49:14.000000 |

| 36 | "COMPACT" | "FAILED" | 2023-03-27T03:57:44.000000 | 2023-03-27T07:48:44.000000 |

| 35 | "LEADER_BALANCE" | "FINISHED" | 2023-03-27T03:57:11.000000 | 2023-03-27T03:57:36.000000 |

| 34 | "LEADER_BALANCE" | "FINISHED" | 2023-03-27T03:54:22.000000 | 2023-03-27T03:54:57.000000 |

| 33 | "COMPACT" | "FAILED" | 2023-03-26T23:31:13.000000 | 2023-03-27T03:21:19.000000 |

| 32 | "COMPACT" | "FAILED" | 2023-03-26T12:05:04.000000 | 2023-03-26T23:01:05.000000 |

| 31 | "COMPACT" | "FINISHED" | 2023-03-25T02:01:00.000000 | 2023-03-25T08:59:08.000000 |

| 30 | "LEADER_BALANCE" | "FINISHED" | 2023-03-24T14:10:34.000000 | 2023-03-24T14:10:39.000000 |

+--------+------------------+------------+----------------------------+----------------------------+

Got 11 rows (time spent 31.208ms/32.240561ms)

nebula-storaged.nebula3-02.root.log.INFO.20230327-150637.1948 (28.6 KB)

nebula-storaged.nebula3-02.root.log.INFO.20230327-173238.2510 (24.2 KB)

nebula-graphd.nebula3-02.root.log.INFO.20230327-150637.1896 (8.9 KB)

节点二storage 内存太高好像被系统kill了,现在storage down 了。

看了下dmesg, executor-pri3-2 这个被kill 了,翻了下 之前有过类似的的schedule 问题 2.0版本一个奇怪操作引发coredump - #18,来自 sworduo

[29296.431825] [ pid ] uid tgid total_vm rss nr_ptes swapents oom_score_adj name

[29296.433521] [ 462] 0 462 9765 1129 23 0 0 systemd-journal

[29296.435560] [ 494] 0 494 11421 209 24 0 -1000 systemd-udevd

[29296.437315] [ 614] 0 614 13883 101 27 0 -1000 auditd

[29296.439233] [ 641] 0 641 11042 88 27 0 0 qemu-ga

[29296.441001] [ 642] 81 642 14559 165 34 0 -900 dbus-daemon

[29296.442927] [ 643] 0 643 1097 35 8 0 0 acpid

[29296.444567] [ 644] 0 644 6596 76 18 0 0 systemd-logind

[29296.446312] [ 645] 999 645 153058 1860 61 0 0 polkitd

[29296.448144] [ 647] 0 647 5404 77 16 0 0 irqbalance

[29296.450325] [ 653] 998 653 29452 115 30 0 0 chronyd

[29296.452199] [ 729] 0 729 137545 1032 86 0 0 NetworkManager

[29296.454267] [ 970] 0 970 181836 3250 25 0 0 node_exporter

[29296.456456] [ 971] 0 971 143569 3325 97 0 0 tuned

[29296.458253] [ 1214] 0 1214 22427 259 42 0 0 master

[29296.460040] [ 1240] 89 1240 22470 250 43 0 0 qmgr

[29296.461720] [ 1309] 0 1309 56680 486 46 0 0 rsyslogd

[29296.463367] [ 1314] 0 1314 285265 4237 42 0 0 elkeid-agent

[29296.465180] [ 1358] 0 1358 31596 156 19 0 0 crond

[29296.466960] [ 1425] 0 1425 28225 256 57 0 -1000 sshd

[29296.468582] [ 1426] 0 1426 27551 33 11 0 0 agetty

[29296.470228] [ 1428] 0 1428 27551 34 10 0 0 agetty

[29296.472064] [ 1671] 0 1671 25726 514 51 0 0 dhclient

[29296.474004] [ 1886] 0 1886 271750 71372 296 0 0 nebula-metad

[29296.475740] [ 1896] 0 1896 156429 71996 206 0 0 nebula-graphd

[29296.477556] [ 2399] 0 2399 40377 344 81 0 0 sshd

[29296.479332] [ 2401] 0 2401 28886 108 14 0 0 bash

[29296.480899] [ 2543] 0 2510 45081322 31527927 79735 0 0 executor-pri3-2

[29296.482541] [ 2945] 0 2945 40412 358 82 0 0 sshd

[29296.484046] [ 2947] 0 2947 28886 107 14 0 0 bash

[29296.485518] [ 2993] 0 2993 18063 188 40 0 0 sftp-server

[29296.487146] [ 3022] 89 3022 22453 249 43 0 0 pickup

[29296.488840] Out of memory: Kill process 2543 (executor-pri3-2) score 930 or sacrifice child

[29296.490471] Killed process 2543 (executor-pri3-2), UID 0, total-vm:180325288kB, anon-rss:126109248kB, file-rss:2460kB, shmem-rss:0kB

贴下完整日志, 没见过这个错诶. 你那环境之前是没有开自动compaction吧?

对没有开启,因为之前测试用nebula-spark 开启auto_comapct 速度很慢,就关了,导完数据之后,进行手动compact。现在这个space 的边比较多

listen log 这个日志内容比较少。最近的crash 和这个没有关系了

Could not create logging file: Too many open files

COULD NOT CREATE A LOGGINGFILE 20230327-171239.1948!F20230327 17:12:39.974443 2030 RaftPart.cpp:1092] [Port: 9780, Space: 29, Part: 36] Failed to commit logs

*** Check failure stack trace: ***

ExceptionHandler::GenerateDump sys_pipe failed:Too many open files

ExceptionHandler::SendContinueSignalToChild sys_write failed:ExceptionHandler::WaitForContinueSignal sys_read failed:Bad file descriptor

Bad file descriptor

*** Aborted at 1679908360 (Unix time, try 'date -d @1679908360') ***

*** Signal 6 (SIGABRT) (0x79c) received by PID 1948 (pthread TID 0x7fb2af2fe700) (linux TID 2030) (maybe from PID 1948, UID 0) (code: -6), stack trace: ***

(error retrieving stack trace)

~

~

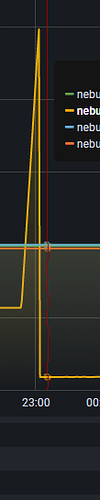

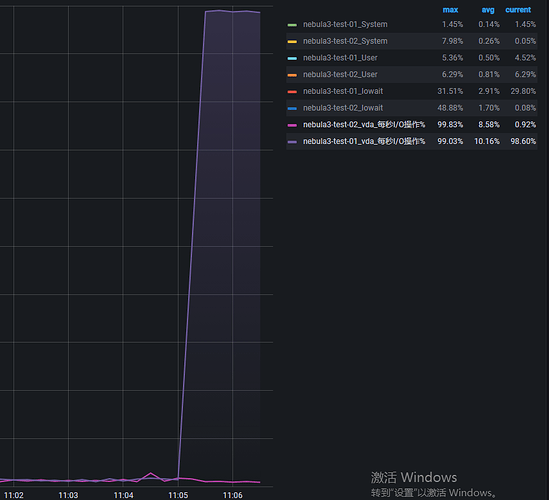

这是上午又一次compact 的日志。这个节点还是crash 了。 看内存曲线 还是内存100% 之后crash 了。

nebula-graphd.nebula3-02.root.log.INFO.20230327-150637.1896 (15.2 KB)

nebula-graphd.nebula3-02.root.log.WARNING.20230327-230137.1896 (502 字节)

nebula-storaged.nebula3-02.root.log.INFO.20230328-100531.3427 (24.8 KB)

nebula-storaged.nebula3-02.root.log.WARNING.20230328-103813.3427 (695 字节)

nebula-storaged.nebula3-02.root.log.ERROR.20230328-103813.3427 (375 字节)

请问有什么思路吗 这个crash 问题

句柄太少了, ulmit设置下

把compaction线程数调少点

ulimit -n 设为130000了,我把 subcompaction 由 8 调成4 试试

--rocksdb_db_options={"max_subcompactions":"4","max_background_jobs":"4"}

设置之后重启,原先的compact failed。重新 compact 任务,还是没有跑起来

比如一号节点,IO 和log 是这样的

I20230403 11:08:19.130861 27479 EventListener.h:35] Rocksdb compaction completed column family: default because of ManualCompaction, status: OK, compacted 13 files into 13, base level is 1, output level is 1

I20230403 11:08:19.261539 27480 EventListener.h:21] Rocksdb start compaction column family: default because of ManualCompaction, status: OK, compacted 13 files into 0, base level is 1, output level is 1

I20230403 11:08:19.261695 31659 CompactionFilter.h:82] Do full/manual compaction!

I20230403 11:08:19.261770 31660 CompactionFilter.h:82] Do full/manual compaction!

I20230403 11:08:19.261727 31658 CompactionFilter.h:82] Do full/manual compaction!

I20230403 11:08:19.261780 31661 CompactionFilter.h:82] Do full/manual compaction!

I20230403 11:08:19.261999 31662 CompactionFilter.h:82] Do full/manual compaction!

I20230403 11:08:19.262145 31663 CompactionFilter.h:82] Do full/manual compaction!

I20230403 11:08:19.262279 31664 CompactionFilter.h:82] Do full/manual compaction!

I20230403 11:08:19.262403 31665 CompactionFilter.h:82] Do full/manual compaction!

I20230403 11:08:19.262455 27480 CompactionFilter.h:82] Do full/manual compaction!

I20230403 11:08:19.262497 31666 CompactionFilter.h:82] Do full/manual compaction!

I20230403 11:08:37.096343 27480 EventListener.h:35] Rocksdb compaction completed column family: default because of ManualCompaction, status: OK, compacted 13 files into 13, base level is 1, output level is 1

I20230403 11:08:37.225275 27482 EventListener.h:21] Rocksdb start compaction column family: default because of ManualCompaction, status: OK, compacted 13 files into 0, base level is 1, output level is 1

I20230403 11:08:37.225440 31692 CompactionFilter.h:82] Do full/manual compaction!

I20230403 11:08:37.225473 31694 CompactionFilter.h:82] Do full/manual compaction!

I20230403 11:08:37.225489 31695 CompactionFilter.h:82] Do full/manual compaction!

I20230403 11:08:37.225448 31693 CompactionFilter.h:82] Do full/manual compaction!

I20230403 11:08:37.225710 31696 CompactionFilter.h:82] Do full/manual compaction!

I20230403 11:08:37.225968 31697 CompactionFilter.h:82] Do full/manual compaction!

I20230403 11:08:37.226039 31698 CompactionFilter.h:82] Do full/manual compaction!

I20230403 11:08:37.226140 27482 CompactionFilter.h:82] Do full/manual compaction!

I20230403 11:08:37.226152 31699 CompactionFilter.h:82] Do full/manual compaction!

二号节点 IO 没拉起来, 日志还是下面这种, 跟之前一样,等到job 然后过段时间 storaged 就crash 了

I20230403 10:52:38.517237 14512 NebulaStore.cpp:79] Register handler...

I20230403 10:52:38.517266 14512 StorageServer.cpp:253] Init LogMonitor

I20230403 10:52:38.517587 14512 StorageServer.cpp:120] Starting Storage HTTP Service

I20230403 10:52:38.519131 14512 StorageServer.cpp:124] Http Thread Pool started

I20230403 10:52:38.524035 14944 WebService.cpp:124] Web service started on HTTP[19779]

I20230403 10:52:38.524145 14512 RocksEngineConfig.cpp:371] Emplace rocksdb option max_background_jobs=4

I20230403 10:52:38.524164 14512 RocksEngineConfig.cpp:371] Emplace rocksdb option max_subcompactions=4

I20230403 10:52:38.524308 14512 RocksEngineConfig.cpp:371] Emplace rocksdb option max_bytes_for_level_base=268435456

I20230403 10:52:38.524323 14512 RocksEngineConfig.cpp:371] Emplace rocksdb option max_write_buffer_number=4

I20230403 10:52:38.524331 14512 RocksEngineConfig.cpp:371] Emplace rocksdb option write_buffer_size=67108864

I20230403 10:52:38.524339 14512 RocksEngineConfig.cpp:371] Emplace rocksdb option disable_auto_compactions=true

I20230403 10:52:38.524479 14512 RocksEngineConfig.cpp:371] Emplace rocksdb option block_size=8192

I20230403 10:52:38.626150 14512 RocksEngine.cpp:107] open rocksdb on /usr/local/nebula/data/storage/nebula/0/data

I20230403 10:52:38.626317 14512 AdminTaskManager.cpp:22] max concurrent subtasks: 10

I20230403 10:52:38.627151 14512 AdminTaskManager.cpp:40] exit AdminTaskManager::init()

I20230403 10:52:38.627336 14965 AdminTaskManager.cpp:224] waiting for incoming task

I20230403 10:52:38.627573 14966 AdminTaskManager.cpp:92] reportTaskFinish(), job=45, task=0, rc=E_TASK_EXECUTION_FAILED

I20230403 10:52:38.641672 14512 MemoryUtils.cpp:171] MemoryTracker set static ratio: 0.4

I20230403 10:52:38.682651 14966 AdminTaskManager.cpp:134] reportTaskFinish(), job=45, task=0, rc=SUCCEEDED

I20230403 10:52:49.496403 14575 MetaClient.cpp:3259] Load leader of "172.18.163.85":9779 in 3 space

I20230403 10:52:49.496490 14575 MetaClient.cpp:3259] Load leader of "172.18.163.114":9779 in 3 space

I20230403 10:52:49.496502 14575 MetaClient.cpp:3259] Load leader of "172.18.163.115":9779 in 0 space

I20230403 10:52:49.496527 14575 MetaClient.cpp:3259] Load leader of "172.18.163.124":9779 in 3 space

I20230403 10:52:49.496536 14575 MetaClient.cpp:3265] Load leader ok

E20230403 11:04:38.536161 14548 Serializer.h:43] Thrift serialization is only defined for structs and unions, not containers thereof. Attemping to deserialize a value of type `nebula::HostAddr`.

I20230403 11:04:52.175076 14575 MetaClient.cpp:3259] Load leader of "172.18.163.85":9779 in 3 space

I20230403 11:04:52.175132 14575 MetaClient.cpp:3259] Load leader of "172.18.163.114":9779 in 3 space

I20230403 11:04:52.175161 14575 MetaClient.cpp:3259] Load leader of "172.18.163.115":9779 in 3 space

I20230403 11:04:52.175199 14575 MetaClient.cpp:3259] Load leader of "172.18.163.124":9779 in 3 space

I20230403 11:04:52.175212 14575 MetaClient.cpp:3265] Load leader ok

I20230403 11:05:01.575136 14542 AdminTask.cpp:21] createAdminTask (47, 0)

I20230403 11:05:01.575318 14542 AdminTaskManager.cpp:155] enqueue task(47, 0)

I20230403 11:05:01.575482 14965 AdminTaskManager.cpp:236] dequeue task(47, 0)

I20230403 11:05:01.575554 14965 AdminTaskManager.cpp:279] run task(47, 0), 1 subtasks in 1 thread

I20230403 11:05:01.576241 14965 AdminTaskManager.cpp:224] waiting for incoming task

试了几次 storaged 还是crash 了

20230403 17:13:20.035351 17080 EventListener.h:21] Rocksdb start compaction column family: default because of ManualCompaction, status: OK, compacted 66311 files into 0, base level is 0, output level is 1

I20230403 17:13:20.065162 18972 CompactionFilter.h:82] Do full/manual compaction!

I20230403 17:13:20.065271 18973 CompactionFilter.h:82] Do full/manual compaction!

I20230403 17:13:20.065281 17080 CompactionFilter.h:82] Do full/manual compaction!

I20230403 17:13:20.065320 18974 CompactionFilter.h:82] Do full/manual compaction!

I20230403 17:14:18.985951 17324 MemoryUtils.cpp:227] sys:34.712GiB/125.754GiB 27.60% usr:28.763GiB/50.282GiB 57.20%

I20230403 17:15:18.986356 17324 MemoryUtils.cpp:227] sys:42.229GiB/125.754GiB 33.58% usr:36.218GiB/50.282GiB 72.03%

I20230403 17:16:19.985954 17324 MemoryUtils.cpp:227] sys:49.830GiB/125.754GiB 39.62% usr:43.767GiB/50.282GiB 87.04%

I20230403 17:17:19.986378 17324 MemoryUtils.cpp:227] sys:57.357GiB/125.754GiB 45.61% usr:51.232GiB/50.282GiB 101.89%

I20230403 17:18:19.985491 17324 MemoryUtils.cpp:227] sys:64.789GiB/125.754GiB 51.52% usr:58.604GiB/50.282GiB 116.55%

I20230403 17:19:19.986600 17324 MemoryUtils.cpp:227] sys:72.368GiB/125.754GiB 57.55% usr:66.142GiB/50.282GiB 131.54%

I20230403 17:20:19.985999 17324 MemoryUtils.cpp:227] sys:79.704GiB/125.754GiB 63.38% usr:73.421GiB/50.282GiB 146.02%

I20230403 17:21:19.986316 17324 MemoryUtils.cpp:227] sys:87.090GiB/125.754GiB 69.25% usr:80.751GiB/50.282GiB 160.60%

I20230403 17:22:19.985656 17324 MemoryUtils.cpp:227] sys:94.456GiB/125.754GiB 75.11% usr:88.057GiB/50.282GiB 175.13%

I20230403 17:23:19.985388 17324 MemoryUtils.cpp:227] sys:100.513GiB/125.754GiB 79.93% usr:95.376GiB/50.282GiB 189.68%

W20230403 17:23:47.987363 17324 MemoryUtils.cpp:133] Memory usage has hit the high watermark of system, available: 2.41871e+10 vs. total: 135026831360 in bytes.

I20230403 17:24:19.986222 17324 MemoryUtils.cpp:227] sys:106.319GiB/125.754GiB 84.55% usr:102.582GiB/50.282GiB 204.01%

I20230403 17:25:20.984989 17324 MemoryUtils.cpp:227] sys:112.217GiB/125.754GiB 89.24% usr:109.902GiB/50.282GiB 218.57%

W20230403 17:25:27.985296 17324 MemoryUtils.cpp:133] Memory usage has hit the high watermark of system, available: 1.38074e+10 vs. total: 135026831360 in bytes.

I20230403 17:26:20.986110 17324 MemoryUtils.cpp:227] sys:118.011GiB/125.754GiB 93.84% usr:117.088GiB/50.282GiB 232.86%

W20230403 17:27:07.985222 17324 MemoryUtils.cpp:133] Memory usage has hit the high watermark of system, available: 3.44659e+09 vs. total: 135026831360 in bytes.

I20230403 17:27:21.985656 17324 MemoryUtils.cpp:227] sys:123.895GiB/125.754GiB 98.52% usr:124.388GiB/50.282GiB 247.38%

能帮忙看看这个啥原因吗? 担心到时候上生产有这个问题

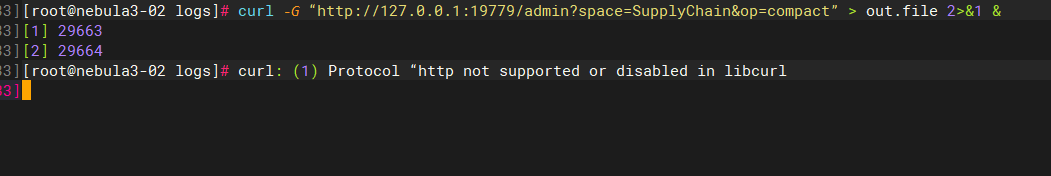

第一个节点已经没事了, 第二个节点L0文件太多了, 线程数改成1, 你可以直接走curl命令手动compact第二个节点, 比如这种curl -G “http://127.0.0.1:19779/admin?space=ldbc_snb_sf10&op=compact”

把space名字换下. 先改成1试试, 你上线最好不要关掉compaction, 否则这么多L0文件 内存是有可能扛不住

这个状态是对的把?

不能后台,看log 在跑

我准备一点点的导入数据试试,导入部分数据,compact 一次。

关闭compact 的原因是,用spark-import 试了下,这个打开auto compact 导入速度很慢,还不如nebula-importer csv 的tool。 当时没有好的办法,就关闭了。

改成1 之后,还是OOM storage down 了,重启storage 之后 请问我怎么查看通过curl 提交的这个compact job 状态

没法用curl查, 只有提交有HTTP接口.

那你试另外一招吧, 把自动compaction打开, 重启, 期望自动的不挂. 看上去也没啥辙了, 另外你可以看看系统参数THP关掉没有

好的。感谢!curl 提交的compact, 是没办法查看状态的意思吧? 我看show jobs 没有相关记录

THP 关闭的,已经先开了 auto compaction, 确实看log 在做minor compaction,只是我把compaction 线程调成1了,可能比较慢,后续看看什么时候结束。

[root@nebula-server-01 scripts]# cat /sys/kernel/mm/transparent_hugepage/enabled

always madvise [never]