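- Nebula version: 3.4.0
- Deployment: distributed, on k8s
- Installation method: ecosystem tooling, via kubectl
- Problem description: (all of the operations below were performed inside a single pod)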

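I am running the official example at https://github.com/vesoft-inc/nebula-algorithm/tree/master/example and get an error when I try to call the Louvain algorithm through the algorithm API.

I treated the example directory as a standalone project. Running mvn compile in that directory succeeds, but running the generated class file afterwards fails with: Exception in thread "main" java.lang.NoClassDefFoundError: LouvainExample (wrong name: com/vesoft/nebula/algorithm/LouvainExample)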

Version information

Java and Maven versions:

The Spark and nebula-algorithm versions are the same as in the official pom.xml file:

```xml
<?xml version="1.0" encoding="UTF-8"?>
<project xmlns="http://maven.apache.org/POM/4.0.0"
         xmlns:xsi="http://www.w3.org/2001/XMLSchema-instance"
         xsi:schemaLocation="http://maven.apache.org/POM/4.0.0 http://maven.apache.org/xsd/maven-4.0.0.xsd">
    <parent>
        <artifactId>algorithm</artifactId>
        <groupId>com.vesoft</groupId>
        <version>3.0-SNAPSHOT</version>
        <relativePath>../pom.xml</relativePath>
    </parent>
    <modelVersion>4.0.0</modelVersion>

    <artifactId>example</artifactId>

    <build>
        <plugins>
            <!-- deploy-plugin -->
            <plugin>
                <groupId>org.apache.maven.plugins</groupId>
                <artifactId>maven-deploy-plugin</artifactId>
                <version>2.8.2</version>
                <executions>
                    <execution>
                        <id>default-deploy</id>
                        <phase>deploy</phase>
                        <configuration>
                            <skip>true</skip>
                        </configuration>
                    </execution>
                </executions>
            </plugin>
            <plugin>
                <groupId>org.sonatype.plugins</groupId>
                <artifactId>nexus-staging-maven-plugin</artifactId>
                <executions>
                    <execution>
                        <id>default-deploy</id>
                        <phase>deploy</phase>
                        <goals>
                            <goal>deploy</goal>
                        </goals>
                        <configuration>
                            <serverId>ossrh</serverId>
                            <skipNexusStagingDeployMojo>true</skipNexusStagingDeployMojo>
                        </configuration>
                    </execution>
                </executions>
            </plugin>
            <!-- compiler-plugin -->
            <plugin>
                <groupId>org.apache.maven.plugins</groupId>
                <artifactId>maven-compiler-plugin</artifactId>
                <version>3.8.1</version>
                <configuration>
                    <source>1.8</source>
                    <target>1.8</target>
                </configuration>
            </plugin>
            <!-- maven-jar -->
            <plugin>
                <groupId>org.apache.maven.plugins</groupId>
                <artifactId>maven-jar-plugin</artifactId>
                <version>3.2.0</version>
                <executions>
                    <execution>
                        <goals>
                            <goal>test-jar</goal>
                        </goals>
                    </execution>
                </executions>
            </plugin>
            <!-- maven-shade -->
            <plugin>
                <groupId>org.apache.maven.plugins</groupId>
                <artifactId>maven-shade-plugin</artifactId>
                <version>3.2.1</version>
                <executions>
                    <execution>
                        <phase>package</phase>
                        <goals>
                            <goal>shade</goal>
                        </goals>
                        <configuration>
                            <createDependencyReducedPom>false</createDependencyReducedPom>
                            <artifactSet>
                                <excludes>
                                    <exclude>org.apache.spark:*</exclude>
                                    <exclude>org.apache.hadoop:*</exclude>
                                    <exclude>org.apache.hive:*</exclude>
                                    <exclude>log4j:log4j</exclude>
                                    <exclude>org.apache.orc:*</exclude>
                                    <exclude>xml-apis:xml-apis</exclude>
                                    <exclude>javax.inject:javax.inject</exclude>
                                    <exclude>org.spark-project.hive:hive-exec</exclude>
                                    <exclude>stax:stax-api</exclude>
                                    <exclude>org.glassfish.hk2.external:aopalliance-repackaged</exclude>
                                </excludes>
                            </artifactSet>
                            <filters>
                                <filter>
                                    <artifact>*:*</artifact>
                                    <excludes>
                                        <exclude>com/vesoft/tools/**</exclude>
                                        <exclude>META-INF/*.SF</exclude>
                                        <exclude>META-INF/*.DSA</exclude>
                                        <exclude>META-INF/*.RSA</exclude>
                                    </excludes>
                                </filter>
                            </filters>
                        </configuration>
                    </execution>
                </executions>
            </plugin>
            <plugin>
                <groupId>org.scala-tools</groupId>
                <artifactId>maven-scala-plugin</artifactId>
                <version>2.15.2</version>
                <configuration>
                    <scalaVersion>2.11.12</scalaVersion>
                    <args>
                        <arg>-target:jvm-1.8</arg>
                    </args>
                    <jvmArgs>
                        <jvmArg>-Xss4096K</jvmArg>
                    </jvmArgs>
                </configuration>
                <executions>
                    <execution>
                        <id>scala-compile</id>
                        <goals>
                            <goal>compile</goal>
                        </goals>
                        <configuration>
                            <excludes>
                                <exclude>com/vesoft/tools/**</exclude>
                                <exclude>META-INF/*.SF</exclude>
                                <exclude>META-INF/*.DSA</exclude>
                                <exclude>META-INF/*.RSA</exclude>
                            </excludes>
                        </configuration>
                    </execution>
                    <execution>
                        <id>scala-test-compile</id>
                        <goals>
                            <goal>testCompile</goal>
                        </goals>
                        <configuration>
                            <excludes>
                                com/vesoft/tools/**
                            </excludes>
                        </configuration>
                    </execution>
                </executions>
            </plugin>
        </plugins>
    </build>

    <dependencies>
        <dependency>
            <groupId>org.slf4j</groupId>
            <artifactId>slf4j-log4j12</artifactId>
            <version>1.7.25</version>
        </dependency>
        <dependency>
            <groupId>org.slf4j</groupId>
            <artifactId>slf4j-api</artifactId>
            <version>1.7.25</version>
        </dependency>
        <dependency>
            <groupId>org.apache.spark</groupId>
            <artifactId>spark-core_2.11</artifactId>
            <version>2.4.4</version>
        </dependency>
        <dependency>
            <groupId>org.apache.spark</groupId>
            <artifactId>spark-sql_2.11</artifactId>
            <version>2.4.4</version>
        </dependency>
        <dependency>
            <groupId>com.vesoft</groupId>
            <artifactId>nebula-algorithm</artifactId>
            <version>3.0-SNAPSHOT</version>
        </dependency>
    </dependencies>
</project>
```

Scala version:

Full steps

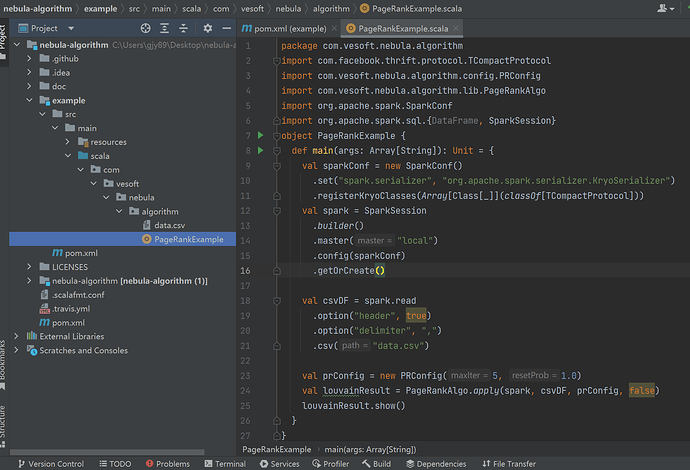

The only change I made was to LouvainExample.scala, which now reads as follows:

```scala
/* Copyright (c) 2021 vesoft inc. All rights reserved.
 *
 * This source code is licensed under Apache 2.0 License.
 */

package com.vesoft.nebula.algorithm

import com.facebook.thrift.protocol.TCompactProtocol
import com.vesoft.nebula.algorithm.config.{LPAConfig, LouvainConfig}
import com.vesoft.nebula.algorithm.lib.{LabelPropagationAlgo, LouvainAlgo}
import org.apache.spark.SparkConf
import org.apache.spark.sql.{DataFrame, SparkSession}

object LouvainExample {
  def main(args: Array[String]): Unit = {
    val sparkConf = new SparkConf()
      .set("spark.serializer", "org.apache.spark.serializer.KryoSerializer")
      .registerKryoClasses(Array[Class[_]](classOf[TCompactProtocol]))
    val spark = SparkSession
      .builder()
      .master("local")
      .config(sparkConf)
      .getOrCreate()

    val csvDF = ReadData.readCsvData(spark)
    // val nebulaDF = ReadData.readNebulaData(spark)
    // val journalDF = ReadData.readLiveJournalData(spark)
    louvain(spark, csvDF)
  }

  def louvain(spark: SparkSession, df: DataFrame): Unit = {
    val louvainConfig = LouvainConfig(10, 5, 0.5)
    val louvain = LouvainAlgo.apply(spark, df, louvainConfig, false)
    louvain.show()
  }
}
```

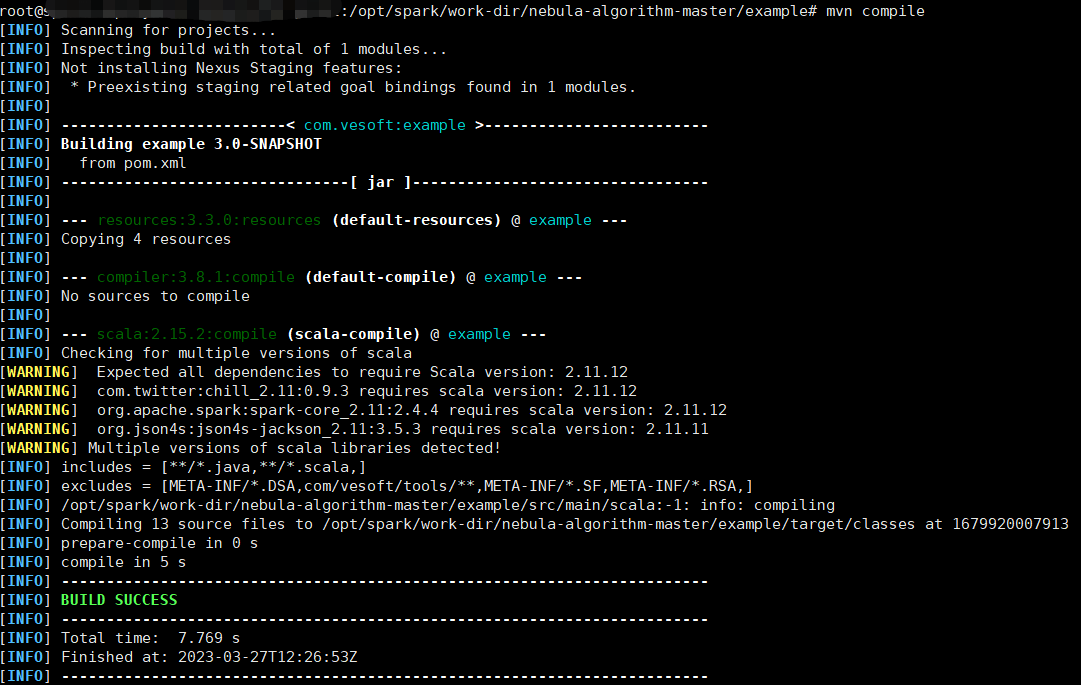

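First, the result of mvn compile:

The project structure changed to the following:

After entering target, running the compiled class file fails:

Error message as text: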

```
Exception in thread "main" java.lang.NoClassDefFoundError: LouvainExample (wrong name: com/vesoft/nebula/algorithm/LouvainExample)
    at java.lang.ClassLoader.defineClass1(Native Method)
    at java.lang.ClassLoader.defineClass(ClassLoader.java:756)
    at java.security.SecureClassLoader.defineClass(SecureClassLoader.java:142)
    at java.net.URLClassLoader.defineClass(URLClassLoader.java:473)
    at java.net.URLClassLoader.access$100(URLClassLoader.java:74)
    at java.net.URLClassLoader$1.run(URLClassLoader.java:369)
    at java.net.URLClassLoader$1.run(URLClassLoader.java:363)
    at java.security.AccessController.doPrivileged(Native Method)
    at java.net.URLClassLoader.findClass(URLClassLoader.java:362)
    at java.lang.ClassLoader.loadClass(ClassLoader.java:418)
    at java.lang.ClassLoader.loadClass(ClassLoader.java:351)
    at java.lang.Class.forName0(Native Method)
    at java.lang.Class.forName(Class.java:348)
    at scala.reflect.internal.util.ScalaClassLoader.$anonfun$tryClass$1(ScalaClassLoader.scala:52)
    at scala.util.control.Exception$Catch.$anonfun$opt$1(Exception.scala:246)
    at scala.util.control.Exception$Catch.apply(Exception.scala:228)
    at scala.util.control.Exception$Catch.opt(Exception.scala:246)
    at scala.reflect.internal.util.ScalaClassLoader.tryClass(ScalaClassLoader.scala:52)
    at scala.reflect.internal.util.ScalaClassLoader.tryToLoadClass(ScalaClassLoader.scala:46)
    at scala.reflect.internal.util.ScalaClassLoader.tryToLoadClass$(ScalaClassLoader.scala:46)
    at scala.reflect.internal.util.ScalaClassLoader$URLClassLoader.tryToLoadClass(ScalaClassLoader.scala:132)
    at scala.reflect.internal.util.ScalaClassLoader$.classExists(ScalaClassLoader.scala:157)
    at scala.tools.nsc.GenericRunnerCommand.guessHowToRun(GenericRunnerCommand.scala:43)
    at scala.tools.nsc.GenericRunnerCommand.<init>(GenericRunnerCommand.scala:62)
    at scala.tools.nsc.GenericRunnerCommand.<init>(GenericRunnerCommand.scala:25)
    at scala.tools.nsc.MainGenericRunner.process(MainGenericRunner.scala:49)
    at scala.tools.nsc.MainGenericRunner$.main(MainGenericRunner.scala:108)
    at scala.tools.nsc.MainGenericRunner.main(MainGenericRunner.scala)
```
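For context on the "wrong name" part of the message: the JVM raises this error when the name a class is requested by (here the bare LouvainExample) differs from the fully qualified name recorded in the class file (com/vesoft/nebula/algorithm/LouvainExample). The expected name follows from the file's path relative to the classpath root. The sketch below derives that name from a path; it assumes Maven's default output directory target/classes, and the object name ClassNameFromPath and the helper fqcn are made up for illustration.

```scala
import java.nio.file.{Path, Paths}

object ClassNameFromPath {

  /** Derives the fully qualified class name that corresponds to a .class
    * file, given the classpath root the file is loaded from.
    */
  def fqcn(classesRoot: Path, classFile: Path): String = {
    // Path of the class file relative to the classpath root,
    // e.g. com/vesoft/nebula/algorithm/LouvainExample.class
    val relative = classesRoot.relativize(classFile)
    // Join the path segments with dots and drop the file extension.
    (0 until relative.getNameCount)
      .map(i => relative.getName(i).toString)
      .mkString(".")
      .stripSuffix(".class")
  }

  def main(args: Array[String]): Unit = {
    // Assumption: Maven's default output directory.
    val root = Paths.get("target", "classes")
    val file = root.resolve("com/vesoft/nebula/algorithm/LouvainExample.class")
    println(fqcn(root, file)) // prints com.vesoft.nebula.algorithm.LouvainExample
  }
}
```

If this reading is right, the class would need to be started with the classpath pointing at the root of the compiled classes and with the fully qualified name, for example `scala -cp target/classes com.vesoft.nebula.algorithm.LouvainExample`, rather than from inside the package directory by its short name. The Spark and nebula-algorithm jars would also have to be on the classpath, which this sketch does not cover.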

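How can I resolve this?

One more question: when running the algorithm by submitting the algorithm package, where is the VID mapping table generated after encodeId is set to true?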
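To make clear what I mean by a VID mapping table, here is a minimal sketch of the general idea as I understand it: string vertex IDs are assigned numeric IDs before the algorithm runs, and results are translated back afterwards. This is not nebula-algorithm's implementation. It uses plain Scala collections instead of Spark, and every name in it (IdEncodingSketch, buildMapping, encode, decode) is hypothetical.

```scala
object IdEncodingSketch {

  /** Assigns each distinct string vertex ID a Long, in order of first appearance. */
  def buildMapping(edges: Seq[(String, String)]): Map[String, Long] =
    edges
      .flatMap { case (src, dst) => Seq(src, dst) }
      .distinct
      .zipWithIndex
      .map { case (id, idx) => id -> idx.toLong }
      .toMap

  /** Rewrites string-keyed edges into numeric edges using the mapping. */
  def encode(edges: Seq[(String, String)], mapping: Map[String, Long]): Seq[(Long, Long)] =
    edges.map { case (src, dst) => (mapping(src), mapping(dst)) }

  /** Translates (encoded vertex ID, community) results back to the original string IDs. */
  def decode(results: Seq[(Long, Long)], mapping: Map[String, Long]): Seq[(String, Long)] = {
    val reverse = mapping.map(_.swap)
    results.map { case (encodedId, community) => (reverse(encodedId), community) }
  }

  def main(args: Array[String]): Unit = {
    val edges   = Seq(("a", "b"), ("b", "c"))
    val mapping = buildMapping(edges)
    println(encode(edges, mapping))
    // The community values here are made-up placeholders, not algorithm output.
    println(decode(Seq((0L, 7L), (2L, 7L)), mapping))
  }
}
```

In this sketch the mapping only lives in memory for the duration of the job. What I would like to know is whether nebula-algorithm does something similar, or whether it writes the mapping somewhere I can read it.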