- Nebula version: 3.1.0
- Deployment: distributed (3 replicas)
- Installation: compiled from source
- In production: Y
- Hardware:
  - Disk: SSD
  - CPU / memory: 8 cores, 32 GB
- Problem description:

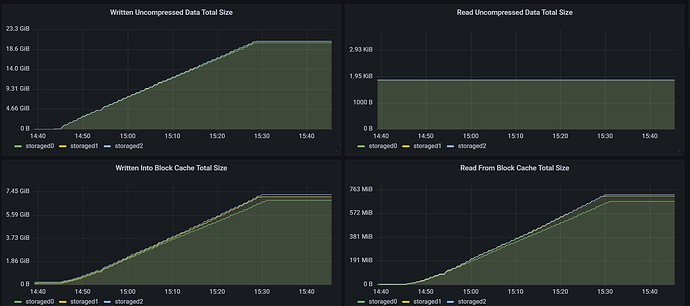

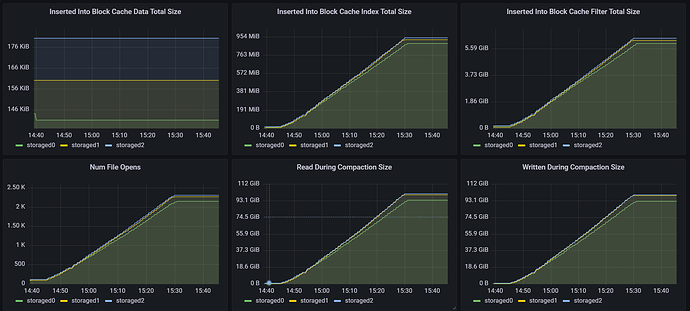

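After importing 10 GB of LDBC data, nebula-storaged uses 4.7 GB of memory. As I understand it, the block cache accounts for 4 GB, but I can't tell where the remaining 0.7 GB goes. In addition, when I continue importing data into another space (30 GB), memory keeps growing slowly, is never released, and reaches 5.5 GB. I'd like to find out what is driving this growth.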
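As a rough cross-check of these numbers, the configured RocksDB options already put an upper bound on two large consumers: the block cache and the memtables (`write_buffer_size` × `max_write_buffer_number` per column family). The sketch below does that arithmetic with the values from the nebula-storaged.conf in this post. It assumes one block cache shared by all spaces, one RocksDB instance per space (the conf says "One path per Rocksdb instance" and there is a single `--data_path`), and only the default column family; none of this is confirmed here, and WAL buffers, table readers and allocator overhead are not included.

```python
# Rough upper bound for the block cache + memtable budget of nebula-storaged,
# using values from the nebula-storaged.conf in this post.
# Assumptions (not confirmed by the post): one block cache shared by all spaces,
# one RocksDB instance per space, only the default column family per instance.

MB = 1024 * 1024


def memtable_upper_bound(write_buffer_size: int, max_write_buffer_number: int,
                         instances: int) -> int:
    """Maximum bytes memtables may hold across all RocksDB instances."""
    return write_buffer_size * max_write_buffer_number * instances


def static_budget_mb(block_cache_mb: int, write_buffer_size: int,
                     max_write_buffer_number: int, spaces: int) -> float:
    """Block cache plus worst-case memtables, in MB."""
    memtables = memtable_upper_bound(write_buffer_size, max_write_buffer_number, spaces)
    return block_cache_mb + memtables / MB


if __name__ == "__main__":
    # --rocksdb_block_cache=4096, write_buffer_size=67108864, max_write_buffer_number=4
    for spaces in (1, 2):
        budget = static_budget_mb(4096, 67108864, 4, spaces)
        print(f"{spaces} space(s): ~{budget:.0f} MB, excluding WAL buffers, "
              "table readers and allocator overhead")
```

Under these assumptions each extra space can add up to 256 MB of memtables, which is one plausible but unverified contributor to the growth seen when importing a second space.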

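I also have another question. What kind of memory does each of the following represent, and will it be released?

- `rocksdb BlockFetcher ReadBlockContents`
- `rocksdb UncompressBlockContentsForCompressionType`
- `nebula wal FileBasedWal appendLogs`
- `rocksdb Arena AllocateFallback`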
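To compare these four frames side by side, the `jeprof --text` output can be summed by function name. This is a minimal sketch that assumes the classic pprof/jeprof text layout (`flat flat% sum% cum cum% function`, with the flat column in MB for heap profiles). Matching is a plain substring test, and each row is counted under the first keyword it matches.

```python
# Sum the "flat" column of jeprof --text output per keyword, so that frames such
# as Arena::AllocateFallback or FileBasedWal::appendLogs can be compared directly.
# Assumes the classic pprof/jeprof row layout: flat flat% sum% cum cum% function.
import re
from collections import defaultdict

ROW = re.compile(
    r"^\s*([\d.]+)\s+([\d.]+)%\s+([\d.]+)%\s+([\d.]+)\s+([\d.]+)%\s+(.+?)\s*$"
)


def group_flat(text: str, keywords: list[str]) -> dict[str, float]:
    """Return {keyword: summed flat value} for rows whose function name matches."""
    totals: dict[str, float] = defaultdict(float)
    for line in text.splitlines():
        match = ROW.match(line)
        if not match:
            continue  # "Total: ... MB" header, blank lines, anything else
        flat, func = float(match.group(1)), match.group(6)
        for kw in keywords:
            if kw in func:
                totals[kw] += flat
                break  # count each row once
    return dict(totals)
```

A possible workflow: save the output of `jeprof --text <path-to-nebula-storaged> <heap-file>` to a file, pass its contents to `group_flat` with keywords like `["ReadBlockContents", "UncompressBlockContentsForCompressionType", "appendLogs", "AllocateFallback"]`, and repeat for a later heap dump to see which group keeps growing.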

I queried the properties with:

```shell
curl -G "http://IP:19779/rocksdb_property?space=XXX&property=rocksdb.block-cache-usage"
curl -G "http://IP:19779/rocksdb_property?space=XXX&property=rocksdb.estimate-table-readers-mem"
```

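The results: block cache usage is 4 GB, and index & filter memory (`estimate-table-readers-mem`) is 4098 bytes. That is because I set `"cache_index_and_filter_blocks":"true"`, which puts the index and filter blocks into the block cache, where they compete for its memory.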
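When querying more than one property, the URLs can be built with proper query-string encoding instead of being typed by hand (an unquoted `&` in a shell command is otherwise treated as a background operator). The sketch below assumes the `rocksdb_property` endpoint accepts any RocksDB property name in `property`; only the two used above are confirmed in this post. It returns the raw response text without parsing, since the response format is not shown here. The memtable properties are standard RocksDB names that the queries above do not cover.

```python
# Build correctly encoded rocksdb_property URLs for several RocksDB memory
# properties. "IP" and "XXX" are the placeholders used in this post; whether
# nebula-storaged accepts every property below is an assumption.
from urllib.parse import urlencode
from urllib.request import urlopen

MEMORY_PROPERTIES = [
    "rocksdb.block-cache-usage",           # bytes of entries in the block cache
    "rocksdb.block-cache-pinned-usage",    # bytes of pinned block cache entries
    "rocksdb.estimate-table-readers-mem",  # table-reader memory outside the cache
    "rocksdb.cur-size-all-mem-tables",     # active + unflushed immutable memtables
    "rocksdb.size-all-mem-tables",         # also counts pinned immutable memtables
]


def property_url(host: str, space: str, prop: str, port: int = 19779) -> str:
    """Return the query URL with space/property properly encoded."""
    query = urlencode({"space": space, "property": prop})
    return f"http://{host}:{port}/rocksdb_property?{query}"


def fetch_all(host: str, space: str) -> dict[str, str]:
    """Fetch every property and keep the raw response body (not parsed)."""
    results: dict[str, str] = {}
    for prop in MEMORY_PROPERTIES:
        with urlopen(property_url(host, space, prop), timeout=5) as resp:
            results[prop] = resp.read().decode()
    return results
```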

jeprof top37

jeprof pdf

nebula-storaged.pdf (23.6 KB)

nebula-storaged.conf

```
########## basics ##########
# Whether to run as a daemon process
--daemonize=true
--local_config=true
# The file to host the process id
--pid_file=/data1/nebula/pids/nebula-storaged.pid

########## logging ##########
# The directory to host logging files, which must already exist
--log_dir=/data1/nebula/logs
# Log level, 0, 1, 2, 3 for INFO, WARNING, ERROR, FATAL respectively
--minloglevel=0
# Verbose log level, 1, 2, 3, 4, the higher the level, the more verbose the logging
--v=0
# Maximum seconds to buffer the log messages
--logbufsecs=0
# Whether to redirect stdout and stderr to separate output files
--redirect_stdout=true
# Destination filename of stdout and stderr, which will also reside in log_dir.
--stdout_log_file=storaged-stdout.log
--stderr_log_file=storaged-stderr.log
# Copy log messages at or above this level to stderr in addition to logfiles. The numbers of severity levels INFO, WARNING, ERROR, and FATAL are 0, 1, 2, and 3, respectively.
--stderrthreshold=2

########## networking ##########
# Comma separated Meta server addresses
--meta_server_addrs=
# Local IP used to identify the nebula-storaged process.
# Change it to an address other than loopback if the service is distributed or
# will be accessed remotely.
--local_ip=
# Storage daemon listening port
--port=9779
# HTTP service ip
--ws_ip=0.0.0.0
# HTTP service port
--ws_http_port=19779
# HTTP2 service port
--ws_h2_port=19780
# heartbeat with meta service
--heartbeat_interval_secs=5

######### Raft #########
# Raft election timeout
--raft_heartbeat_interval_secs=30
# RPC timeout for raft client (ms)
--raft_rpc_timeout_ms=500
## recycle Raft WAL
--wal_ttl=14400

########## Disk ##########
# Root data path. Split by comma. e.g. --data_path=/disk1/path1/,/disk2/path2/
# One path per Rocksdb instance.
--data_path=/data1/nebula/data/storage
--enable_partitioned_index_filter=true
# The default reserved bytes for one batch operation
--rocksdb_batch_size=4096
# The default block cache size used in BlockBasedTable. (MB)
# recommend: 1/3 of all memory
--rocksdb_block_cache=4096
# Compression algorithm, options: no,snappy,lz4,lz4hc,zlib,bzip2,zstd
# For the sake of binary compatibility, the default value is snappy.
# Recommend to use:
# * lz4 to gain more CPU performance, with the same compression ratio with snappy
# * zstd to occupy less disk space
# * lz4hc for the read-heavy write-light scenario
--rocksdb_compression=lz4
# Set different compressions for different levels
# For example, if --rocksdb_compression is snappy,
# "no:no:lz4:lz4::zstd" is identical to "no:no:lz4:lz4:snappy:zstd:snappy"
# In order to disable compression for level 0/1, set it to "no:no"
--rocksdb_compression_per_level=

############## rocksdb Options ##############
# rocksdb DBOptions in json, each name and value of option is a string, given as "option_name":"option_value" separated by comma
--rocksdb_db_options={"max_subcompactions":"2","max_background_jobs":"2","max_open_files":"5000"}
# rocksdb ColumnFamilyOptions in json, each name and value of option is a string, given as "option_name":"option_value" separated by comma
--rocksdb_column_family_options={"disable_auto_compactions":"false","write_buffer_size":"67108864","max_write_buffer_number":"4","max_bytes_for_level_base":"268435456"}
# rocksdb BlockBasedTableOptions in json, each name and value of option is a string, given as "option_name":"option_value" separated by comma
--rocksdb_block_based_table_options={"block_size":"8192","cache_index_and_filter_blocks":"true"}
# Whether or not to enable rocksdb's statistics, disabled by default
--enable_rocksdb_statistics=true
# Stats level used by rocksdb to collect statistics; optional values are:
# * kExceptHistogramOrTimers, disable timer stats, and skip histogram stats
# * kExceptTimers, Skip timer stats
# * kExceptDetailedTimers, Collect all stats except time inside mutex lock AND time spent on compression.
# * kExceptTimeForMutex, Collect all stats except the counters requiring to get time inside the mutex lock.
# * kAll, Collect all stats
--rocksdb_stats_level=kExceptHistogramOrTimers
# Whether or not to enable rocksdb's prefix bloom filter, disabled by default.
--enable_rocksdb_prefix_filtering=false
# Whether or not to enable the whole key filtering.
--enable_rocksdb_whole_key_filtering=true
# The prefix length for each key to use as the filter value.
# can be 12 bytes(PartitionId + VertexID), or 16 bytes(PartitionId + VertexID + TagID/EdgeType).
--rocksdb_filtering_prefix_length=12

############### misc ####################
--max_handlers_per_req=1
```
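Several memory-relevant options in this file are nested as JSON strings inside gflags, so pulling them out programmatically can help when comparing configurations across nodes. A minimal sketch, assuming one `--name=value` flag per line as in the file above; the set of keys extracted is my own selection, and a value missing from the file is reported as `None` rather than as the RocksDB or Nebula default.

```python
# Parse a gflags-style nebula-storaged.conf ("--name=value" per line) and extract
# settings that bound RocksDB memory. The chosen keys are illustrative only.
import json
from typing import Optional


def parse_flags(text: str) -> dict[str, str]:
    """Map flag name -> raw value for every '--name=value' line."""
    flags: dict[str, str] = {}
    for raw in text.splitlines():
        line = raw.strip()
        if not line.startswith("--") or "=" not in line:
            continue  # comments, section banners and blank lines
        name, value = line[2:].split("=", 1)  # JSON values contain no leading '='
        flags[name] = value
    return flags


def _to_int(value: Optional[str]) -> Optional[int]:
    """Convert a string option to int; None if absent or empty."""
    return int(value) if value else None


def memory_settings(flags: dict[str, str]) -> dict[str, object]:
    """Pick out block cache, memtable and table-reader related settings."""
    db = json.loads(flags.get("rocksdb_db_options") or "{}")
    cf = json.loads(flags.get("rocksdb_column_family_options") or "{}")
    table = json.loads(flags.get("rocksdb_block_based_table_options") or "{}")
    return {
        "block_cache_mb": _to_int(flags.get("rocksdb_block_cache")),
        "write_buffer_size": _to_int(cf.get("write_buffer_size")),
        "max_write_buffer_number": _to_int(cf.get("max_write_buffer_number")),
        "max_open_files": _to_int(db.get("max_open_files")),
        "cache_index_and_filter_blocks": table.get("cache_index_and_filter_blocks") == "true",
    }
```

Usage: `memory_settings(parse_flags(open("nebula-storaged.conf").read()))` returns the block cache size, memtable limits, `max_open_files` and whether index/filter blocks live in the block cache.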