- Nebula Operator version: 1.4.2

- Deployment: distributed / standalone

  Three virtual machines host three nodes (one master and two worker nodes), accessed over Xshell.

- Installation method: Helm

- Production environment: N

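- Hardware (virtual machines, sized with reference to the test environment configuration in the documentation)

  - Disk: SCSI (not SSD, and with limited capacity)

  - CPU: 1 processor, 6 cores

  - Memory: 8 GB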

- Detailed description of the problem

I ran:

helm install nebula-operator nebula-operator/nebula-operator --namespace nebula-operator-system --create-namespace --version 1.4.2 --set image.kubeRBACProxy.image=kubesphere/kube-rbac-proxy:v0.8.0 --set image.kubeScheduler.image=kubesphere/kube-scheduler:v1.22.12 -f values.yaml

(I had already downloaded the images locally because pulling them was too slow; values.yaml mainly changes the image pull policy so that the local copies are used.)

P.S. Because gcr.io/kubebuilder/kube-rbac-proxy:v0.8.0 and k8s.gcr.io/kube-scheduler:v1.18.8 cannot be pulled from within China, I replaced them with kubesphere/kube-rbac-proxy:v0.8.0 and kubesphere/kube-scheduler:v1.22.12.
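For reference, a values override of the kind described above might look roughly like the sketch below. The file name values-local.yaml is hypothetical, and the imagePullPolicy keys are assumed to sit next to the image.*.image keys used in the --set flags; the values.yaml actually used in this install may differ.

```shell
#!/bin/sh
# Write a hypothetical override file (values-local.yaml is an illustrative name).
# Assumption: each image entry accepts an imagePullPolicy key beside its image key.
cat > values-local.yaml <<'EOF'
image:
  nebulaOperator:
    image: vesoft/nebula-operator:v1.4.2
    imagePullPolicy: IfNotPresent   # use the image already present on the node
  kubeRBACProxy:
    image: kubesphere/kube-rbac-proxy:v0.8.0
    imagePullPolicy: IfNotPresent
  kubeScheduler:
    image: kubesphere/kube-scheduler:v1.22.12
    imagePullPolicy: IfNotPresent
EOF

# Show what was written so it can be compared with the chart's default values.
cat values-local.yaml
```

With IfNotPresent, the kubelet pulls an image only when it is missing from the node, so the images have to be loaded on every node where the pods can be scheduled.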

The controller-manager container in the pod reports:

1) Liveness probe failed: Get "http://192.168.1.24:8081/healthz": dial tcp 192.168.1.24:8081: connect: connection refused

2) Readiness probe failed: Get "http://192.168.1.24:8081/readyz": dial tcp 192.168.1.24:8081: connect: connection refused

- Relevant meta / storage / graph info logs

NAME READY STATUS RESTARTS AGE

nebula-operator-controller-manager-deployment-6bf55cbb8d-mwg86 1/2 Running 6 (2m53s ago) 8m14s

nebula-operator-controller-manager-deployment-6bf55cbb8d-pw6sg 1/2 Running 6 (2m53s ago) 8m14s

Shortly afterwards the pods go into the CrashLoopBackOff or Error state.

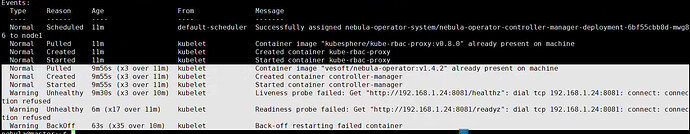

Events of the failing pod:

Normal Pulled 9m56s (x3 over 11m) kubelet Container image "vesoft/nebula-operator:v1.4.2" already present on machine

Normal Created 9m55s (x3 over 11m) kubelet Created container controller-manager

Normal Started 9m55s (x3 over 11m) kubelet Started container controller-manager

Warning Unhealthy 9m30s (x3 over 10m) kubelet Liveness probe failed: Get "http://192.168.1.24:8081/healthz": dial tcp 192.168.1.24:8081: connect: connection refused

Warning Unhealthy 6m (x17 over 11m) kubelet Readiness probe failed: Get "http://192.168.1.24:8081/readyz": dial tcp 192.168.1.24:8081: connect: connection refused

Warning BackOff 63s (x35 over 10m) kubelet Back-off restarting failed container
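The events only show that the probe port refuses connections; the container's own output from before the restart would say why. A minimal sketch for collecting it follows, using the namespace, pod, and container names listed in this post. The run helper and the DRY_RUN switch are hypothetical conveniences, not part of kubectl; by default the script only prints the commands.

```shell
#!/bin/sh
# Names copied from the pod listing in this post.
NS=nebula-operator-system
POD=nebula-operator-controller-manager-deployment-6bf55cbb8d-mwg86

# run: print a command, and execute it only when DRY_RUN=0 (hypothetical helper).
run() {
  printf '%s\n' "+ $*"
  if [ "${DRY_RUN:-1}" = "0" ]; then
    "$@"
  fi
}

collect_diagnostics() {
  # Logs of the previous (crashed) controller-manager instance.
  run kubectl -n "$NS" logs "$POD" -c controller-manager --previous --tail=100
  # Container states, last exit code, and probe settings.
  run kubectl -n "$NS" describe pod "$POD"
  # Images the pod is actually running, to confirm the overrides took effect.
  run kubectl -n "$NS" get pod "$POD" -o jsonpath='{.spec.containers[*].image}'
}

collect_diagnostics
```

Running the script with DRY_RUN=0 on the master node executes the commands instead of only printing them.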

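If any other information is needed, I will reply right away. If it helps, I can provide remote access so the issue can be debugged directly.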