胸口碎大石

May 18, 2023 09:33

1

nebula version: 3.4.1

Deployment: single machine

Installation method: RPM

Used in production: N

Hardware info:

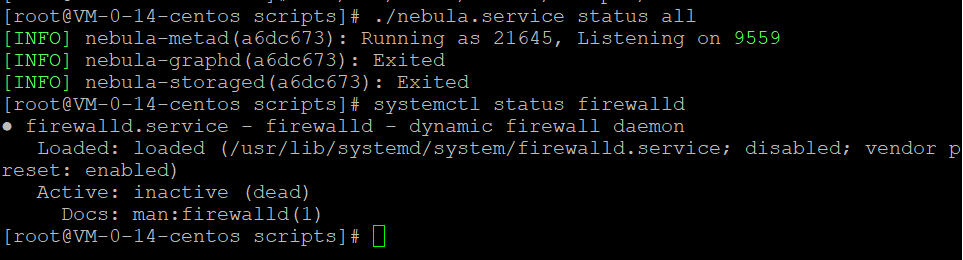

I edited the config file nebula-graphd.conf to set the --enable_authorize parameter to true. Then I ran nebula.service stop all and nebula.service start all. Since then, nebula-graphd stays in the Exited state:

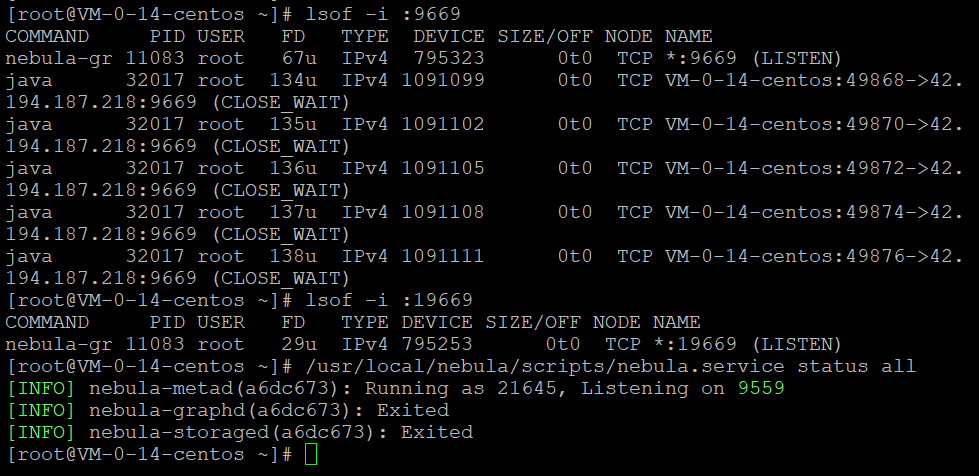
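For reference, here is a minimal sketch of making the same config edit from a script. It assumes nebula-graphd.conf uses the gflags flagfile syntax of the shipped default: one --name=value per line, with # marking comments. The flag is presumably only recognized when written with two ASCII hyphens, not a typographic dash. The helper name set_gflag is hypothetical. The sketch only rewrites text; graphd still has to be restarted afterwards.

```python
import re


def set_gflag(conf_text: str, name: str, value: str) -> str:
    """Return conf_text with --name set to value, appending it if absent.

    Assumes gflags flagfile syntax: one "--name=value" per line; lines
    starting with '#' are comments and are left untouched.
    """
    pattern = re.compile(rf"^--{re.escape(name)}=.*$", re.MULTILINE)
    new_line = f"--{name}={value}"
    if pattern.search(conf_text):
        # Replace every active occurrence; a lambda avoids backslash escapes in value
        return pattern.sub(lambda _m: new_line, conf_text)
    # Flag not present yet: add it on its own line at the end
    if conf_text and not conf_text.endswith("\n"):
        conf_text += "\n"
    return conf_text + new_line + "\n"
```

For example, set_gflag(text, "enable_authorize", "true") flips an existing --enable_authorize=false line in place and leaves a commented-out copy alone.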

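Checking shows that ports 9669 and 19669 are already in use:

I tried each of the following, and graphd stayed Exited every time:
- Running nebula.service stop all and nebula.service start all again.
- Rebooting the machine and then running nebula.service start all.
- Running kill 32587 followed by nebula.service start graphd.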
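One way to confirm which of graphd's ports are really taken, before restarting it, is to try binding them yourself. This sketch makes a few assumptions:
- graphd binds 0.0.0.0 on 9669 (--port) and 19669 (--ws_http_port), as in the default config and the log below.
- The names port_is_free and busy_ports are hypothetical helpers.

The sketch sets SO_REUSEADDR so that, on Linux, leftover TIME_WAIT connections are not mistaken for a live listener.

```python
import errno
import socket
from typing import Iterable, List


def port_is_free(port: int, host: str = "0.0.0.0") -> bool:
    """Return True if host:port can be bound right now, False if it is in use."""
    with socket.socket(socket.AF_INET, socket.SOCK_STREAM) as s:
        # Ignore TIME_WAIT leftovers; a socket still LISTENing will still conflict
        s.setsockopt(socket.SOL_SOCKET, socket.SO_REUSEADDR, 1)
        try:
            s.bind((host, port))
        except OSError as e:
            if e.errno == errno.EADDRINUSE:
                return False
            raise  # e.g. EACCES for ports < 1024 without root
        return True


def busy_ports(ports: Iterable[int], host: str = "0.0.0.0") -> List[int]:
    """List the ports from `ports` that cannot be bound on `host`."""
    return [p for p in ports if not port_is_free(p, host)]
```

For example, busy_ports([9669, 19669]) run right after stopping graphd should come back empty. If it does not, some other process still holds the port, and netstat or ss can show which one.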

E20230518 14:43:42.762362 5710 WebService.cpp:152] Failed to start web service: failed to bind to async server socket: 0.0.0.0:19669: Address already in use

E20230518 16:52:18.535353 3385 MetaClient.cpp:772] Send request to "127.0.0.1":9559, exceed retry limit

E20230518 16:52:18.535396 3385 MetaClient.cpp:773] RpcResponse exception: apache::thrift::transport::TTransportException: Dropping unsent request. Connection closed after: apache::thrift::transport::TTransportException: AsyncSocketException: connect failed, type = Socket not open, errno = 111 (Connection refused): Connection refused

E20230518 16:52:18.535437 3459 MetaClient.cpp:192] Heartbeat failed, status:RPC failure in MetaClient: apache::thrift::transport::TTransportException: Dropping unsent request. Connection closed after: apache::thrift::transport::TTransportException: AsyncSocketException: connect failed, type = Socket not open, errno = 111 (Connect
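To pull the relevant lines out of a long graphd log, a small filter over glog-formatted lines can help. The sketch assumes the prefix format seen in the log lines above: a level letter, a yyyymmdd date, the time, a thread id, then file:line]. The names parse_errors and diagnose and the two hint labels are hypothetical. The sketch only recognizes the two symptoms visible in this thread: a failed port bind and a refused metad connection.

```python
import re
from typing import Iterable, List, NamedTuple, Set

# glog prefix: level, yyyymmdd, hh:mm:ss.micros, thread id, file:line]
GLOG_RE = re.compile(
    r"^(?P<level>[IWEF])(?P<date>\d{8}) (?P<time>\d{2}:\d{2}:\d{2}\.\d+)\s+"
    r"(?P<thread>\d+) (?P<src>[^\]]+)\] (?P<msg>.*)$"
)


class LogEntry(NamedTuple):
    level: str  # 'E' (error) or 'F' (fatal)
    src: str    # e.g. "WebService.cpp:152"
    msg: str


def parse_errors(lines: Iterable[str]) -> List[LogEntry]:
    """Keep only ERROR/FATAL glog lines; anything unparseable is skipped."""
    entries = []
    for line in lines:
        m = GLOG_RE.match(line.strip())
        if m and m.group("level") in ("E", "F"):
            entries.append(LogEntry(m.group("level"), m.group("src"), m.group("msg")))
    return entries


def diagnose(entries: Iterable[LogEntry]) -> Set[str]:
    """Map error lines to hypothetical hint labels."""
    hints = set()
    for e in entries:
        if "Address already in use" in e.msg:
            hints.add("port_in_use")
        if e.src.startswith("MetaClient") and "Connection refused" in e.msg:
            hints.add("metad_unreachable")
    return hints
```

Feed it the lines of the graphd ERROR log. For an RPM install, this is assumed to live under /usr/local/nebula/logs.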

wey

May 19, 2023 05:28

2

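This means graphd can't connect to metad. Is metad configured on local port 9559? Check whether the metad log looks normal, and whether the firewall allows port 9559.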
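A plain TCP connect to metad helps separate "nothing is listening" from "a firewall is in the way". This minimal sketch assumes metad is at 127.0.0.1:9559, as in the log; probe is a hypothetical name. How to read the result:
- "refused" (errno 111 on Linux, as in the graphd log) usually means no process is listening, although a firewall REJECT rule can produce the same result.
- "timeout" more often points to packets being silently dropped.

```python
import socket


def probe(host: str, port: int, timeout: float = 2.0) -> str:
    """Classify a TCP connect attempt as open / refused / timeout / error."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return "open"
    except ConnectionRefusedError:
        # RST received: typically nothing is listening on host:port
        return "refused"
    except socket.timeout:
        # No answer at all: often a firewall dropping packets
        return "timeout"
    except OSError:
        # Anything else (unreachable network, bad address, ...)
        return "error"
```

Running probe("127.0.0.1", 9559) on the graphd host before starting graphd shows whether metad is actually accepting connections.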

胸口碎大石

May 19, 2023 05:47

3

Yes, it's 9559, and the metad ERROR log looks normal:

胸口碎大石

May 20, 2023 01:30

7

The port is indeed held by the nebula-gr service, but graphd still shows Exited when I check its status.

胸口碎大石

May 20, 2023 01:31

8

I killed the service occupying port 9669 and restarted all services, but graphd is still Exited.

wey

May 20, 2023 03:25

9

The occupied port is 19669; graphd needs to listen on more than just 9669.
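To list every port graphd will try to bind, you can read the ports from its config instead of assuming 9669. This sketch assumes the flag names --port and --ws_http_port from the default nebula-graphd.conf, so check them against your own file. graphd_ports is a hypothetical helper. Flags that are missing or commented out are left out of the result, since their compiled-in defaults are not known here.

```python
import re
from typing import Dict

# Flag names assumed from the default nebula-graphd.conf; adjust if yours differ.
PORT_FLAGS = ("port", "ws_http_port")


def graphd_ports(conf_text: str) -> Dict[str, int]:
    """Map each port flag found in conf_text to its integer value.

    Commented-out lines (starting with '#') and absent flags are skipped.
    If a flag appears more than once, the last occurrence is kept here.
    """
    found = {}
    for line in conf_text.splitlines():
        m = re.match(r"--(\w+)=(\d+)$", line.strip())
        if m and m.group(1) in PORT_FLAGS:
            found[m.group(1)] = int(m.group(2))
    return found
```

Both values returned are ports that must be free before graphd can get past its bind stage.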

胸口碎大石

May 20, 2023 04:52

14

[root@VM-0-14-centos ~]# kill 32017

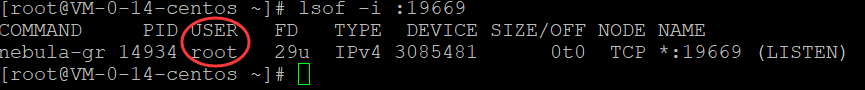

I killed processes 32017 and 11083, which were holding ports 9669 and 19669, but graphd is still Exited after restarting.

min.wu

May 22, 2023 05:11

15

First check the logs to see whether it is a port conflict. Are you starting services under more than one account? Which account is nebula-gr?

胸口碎大石

May 22, 2023 06:17

16

Only the nebula-gr process is LISTENing on the ports. Only one account, root, is being used, and nebula-gr is running as root.

wey

May 22, 2023 06:26

17

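Either shut down graph-gr, or change the graphd config to avoid this port and use a different one.

If startup gets past the port-binding stage and graphd is still Exited, check the logs again to find out what else is wrong.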

胸口碎大石

May 22, 2023 08:00

18

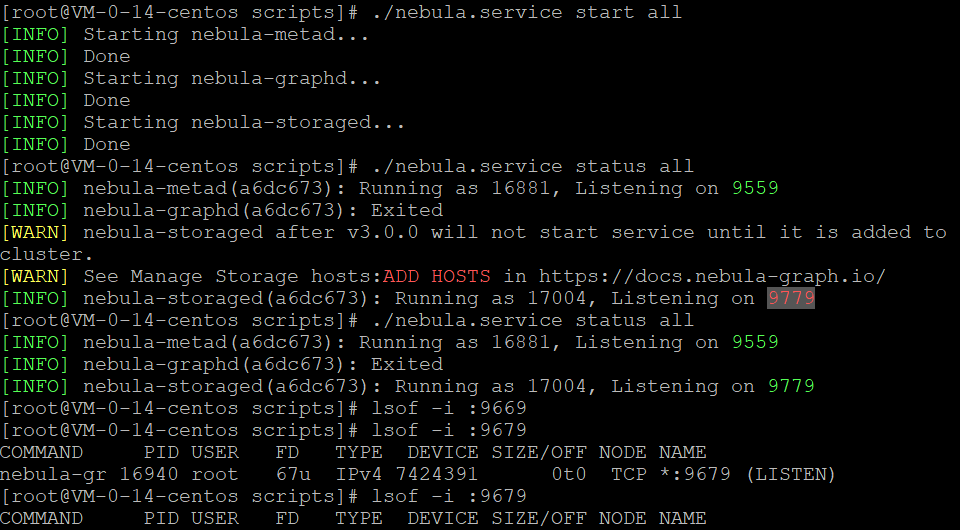

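I killed all the processes occupying 9669: the server, graphd, and the java processes. Then I changed graphd's ports to 9679 and 19679, but after restarting it is still Exited.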

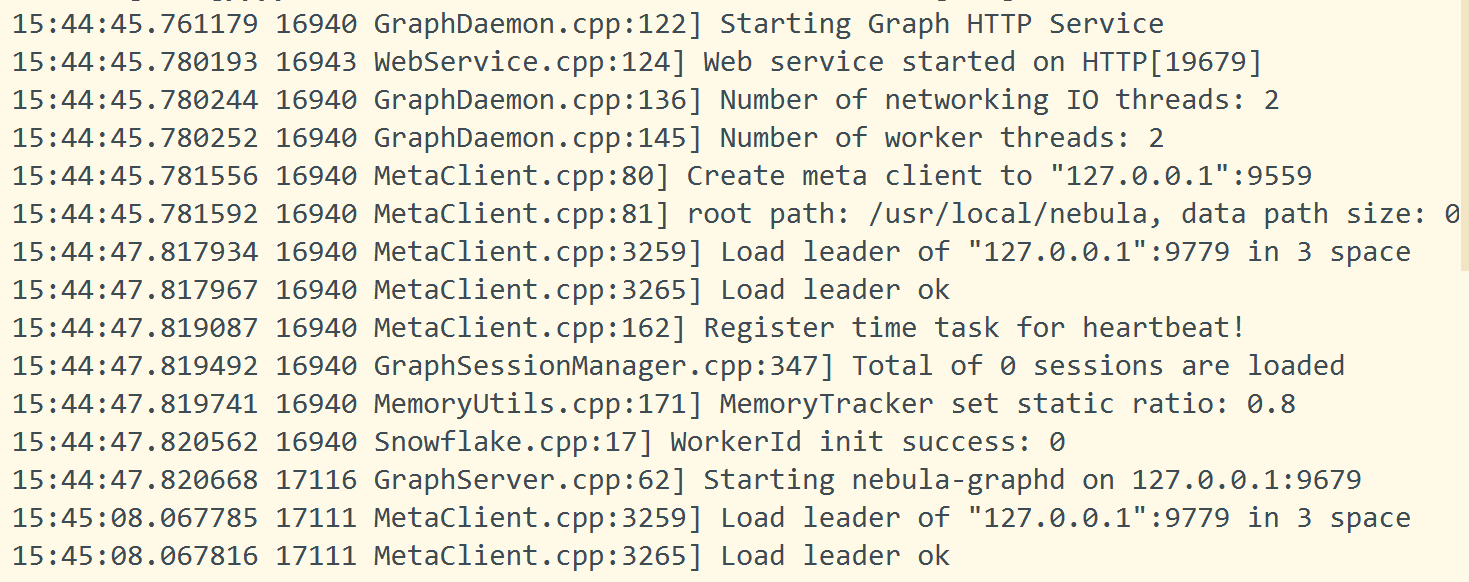

graphd log:

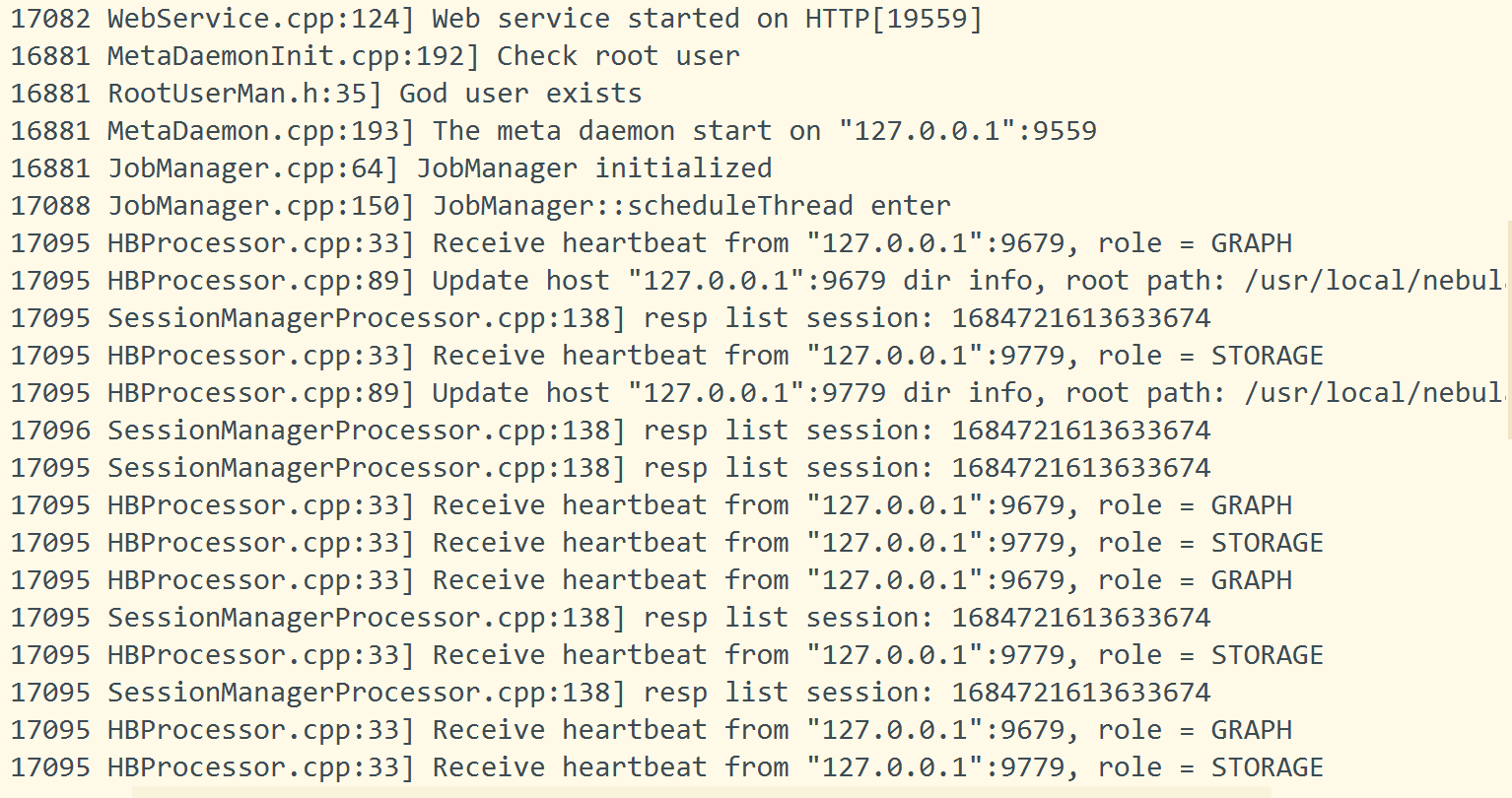

metad log:

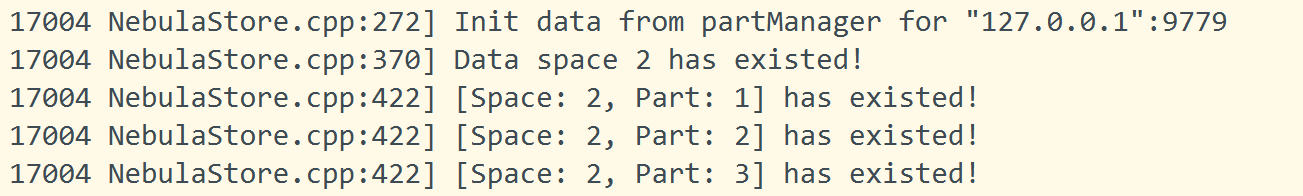

storaged log:

Could someone please help me analyze this? Many thanks.

胸口碎大石

May 22, 2023 10:52

20