Question template:

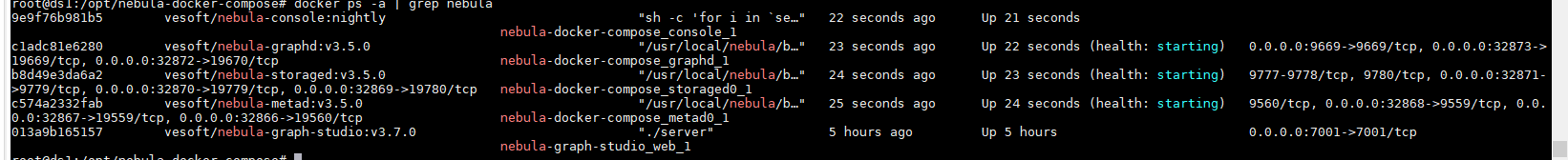

- nebula version: 3.5.0 or 3.4.0 (to save responders the time of verifying version details, please show the version as a screenshot in your first post)

- Deployment: single machine

- Installation method: Docker

- In production: N

- Hardware information

  - Disk (SSD recommended)

  - CPU and memory information

- Detailed description of the problem

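Reading and writing through Spark keeps failing with a connection error. After switching to the tar.gz installation, the same reads and writes work normally.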

    import com.vesoft.nebula.connector.{NebulaConnectionConfig, ReadNebulaConfig}
    import org.apache.spark.SparkConf
    import org.apache.spark.sql.SparkSession
    import com.vesoft.nebula.connector.connector._

    object Test {
      def main(args: Array[String]): Unit = {
        val conf = new SparkConf()
          .setIfMissing("spark.master", "local[*]")
          .set("spark.io.compression.codec", "snappy")
          .set("hive.exec.dynamic.partition.mode", "nonstrict")
          .set("hive.exec.dynamic.partition", "true")

        val spark = SparkSession.builder()
          .appName(getClass.getName)
          .config(conf)
          .enableHiveSupport
          .getOrCreate()

        spark.sparkContext.setLogLevel("info")

        val config = NebulaConnectionConfig
          .builder()
          .withMetaAddress("192.168.11.191:9559")
          .withConenctionRetry(2)
          .withExecuteRetry(2)
          .withTimeout(6000)
          .build()

        val nebulaReadVertexConfig: ReadNebulaConfig = ReadNebulaConfig
          .builder()
          .withSpace("matrix")
          .withLabel("multiArchive")
          .withNoColumn(false)
          // .withReturnCols(List("birthday"))
          .withLimit(10)
          .withPartitionNum(10)
          .build()

        val vertex = spark.read.nebula(config, nebulaReadVertexConfig).loadVerticesToDF()
        vertex.show()
        spark.close()
      }
    }

Relevant pom dependency

    <dependency>
      <groupId>com.vesoft</groupId>
      <artifactId>nebula-spark-connector</artifactId>
      <version>3.4.0</version>
    </dependency>

Error log

    23/06/19 19:57:41 INFO NebulaDataSource: options {spacename=matrix, nocolumn=false, enablestoragessl=false, metaaddress=192.168.11.191:9559, label=multiArchive, type=vertex, connectionretry=2, timeout=6000, executionretry=2, enablemetassl=false, paths=[], limit=10, returncols=, partitionnumber=10}
    Exception in thread "main" com.facebook.thrift.transport.TTransportException: java.net.ConnectException: Connection refused: connect
        at com.facebook.thrift.transport.TSocket.open(TSocket.java:206)
        at com.facebook.thrift.transport.TFramedTransport.open(TFramedTransport.java:70)
        at com.vesoft.nebula.client.meta.MetaClient.getClient(MetaClient.java:151)
        at com.vesoft.nebula.client.meta.MetaClient.doConnect(MetaClient.java:130)
        at com.vesoft.nebula.client.meta.MetaClient.connect(MetaClient.java:119)
        at com.vesoft.nebula.connector.nebula.MetaProvider.<init>(MetaProvider.scala:53)
        at com.vesoft.nebula.connector.reader.NebulaSourceReader.getSchema(NebulaSourceReader.scala:44)
        at com.vesoft.nebula.connector.reader.NebulaSourceReader.readSchema(NebulaSourceReader.scala:30)
        at org.apache.spark.sql.execution.datasources.v2.DataSourceV2Relation$.create(DataSourceV2Relation.scala:175)
        at org.apache.spark.sql.DataFrameReader.load(DataFrameReader.scala:204)
        at org.apache.spark.sql.DataFrameReader.load(DataFrameReader.scala:167)
        at com.vesoft.nebula.connector.connector.package$NebulaDataFrameReader.loadVerticesToDF(package.scala:73)
        at com.lg.output.nebula.Test$.main(Test.scala:51)
        at com.lg.output.nebula.Test.main(Test.scala)
    Caused by: java.net.ConnectException: Connection refused: connect
        at java.net.DualStackPlainSocketImpl.waitForConnect(Native Method)
        at java.net.DualStackPlainSocketImpl.socketConnect(DualStackPlainSocketImpl.java:81)
        at java.net.AbstractPlainSocketImpl.doConnect(AbstractPlainSocketImpl.java:476)
        at java.net.AbstractPlainSocketImpl.connectToAddress(AbstractPlainSocketImpl.java:218)
        at java.net.AbstractPlainSocketImpl.connect(AbstractPlainSocketImpl.java:200)
        at java.net.PlainSocketImpl.connect(PlainSocketImpl.java:162)
        at java.net.SocksSocketImpl.connect(SocksSocketImpl.java:394)
        at java.net.Socket.connect(Socket.java:606)
        at com.facebook.thrift.transport.TSocket.open(TSocket.java:201)
        ... 13 more
    23/06/19 19:57:43 INFO SparkContext: Invoking stop() from shutdown hook

Connecting with the Java client works fine.
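The stack trace fails inside `MetaClient.connect`, so the Spark connector is opening a socket to the meta service at `192.168.11.191:9559`. A Java client session normally goes to graphd instead, which may explain why one path works and the other does not. The sketch below is only a diagnostic idea, not a confirmed fix: it tries a plain TCP connection to each service port from the machine that runs the Spark job. It assumes the default graphd port 9669 and storaged port 9779, which the post does not state; `PortCheck` and `canConnect` are hypothetical helper names.

```scala
import java.net.{InetSocketAddress, Socket}
import scala.util.Try

object PortCheck {
  /** Returns true if a plain TCP connection to host:port succeeds within timeoutMs. */
  def canConnect(host: String, port: Int, timeoutMs: Int = 3000): Boolean = {
    val socket = new Socket()
    try {
      socket.connect(new InetSocketAddress(host, port), timeoutMs)
      true
    } catch {
      // Covers connection refused, timeouts, and unresolved host names.
      case _: java.io.IOException => false
    } finally {
      Try(socket.close())
    }
  }

  def main(args: Array[String]): Unit = {
    val host = "192.168.11.191" // address taken from the post
    // 9559 is the meta port from the post; 9669 and 9779 are assumed defaults
    // for graphd and storaged and may differ in this deployment.
    val ports = Seq("metad" -> 9559, "graphd" -> 9669, "storaged" -> 9779)
    ports.foreach { case (name, port) =>
      val status = if (canConnect(host, port)) "reachable" else "NOT reachable"
      println(s"$name $host:$port is $status")
    }
  }
}
```

If graphd is reachable but metad or storaged is not, it would be worth checking whether the Docker deployment publishes those ports to the host.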

Service status: