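Question template:

- nebula version: 3.5.0

- Deployment: distributed, 3 nodes

- Installation method: RPM

- Used in production: N

- Hardware information

  - Disk: Alibaba Cloud PL0 ESSD

  - CPU and memory: 2 cores, 16 GB (2C16G)

- Detailed description of the problem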

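In our test environment we ran a MATCH path query over an undirected (bidirectional) edge pattern with 10 hops. After the query had been running for a while (10+ seconds), graphd crashed.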

Query executed:

```
MATCH p=(src)-[e:ScheduleTaskRelationship*10]-(dst) where id(src) in [1113869279948709888] RETURN DISTINCT p LIMIT 300
```
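One factor that may explain how fast memory grows is the size of the 10-hop path space. The following rough sketch bounds the number of paths from a single start vertex under two assumptions. First, every vertex is assumed to have exactly `d` incident `ScheduleTaskRelationship` edges, counted in both directions because the pattern is undirected. Second, a path is assumed never to reuse an edge. The `max_paths` function is a hypothetical helper, not NebulaGraph code.

```python
def max_paths(d: int, hops: int) -> int:
    """Rough upper bound on the number of paths with exactly `hops` edges
    starting from one vertex, in a hypothetical graph where every vertex
    has exactly `d` incident edges of the queried type (both directions
    counted, since the pattern is undirected). Assumes a path never reuses
    an edge, so after the first hop the edge just walked in on is excluded,
    leaving at most d - 1 ways to extend the path."""
    if hops == 0:
        return 1
    return d * (d - 1) ** (hops - 1)


# Growth for a 10-hop pattern at a few hypothetical degrees.
for d in (3, 5, 10):
    print(f"d={d}: {max_paths(d, 10):,} paths")
# d=3: 1,536 paths
# d=5: 1,310,720 paths
# d=10: 3,874,204,890 paths
```

Under these assumptions the bound grows by a factor of about d - 1 for each extra hop. Even a modest degree could therefore produce millions of intermediate paths. The real count depends on the actual degree distribution of `ScheduleTaskRelationship` around the start vertex.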

graphd configuration:

```
########## basics ##########
# Whether to run as a daemon process
--daemonize=true
# The file to host the process id
--pid_file=pids/nebula-graphd.pid
# Whether to enable optimizer
--enable_optimizer=true
# The default charset when a space is created
--default_charset=utf8
# The default collate when a space is created
--default_collate=utf8_bin
# Whether to use the configuration obtained from the configuration file
--local_config=true

########## logging ##########
# The directory to host logging files
--log_dir=/alidata2/nebula-graph3/log
# Log level, 0, 1, 2, 3 for INFO, WARNING, ERROR, FATAL respectively
--minloglevel=0
# Verbose log level, 1, 2, 3, 4, the higher the level, the more verbose the logging
--v=3
# Maximum seconds to buffer the log messages
--logbufsecs=0
# Whether to redirect stdout and stderr to separate output files
--redirect_stdout=true
# Destination filename of stdout and stderr, which will also reside in log_dir.
--stdout_log_file=graphd-stdout.log
--stderr_log_file=graphd-stderr.log
# Copy log messages at or above this level to stderr in addition to logfiles. The numbers of severity levels INFO, WARNING, ERROR, and FATAL are 0, 1, 2, and 3, respectively.
--stderrthreshold=2
# Whether logging file names contain a timestamp.
--timestamp_in_logfile_name=true

########## query ##########
# Whether to treat partial success as an error.
# This flag is only used for Read-only access, and Modify access always treats partial success as an error.
--accept_partial_success=false
# Maximum sentence length, unit byte
--max_allowed_query_size=4194304

########## networking ##########
# Comma separated Meta Server Addresses
--meta_server_addrs=172.20.221.57:9559,172.20.221.58:9559,172.20.221.59:9559
# Local IP used to identify the nebula-graphd process.
# Change it to an address other than loopback if the service is distributed or
# will be accessed remotely.
--local_ip=172.20.221.58
# Network device to listen on
--listen_netdev=any
# Port to listen on
--port=9669
# To turn on SO_REUSEPORT or not
--reuse_port=false
# Backlog of the listen socket, adjust this together with net.core.somaxconn
--listen_backlog=1024
# The number of seconds Nebula service waits before closing the idle connections
--client_idle_timeout_secs=28800
# The number of seconds before idle sessions expire
# The range should be in [1, 604800]
--session_idle_timeout_secs=28800
# The number of threads to accept incoming connections
--num_accept_threads=1
# The number of networking IO threads, 0 for # of CPU cores
--num_netio_threads=0
# Max active connections for all networking threads. 0 means no limit.
# Max connections for each networking thread = num_max_connections / num_netio_threads
--num_max_connections=0
# The number of threads to execute user queries, 0 for # of CPU cores
--num_worker_threads=0
# HTTP service ip
--ws_ip=172.20.221.58
# HTTP service port
--ws_http_port=19669
# storage client timeout
--storage_client_timeout_ms=60000
# slow query threshold in us
--slow_query_threshold_us=200000
# Port to listen on Meta with HTTP protocol, it corresponds to ws_http_port in metad's configuration file
--ws_meta_http_port=19559

########## authentication ##########
# Enable authorization
--enable_authorize=false
# User login authentication type, password for nebula authentication, ldap for ldap authentication, cloud for cloud authentication
--auth_type=password

########## memory ##########
# System memory high watermark ratio, memory checking is disabled when the ratio is greater than 1.0
--system_memory_high_watermark_ratio=0.9

########## metrics ##########
--enable_space_level_metrics=true

########## experimental feature ##########
# Whether to use experimental features
--enable_experimental_feature=true
# Whether to use the data balance feature, only works if enable_experimental_feature is true
--enable_data_balance=true
# Enable UDF, written in C++ only for now
--enable_udf=false
# Set the directory where the .so files of UDF are stored, when enable_udf is true
--udf_path=/alidata2/nebula-graph3/udf/

########## session ##########
# Maximum number of sessions that can be created per IP and per user
--max_sessions_per_ip_per_user=500

########## memory tracker ##########
# trackable memory ratio (trackable_memory / (total_memory - untracked_reserved_memory) )
--memory_tracker_limit_ratio=0.8
# untracked reserved memory in MiB
--memory_tracker_untracked_reserved_memory_mb=50
# enable log memory tracker stats periodically
--memory_tracker_detail_log=true
# log memory tracker stats interval in milliseconds
--memory_tracker_detail_log_interval_ms=60000
# enable memory background purge (if jemalloc is used)
--memory_purge_enabled=true
# memory background purge interval in seconds
--memory_purge_interval_seconds=10

########## performance optimization ##########
# The max job size in multi job mode
--max_job_size=1
# The min batch size for handling dataset in multi job mode, only enabled when max_job_size is greater than 1
--min_batch_size=8192
# if true, return directly without going through RPC
--optimize_appendvertices=false
# number of paths constructed by each thread
--path_batch_size=10000
```

graphd INFO log:

```
I20230627 13:36:45.297482 5080 ThriftClientManager-inl.h:47] Getting a client to "172.20.221.57":9559
I20230627 13:36:45.297497 5080 MetaClient.cpp:730] Send request to meta "172.20.221.57":9559
I20230627 13:36:45.298338 5080 MetaClient.cpp:2680] Metad last update time: 1687844119609
I20230627 13:36:54.377835 5120 Acceptor.cpp:476] Acceptor=0x7f6db1874528 onEmpty()
I20230627 13:36:54.377879 5120 Acceptor.cpp:476] Acceptor=0x7f6db1874528 onEmpty()
I20230627 13:36:54.378486 5120 Acceptor.cpp:476] Acceptor=0x7f6db1874528 onEmpty()
I20230627 13:36:55.308418 5129 MetaClient.cpp:2662] Send heartbeat to "172.20.221.57":9559, clusterId 0
I20230627 13:36:55.308517 5120 ThriftClientManager-inl.h:47] Getting a client to "172.20.221.57":9559
I20230627 13:36:55.308532 5120 MetaClient.cpp:730] Send request to meta "172.20.221.57":9559
I20230627 13:36:55.309391 5120 MetaClient.cpp:2680] Metad last update time: 1687844119609
I20230627 13:37:04.249007 5080 Acceptor.cpp:476] Acceptor=0x7f6db1874028 onEmpty()
I20230627 13:37:04.249070 5080 Acceptor.cpp:476] Acceptor=0x7f6db1874028 onEmpty()
I20230627 13:37:04.253031 5080 Acceptor.cpp:476] Acceptor=0x7f6db1874028 onEmpty()
I20230627 13:37:05.318737 5129 MetaClient.cpp:2662] Send heartbeat to "172.20.221.57":9559, clusterId 0
I20230627 13:37:05.318833 5080 ThriftClientManager-inl.h:47] Getting a client to "172.20.221.57":9559
I20230627 13:37:05.318846 5080 MetaClient.cpp:730] Send request to meta "172.20.221.57":9559
I20230627 13:37:05.319660 5080 MetaClient.cpp:2680] Metad last update time: 1687844119609
I20230627 13:37:15.329375 5129 MetaClient.cpp:2662] Send heartbeat to "172.20.221.57":9559, clusterId 0
I20230627 13:37:15.329465 5120 ThriftClientManager-inl.h:47] Getting a client to "172.20.221.57":9559
I20230627 13:37:15.329480 5120 MetaClient.cpp:730] Send request to meta "172.20.221.57":9559
I20230627 13:37:15.330266 5120 MetaClient.cpp:2680] Metad last update time: 1687844119609
I20230627 13:37:18.544281 5120 Acceptor.cpp:476] Acceptor=0x7f6db1874528 onEmpty()
I20230627 13:37:18.544342 5120 Acceptor.cpp:476] Acceptor=0x7f6db1874528 onEmpty()
I20230627 13:37:18.590086 5120 Acceptor.cpp:476] Acceptor=0x7f6db1874528 onEmpty()
I20230627 13:37:18.612643 5080 Acceptor.cpp:476] Acceptor=0x7f6db1874028 onEmpty()
I20230627 13:37:18.612695 5080 Acceptor.cpp:476] Acceptor=0x7f6db1874028 onEmpty()
I20230627 13:37:18.638947 5080 Acceptor.cpp:476] Acceptor=0x7f6db1874028 onEmpty()
I20230627 13:37:25.340356 5129 MetaClient.cpp:2662] Send heartbeat to "172.20.221.57":9559, clusterId 0
I20230627 13:37:25.340485 5080 ThriftClientManager-inl.h:47] Getting a client to "172.20.221.57":9559
I20230627 13:37:25.340504 5080 MetaClient.cpp:730] Send request to meta "172.20.221.57":9559
I20230627 13:37:25.341346 5080 MetaClient.cpp:2680] Metad last update time: 1687844119609
I20230627 13:37:28.028215 5120 Acceptor.cpp:476] Acceptor=0x7f6db1874528 onEmpty()
I20230627 13:37:28.028281 5120 Acceptor.cpp:476] Acceptor=0x7f6db1874528 onEmpty()
I20230627 13:37:28.055213 5069 GraphService.cpp:77] Authenticating user root from 192.168.28.30:56540
I20230627 13:37:28.055370 5120 ThriftClientManager-inl.h:47] Getting a client to "172.20.221.57":9559
I20230627 13:37:28.055392 5120 MetaClient.cpp:730] Send request to meta "172.20.221.57":9559
I20230627 13:37:28.056380 5069 GraphSessionManager.cpp:139] Create session id: 1687844272165390, for user: root
I20230627 13:37:28.056427 5069 GraphService.cpp:111] Create session doFinish
I20230627 13:37:28.087116 5070 GraphSessionManager.cpp:40] Find session from cache: 1687844272165390
I20230627 13:37:28.087208 5070 ClientSession.cpp:43] Add query: USE data_asset_10022, epId: 0
I20230627 13:37:28.087226 5070 QueryInstance.cpp:80] Parsing query: USE data_asset_10022
I20230627 13:37:28.087379 5070 Symbols.cpp:48] New variable for: __Start_0
I20230627 13:37:28.087397 5070 PlanNode.cpp:27] New variable: __Start_0
I20230627 13:37:28.087441 5070 Symbols.cpp:48] New variable for: __RegisterSpaceToSession_1
I20230627 13:37:28.087450 5070 PlanNode.cpp:27] New variable: __RegisterSpaceToSession_1
I20230627 13:37:28.087466 5070 Validator.cpp:409] root: RegisterSpaceToSession tail: Start
I20230627 13:37:28.087786 5080 ThriftClientManager-inl.h:47] Getting a client to "172.20.221.57":9559
I20230627 13:37:28.087805 5080 MetaClient.cpp:730] Send request to meta "172.20.221.57":9559
I20230627 13:37:28.088167 5069 SwitchSpaceExecutor.cpp:45] Graph switched to `data_asset_10022', space id: 127
I20230627 13:37:28.088194 5069 QueryInstance.cpp:128] Finish query: USE data_asset_10022
I20230627 13:37:28.088249 5069 StatsManager.cpp:159] Registered histogram query_latency_us{space=data_asset_10022} [bucketSize: 1000, min value: 0, max value: 2000]
I20230627 13:37:28.088264 5069 ClientSession.cpp:52] Delete query, epId: 0
I20230627 13:37:28.102105 5070 GraphSessionManager.cpp:40] Find session from cache: 1687844272165390
I20230627 13:37:28.102183 5070 StatsManager.cpp:107] Registered stats num_queries{space=data_asset_10022}
I20230627 13:37:28.102208 5070 StatsManager.cpp:107] Registered stats num_active_queries{space=data_asset_10022}
I20230627 13:37:28.102223 5070 ClientSession.cpp:43] Add query: MATCH p=(src)-[e:ScheduleTaskRelationship*10]-(dst) where id(src) in [1113869279948709888] RETURN DISTINCT p LIMIT 300, epId: 1
I20230627 13:37:28.102232 5070 QueryInstance.cpp:80] Parsing query: MATCH p=(src)-[e:ScheduleTaskRelationship*10]-(dst) where id(src) in [1113869279948709888] RETURN DISTINCT p LIMIT 300
I20230627 13:37:28.102499 5070 StatsManager.cpp:107] Registered stats num_sentences{space=data_asset_10022}
I20230627 13:37:28.102524 5070 Symbols.cpp:48] New variable for: __Start_0
I20230627 13:37:28.102537 5070 PlanNode.cpp:27] New variable: __Start_0
I20230627 13:37:28.102552 5070 Validator.cpp:350] Space chosen, name: data_asset_10022 id: 127
I20230627 13:37:28.102717 5070 Symbols.cpp:48] New variable for: __VAR_0
I20230627 13:37:28.102731 5070 AnonVarGenerator.h:28] Build anon var: __VAR_0
I20230627 13:37:28.102749 5070 Symbols.cpp:48] New variable for: __PassThrough_1
I20230627 13:37:28.102762 5070 PlanNode.cpp:27] New variable: __PassThrough_1
I20230627 13:37:28.102774 5070 Symbols.cpp:48] New variable for: __Dedup_2
I20230627 13:37:28.102782 5070 PlanNode.cpp:27] New variable: __Dedup_2
I20230627 13:37:28.102797 5070 MatchPathPlanner.cpp:126] Find starts: 0, Pattern has 1 edges, root: __Dedup_2, colNames: _vid
I20230627 13:37:28.102809 5070 Symbols.cpp:48] New variable for: __Start_3
I20230627 13:37:28.102815 5070 PlanNode.cpp:27] New variable: __Start_3
I20230627 13:37:28.102830 5070 Symbols.cpp:48] New variable for: __Traverse_4
I20230627 13:37:28.102838 5070 PlanNode.cpp:27] New variable: __Traverse_4
I20230627 13:37:28.102991 5070 ServerBasedSchemaManager.cpp:68] Get Edge Schema Space 127, EdgeType 150, Version -1
I20230627 13:37:28.103017 5070 Symbols.cpp:48] New variable for: __AppendVertices_5
I20230627 13:37:28.103037 5070 PlanNode.cpp:27] New variable: __AppendVertices_5
I20230627 13:37:28.103166 5070 Symbols.cpp:48] New variable for: __Project_6
I20230627 13:37:28.103178 5070 PlanNode.cpp:27] New variable: __Project_6
I20230627 13:37:28.103204 5070 Symbols.cpp:48] New variable for: __Project_7
I20230627 13:37:28.103220 5070 PlanNode.cpp:27] New variable: __Project_7
I20230627 13:37:28.103232 5070 Symbols.cpp:48] New variable for: __Dedup_8
I20230627 13:37:28.103240 5070 PlanNode.cpp:27] New variable: __Dedup_8
I20230627 13:37:28.103255 5070 Symbols.cpp:48] New variable for: __Limit_9
I20230627 13:37:28.103261 5070 PlanNode.cpp:27] New variable: __Limit_9
I20230627 13:37:28.103281 5070 ReturnClausePlanner.cpp:52] return root: __Limit_9 colNames: p
I20230627 13:37:28.103292 5070 MatchPlanner.cpp:172] root(Limit_9): __Limit_9, tail(Start_3): __Start_3
I20230627 13:37:28.103304 5070 Validator.cpp:409] root: Limit tail: Start
I20230627 13:37:28.103313 5070 Validator.cpp:409] root: Limit tail: Start
I20230627 13:37:28.103390 5070 Symbols.cpp:48] New variable for: __Project_10
I20230627 13:37:28.103402 5070 PlanNode.cpp:27] New variable: __Project_10
I20230627 13:37:28.103574 5070 StatsManager.cpp:159] Registered histogram optimizer_latency_us{space=data_asset_10022} [bucketSize: 1000, min value: 0, max value: 2000]
I20230627 13:37:28.104048 5120 ThriftClientManager-inl.h:53] There is no existing client to "172.20.221.57":9779, trying to create one
I20230627 13:37:28.104074 5120 ThriftClientManager-inl.h:74] Connecting to "172.20.221.57":9779 for 1 times
I20230627 13:37:28.108047 5080 ThriftClientManager-inl.h:53] There is no existing client to "172.20.221.59":9779, trying to create one
I20230627 13:37:28.108075 5080 ThriftClientManager-inl.h:74] Connecting to "172.20.221.59":9779 for 1 times
I20230627 13:37:28.108608 5080 ThriftClientManager-inl.h:53] There is no existing client to "172.20.221.58":9779, trying to create one
I20230627 13:37:28.108656 5080 ThriftClientManager-inl.h:74] Connecting to "172.20.221.58":9779 for 2 times
I20230627 13:37:28.109315 5120 ThriftClientManager-inl.h:47] Getting a client to "172.20.221.57":9779
I20230627 13:37:28.149472 5120 ThriftClientManager-inl.h:47] Getting a client to "172.20.221.57":9779
I20230627 13:37:28.149734 5080 ThriftClientManager-inl.h:47] Getting a client to "172.20.221.59":9779
I20230627 13:37:28.149968 5120 ThriftClientManager-inl.h:53] There is no existing client to "172.20.221.58":9779, trying to create one
I20230627 13:37:28.149988 5120 ThriftClientManager-inl.h:74] Connecting to "172.20.221.58":9779 for 2 times
I20230627 13:37:28.174249 5080 ThriftClientManager-inl.h:47] Getting a client to "172.20.221.58":9779
I20230627 13:37:28.174695 5120 ThriftClientManager-inl.h:47] Getting a client to "172.20.221.57":9779
I20230627 13:37:28.185066 5080 ThriftClientManager-inl.h:47] Getting a client to "172.20.221.59":9779
I20230627 13:37:28.208976 5120 ThriftClientManager-inl.h:47] Getting a client to "172.20.221.57":9779
I20230627 13:37:28.209187 5080 ThriftClientManager-inl.h:47] Getting a client to "172.20.221.58":9779
I20230627 13:37:28.209724 5120 ThriftClientManager-inl.h:53] There is no existing client to "172.20.221.59":9779, trying to create one
I20230627 13:37:28.209753 5120 ThriftClientManager-inl.h:74] Connecting to "172.20.221.59":9779 for 3 times
I20230627 13:37:28.220980 5080 ThriftClientManager-inl.h:47] Getting a client to "172.20.221.58":9779
I20230627 13:37:28.221318 5120 ThriftClientManager-inl.h:47] Getting a client to "172.20.221.57":9779
I20230627 13:37:28.221410 5080 ThriftClientManager-inl.h:47] Getting a client to "172.20.221.59":9779
I20230627 13:37:28.225270 5120 ThriftClientManager-inl.h:47] Getting a client to "172.20.221.59":9779
I20230627 13:37:28.225446 5080 ThriftClientManager-inl.h:53] There is no existing client to "172.20.221.57":9779, trying to create one
I20230627 13:37:28.225466 5080 ThriftClientManager-inl.h:74] Connecting to "172.20.221.57":9779 for 3 times
I20230627 13:37:28.225734 5120 ThriftClientManager-inl.h:47] Getting a client to "172.20.221.58":9779
I20230627 13:37:35.350646 5129 MetaClient.cpp:2662] Send heartbeat to "172.20.221.57":9559, clusterId 0
I20230627 13:37:35.350788 5080 ThriftClientManager-inl.h:47] Getting a client to "172.20.221.57":9559
I20230627 13:37:35.350805 5080 MetaClient.cpp:730] Send request to meta "172.20.221.57":9559
I20230627 13:37:35.351648 5080 MetaClient.cpp:2680] Metad last update time: 1687844119609
I20230627 13:37:44.538403 5132 MemoryUtils.cpp:227] sys:12.870GiB/15.250GiB 84.39% usr:11.681GiB/12.161GiB 96.05%
I20230627 13:37:44.682644 5130 GraphSessionManager.cpp:199] Try to reclaim expired sessions out of 2 ones
I20230627 13:37:44.682714 5130 GraphSessionManager.cpp:205] SessionId: 1687844272165390, idleSecs: 17
I20230627 13:37:44.682724 5130 GraphSessionManager.cpp:205] SessionId: 1687836180124875, idleSecs: 180
I20230627 13:37:44.682732 5130 GraphSessionManager.cpp:240] Add Update session id: 1687844272165390
I20230627 13:37:44.682746 5130 GraphSessionManager.cpp:240] Add Update session id: 1687836180124875
I20230627 13:37:44.682858 5120 ThriftClientManager-inl.h:47] Getting a client to "172.20.221.57":9559
I20230627 13:37:44.682878 5120 MetaClient.cpp:730] Send request to meta "172.20.221.57":9559
I20230627 13:37:45.360337 5129 MetaClient.cpp:2662] Send heartbeat to "172.20.221.57":9559, clusterId 0
I20230627 13:37:45.360476 5080 ThriftClientManager-inl.h:47] Getting a client to "172.20.221.57":9559
I20230627 13:37:45.360492 5080 MetaClient.cpp:730] Send request to meta "172.20.221.57":9559
I20230627 13:37:45.361410 5080 MetaClient.cpp:2680] Metad last update time: 1687844119609
```
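The `MemoryUtils.cpp` line logged at 13:37:44 can be checked against the memory tracker flags in the configuration. The following Python sketch makes two assumptions. First, it assumes the limit is computed exactly as the `memory_tracker_limit_ratio` comment states, that is, trackable = (total - reserved) x ratio. Second, it takes total memory as the 15.250 GiB that graphd reports. The `trackable_limit_gib` function is a hypothetical helper, not NebulaGraph code. Under these assumptions the sketch reproduces the logged 12.161 GiB limit and both percentages.

```python
GIB_IN_MIB = 1024


def trackable_limit_gib(total_gib: float, reserved_mb: float, limit_ratio: float) -> float:
    """Memory tracker limit implied by the config comment (assumed formula):
    limit_ratio = trackable_memory / (total_memory - untracked_reserved_memory)."""
    total_mib = total_gib * GIB_IN_MIB
    return (total_mib - reserved_mb) * limit_ratio / GIB_IN_MIB


# Values taken from the graphd config and from the MemoryUtils.cpp log line.
limit = trackable_limit_gib(total_gib=15.250, reserved_mb=50, limit_ratio=0.8)
print(f"tracked limit: {limit:.3f} GiB")    # tracked limit: 12.161 GiB
print(f"usr usage: {11.681 / limit:.2%}")   # usr usage: 96.05%
print(f"sys usage: {12.870 / 15.250:.2%}")  # sys usage: 84.39%
```

If this reading is correct, tracked memory had already reached about 96% of its limit roughly 16 seconds after the MATCH query was parsed (13:37:28.102). At the same moment, overall system usage (84.39%) was still below `system_memory_high_watermark_ratio=0.9`.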

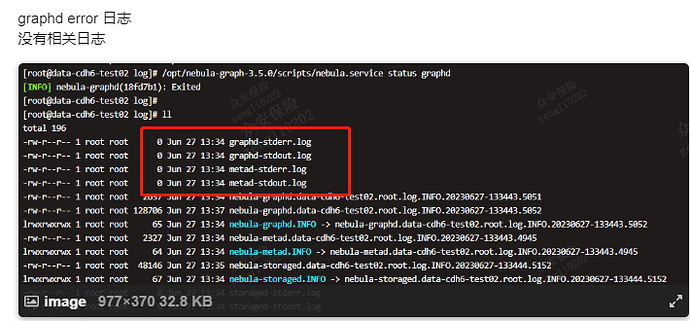

graphd ERROR log:

No relevant entries.

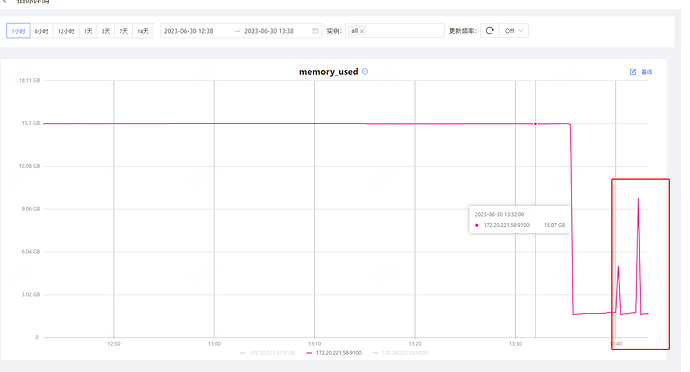

Dashboard: node memory watermark

Dashboard: CPU watermark