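Hi, following the data and the importer Docker usage you described above, I reproduced your import locally and it completes normally. My current guess is that no data was read on your side because you previously pointed the error-data output (failDataPath) and the original data file at the same path, which emptied the data file. Please check whether your 400w.csv and entity.csv still contain data.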
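A quick way to test this guess is to check each data file against its error-output path. The sketch below is only an illustration: `check_import_paths` is a hypothetical helper name (not part of nebula-importer), and it assumes the two arguments are a data file and the `failDataPath` configured for it.

```shell
# Hypothetical helper (not part of nebula-importer): sanity-check one
# data file and the failDataPath configured for it.
check_import_paths() {
  data_path="$1"
  fail_path="$2"

  # Same string, or two names for the same existing file.
  if [ "$data_path" = "$fail_path" ] || [ "$data_path" -ef "$fail_path" ]; then
    echo "ERROR: failDataPath points at the data file itself: $data_path"
    return 1
  fi

  # -s is true only when the file exists and has a size greater than zero.
  if [ ! -s "$data_path" ]; then
    echo "ERROR: data file is missing or empty: $data_path"
    return 1
  fi

  echo "OK: $data_path is non-empty and separate from $fail_path"
}
```

For the files in this thread that would be, for example, `check_import_paths /home/bigdata/zw/400w.csv /home/bigdata/zw/err/400w.csv`, and the same again for entity.csv.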

My local reproduction went as follows:

Nebula was deployed with nebula-docker-compose. Startup sequence:

$ docker-compose up -d

Creating network "yee-nebula-docker-compose_nebula-net" with the default driver

Creating yee-nebula-docker-compose_metad0_1 ... done

Creating yee-nebula-docker-compose_metad2_1 ... done

Creating yee-nebula-docker-compose_metad1_1 ... done

Creating yee-nebula-docker-compose_storaged2_1 ... done

Creating yee-nebula-docker-compose_storaged1_1 ... done

Creating yee-nebula-docker-compose_graphd2_1 ... done

Creating yee-nebula-docker-compose_storaged0_1 ... done

Creating yee-nebula-docker-compose_graphd0_1 ... done

Creating yee-nebula-docker-compose_graphd1_1 ... done

$ docker-compose ps

Name Command State Ports

----------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------

yee-nebula-docker-compose_graphd0_1 ./bin/nebula-graphd --flag ... Up (health: starting) 0.0.0.0:33949->13000/tcp, 0.0.0.0:33946->13002/tcp, 0.0.0.0:33952->3699/tcp

yee-nebula-docker-compose_graphd1_1 ./bin/nebula-graphd --flag ... Up (health: starting) 0.0.0.0:33950->13000/tcp, 0.0.0.0:33947->13002/tcp, 0.0.0.0:33954->3699/tcp

yee-nebula-docker-compose_graphd2_1 ./bin/nebula-graphd --flag ... Up (health: starting) 0.0.0.0:33955->13000/tcp, 0.0.0.0:33951->13002/tcp, 0.0.0.0:33958->3699/tcp

yee-nebula-docker-compose_metad0_1 ./bin/nebula-metad --flagf ... Up (health: starting) 0.0.0.0:33943->11000/tcp, 0.0.0.0:33941->11002/tcp, 0.0.0.0:45500->45500/tcp, 45501/tcp

yee-nebula-docker-compose_metad1_1 ./bin/nebula-metad --flagf ... Up (health: starting) 0.0.0.0:33944->11000/tcp, 0.0.0.0:33942->11002/tcp, 0.0.0.0:45501->45500/tcp, 45501/tcp

yee-nebula-docker-compose_metad2_1 ./bin/nebula-metad --flagf ... Up (health: starting) 0.0.0.0:33940->11000/tcp, 0.0.0.0:33939->11002/tcp, 0.0.0.0:45502->45500/tcp, 45501/tcp

yee-nebula-docker-compose_storaged0_1 ./bin/nebula-storaged --fl ... Up (health: starting) 0.0.0.0:33959->12000/tcp, 0.0.0.0:33956->12002/tcp, 0.0.0.0:44500->44500/tcp, 44501/tcp

yee-nebula-docker-compose_storaged1_1 ./bin/nebula-storaged --fl ... Up (health: starting) 0.0.0.0:33957->12000/tcp, 0.0.0.0:33953->12002/tcp, 0.0.0.0:44501->44500/tcp, 44501/tcp

yee-nebula-docker-compose_storaged2_1 ./bin/nebula-storaged --fl ... Up (health: starting) 0.0.0.0:33948->12000/tcp, 0.0.0.0:33945->12002/tcp, 0.0.0.0:44502->44500/tcp, 44501/tcp

$ docker network ls

NETWORK ID NAME DRIVER SCOPE

bcb651378eaf bridge bridge local

f4de8a27ecb2 docker-compose_nebula-net bridge local

21962cfccea4 host host local

b9a54fac4504 nebula-docker-compose_nebula-net bridge local

d62080e487f8 none null local

8e4a5f236380 yee-nebula-docker-compose_nebula-net bridge local

Create the space with nebula-console:

$ docker run --rm -ti --net yee-nebula-docker-compose_nebula-net vesoft/nebula-console:nightly -u root -p nebula --addr 172.28.3.1 --port 3699

Welcome to Nebula Graph (Version 2b22c91)

(root@nebula) [(none)]> SHOW SPACES

========

| Name |

========

| nba |

--------

Got 1 rows (Time spent: 4.246/4.762 ms)

Mon Nov 9 03:00:19 2020

(root@nebula) [(none)]> create space b400w(partition_num=1);

Execution succeeded (Time spent: 6.296/6.904 ms)

Mon Nov 9 03:00:40 2020

(root@nebula) [(none)]> SHOW SPACES

=========

| Name |

=========

| nba |

---------

| b400w |

---------

Got 2 rows (Time spent: 618/1295 us)

Mon Nov 9 03:03:52 2020

The importer configuration file, conf.yaml:

    version: v1
    description: example
    removeTempFiles: false
    clientSettings:
      retry: 3
      concurrency: 10
      channelBufferSize: 128
      space: b400w
      connection:
        user: user
        password: password
        address: 127.0.0.1:33952
      postStart:
        commands: |
          use b400w;
          UPDATE CONFIGS storage:wal_ttl=3600;
          UPDATE CONFIGS storage:rocksdb_column_family_options = { disable_auto_compactions = true };
          CREATE TAG IF NOT EXISTS a(entity string);
          CREATE EDGE IF NOT EXISTS b(relation string);
        afterPeriod: 8s
      preStop:
        commands: |
          UPDATE CONFIGS storage:wal_ttl=86400;
          UPDATE CONFIGS storage:rocksdb_column_family_options = { disable_auto_compactions = false };
    logPath: /home/bigdata/zw/err/test.log
    files:
      - path: /home/bigdata/zw/entity.csv
        failDataPath: /home/bigdata/zw/err/entity.csv
        batchSize: 128
        inOrder: false
        type: csv
        csv:
          withHeader: false
          withLabel: false
          delimiter: ","
        schema:
          type: vertex
          vertex:
            vid:
              index: 0
              function: hash
            tags:
              - name: a
                props:
                  - name: entity
                    type: string
                    index: 0
      - path: /home/bigdata/zw/400w.csv
        failDataPath: /home/bigdata/zw/err/400w.csv
        batchSize: 128
        inOrder: false
        type: csv
        csv:
          withHeader: false
          withLabel: false
          delimiter: ","
        schema:
          type: edge
          edge:
            name: b
            srcVID:
              index: 0
              function: hash
            dstVID:
              index: 2
              function: hash
            props:
              - name: relation
                type: string
                index: 1
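Note that in this configuration every `failDataPath` sits under a separate `err/` directory, so it can never overwrite its source file. To check another config for the collision described at the top, a rough sketch could scan it line by line. `find_path_collisions` is a hypothetical name, and the scan assumes each `path:` line appears before its `failDataPath:` line and that paths are unquoted and contain no spaces.

```shell
# Hypothetical helper (not part of nebula-importer): report every entry in an
# importer config whose failDataPath is identical to its path.
# Assumes each "path:" line comes before its "failDataPath:" line and that
# paths are unquoted and contain no spaces.
find_path_collisions() {
  awk '
    BEGIN { bad = 0 }
    $1 == "-" && $2 == "path:" { path = $3; next }
    $1 == "path:"              { path = $2; next }
    $1 == "failDataPath:" && path != "" && $2 == path {
      print "collision: " path
      bad = 1
    }
    END { exit bad }
  ' "$1"
}
```

Running `find_path_collisions conf.yaml` prints one `collision:` line per offending entry and returns a non-zero status if any were found. Because it splits on whitespace rather than parsing YAML, it is only a quick screen, not a validator.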

The two data files are as follows:

entity.csv

$ cat entity.csv

实体

词条

400w.csv

$ cat 400w.csv

实体,属性,值

词条,标签,文化

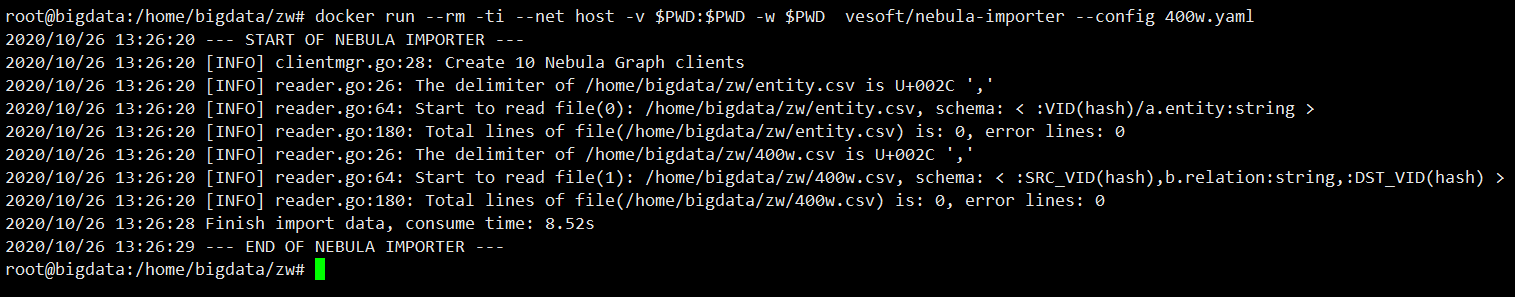

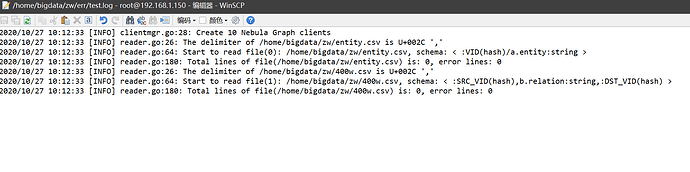

The import run printed the following:

$ docker run --rm -ti --net host -v $PWD:/home/bigdata/zw -w /home/bigdata/zw vesoft/nebula-importer --config conf.yml

2020/11/09 03:03:35 --- START OF NEBULA IMPORTER ---

2020/11/09 03:03:35 [INFO] clientmgr.go:28: Create 10 Nebula Graph clients

2020/11/09 03:03:35 [INFO] reader.go:26: The delimiter of /home/bigdata/zw/entity.csv is U+002C ','

2020/11/09 03:03:35 [INFO] reader.go:64: Start to read file(0): /home/bigdata/zw/entity.csv, schema: < :VID(hash)/a.entity:string >

2020/11/09 03:03:35 [INFO] reader.go:26: The delimiter of /home/bigdata/zw/400w.csv is U+002C ','

2020/11/09 03:03:35 [INFO] reader.go:64: Start to read file(1): /home/bigdata/zw/400w.csv, schema: < :SRC_VID(hash),b.relation:string,:DST_VID(hash) >

2020/11/09 03:03:35 [INFO] reader.go:180: Total lines of file(/home/bigdata/zw/entity.csv) is: 2, error lines: 0

2020/11/09 03:03:35 [INFO] reader.go:180: Total lines of file(/home/bigdata/zw/400w.csv) is: 2, error lines: 0

2020/11/09 03:03:43 [INFO] statsmgr.go:61: Done(/home/bigdata/zw/entity.csv): Time(8.02s), Finished(2), Failed(0), Latency AVG(7889us), Batches Req AVG(8349us), Rows AVG(0.25/s)

2020/11/09 03:03:43 [INFO] statsmgr.go:61: Done(/home/bigdata/zw/400w.csv): Time(8.03s), Finished(4), Failed(0), Latency AVG(5981us), Batches Req AVG(6332us), Rows AVG(0.50/s)

2020/11/09 03:03:44 Finish import data, consume time: 8.53s

2020/11/09 03:03:45 --- END OF NEBULA IMPORTER ---

The output above shows that the 4 rows were imported successfully, and they can also be found by querying in the console:

(root@nebula) [b400w]> fetch prop on * hash('实体')

==================================

| VertexID | a.entity |

==================================

| -201035082963479683 | 实体 |

----------------------------------

Got 1 rows (Time spent: 4.63/5.239 ms)

Mon Nov 9 03:05:20 2020
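When comparing your run with mine, the `Total lines of file(...)` entries in the importer output are probably the quickest place to see whether a file was read at all: a total of 0 would suggest the file was already empty. The sketch below extracts just those entries. `summarize_import_log` is a hypothetical name, and it assumes the log file written to `logPath` uses the same wording as the console output shown earlier in this post.

```shell
# Hypothetical helper: pull the per-file read counts out of an importer log.
# Relies on the "Total lines of file(...) is: N, error lines: M" wording
# seen in the importer output earlier in this post.
summarize_import_log() {
  sed -n 's/.*Total lines of file(\(.*\)) is: \([0-9][0-9]*\), error lines: \([0-9][0-9]*\).*/\1 total=\2 errors=\3/p' "$1"
}
```

For example, `summarize_import_log /home/bigdata/zw/err/test.log` would print one line per data file in the form `<path> total=<N> errors=<M>`.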

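Comparing your steps against the process above, can you spot where the problem is? You're also welcome to post any follow-up feedback here.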
