Running spark-submit with local[16] throws an error. Could you take a look? I'm not very familiar with Spark or HDFS. @nicole

    spark-submit --class com.vesoft.nebula.tools.importer.Exchange --master "local[16]" exchange-1.1.0.jar -c xxxx.conf

It looks like the error is caused by a failed HDFS filesystem operation.

First, open the HDFS web UI on port 50070 and check whether the cluster is healthy.

Then check the Hadoop logs; if there are any ERROR entries, please post them.

Take a look at this post I wrote earlier and see whether it helps.

Hi, could you post your HDFS and Spark configuration? I see you used local[4] without errors, so I suspect something is wrong with my Hadoop configuration.

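I checked the NameNode log and found that the files the import writes under the tmp directory are being closed repeatedly by different tasks.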

2020-12-11 10:01:47,278 INFO BlockStateChange: BLOCK* BlockManager: ask xx.xx.xx.xx:50010 to delete [blk_1073741968_1144, blk_1073741972_1148, blk_1073741973_1149, blk_1073741974_1150, blk_1073741975_1151, blk_1073741976_1152, blk_1073741977_1153, blk_1073741978_1154, blk_1073741979_1155, blk_1073741965_1141]

2020-12-11 10:02:37,020 INFO org.apache.hadoop.hdfs.StateChange: BLOCK* allocate blk_1073741980_1156{UCState=UNDER_CONSTRUCTION, truncateBlock=null, primaryNodeIndex=-1, replicas=[ReplicaUC[[DISK]DS-66ecb9d2-3aec-4e26-9d39-e4d1f89fc771:NORMAL:xx.xx.xx.xx:50010|RBW]]} for /tmp/test/idCard.0

2020-12-11 10:02:37,127 INFO BlockStateChange: BLOCK* addStoredBlock: blockMap updated: xx.xx.xx.xx:50010 is added to blk_1073741980_1156{UCState=UNDER_CONSTRUCTION, truncateBlock=null, primaryNodeIndex=-1, replicas=[ReplicaUC[[DISK]DS-66ecb9d2-3aec-4e26-9d39-e4d1f89fc771:NORMAL:xx.xx.xx.xx:50010|RBW]]} size 0

2020-12-11 10:02:37,131 INFO org.apache.hadoop.hdfs.StateChange: DIR* completeFile: /tmp/test/idCard.0 is closed by DFSClient_NONMAPREDUCE_-1318956742_80

2020-12-11 10:02:37,895 INFO BlockStateChange: BLOCK* addToInvalidates: blk_1073741980_1156 xx.xx.xx.xx:50010

2020-12-11 10:02:37,896 INFO org.apache.hadoop.hdfs.StateChange: BLOCK* allocate blk_1073741981_1157{UCState=UNDER_CONSTRUCTION, truncateBlock=null, primaryNodeIndex=-1, replicas=[ReplicaUC[[DISK]DS-66ecb9d2-3aec-4e26-9d39-e4d1f89fc771:NORMAL:xx.xx.xx.xx:50010|RBW]]} for /tmp/test/idCard.3

2020-12-11 10:02:37,897 INFO org.apache.hadoop.hdfs.StateChange: BLOCK* allocate blk_1073741982_1158{UCState=UNDER_CONSTRUCTION, truncateBlock=null, primaryNodeIndex=-1, replicas=[ReplicaUC[[DISK]DS-66ecb9d2-3aec-4e26-9d39-e4d1f89fc771:NORMAL:xx.xx.xx.xx:50010|RBW]]} for /tmp/test/idCard.0

2020-12-11 10:02:37,901 INFO org.apache.hadoop.hdfs.StateChange: BLOCK* allocate blk_1073741983_1159{UCState=UNDER_CONSTRUCTION, truncateBlock=null, primaryNodeIndex=-1, replicas=[ReplicaUC[[DISK]DS-66ecb9d2-3aec-4e26-9d39-e4d1f89fc771:NORMAL:xx.xx.xx.xx:50010|RBW]]} for /tmp/test/idCard.6

2020-12-11 10:02:37,904 INFO org.apache.hadoop.hdfs.StateChange: BLOCK* allocate blk_1073741984_1160{UCState=UNDER_CONSTRUCTION, truncateBlock=null, primaryNodeIndex=-1, replicas=[ReplicaUC[[DISK]DS-66ecb9d2-3aec-4e26-9d39-e4d1f89fc771:NORMAL:xx.xx.xx.xx:50010|RBW]]} for /tmp/test/idCard.5

2020-12-11 10:02:37,906 INFO BlockStateChange: BLOCK* addStoredBlock: blockMap updated: xx.xx.xx.xx:50010 is added to blk_1073741981_1157{UCState=UNDER_CONSTRUCTION, truncateBlock=null, primaryNodeIndex=-1, replicas=[ReplicaUC[[DISK]DS-66ecb9d2-3aec-4e26-9d39-e4d1f89fc771:NORMAL:xx.xx.xx.xx:50010|RBW]]} size 0

2020-12-11 10:02:37,907 INFO org.apache.hadoop.hdfs.StateChange: DIR* completeFile: /tmp/test/idCard.3 is closed by DFSClient_NONMAPREDUCE_-1318956742_80

2020-12-11 10:02:37,907 INFO org.apache.hadoop.hdfs.StateChange: BLOCK* allocate blk_1073741985_1161{UCState=UNDER_CONSTRUCTION, truncateBlock=null, primaryNodeIndex=-1, replicas=[ReplicaUC[[DISK]DS-66ecb9d2-3aec-4e26-9d39-e4d1f89fc771:NORMAL:xx.xx.xx.xx:50010|RBW]]} for /tmp/test/idCard.9

2020-12-11 10:02:37,907 INFO BlockStateChange: BLOCK* addStoredBlock: blockMap updated: xx.xx.xx.xx:50010 is added to blk_1073741982_1158{UCState=UNDER_CONSTRUCTION, truncateBlock=null, primaryNodeIndex=-1, replicas=[ReplicaUC[[DISK]DS-66ecb9d2-3aec-4e26-9d39-e4d1f89fc771:NORMAL:xx.xx.xx.xx:50010|RBW]]} size 0

2020-12-11 10:02:37,909 INFO org.apache.hadoop.hdfs.StateChange: DIR* completeFile: /tmp/test/idCard.0 is closed by DFSClient_NONMAPREDUCE_-1318956742_80

2020-12-11 10:02:37,913 INFO BlockStateChange: BLOCK* addStoredBlock: blockMap updated: xx.xx.xx.xx:50010 is added to blk_1073741984_1160{UCState=UNDER_CONSTRUCTION, truncateBlock=null, primaryNodeIndex=-1, replicas=[ReplicaUC[[DISK]DS-66ecb9d2-3aec-4e26-9d39-e4d1f89fc771:NORMAL:xx.xx.xx.xx:50010|RBW]]} size 0

2020-12-11 10:02:37,913 INFO BlockStateChange: BLOCK* addStoredBlock: blockMap updated: xx.xx.xx.xx:50010 is added to blk_1073741983_1159{UCState=UNDER_CONSTRUCTION, truncateBlock=null, primaryNodeIndex=-1, replicas=[ReplicaUC[[DISK]DS-66ecb9d2-3aec-4e26-9d39-e4d1f89fc771:NORMAL:xx.xx.xx.xx:50010|RBW]]} size 0

2020-12-11 10:02:37,915 INFO org.apache.hadoop.hdfs.StateChange: DIR* completeFile: /tmp/test/idCard.5 is closed by DFSClient_NONMAPREDUCE_-1318956742_80

2020-12-11 10:02:37,916 INFO org.apache.hadoop.hdfs.StateChange: DIR* completeFile: /tmp/test/idCard.6 is closed by DFSClient_NONMAPREDUCE_-1318956742_80

2020-12-11 10:02:37,916 INFO BlockStateChange: BLOCK* addStoredBlock: blockMap updated: xx.xx.xx.xx:50010 is added to blk_1073741985_1161{UCState=UNDER_CONSTRUCTION, truncateBlock=null, primaryNodeIndex=-1, replicas=[ReplicaUC[[DISK]DS-66ecb9d2-3aec-4e26-9d39-e4d1f89fc771:NORMAL:xx.xx.xx.xx:50010|RBW]]} size 0

2020-12-11 10:02:37,919 INFO org.apache.hadoop.hdfs.StateChange: BLOCK* allocate blk_1073741986_1162{UCState=UNDER_CONSTRUCTION, truncateBlock=null, primaryNodeIndex=-1, replicas=[ReplicaUC[[DISK]DS-66ecb9d2-3aec-4e26-9d39-e4d1f89fc771:NORMAL:xx.xx.xx.xx:50010|RBW]]} for /tmp/test/idCard.8

2020-12-11 10:02:37,919 INFO org.apache.hadoop.hdfs.StateChange: DIR* completeFile: /tmp/test/idCard.9 is closed by DFSClient_NONMAPREDUCE_-1318956742_80

2020-12-11 10:02:37,919 INFO org.apache.hadoop.hdfs.StateChange: BLOCK* allocate blk_1073741987_1163{UCState=UNDER_CONSTRUCTION, truncateBlock=null, primaryNodeIndex=-1, replicas=[ReplicaUC[[DISK]DS-66ecb9d2-3aec-4e26-9d39-e4d1f89fc771:NORMAL:xx.xx.xx.xx:50010|RBW]]} for /tmp/test/idCard.1

2020-12-11 10:02:37,919 INFO org.apache.hadoop.hdfs.StateChange: BLOCK* allocate blk_1073741988_1164{UCState=UNDER_CONSTRUCTION, truncateBlock=null, primaryNodeIndex=-1, replicas=[ReplicaUC[[DISK]DS-66ecb9d2-3aec-4e26-9d39-e4d1f89fc771:NORMAL:xx.xx.xx.xx:50010|RBW]]} for /tmp/test/idCard.2

2020-12-11 10:02:37,919 INFO org.apache.hadoop.hdfs.StateChange: BLOCK* allocate blk_1073741989_1165{UCState=UNDER_CONSTRUCTION, truncateBlock=null, primaryNodeIndex=-1, replicas=[ReplicaUC[[DISK]DS-66ecb9d2-3aec-4e26-9d39-e4d1f89fc771:NORMAL:xx.xx.xx.xx:50010|RBW]]} for /tmp/test/idCard.7

2020-12-11 10:02:37,923 INFO org.apache.hadoop.hdfs.StateChange: BLOCK* allocate blk_1073741990_1166{UCState=UNDER_CONSTRUCTION, truncateBlock=null, primaryNodeIndex=-1, replicas=[ReplicaUC[[DISK]DS-66ecb9d2-3aec-4e26-9d39-e4d1f89fc771:NORMAL:xx.xx.xx.xx:50010|RBW]]} for /tmp/test/idCard.4

2020-12-11 10:02:37,929 INFO BlockStateChange: BLOCK* addStoredBlock: blockMap updated: xx.xx.xx.xx:50010 is added to blk_1073741988_1164{UCState=UNDER_CONSTRUCTION, truncateBlock=null, primaryNodeIndex=-1, replicas=[ReplicaUC[[DISK]DS-66ecb9d2-3aec-4e26-9d39-e4d1f89fc771:NORMAL:xx.xx.xx.xx:50010|RBW]]} size 0

2020-12-11 10:02:37,930 INFO BlockStateChange: BLOCK* addStoredBlock: blockMap updated: xx.xx.xx.xx:50010 is added to blk_1073741989_1165{UCState=UNDER_CONSTRUCTION, truncateBlock=null, primaryNodeIndex=-1, replicas=[ReplicaUC[[DISK]DS-66ecb9d2-3aec-4e26-9d39-e4d1f89fc771:NORMAL:xx.xx.xx.xx:50010|RBW]]} size 0

2020-12-11 10:02:37,930 INFO org.apache.hadoop.hdfs.StateChange: DIR* completeFile: /tmp/test/idCard.2 is closed by DFSClient_NONMAPREDUCE_-1318956742_80

2020-12-11 10:02:37,930 INFO BlockStateChange: BLOCK* addStoredBlock: blockMap updated: xx.xx.xx.xx:50010 is added to blk_1073741987_1163{UCState=UNDER_CONSTRUCTION, truncateBlock=null, primaryNodeIndex=-1, replicas=[ReplicaUC[[DISK]DS-66ecb9d2-3aec-4e26-9d39-e4d1f89fc771:NORMAL:xx.xx.xx.xx:50010|RBW]]} size 0

2020-12-11 10:02:37,931 INFO BlockStateChange: BLOCK* addStoredBlock: blockMap updated: xx.xx.xx.xx:50010 is added to blk_1073741986_1162{UCState=UNDER_CONSTRUCTION, truncateBlock=null, primaryNodeIndex=-1, replicas=[ReplicaUC[[DISK]DS-66ecb9d2-3aec-4e26-9d39-e4d1f89fc771:NORMAL:xx.xx.xx.xx:50010|RBW]]} size 0

2020-12-11 10:02:37,932 INFO org.apache.hadoop.hdfs.StateChange: DIR* completeFile: /tmp/test/idCard.7 is closed by DFSClient_NONMAPREDUCE_-1318956742_80

2020-12-11 10:02:37,933 INFO org.apache.hadoop.hdfs.StateChange: DIR* completeFile: /tmp/test/idCard.1 is closed by DFSClient_NONMAPREDUCE_-1318956742_80

2020-12-11 10:02:37,933 INFO org.apache.hadoop.hdfs.StateChange: DIR* completeFile: /tmp/test/idCard.8 is closed by DFSClient_NONMAPREDUCE_-1318956742_80

2020-12-11 10:02:37,933 INFO BlockStateChange: BLOCK* addStoredBlock: blockMap updated: xx.xx.xx.xx:50010 is added to blk_1073741990_1166{UCState=UNDER_CONSTRUCTION, truncateBlock=null, primaryNodeIndex=-1, replicas=[ReplicaUC[[DISK]DS-66ecb9d2-3aec-4e26-9d39-e4d1f89fc771:NORMAL:xx.xx.xx.xx:50010|RBW]]} size 0

2020-12-11 10:02:37,934 INFO org.apache.hadoop.hdfs.StateChange: DIR* completeFile: /tmp/test/idCard.4 is closed by DFSClient_NONMAPREDUCE_-1318956742_80

2020-12-11 10:02:38,281 INFO BlockStateChange: BLOCK* BlockManager: ask xx.xx.xx.xx:50010 to delete [blk_1073741980_1156]

2020-12-11 10:02:41,145 INFO BlockStateChange: BLOCK* addToInvalidates: blk_1073741987_1163 xx.xx.xx.xx:50010

2020-12-11 10:02:41,147 INFO org.apache.hadoop.hdfs.StateChange: BLOCK* allocate blk_1073741991_1167{UCState=UNDER_CONSTRUCTION, truncateBlock=null, primaryNodeIndex=-1, replicas=[ReplicaUC[[DISK]DS-66ecb9d2-3aec-4e26-9d39-e4d1f89fc771:NORMAL:xx.xx.xx.xx:50010|RBW]]} for /tmp/test/idCard.1

2020-12-11 10:02:41,150 INFO BlockStateChange: BLOCK* addToInvalidates: blk_1073741989_1165 xx.xx.xx.xx:50010

2020-12-11 10:02:41,150 INFO BlockStateChange: BLOCK* addToInvalidates: blk_1073741990_1166 xx.xx.xx.xx:50010

2020-12-11 10:02:41,153 INFO org.apache.hadoop.hdfs.StateChange: BLOCK* allocate blk_1073741992_1168{UCState=UNDER_CONSTRUCTION, truncateBlock=null, primaryNodeIndex=-1, replicas=[ReplicaUC[[DISK]DS-66ecb9d2-3aec-4e26-9d39-e4d1f89fc771:NORMAL:xx.xx.xx.xx:50010|RBW]]} for /tmp/test/idCard.7

2020-12-11 10:02:41,153 INFO org.apache.hadoop.hdfs.StateChange: BLOCK* allocate blk_1073741993_1169{UCState=UNDER_CONSTRUCTION, truncateBlock=null, primaryNodeIndex=-1, replicas=[ReplicaUC[[DISK]DS-66ecb9d2-3aec-4e26-9d39-e4d1f89fc771:NORMAL:xx.xx.xx.xx:50010|RBW]]} for /tmp/test/idCard.4

2020-12-11 10:02:41,154 INFO BlockStateChange: BLOCK* addStoredBlock: blockMap updated: xx.xx.xx.xx:50010 is added to blk_1073741991_1167{UCState=UNDER_CONSTRUCTION, truncateBlock=null, primaryNodeIndex=-1, replicas=[ReplicaUC[[DISK]DS-66ecb9d2-3aec-4e26-9d39-e4d1f89fc771:NORMAL:xx.xx.xx.xx:50010|RBW]]} size 0

2020-12-11 10:02:41,155 INFO org.apache.hadoop.hdfs.StateChange: DIR* completeFile: /tmp/test/idCard.1 is closed by DFSClient_NONMAPREDUCE_-1318956742_80

2020-12-11 10:02:41,161 INFO BlockStateChange: BLOCK* addStoredBlock: blockMap updated: xx.xx.xx.xx:50010 is added to blk_1073741992_1168{UCState=UNDER_CONSTRUCTION, truncateBlock=null, primaryNodeIndex=-1, replicas=[ReplicaUC[[DISK]DS-66ecb9d2-3aec-4e26-9d39-e4d1f89fc771:NORMAL:xx.xx.xx.xx:50010|RBW]]} size 0

2020-12-11 10:02:41,162 INFO org.apache.hadoop.hdfs.StateChange: DIR* completeFile: /tmp/test/idCard.7 is closed by DFSClient_NONMAPREDUCE_-1318956742_80

2020-12-11 10:02:41,162 INFO org.apache.hadoop.hdfs.server.namenode.FSNamesystem: BLOCK* blk_1073741993_1169{UCState=COMMITTED, truncateBlock=null, primaryNodeIndex=-1, replicas=[ReplicaUC[[DISK]DS-66ecb9d2-3aec-4e26-9d39-e4d1f89fc771:NORMAL:xx.xx.xx.xx:50010|RBW]]} is not COMPLETE (ucState = COMMITTED, replication# = 0 < minimum = 1) in file /tmp/test/idCard.4

2020-12-11 10:02:41,162 INFO BlockStateChange: BLOCK* addStoredBlock: blockMap updated: xx.xx.xx.xx:50010 is added to blk_1073741993_1169{UCState=COMMITTED, truncateBlock=null, primaryNodeIndex=-1, replicas=[ReplicaUC[[DISK]DS-66ecb9d2-3aec-4e26-9d39-e4d1f89fc771:NORMAL:xx.xx.xx.xx:50010|RBW]]} size 5

2020-12-11 10:02:41,163 INFO BlockStateChange: BLOCK* addToInvalidates: blk_1073741984_1160 xx.xx.xx.xx:50010

2020-12-11 10:02:41,164 INFO org.apache.hadoop.hdfs.StateChange: BLOCK* allocate blk_1073741994_1170{UCState=UNDER_CONSTRUCTION, truncateBlock=null, primaryNodeIndex=-1, replicas=[ReplicaUC[[DISK]DS-66ecb9d2-3aec-4e26-9d39-e4d1f89fc771:NORMAL:xx.xx.xx.xx:50010|RBW]]} for /tmp/test/idCard.5

2020-12-11 10:02:41,169 INFO BlockStateChange: BLOCK* addToInvalidates: blk_1073741988_1164 xx.xx.xx.xx:50010

2020-12-11 10:02:41,169 INFO BlockStateChange: BLOCK* addToInvalidates: blk_1073741983_1159 xx.xx.xx.xx:50010

2020-12-11 10:02:41,172 INFO BlockStateChange: BLOCK* addStoredBlock: blockMap updated: xx.xx.xx.xx:50010 is added to blk_1073741994_1170{UCState=UNDER_CONSTRUCTION, truncateBlock=null, primaryNodeIndex=-1, replicas=[ReplicaUC[[DISK]DS-66ecb9d2-3aec-4e26-9d39-e4d1f89fc771:NORMAL:xx.xx.xx.xx:50010|RBW]]} size 0

2020-12-11 10:02:41,174 INFO org.apache.hadoop.hdfs.StateChange: DIR* completeFile: /tmp/test/idCard.5 is closed by DFSClient_NONMAPREDUCE_-1318956742_80

2020-12-11 10:02:41,174 INFO org.apache.hadoop.hdfs.StateChange: BLOCK* allocate blk_1073741995_1171{UCState=UNDER_CONSTRUCTION, truncateBlock=null, primaryNodeIndex=-1, replicas=[ReplicaUC[[DISK]DS-66ecb9d2-3aec-4e26-9d39-e4d1f89fc771:NORMAL:xx.xx.xx.xx:50010|RBW]]} for /tmp/test/idCard.2

2020-12-11 10:02:41,176 INFO org.apache.hadoop.hdfs.StateChange: BLOCK* allocate blk_1073741996_1172{UCState=UNDER_CONSTRUCTION, truncateBlock=null, primaryNodeIndex=-1, replicas=[ReplicaUC[[DISK]DS-66ecb9d2-3aec-4e26-9d39-e4d1f89fc771:NORMAL:xx.xx.xx.xx:50010|RBW]]} for /tmp/test/idCard.6

2020-12-11 10:02:41,177 INFO BlockStateChange: BLOCK* addToInvalidates: blk_1073741985_1161 xx.xx.xx.xx:50010

2020-12-11 10:02:41,177 INFO BlockStateChange: BLOCK* addToInvalidates: blk_1073741982_1158 xx.xx.xx.xx:50010

2020-12-11 10:02:41,181 INFO BlockStateChange: BLOCK* addStoredBlock: blockMap updated: xx.xx.xx.xx:50010 is added to blk_1073741995_1171{UCState=UNDER_CONSTRUCTION, truncateBlock=null, primaryNodeIndex=-1, replicas=[ReplicaUC[[DISK]DS-66ecb9d2-3aec-4e26-9d39-e4d1f89fc771:NORMAL:xx.xx.xx.xx:50010|RBW]]} size 0

2020-12-11 10:02:41,181 INFO BlockStateChange: BLOCK* addToInvalidates: blk_1073741981_1157 xx.xx.xx.xx:50010

2020-12-11 10:02:41,182 INFO BlockStateChange: BLOCK* addToInvalidates: blk_1073741986_1162 xx.xx.xx.xx:50010

2020-12-11 10:02:41,182 INFO org.apache.hadoop.hdfs.StateChange: BLOCK* allocate blk_1073741997_1173{UCState=UNDER_CONSTRUCTION, truncateBlock=null, primaryNodeIndex=-1, replicas=[ReplicaUC[[DISK]DS-66ecb9d2-3aec-4e26-9d39-e4d1f89fc771:NORMAL:xx.xx.xx.xx:50010|RBW]]} for /tmp/test/idCard.0

2020-12-11 10:02:41,182 INFO org.apache.hadoop.hdfs.StateChange: BLOCK* allocate blk_1073741998_1174{UCState=UNDER_CONSTRUCTION, truncateBlock=null, primaryNodeIndex=-1, replicas=[ReplicaUC[[DISK]DS-66ecb9d2-3aec-4e26-9d39-e4d1f89fc771:NORMAL:xx.xx.xx.xx:50010|RBW]]} for /tmp/test/idCard.9

2020-12-11 10:02:41,183 INFO BlockStateChange: BLOCK* addStoredBlock: blockMap updated: xx.xx.xx.xx:50010 is added to blk_1073741996_1172{UCState=UNDER_CONSTRUCTION, truncateBlock=null, primaryNodeIndex=-1, replicas=[ReplicaUC[[DISK]DS-66ecb9d2-3aec-4e26-9d39-e4d1f89fc771:NORMAL:xx.xx.xx.xx:50010|RBW]]} size 0

2020-12-11 10:02:41,183 INFO org.apache.hadoop.hdfs.StateChange: DIR* completeFile: /tmp/test/idCard.2 is closed by DFSClient_NONMAPREDUCE_-1318956742_80

2020-12-11 10:02:41,183 INFO org.apache.hadoop.hdfs.StateChange: BLOCK* allocate blk_1073741999_1175{UCState=UNDER_CONSTRUCTION, truncateBlock=null, primaryNodeIndex=-1, replicas=[ReplicaUC[[DISK]DS-66ecb9d2-3aec-4e26-9d39-e4d1f89fc771:NORMAL:xx.xx.xx.xx:50010|RBW]]} for /tmp/test/idCard.3

2020-12-11 10:02:41,184 INFO org.apache.hadoop.hdfs.StateChange: DIR* completeFile: /tmp/test/idCard.6 is closed by DFSClient_NONMAPREDUCE_-1318956742_80

2020-12-11 10:02:41,184 INFO org.apache.hadoop.hdfs.StateChange: BLOCK* allocate blk_1073742000_1176{UCState=UNDER_CONSTRUCTION, truncateBlock=null, primaryNodeIndex=-1, replicas=[ReplicaUC[[DISK]DS-66ecb9d2-3aec-4e26-9d39-e4d1f89fc771:NORMAL:xx.xx.xx.xx:50010|RBW]]} for /tmp/test/idCard.8

2020-12-11 10:02:41,190 INFO BlockStateChange: BLOCK* addStoredBlock: blockMap updated: xx.xx.xx.xx:50010 is added to blk_1073741998_1174{UCState=UNDER_CONSTRUCTION, truncateBlock=null, primaryNodeIndex=-1, replicas=[ReplicaUC[[DISK]DS-66ecb9d2-3aec-4e26-9d39-e4d1f89fc771:NORMAL:xx.xx.xx.xx:50010|RBW]]} size 0

2020-12-11 10:02:41,193 INFO org.apache.hadoop.hdfs.StateChange: DIR* completeFile: /tmp/test/idCard.9 is closed by DFSClient_NONMAPREDUCE_-1318956742_80

2020-12-11 10:02:41,195 INFO BlockStateChange: BLOCK* addStoredBlock: blockMap updated: xx.xx.xx.xx:50010 is added to blk_1073741997_1173{UCState=UNDER_CONSTRUCTION, truncateBlock=null, primaryNodeIndex=-1, replicas=[ReplicaUC[[DISK]DS-66ecb9d2-3aec-4e26-9d39-e4d1f89fc771:NORMAL:xx.xx.xx.xx:50010|RBW]]} size 0

2020-12-11 10:02:41,195 INFO BlockStateChange: BLOCK* addStoredBlock: blockMap updated: xx.xx.xx.xx:50010 is added to blk_1073742000_1176{UCState=UNDER_CONSTRUCTION, truncateBlock=null, primaryNodeIndex=-1, replicas=[ReplicaUC[[DISK]DS-66ecb9d2-3aec-4e26-9d39-e4d1f89fc771:NORMAL:xx.xx.xx.xx:50010|RBW]]} size 0

2020-12-11 10:02:41,195 INFO BlockStateChange: BLOCK* addStoredBlock: blockMap updated: xx.xx.xx.xx:50010 is added to blk_1073741999_1175{UCState=UNDER_CONSTRUCTION, truncateBlock=null, primaryNodeIndex=-1, replicas=[ReplicaUC[[DISK]DS-66ecb9d2-3aec-4e26-9d39-e4d1f89fc771:NORMAL:xx.xx.xx.xx:50010|RBW]]} size 0

2020-12-11 10:02:41,196 INFO org.apache.hadoop.hdfs.StateChange: DIR* completeFile: /tmp/test/idCard.0 is closed by DFSClient_NONMAPREDUCE_-1318956742_80

2020-12-11 10:02:41,196 INFO org.apache.hadoop.hdfs.StateChange: DIR* completeFile: /tmp/test/idCard.3 is closed by DFSClient_NONMAPREDUCE_-1318956742_80

2020-12-11 10:02:41,196 INFO org.apache.hadoop.hdfs.StateChange: DIR* completeFile: /tmp/test/idCard.8 is closed by DFSClient_NONMAPREDUCE_-1318956742_80

2020-12-11 10:02:41,281 INFO BlockStateChange: BLOCK* BlockManager: ask xx.xx.xx.xx:50010 to delete [blk_1073741984_1160, blk_1073741985_1161, blk_1073741986_1162, blk_1073741987_1163, blk_1073741988_1164, blk_1073741989_1165, blk_1073741990_1166, blk_1073741981_1157, blk_1073741982_1158, blk_1073741983_1159]

2020-12-11 10:02:41,563 INFO org.apache.hadoop.hdfs.StateChange: DIR* completeFile: /tmp/test/idCard.4 is closed by DFSClient_NONMAPREDUCE_-1318956742_80

2020-12-11 10:02:46,174 INFO org.apache.hadoop.hdfs.server.namenode.FSEditLog: Number of transactions: 118 Total time for transactions(ms): 4 Number of transactions batched in Syncs: 1 Number of syncs: 75 SyncTimes(ms): 13

2020-12-11 10:02:46,174 INFO BlockStateChange: BLOCK* addToInvalidates: blk_1073741991_1167 xx.xx.xx.xx:50010

2020-12-11 10:02:46,180 INFO org.apache.hadoop.hdfs.StateChange: BLOCK* allocate blk_1073742001_1177{UCState=UNDER_CONSTRUCTION, truncateBlock=null, primaryNodeIndex=-1, replicas=[ReplicaUC[[DISK]DS-66ecb9d2-3aec-4e26-9d39-e4d1f89fc771:NORMAL:xx.xx.xx.xx:50010|RBW]]} for /tmp/test/idCard.1

2020-12-11 10:02:46,189 INFO BlockStateChange: BLOCK* addToInvalidates: blk_1073741992_1168 xx.xx.xx.xx:50010

2020-12-11 10:02:46,190 INFO BlockStateChange: BLOCK* addToInvalidates: blk_1073741994_1170 xx.xx.xx.xx:50010

2020-12-11 10:02:46,191 INFO BlockStateChange: BLOCK* addStoredBlock: blockMap updated: xx.xx.xx.xx:50010 is added to blk_1073742001_1177{UCState=UNDER_CONSTRUCTION, truncateBlock=null, primaryNodeIndex=-1, replicas=[ReplicaUC[[DISK]DS-66ecb9d2-3aec-4e26-9d39-e4d1f89fc771:NORMAL:xx.xx.xx.xx:50010|RBW]]} size 0

2020-12-11 10:02:46,192 INFO org.apache.hadoop.hdfs.StateChange: DIR* completeFile: /tmp/test/idCard.1 is closed by DFSClient_NONMAPREDUCE_-1655340214_1783

2020-12-11 10:02:46,192 INFO org.apache.hadoop.hdfs.StateChange: BLOCK* allocate blk_1073742002_1178{UCState=UNDER_CONSTRUCTION, truncateBlock=null, primaryNodeIndex=-1, replicas=[ReplicaUC[[DISK]DS-66ecb9d2-3aec-4e26-9d39-e4d1f89fc771:NORMAL:xx.xx.xx.xx:50010|RBW]]} for /tmp/test/idCard.7

2020-12-11 10:02:46,193 INFO org.apache.hadoop.hdfs.StateChange: BLOCK* allocate blk_1073742003_1179{UCState=UNDER_CONSTRUCTION, truncateBlock=null, primaryNodeIndex=-1, replicas=[ReplicaUC[[DISK]DS-66ecb9d2-3aec-4e26-9d39-e4d1f89fc771:NORMAL:xx.xx.xx.xx:50010|RBW]]} for /tmp/test/idCard.5

2020-12-11 10:02:46,197 INFO BlockStateChange: BLOCK* addStoredBlock: blockMap updated: xx.xx.xx.xx:50010 is added to blk_1073742002_1178{UCState=UNDER_CONSTRUCTION, truncateBlock=null, primaryNodeIndex=-1, replicas=[ReplicaUC[[DISK]DS-66ecb9d2-3aec-4e26-9d39-e4d1f89fc771:NORMAL:xx.xx.xx.xx:50010|RBW]]} size 0

2020-12-11 10:02:46,198 INFO org.apache.hadoop.hdfs.StateChange: DIR* completeFile: /tmp/test/idCard.7 is closed by DFSClient_NONMAPREDUCE_-1655340214_1783

2020-12-11 10:02:46,199 INFO BlockStateChange: BLOCK* addStoredBlock: blockMap updated: xx.xx.xx.xx:50010 is added to blk_1073742003_1179{UCState=UNDER_CONSTRUCTION, truncateBlock=null, primaryNodeIndex=-1, replicas=[ReplicaUC[[DISK]DS-66ecb9d2-3aec-4e26-9d39-e4d1f89fc771:NORMAL:xx.xx.xx.xx:50010|RBW]]} size 0

2020-12-11 10:02:46,200 INFO org.apache.hadoop.hdfs.StateChange: DIR* completeFile: /tmp/test/idCard.5 is closed by DFSClient_NONMAPREDUCE_-1655340214_1783

2020-12-11 10:02:46,225 INFO BlockStateChange: BLOCK* addToInvalidates: blk_1073741993_1169 xx.xx.xx.xx:50010

2020-12-11 10:02:46,227 INFO BlockStateChange: BLOCK* addToInvalidates: blk_1073741995_1171 xx.xx.xx.xx:50010

2020-12-11 10:02:46,227 INFO org.apache.hadoop.hdfs.StateChange: BLOCK* allocate blk_1073742004_1180{UCState=UNDER_CONSTRUCTION, truncateBlock=null, primaryNodeIndex=-1, replicas=[ReplicaUC[[DISK]DS-66ecb9d2-3aec-4e26-9d39-e4d1f89fc771:NORMAL:xx.xx.xx.xx:50010|RBW]]} for /tmp/test/idCard.4

2020-12-11 10:02:46,229 INFO org.apache.hadoop.hdfs.StateChange: BLOCK* allocate blk_1073742005_1181{UCState=UNDER_CONSTRUCTION, truncateBlock=null, primaryNodeIndex=-1, replicas=[ReplicaUC[[DISK]DS-66ecb9d2-3aec-4e26-9d39-e4d1f89fc771:NORMAL:xx.xx.xx.xx:50010|RBW]]} for /tmp/test/idCard.2

2020-12-11 10:02:46,233 INFO BlockStateChange: BLOCK* addStoredBlock: blockMap updated: xx.xx.xx.xx:50010 is added to blk_1073742005_1181{UCState=UNDER_CONSTRUCTION, truncateBlock=null, primaryNodeIndex=-1, replicas=[ReplicaUC[[DISK]DS-66ecb9d2-3aec-4e26-9d39-e4d1f89fc771:NORMAL:xx.xx.xx.xx:50010|RBW]]} size 0

2020-12-11 10:02:46,234 INFO org.apache.hadoop.hdfs.server.namenode.FSNamesystem: BLOCK* blk_1073742004_1180{UCState=COMMITTED, truncateBlock=null, primaryNodeIndex=-1, replicas=[ReplicaUC[[DISK]DS-66ecb9d2-3aec-4e26-9d39-e4d1f89fc771:NORMAL:xx.xx.xx.xx:50010|RBW]]} is not COMPLETE (ucState = COMMITTED, replication# = 0 < minimum = 1) in file /tmp/test/idCard.4

2020-12-11 10:02:46,234 INFO org.apache.hadoop.hdfs.StateChange: DIR* completeFile: /tmp/test/idCard.2 is closed by DFSClient_NONMAPREDUCE_-1655340214_1783

2020-12-11 10:02:46,234 INFO BlockStateChange: BLOCK* addStoredBlock: blockMap updated: xx.xx.xx.xx:50010 is added to blk_1073742004_1180{UCState=COMMITTED, truncateBlock=null, primaryNodeIndex=-1, replicas=[ReplicaUC[[DISK]DS-66ecb9d2-3aec-4e26-9d39-e4d1f89fc771:NORMAL:xx.xx.xx.xx:50010|RBW]]} size 5

2020-12-11 10:02:46,235 INFO BlockStateChange: BLOCK* addToInvalidates: blk_1073741998_1174 xx.xx.xx.xx:50010

2020-12-11 10:02:46,237 INFO org.apache.hadoop.hdfs.StateChange: BLOCK* allocate blk_1073742006_1182{UCState=UNDER_CONSTRUCTION, truncateBlock=null, primaryNodeIndex=-1, replicas=[ReplicaUC[[DISK]DS-66ecb9d2-3aec-4e26-9d39-e4d1f89fc771:NORMAL:xx.xx.xx.xx:50010|RBW]]} for /tmp/test/idCard.9

2020-12-11 10:02:46,239 INFO BlockStateChange: BLOCK* addToInvalidates: blk_1073742000_1176 xx.xx.xx.xx:50010

2020-12-11 10:02:46,241 INFO org.apache.hadoop.hdfs.StateChange: BLOCK* allocate blk_1073742007_1183{UCState=UNDER_CONSTRUCTION, truncateBlock=null, primaryNodeIndex=-1, replicas=[ReplicaUC[[DISK]DS-66ecb9d2-3aec-4e26-9d39-e4d1f89fc771:NORMAL:xx.xx.xx.xx:50010|RBW]]} for /tmp/test/idCard.8

2020-12-11 10:02:46,242 INFO BlockStateChange: BLOCK* addStoredBlock: blockMap updated: xx.xx.xx.xx:50010 is added to blk_1073742006_1182{UCState=UNDER_CONSTRUCTION, truncateBlock=null, primaryNodeIndex=-1, replicas=[ReplicaUC[[DISK]DS-66ecb9d2-3aec-4e26-9d39-e4d1f89fc771:NORMAL:xx.xx.xx.xx:50010|RBW]]} size 0

2020-12-11 10:02:46,242 INFO org.apache.hadoop.hdfs.StateChange: DIR* completeFile: /tmp/test/idCard.9 is closed by DFSClient_NONMAPREDUCE_-1655340214_1783

2020-12-11 10:02:46,245 INFO BlockStateChange: BLOCK* addStoredBlock: blockMap updated: xx.xx.xx.xx:50010 is added to blk_1073742007_1183{UCState=UNDER_CONSTRUCTION, truncateBlock=null, primaryNodeIndex=-1, replicas=[ReplicaUC[[DISK]DS-66ecb9d2-3aec-4e26-9d39-e4d1f89fc771:NORMAL:xx.xx.xx.xx:50010|RBW]]} size 0

2020-12-11 10:02:46,246 INFO org.apache.hadoop.hdfs.StateChange: DIR* completeFile: /tmp/test/idCard.8 is closed by DFSClient_NONMAPREDUCE_-1655340214_1783

2020-12-11 10:02:46,636 INFO org.apache.hadoop.hdfs.StateChange: DIR* completeFile: /tmp/test/idCard.4 is closed by DFSClient_NONMAPREDUCE_-1655340214_1783

2020-12-11 10:02:47,282 INFO BlockStateChange: BLOCK* BlockManager: ask xx.xx.xx.xx:50010 to delete [blk_1073742000_1176, blk_1073741991_1167, blk_1073741992_1168, blk_1073741993_1169, blk_1073741994_1170, blk_1073741995_1171, blk_1073741998_1174]
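To check the observation above against a full NameNode log rather than by eye, a small script can tally the `completeFile` events per path. This is a minimal sketch that assumes the log line format shown above (`DIR* completeFile: <path> is closed by <client>`); the function names are hypothetical and not part of Hadoop or Exchange.

```python
import re
from collections import defaultdict

# Matches NameNode lines such as:
#   ... DIR* completeFile: /tmp/test/idCard.0 is closed by DFSClient_NONMAPREDUCE_-1318956742_80
COMPLETE_RE = re.compile(r"DIR\* completeFile: (\S+) is closed by (\S+)")


def count_closes(lines):
    """Map each file path to the list of DFSClient IDs that closed it, in log order."""
    closes = defaultdict(list)
    for line in lines:
        match = COMPLETE_RE.search(line)
        if match:
            path, client = match.groups()
            closes[path].append(client)
    return dict(closes)


def repeated_closes(lines):
    """Keep only the paths that were completed (closed) more than once."""
    return {path: clients for path, clients in count_closes(lines).items() if len(clients) > 1}


def report(log_path):
    """Print a one-line summary for each repeatedly closed file in a NameNode log."""
    with open(log_path, encoding="utf-8") as log:
        for path, clients in sorted(repeated_closes(log).items()):
            print(f"{path}: closed {len(clients)} times by {sorted(set(clients))}")
```

Call `report()` with the path to your NameNode log file. On the excerpt posted above, each of `/tmp/test/idCard.0` through `idCard.9` is closed at least twice.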

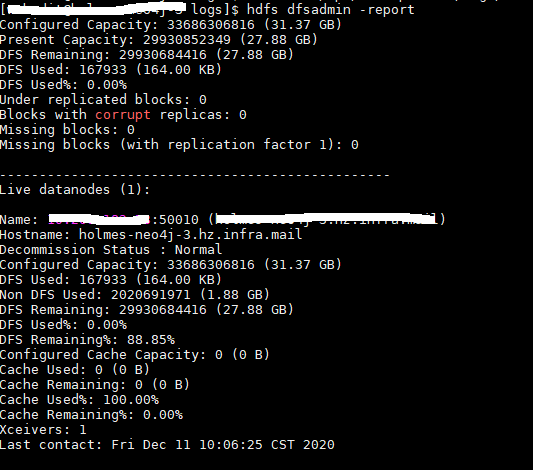

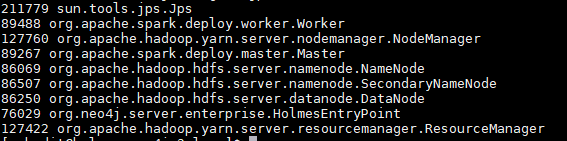

The network is isolated, so I can't open the web UI. Is this enough? It looks normal to me.

jps -l

The HDFS services look fine. My jobs run against spark://master:7077. Try running with just --master local, without specifying the number of cores.

Here is my HDFS configuration. Is there anything I need to add or change?

core-site.xml

    <?xml version="1.0" encoding="UTF-8"?>
    <?xml-stylesheet type="text/xsl" href="configuration.xsl"?>
    <configuration>
      <!-- HDFS location -->
      <property>
        <name>fs.defaultFS</name>
        <value>hdfs://xxx:9000</value>
      </property>
      <!-- where Hadoop stores temporary files generated at runtime -->
      <property>
        <name>hadoop.tmp.dir</name>
        <value>/opt/hadoop-2.7.4/data/tmp</value>
      </property>
    </configuration>

hdfs-site.xml

    <?xml version="1.0" encoding="UTF-8"?>
    <?xml-stylesheet type="text/xsl" href="configuration.xsl"?>
    <configuration>
      <!-- number of HDFS data replicas -->
      <property>
        <name>dfs.replication</name>
        <value>1</value>
      </property>
      <!-- where the NameNode stores the HDFS namespace metadata -->
      <property>
        <name>dfs.namenode.name.dir</name>
        <value>/opt/hadoop-2.7.4/hdfs/name</value>
      </property>
      <!-- physical storage location of data blocks on the DataNode -->
      <property>
        <name>dfs.datanode.data.dir</name>
        <value>/opt/hadoop-2.7.4/hdfs/data</value>
      </property>
    </configuration>

mapred-site.xml

    <configuration>
      <!-- framework MapReduce runs on (default: local mode) -->
      <property>
        <name>mapreduce.framework.name</name>
        <value>yarn</value>
      </property>
      <property>
        <name>mapreduce.app-submission.cross-platform</name>
        <value>true</value>
      </property>
    </configuration>

yarn-site.xml

    <?xml version="1.0"?>
    <configuration>
      <!-- YARN hostname -->
      <property>
        <name>yarn.resourcemanager.hostname</name>
        <value>xxxxxxxx</value>
      </property>
      <!-- YARN Web UI address -->
      <property>
        <name>yarn.resourcemanager.webapp.address</name>
        <value>${yarn.resourcemanager.hostname}:8088</value>
      </property>
      <!-- how reducers fetch data -->
      <property>
        <name>yarn.nodemanager.aux-services</name>
        <value>mapreduce_shuffle</value>
      </property>
      <!-- log aggregation -->
      <property>
        <name>yarn.log-aggregation-enable</name>
        <value>true</value>
      </property>
      <property>
        <name>yarn.nodemanager.log-aggregation.roll-monitoring-interval-seconds</name>
        <value>3600</value>
      </property>
      <property>
        <name>yarn.nodemanager.remote-app-log-dir</name>
        <value>/tmp/logs</value>
      </property>
    </configuration>

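How many DataNodes do you have? Check that they are all healthy. If a DataNode goes down while the data is being processed, you will also get this error.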
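Without the web UI, `hdfs dfsadmin -report` prints the DataNode status on the command line. The sketch below pulls the live and dead counts out of that output. It assumes the Hadoop 2.x report headings `Live datanodes (N):` and `Dead datanodes (N):`, which may differ in other versions; the helper names are hypothetical.

```python
import re
import subprocess

# Assumed Hadoop 2.x headings in `hdfs dfsadmin -report` output, e.g. "Live datanodes (1):"
SECTION_RE = re.compile(r"^(Live|Dead) datanodes \((\d+)\):", re.MULTILINE)


def fetch_report():
    """Run the report on a machine where the `hdfs` CLI is on PATH."""
    result = subprocess.run(["hdfs", "dfsadmin", "-report"],
                            stdout=subprocess.PIPE, universal_newlines=True, check=True)
    return result.stdout


def datanode_counts(report_text):
    """Return {'live': N, 'dead': M}; a section missing from the report counts as 0."""
    counts = {"live": 0, "dead": 0}
    for state, number in SECTION_RE.findall(report_text):
        counts[state.lower()] = int(number)
    return counts


def all_healthy(report_text):
    """True when at least one DataNode is live and none are reported dead."""
    counts = datanode_counts(report_text)
    return counts["live"] > 0 and counts["dead"] == 0
```

On the cluster itself, `all_healthy(fetch_report())` gives a quick yes/no; print `datanode_counts(...)` to see the numbers.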

Without specifying the number of cores (--master local) it works fine. I used 16 because I want more concurrency to speed up the import.

If you don't specify it, the Spark job runs with the default resources (plain `local` means a single worker thread).
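For context: in Spark's local mode the master string decides how many task threads run, and all of them run inside the single driver JVM. Per the Spark documentation, `local` means one thread, `local[K]` means K threads, `local[*]` means one thread per logical core, and `local[K,F]` additionally sets a maximum task-failure count F. The helper below is an illustrative sketch of that mapping, not Spark's own parser.

```python
import os
import re

# local | local[K] | local[*] | local[K,F] | local[*,F]
LOCAL_RE = re.compile(r"^local(?:\[(\*|\d+)(?:,(\d+))?\])?$")


def local_threads(master, cpu_count=None):
    """Number of task threads a Spark local-mode master string asks for.

    All of these threads run inside one JVM (the driver).
    Raises ValueError for non-local masters such as spark://host:7077.
    """
    match = LOCAL_RE.match(master)
    if not match:
        raise ValueError(f"not a local-mode master: {master!r}")
    threads = match.group(1)
    if threads is None:      # plain "local"
        return 1
    if threads == "*":       # one thread per logical core
        return cpu_count or os.cpu_count() or 1
    return int(threads)
```

Plain `local` therefore runs tasks one at a time, while `local[16]` runs up to 16 concurrently in the same JVM.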

How many cores does your machine have?

42 cores and 400 GB of memory.

I did the math: based on the amount of data imported and the I/O before the failure, local[16] is roughly 4x faster than plain local.

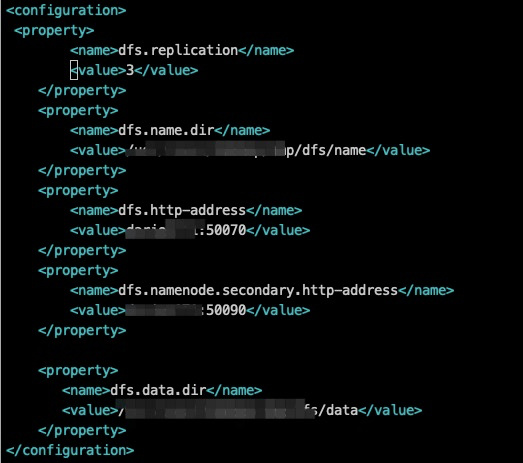

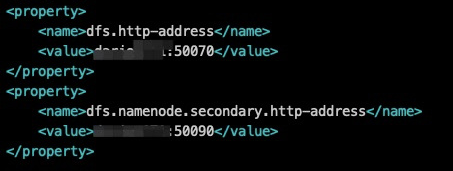

I'll try local[16]. Below is my HDFS configuration; a replication factor of 1 or 3 both work.

I'm missing these two settings, and my replication factor is 1.

Then check your Spark configuration to see whether it limits the maximum number of cores, i.e. the spark.cores.max setting.

Also check the SPARK_EXECUTOR_CORES setting in spark-env.sh.

Those two settings only configure the web UI addresses and don't affect Hadoop's functionality. A replication factor of 1 or 3 is fine.

I haven't set spark.cores.max. From what I found, its default is 1, so that may be the problem; I'll change it and try again.

    export JAVA_HOME=/usr/lib/jvm/java-1.8.0-openjdk-1.8.0.262.b10-0.el7_8.x86_64
    export HADOOP_HOME=/opt/hadoop-2.7.4
    export HADOOP_CONF_DIR=/opt/hadoop-2.7.4/etc/hadoop
    export YARN_CONF_DIR=/opt/hadoop-2.7.4/etc/hadoop
    export SPARK_MASTER_IP=xxx
    export SPARK_LOCAL_IP=xxx
    export SPARK_WORKER_MEMORY=32g
    export SPARK_HOME=/opt/spark-2.3.1

I added the following settings to spark-env.sh, but it still fails.

    export SPARK_EXECUTOR_INSTANCES=16
    export SPARK_EXECUTOR_CORES=2
    export SPARK_EXECUTOR_MEMORY=32G
    export SPARK_DRIVER_MEMORY=32G

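I found the post below. Adding the following property to hdfs-site.xml and restarting HDFS fixed it; I can now set the local concurrency without errors.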

http://arganzheng.life/hadoop-filesystem-closed-exception.html

    <property>
      <name>fs.hdfs.impl.disable.cache</name>
      <value>true</value>
    </property>
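Why this setting helps, based on how Hadoop's FileSystem cache works: `FileSystem.get(uri, conf)` returns a cached instance shared across the whole JVM (keyed by scheme, authority and user). With local[16], all 16 task threads live in one JVM and therefore share one HDFS client, so when one piece of code closes it, the others fail with `java.io.IOException: Filesystem closed`. With `fs.hdfs.impl.disable.cache=true`, each call returns a new instance instead. The Python below is only a toy model of that behaviour; `ToyFileSystem`, `get_fs`, `run_tasks` and the URI are hypothetical, not Hadoop APIs.

```python
class ToyFileSystem:
    """Stand-in for one HDFS client; any write after close() fails."""

    def __init__(self, uri):
        self.uri = uri
        self.closed = False

    def write(self, path):
        if self.closed:
            raise IOError("Filesystem closed")
        return self.uri + path

    def close(self):
        self.closed = True


_CACHE = {}  # toy JVM-wide cache keyed by (uri, user)


def get_fs(uri, user, disable_cache=False):
    """Toy version of FileSystem.get: one shared instance per key unless caching is disabled."""
    if disable_cache:
        return ToyFileSystem(uri)
    key = (uri, user)
    if key not in _CACHE:
        _CACHE[key] = ToyFileSystem(uri)
    return _CACHE[key]


def run_tasks(n_tasks, disable_cache):
    """Simulate n tasks in one process: task 0 finishes first and closes its client,
    then the remaining tasks write. Returns how many of them fail.
    (Steps run sequentially here so the outcome is deterministic.)"""
    _CACHE.clear()
    uri = "hdfs://namenode:9000"  # hypothetical address
    clients = [get_fs(uri, "spark", disable_cache) for _ in range(n_tasks)]
    clients[0].write("/tmp/test/idCard.0")
    clients[0].close()
    failures = 0
    for i, fs in enumerate(clients[1:], start=1):
        try:
            fs.write(f"/tmp/test/idCard.{i}")
        except IOError:
            failures += 1
    return failures
```

Here `run_tasks(16, disable_cache=False)` returns 15 and `run_tasks(16, disable_cache=True)` returns 0. The trade-off is that with the cache disabled every lookup creates a new client, and each caller is responsible for closing its own instance. The property can also be set per job rather than cluster-wide by passing `--conf spark.hadoop.fs.hdfs.impl.disable.cache=true` to spark-submit, since Spark copies `spark.hadoop.*` settings into the Hadoop configuration.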