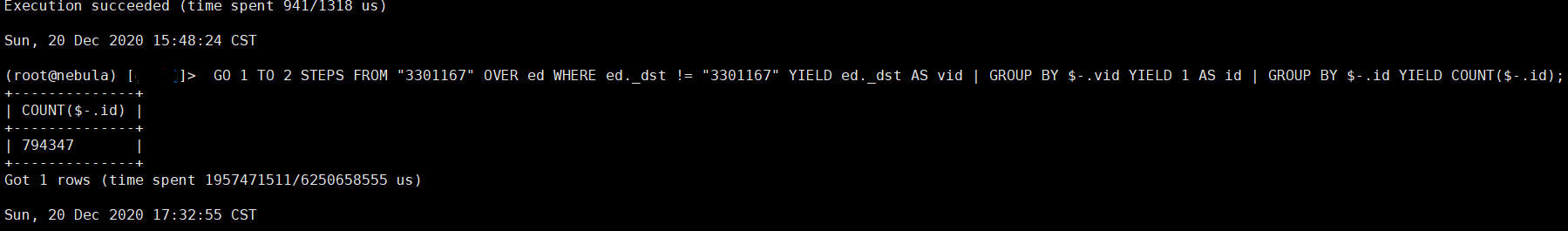

+--------------+

| COUNT($-.id) |

+--------------+

| 794347 |

+--------------+

Got 1 rows (time spent 1510095801/5805052847 us)

Execution Plan

-----+--------------+--------------+-----------------------------------------------------------------------+-------------------------------------------------------------------------------------

| id | name | dependencies | profiling data | operator info |

-----+--------------+--------------+-----------------------------------------------------------------------+-------------------------------------------------------------------------------------

| 9 | Aggregate | 8 | ver: 0, rows: 1, execTime: 78705us, totalTime: 78707us | outputVar: [{"colNames":["COUNT($-.id)"],"name":"__Aggregate_9","type":"DATASET"}] |

| | | | | inputVar: __Aggregate_8 |

| | | | | groupKeys: ["$-.id"] |

| | | | | groupItems: [{"expr":"$-.id","distinct":"false","funcType":1}] |

-----+--------------+--------------+-----------------------------------------------------------------------+-------------------------------------------------------------------------------------

| 8 | Aggregate | 7 | ver: 0, rows: 794347, execTime: 3113652us, totalTime: 3113657us | outputVar: [{"colNames":["id"],"name":"__Aggregate_8","type":"DATASET"}] |

| | | | | inputVar: __DataCollect_7 |

| | | | | groupKeys: ["$-.vid"] |

| | | | | groupItems: [{"expr":"1","distinct":"false","funcType":0}] |

-----+--------------+--------------+-----------------------------------------------------------------------+-------------------------------------------------------------------------------------

| 7 | DataCollect | 6 | ver: 0, rows: 3624132, execTime: 63125us, totalTime: 63130us | outputVar: [{"colNames":["vid"],"name":"__DataCollect_7","type":"DATASET"}] |

| | | | | inputVar: [{"colNames":["vid"],"name":"__Project_5","type":"DATASET"}] |

| | | | | kind: M TO N |

-----+--------------+--------------+-----------------------------------------------------------------------+-------------------------------------------------------------------------------------

| 6 | Loop | 11 | ver: 0, rows: 1, execTime: 9us, totalTime: 10us | outputVar: [{"colNames":[],"name":"__Loop_6","type":"DATASET"}] |

| | | | ver: 1, rows: 1, execTime: 6us, totalTime: 7us | inputVar: |

| | | | ver: 2, rows: 1, execTime: 9us, totalTime: 10us | condition: (++($__VAR_1{0})<=2) |

-----+--------------+--------------+-----------------------------------------------------------------------+-------------------------------------------------------------------------------------

| 5 | Project | 4 | ver: 0, rows: 2691, execTime: 1439us, totalTime: 1441us | branch: true, nodeId: 6 |

| | | | ver: 1, rows: 3621441, execTime: 628263us, totalTime: 628266us | |

| | | | | outputVar: [{"colNames":["vid"],"name":"__Project_5","type":"DATASET"}] |

| | | | | inputVar: __Filter_4 |

| | | | | columns: ed._dst AS vid |

-----+--------------+--------------+-----------------------------------------------------------------------+-------------------------------------------------------------------------------------

| 4 | Filter | 3 | ver: 0, rows: 2691, execTime: 1112us, totalTime: 1113us | outputVar: [{"colNames":["_vid"],"name":"__Filter_4","type":"DATASET"}] |

| | | | ver: 1, rows: 3621441, execTime: 463086us, totalTime: 463088us | inputVar: __GetNeighbors_1 |

| | | | | condition: (ed._dst!=3301167) |

-----+--------------+--------------+-----------------------------------------------------------------------+-------------------------------------------------------------------------------------

| 3 | Dedup | 2 | ver: 0, rows: 2691, execTime: 1456us, totalTime: 1458us | outputVar: [{"colNames":["_vid"],"name":"__VAR_0","type":"DATASET"}] |

| | | | ver: 1, rows: 794070, execTime: 5793673321us, totalTime: 5793673326us | inputVar: __Project_2 |

-----+--------------+--------------+-----------------------------------------------------------------------+-------------------------------------------------------------------------------------

| 2 | Project | 1 | ver: 0, rows: 2691, execTime: 1360us, totalTime: 1362us | outputVar: [{"colNames":["_vid"],"name":"__Project_2","type":"DATASET"}] |

| | | | ver: 1, rows: 3621441, execTime: 558786us, totalTime: 558790us | inputVar: __GetNeighbors_1 |

| | | | | columns: *._dst AS _vid |

-----+--------------+--------------+-----------------------------------------------------------------------+-------------------------------------------------------------------------------------

| 1 | GetNeighbors | 0 | ver: 0, rows: 2691, execTime: 772us, totalTime: 315501us | outputVar: [{"colNames":[],"name":"__GetNeighbors_1","type":"DATASET"}] |

| | | | ver: 1, rows: 3621441, execTime: 231401us, totalTime: 6162265us | inputVar: __VAR_0 |

| | | | | space: 1 |

| | | | | dedup: false |

| | | | | limit: 9223372036854775807 |

| | | | | filter: |

| | | | | orderBy: [] |

| | | | | src: $__VAR_0._vid |

| | | | | edgeTypes: [] |

| | | | | edgeDirection: OUT_EDGE |

| | | | | vertexProps: |

| | | | | edgeProps: [{"props":["_dst"],"type":"3"}] |

| | | | | statProps: |

| | | | | exprs: |

| | | | | random: false |

-----+--------------+--------------+-----------------------------------------------------------------------+-------------------------------------------------------------------------------------

| 0 | Start | | ver: 0, rows: 0, execTime: 0us, totalTime: 19us | outputVar: [{"colNames":[],"name":"__Start_0","type":"DATASET"}] |

| | | | ver: 1, rows: 0, execTime: 1us, totalTime: 63us | |

-----+--------------+--------------+-----------------------------------------------------------------------+-------------------------------------------------------------------------------------

| 11 | Start | | ver: 0, rows: 0, execTime: 1us, totalTime: 75us | outputVar: [{"colNames":[],"name":"__Start_11","type":"DATASET"}] |

-----+--------------+--------------+-----------------------------------------------------------------------+-------------------------------------------------------------------------------------

Wed, 23 Dec 2020 10:16:59 CST

Could you provide a few more clues so that we can try to reproduce this locally?
