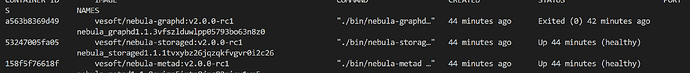

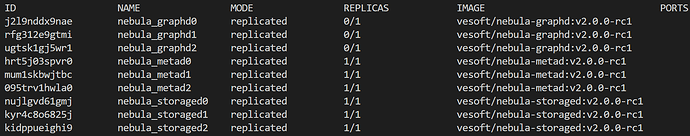

- nebula version: v2.0.0-rc1
- Deployment (distributed / standalone / Docker / DBaaS): Docker Swarm
- Hardware info
  - Disk (must be SSD; HDD is not supported)
  - CPU and memory:

Here is my startup script:

```yaml
version: '3.8'
services:
  metad0:
    image: vesoft/nebula-metad:v2.0.0-rc1
    env_file:
      - ./nebula.env
    command:
      - --meta_server_addrs=9.134.1.150:9559,9.134.3.25:9559,9.134.55.213:9559
      - --local_ip=9.134.1.150
      - --ws_ip=9.134.1.150
      - --port=9559
      - --data_path=/data/meta
      - --log_dir=/logs
      - --v=0
      - --minloglevel=2
    deploy:
      replicas: 1
      restart_policy:
        condition: on-failure
      placement:
        constraints:
          - node.hostname == VM-1-150-centos
    healthcheck:
      test: ["CMD", "curl", "-f", "http://9.134.1.150:19559/status"]
      interval: 30s
      timeout: 10s
      retries: 3
      start_period: 20s
    ports:
      - target: 19559
        published: 19559
        protocol: tcp
        mode: host
      - target: 19560
        published: 19560
        protocol: tcp
        mode: host
      - target: 9559
        published: 9559
        protocol: tcp
        mode: host
    volumes:
      - /data/nebula/data/meta:/data/meta
      - /data/nebula/logs/meta:/logs
    networks:
      - nebula-net
  metad1:
    image: vesoft/nebula-metad:v2.0.0-rc1
    env_file:
      - ./nebula.env
    command:
      - --meta_server_addrs=9.134.1.150:9559,9.134.3.25:9559,9.134.55.213:9559
      - --local_ip=9.134.3.25
      - --ws_ip=9.134.3.25
      - --port=9559
      - --data_path=/data/meta
      - --log_dir=/logs
      - --v=0
      - --minloglevel=2
    deploy:
      replicas: 1
      restart_policy:
        condition: on-failure
      placement:
        constraints:
          - node.hostname == VM-3-25-centos
    healthcheck:
      test: ["CMD", "curl", "-f", "http://9.134.3.25:19559/status"]
      interval: 30s
      timeout: 10s
      retries: 3
      start_period: 20s
    ports:
      - target: 19559
        published: 19559
        protocol: tcp
        mode: host
      - target: 19560
        published: 19560
        protocol: tcp
        mode: host
      - target: 9559
        published: 9559
        protocol: tcp
        mode: host
    volumes:
      - /data/nebula/data/meta:/data/meta
      - /data/nebula/logs/meta:/logs
    networks:
      - nebula-net
  metad2:
    image: vesoft/nebula-metad:v2.0.0-rc1
    env_file:
      - ./nebula.env
    command:
      - --meta_server_addrs=9.134.1.150:9559,9.134.3.25:9559,9.134.55.213:9559
      - --local_ip=9.134.55.213
      - --ws_ip=9.134.55.213
      - --port=9559
      - --data_path=/data/meta
      - --log_dir=/logs
      - --v=0
      - --minloglevel=2
    deploy:
      replicas: 1
      restart_policy:
        condition: on-failure
      placement:
        constraints:
          - node.hostname == VM_55_213_centos
    healthcheck:
      test: ["CMD", "curl", "-f", "http://9.134.55.213:19559/status"]
      interval: 30s
      timeout: 10s
      retries: 3
      start_period: 20s
    ports:
      - target: 19559
        published: 19559
        protocol: tcp
        mode: host
      - target: 19560
        published: 19560
        protocol: tcp
        mode: host
      - target: 9559
        published: 9559
        protocol: tcp
        mode: host
    volumes:
      - /data/nebula/data/meta:/data/meta
      - /data/nebula/logs/meta:/logs
    networks:
      - nebula-net
  storaged0:
    image: vesoft/nebula-storaged:v2.0.0-rc1
    env_file:
      - ./nebula.env
    command:
      - --meta_server_addrs=9.134.1.150:9559,9.134.3.25:9559,9.134.55.213:9559
      - --local_ip=9.134.1.150
      - --ws_ip=9.134.1.150
      - --port=9779
      - --data_path=/data/storaged
      - --log_dir=/logs
      - --v=0
      - --minloglevel=2
      - --raft_rpc_timeout_ms=5000
      - --heartbeat_interval_secs=30
      - --enable_rocksdb_prefix_filtering=true
    deploy:
      replicas: 1
      restart_policy:
        condition: on-failure
      placement:
        constraints:
          - node.hostname == VM-1-150-centos
    depends_on:
      - metad0
      - metad1
      - metad2
    healthcheck:
      test: ["CMD", "curl", "-f", "http://9.134.1.150:19779/status"]
      interval: 30s
      timeout: 10s
      retries: 3
      start_period: 20s
    ports:
      - target: 19779
        published: 19779
        protocol: tcp
        mode: host
      - target: 19780
        published: 19780
        protocol: tcp
        mode: host
      - target: 9779
        published: 9779
        protocol: tcp
        mode: host
    volumes:
      - /data/nebula/data/storaged:/data/storaged
      - /data/nebula/logs/storaged:/logs
    networks:
      - nebula-net
  storaged1:
    image: vesoft/nebula-storaged:v2.0.0-rc1
    env_file:
      - ./nebula.env
    command:
      - --meta_server_addrs=9.134.1.150:9559,9.134.3.25:9559,9.134.55.213:9559
      - --local_ip=9.134.3.25
      - --ws_ip=9.134.3.25
      - --port=9779
      - --data_path=/data/storaged
      - --log_dir=/logs
      - --v=0
      - --minloglevel=2
      - --raft_rpc_timeout_ms=5000
      - --heartbeat_interval_secs=30
      - --enable_rocksdb_prefix_filtering=true
    deploy:
      replicas: 1
      restart_policy:
        condition: on-failure
      placement:
        constraints:
          - node.hostname == VM-3-25-centos
    depends_on:
      - metad0
      - metad1
      - metad2
    healthcheck:
      test: ["CMD", "curl", "-f", "http://9.134.3.25:19779/status"]
      interval: 30s
      timeout: 10s
      retries: 3
      start_period: 20s
    ports:
      - target: 19779
        published: 19779
        protocol: tcp
        mode: host
      - target: 19780
        published: 19780
        protocol: tcp
        mode: host
      - target: 9779
        published: 9779
        protocol: tcp
        mode: host
    volumes:
      - /data/nebula/data/storaged:/data/storaged
      - /data/nebula/logs/storaged:/logs
    networks:
      - nebula-net
  storaged2:
    image: vesoft/nebula-storaged:v2.0.0-rc1
    env_file:
      - ./nebula.env
    command:
      - --meta_server_addrs=9.134.1.150:9559,9.134.3.25:9559,9.134.55.213:9559
      - --local_ip=9.134.55.213
      - --ws_ip=9.134.55.213
      - --port=9779
      - --data_path=/data/storaged
      - --log_dir=/logs
      - --v=0
      - --minloglevel=2
      - --raft_rpc_timeout_ms=5000
      - --heartbeat_interval_secs=30
      - --enable_rocksdb_prefix_filtering=true
    deploy:
      replicas: 1
      restart_policy:
        condition: on-failure
      placement:
        constraints:
          - node.hostname == VM_55_213_centos
    depends_on:
      - metad0
      - metad1
      - metad2
    healthcheck:
      test: ["CMD", "curl", "-f", "http://9.134.55.213:19779/status"]
      interval: 30s
      timeout: 10s
      retries: 3
      start_period: 20s
    ports:
      - target: 19779
        published: 19779
        protocol: tcp
        mode: host
      - target: 19780
        published: 19780
        protocol: tcp
        mode: host
      - target: 9779
        published: 9779
        protocol: tcp
        mode: host
    volumes:
      - /data/nebula/data/storaged:/data/storaged
      - /data/nebula/logs/storaged:/logs
    networks:
      - nebula-net
  graphd0:
    image: vesoft/nebula-graphd:v2.0.0-rc1
    env_file:
      - ./nebula.env
    command:
      - --meta_server_addrs=9.134.1.150:9559,9.134.3.25:9559,9.134.55.213:9559
      - --port=9699
      - --ws_ip=9.134.1.150
      - --log_dir=/logs
      - --v=0
      - --minloglevel=2
    deploy:
      replicas: 1
      restart_policy:
        condition: on-failure
      placement:
        constraints:
          - node.hostname == VM-1-150-centos
    depends_on:
      - metad0
      - metad1
      - metad2
    healthcheck:
      test: ["CMD", "curl", "-f", "http://9.134.1.150:19699/status"]
      interval: 30s
      timeout: 10s
      retries: 3
      start_period: 20s
    ports:
      - target: 9699
        published: 9699
        protocol: tcp
        mode: host
      - target: 19699
        published: 19699
        protocol: tcp
        mode: host
      - target: 19670
        published: 19670
        protocol: tcp
        mode: host
    volumes:
      - /data/nebula/logs/graphd:/logs
    networks:
      - nebula-net
  graphd1:
    image: vesoft/nebula-graphd:v2.0.0-rc1
    env_file:
      - ./nebula.env
    command:
      - --meta_server_addrs=9.134.1.150:9559,9.134.3.25:9559,9.134.55.213:9559
      - --port=9699
      - --ws_ip=9.134.3.25
      - --log_dir=/logs
      - --v=2
      - --minloglevel=2
    deploy:
      replicas: 1
      restart_policy:
        condition: on-failure
      placement:
        constraints:
          - node.hostname == VM-3-25-centos
    depends_on:
      - metad0
      - metad1
      - metad2
    healthcheck:
      test: ["CMD", "curl", "-f", "http://9.134.3.25:19699/status"]
      interval: 30s
      timeout: 10s
      retries: 3
      start_period: 20s
    ports:
      - target: 9699
        published: 9699
        protocol: tcp
        mode: host
      - target: 19699
        published: 19699
        protocol: tcp
        mode: host
      - target: 19670
        published: 19670
        protocol: tcp
        mode: host
    volumes:
      - /data/nebula/logs/graphd:/logs
    networks:
      - nebula-net
  graphd2:
    image: vesoft/nebula-graphd:v2.0.0-rc1
    env_file:
      - ./nebula.env
    command:
      - --meta_server_addrs=9.134.1.150:9559,9.134.3.25:9559,9.134.55.213:9559
      - --port=9699
      - --ws_ip=9.134.55.213
      - --log_dir=/logs
      - --v=0
      - --minloglevel=2
    deploy:
      replicas: 1
      restart_policy:
        condition: on-failure
      placement:
        constraints:
          - node.hostname == VM_55_213_centos
    depends_on:
      - metad0
      - metad1
      - metad2
    healthcheck:
      test: ["CMD", "curl", "-f", "http://9.134.55.213:19699/status"]
      interval: 30s
      timeout: 10s
      retries: 3
      start_period: 20s
    ports:
      - target: 9699
        published: 9699
        protocol: tcp
        mode: host
      - target: 19699
        published: 19699
        protocol: tcp
        mode: host
      - target: 19670
        published: 19670
        protocol: tcp
        mode: host
    volumes:
      - /data/nebula/logs/graphd:/logs
    networks:
      - nebula-net
networks:
  nebula-net:
    external: true
    attachable: true
    name: host
```

The meta and storage services start, but graph fails to start.

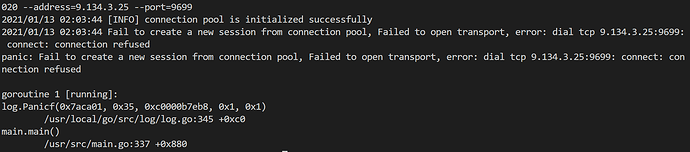
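To see at a glance which of the nine services actually answer, the `healthcheck` commands from the compose file can be replayed from any machine that can reach the nodes. Below is a minimal Python sketch, not an official NebulaGraph tool: the URLs are copied from the `healthcheck.test` entries, and `probe_status` / `probe_all` are hypothetical helper names. It only roughly mimics `curl -f` (for example, urllib follows redirects while plain `curl -f` does not), treating any HTTP error status or connection failure as DOWN.

```python
import urllib.error
import urllib.request
from typing import Dict, Tuple

# Status URLs copied from the healthcheck.test entries of the compose file.
STATUS_URLS = {
    "metad0": "http://9.134.1.150:19559/status",
    "metad1": "http://9.134.3.25:19559/status",
    "metad2": "http://9.134.55.213:19559/status",
    "storaged0": "http://9.134.1.150:19779/status",
    "storaged1": "http://9.134.3.25:19779/status",
    "storaged2": "http://9.134.55.213:19779/status",
    "graphd0": "http://9.134.1.150:19699/status",
    "graphd1": "http://9.134.3.25:19699/status",
    "graphd2": "http://9.134.55.213:19699/status",
}

# Opener with proxies disabled, so requests go straight to each node
# even if http_proxy / https_proxy are set in the environment.
_OPENER = urllib.request.build_opener(urllib.request.ProxyHandler({}))


def probe_status(url: str, timeout: float = 10.0) -> Tuple[bool, str]:
    """Roughly mimic `curl -f URL`: only a successful HTTP response counts as UP."""
    try:
        with _OPENER.open(url, timeout=timeout) as resp:
            return True, f"HTTP {resp.status}"
    except urllib.error.HTTPError as exc:
        # The server answered, but with an error status (curl -f fails too).
        return False, f"HTTP {exc.code}"
    except OSError as exc:
        # URLError (refused, DNS failure) and socket timeouts are OSError subclasses.
        return False, str(getattr(exc, "reason", exc))


def probe_all(urls: Dict[str, str], timeout: float = 10.0) -> Dict[str, Tuple[bool, str]]:
    """Probe every service and return {name: (is_up, detail)}."""
    return {name: probe_status(url, timeout) for name, url in urls.items()}


if __name__ == "__main__":
    for name, (ok, detail) in probe_all(STATUS_URLS).items():
        print(f"{name:10s} {'UP' if ok else 'DOWN'}  {detail}")
```

A DOWN entry with a "Connection refused" reason means nothing is listening on that port on that node, which is a different situation from an HTTP error returned by a running process.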

graph reports the following errors in its log:

```text
E0112 08:07:25.332816 13 MetaClient.cpp:581] Send request to [9.134.3.25:9559], exceed retry limit
E0112 08:07:25.334055 1 MetaClient.cpp:60] Heartbeat failed, status:RPC failure in MetaClient: N6apache6thrift9transport19TTransportExceptionE: AsyncSocketException: connect failed, type = Socket not open, errno = 111 (Connection refused): Connection refused
E0112 08:07:30.348592 14 MetaClient.cpp:581] Send request to [9.134.55.213:9559], exceed retry limit
E0112 08:07:30.348659 1 MetaClient.cpp:60] Heartbeat failed, status:RPC failure in MetaClient: N6apache6thrift9transport19TTransportExceptionE: AsyncSocketException: connect failed, type = Socket not open, errno = 111 (Connection refused): Connection refused
E0112 08:07:35.358232 15 MetaClient.cpp:581] Send request to [9.134.55.213:9559], exceed retry limit
E0112 08:07:35.358297 1 MetaClient.cpp:60] Heartbeat failed, status:RPC failure in MetaClient: N6apache6thrift9transport19TTransportExceptionE: AsyncSocketException: connect failed, type = Socket not open, errno = 111 (Connection refused): Connection refused
E0112 08:07:40.371402 16 MetaClient.cpp:581] Send request to [9.134.1.150:9559], exceed retry limit
E0112 08:07:40.371459 1 MetaClient.cpp:60] Heartbeat failed, status:RPC failure in MetaClient: N6apache6thrift9transport19TTransportExceptionE: AsyncSocketException: connect failed, type = Socket not open, errno = 111 (Connection refused): Connection refused
E0112 08:07:46.617467 17 MetaClient.cpp:581] Send request to [9.134.55.213:9559], exceed retry limit
E0112 08:07:46.617542 21 MetaClient.cpp:121] Heartbeat failed, status:RPC failure in MetaClient: N6apache6thrift9transport19TTransportExceptionE: AsyncSocketException: connect failed, type = Socket not open, errno = 111 (Connection refused): Connection refused
Running on machine: VM-1-150-centos
Log line format: [IWEF]mmdd hh:mm:ss.uuuuuu threadid file:line] msg
E0112 10:08:24.978436 13 MetaClient.cpp:581] Send request to [9.134.55.213:9559], exceed retry limit
E0112 10:08:24.978682 1 MetaClient.cpp:60] Heartbeat failed, status:RPC failure in MetaClient: N6apache6thrift9transport19TTransportExceptionE: AsyncSocketException: connect failed, type = Socket not open, errno = 111 (Connection refused): Connection refused
E0112 10:08:29.992230 14 MetaClient.cpp:581] Send request to [9.134.3.25:9559], exceed retry limit
E0112 10:08:29.992303 1 MetaClient.cpp:60] Heartbeat failed, status:RPC failure in MetaClient: N6apache6thrift9transport19TTransportExceptionE: AsyncSocketException: connect failed, type = Socket not open, errno = 111 (Connection refused): Connection refused
```
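The log header gives the glog line format `[IWEF]mmdd hh:mm:ss.uuuuuu threadid file:line] msg`. When the log is long, it can help to count the `exceed retry limit` errors per meta address, to tell whether graphd fails against one meta node or all three. Below is a minimal Python sketch of such a parser; the regexes and the `parse_glog_line` / `failed_meta_addrs` names are assumptions for illustration, and the address regex only matches the message wording seen in this particular log.

```python
import re
import sys
from collections import Counter
from typing import Dict, Iterable, Optional

# [IWEF]mmdd hh:mm:ss.uuuuuu threadid file:line] msg
GLOG_RE = re.compile(
    r"^(?P<level>[IWEF])(?P<mmdd>\d{4}) "
    r"(?P<time>\d{2}:\d{2}:\d{2}\.\d{6})\s+"
    r"(?P<thread>\d+) "
    r"(?P<file>[^:\s]+):(?P<line>\d+)\] "
    r"(?P<msg>.*)$"
)

# Matches the MetaClient message wording seen in the graphd log.
RETRY_RE = re.compile(r"Send request to \[(?P<addr>[\d.]+:\d+)\], exceed retry limit")


def parse_glog_line(line: str) -> Optional[Dict[str, str]]:
    """Return the fields of one glog line, or None for non-log lines (headers etc.)."""
    m = GLOG_RE.match(line.strip())
    return m.groupdict() if m else None


def failed_meta_addrs(lines: Iterable[str]) -> Counter:
    """Count 'exceed retry limit' errors per meta address."""
    counts: Counter = Counter()
    for line in lines:
        rec = parse_glog_line(line)
        if rec is None or rec["level"] != "E":
            continue
        m = RETRY_RE.search(rec["msg"])
        if m:
            counts[m.group("addr")] += 1
    return counts


if __name__ == "__main__":
    # Pass a graphd log file, e.g. one under /data/nebula/logs/graphd on the host.
    with open(sys.argv[1], encoding="utf-8", errors="replace") as fh:
        for addr, n in failed_meta_addrs(fh).most_common():
            print(f"{addr}: {n} failed requests")
```

Header lines such as `Running on machine: ...` do not match the pattern and are skipped.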

I'm using host mode, and `telnet 9.134.55.213 9559` to the corresponding machine connects fine. Could someone help me figure out what the problem is?
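The telnet test checks one meta address at a time, by hand. A minimal Python sketch can try a plain TCP connection to every address in the `--meta_server_addrs` value and report refused vs. timed-out connections, which is the same distinction the graphd log makes with `errno = 111 (Connection refused)`. Assumption: it is run on each graphd host; since the services are said to use host networking, the container should share the host's network stack, so the result should match what graphd sees. `parse_meta_addrs` and `tcp_check` are hypothetical helper names.

```python
import socket
from typing import List, Tuple


def parse_meta_addrs(flag_value: str) -> List[Tuple[str, int]]:
    """Split a --meta_server_addrs value like 'ip1:9559,ip2:9559' into (host, port) pairs."""
    addrs = []
    for item in flag_value.split(","):
        item = item.strip()
        if not item:
            continue
        host, _, port = item.rpartition(":")
        addrs.append((host, int(port)))
    return addrs


def tcp_check(host: str, port: int, timeout: float = 3.0) -> Tuple[bool, str]:
    """Try a TCP handshake, like `telnet host port`, and classify the failure."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True, "connected"
    except ConnectionRefusedError:
        # Nothing listening on host:port (the log's errno 111 on Linux).
        return False, "connection refused"
    except socket.timeout:
        # Packets dropped, e.g. by a firewall or an unreachable route.
        return False, "timed out"
    except OSError as exc:
        return False, str(exc)


if __name__ == "__main__":
    flag = "9.134.1.150:9559,9.134.3.25:9559,9.134.55.213:9559"
    for host, port in parse_meta_addrs(flag):
        ok, detail = tcp_check(host, port)
        print(f"{host}:{port} {'OK' if ok else 'FAIL'} ({detail})")
```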