- nebula 版本: 2.0 beta

- 部署方式(分布式 / 单机 / Docker / DBaaS):docker

- 硬件信息

- 磁盘( 必须为 SSD ,不支持 HDD)SSD

- CPU、内存信息:40C 126G

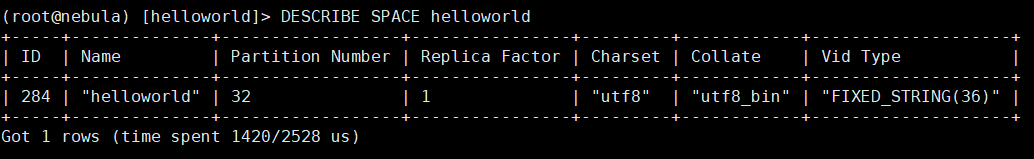

- 出问题的 Space 的创建方式:执行

describe space xxx;

- 问题的具体描述

你好,我使用的spark exchang来导入数据,spark使用的docker单机部署,spark版本是2.2.1,hadoop版本是2.8.2,exchange是在spark的容器内编译的,使用exchange提交导入任务时,会报如下错误:

> spark-submit --class com.vesoft.nebula.exchange.Exchange --master local ./nebula-spark-utils/nebula-exchange/target/nebula-exchange-2.0.0.jar -c nebula_application.conf

21/01/18 11:30:26 INFO state.StateStoreCoordinatorRef: Registered StateStoreCoordinator endpoint

Exception in thread "main" java.lang.NoClassDefFoundError: org/apache/spark/sql/sources/v2/StreamWriteSupport

at java.lang.ClassLoader.defineClass1(Native Method)

at java.lang.ClassLoader.defineClass(ClassLoader.java:763)

at java.security.SecureClassLoader.defineClass(SecureClassLoader.java:142)

at java.net.URLClassLoader.defineClass(URLClassLoader.java:468)

at java.net.URLClassLoader.access$100(URLClassLoader.java:74)

at java.net.URLClassLoader$1.run(URLClassLoader.java:369)

at java.net.URLClassLoader$1.run(URLClassLoader.java:363)

at java.security.AccessController.doPrivileged(Native Method)

at java.net.URLClassLoader.findClass(URLClassLoader.java:362)

at java.lang.ClassLoader.loadClass(ClassLoader.java:424)

at java.lang.ClassLoader.loadClass(ClassLoader.java:357)

at java.lang.Class.forName0(Native Method)

at java.lang.Class.forName(Class.java:348)

at java.util.ServiceLoader$LazyIterator.nextService(ServiceLoader.java:370)

at java.util.ServiceLoader$LazyIterator.next(ServiceLoader.java:404)

at java.util.ServiceLoader$1.next(ServiceLoader.java:480)

at scala.collection.convert.Wrappers$JIteratorWrapper.next(Wrappers.scala:43)

at scala.collection.Iterator$class.foreach(Iterator.scala:893)

at scala.collection.AbstractIterator.foreach(Iterator.scala:1336)

at scala.collection.IterableLike$class.foreach(IterableLike.scala:72)

at scala.collection.AbstractIterable.foreach(Iterable.scala:54)

at scala.collection.TraversableLike$class.filterImpl(TraversableLike.scala:247)

at scala.collection.TraversableLike$class.filter(TraversableLike.scala:259)

at scala.collection.AbstractTraversable.filter(Traversable.scala:104)

at org.apache.spark.sql.execution.datasources.DataSource$.lookupDataSource(DataSource.scala:526)

at org.apache.spark.sql.execution.datasources.DataSource.providingClass$lzycompute(DataSource.scala:87)

at org.apache.spark.sql.execution.datasources.DataSource.providingClass(DataSource.scala:87)

at org.apache.spark.sql.execution.datasources.DataSource.resolveRelation(DataSource.scala:302)

at org.apache.spark.sql.DataFrameReader.load(DataFrameReader.scala:178)

at org.apache.spark.sql.DataFrameReader.json(DataFrameReader.scala:333)

at org.apache.spark.sql.DataFrameReader.json(DataFrameReader.scala:279)

at com.vesoft.nebula.exchange.reader.JSONReader.read(FileBaseReader.scala:67)

at com.vesoft.nebula.exchange.Exchange$.com$vesoft$nebula$exchange$Exchange$$createDataSource(Exchange.scala:219)

at com.vesoft.nebula.exchange.Exchange$$anonfun$main$2.apply(Exchange.scala:124)

at com.vesoft.nebula.exchange.Exchange$$anonfun$main$2.apply(Exchange.scala:116)

at scala.collection.immutable.List.foreach(List.scala:381)

at com.vesoft.nebula.exchange.Exchange$.main(Exchange.scala:116)

at com.vesoft.nebula.exchange.Exchange.main(Exchange.scala)

at sun.reflect.NativeMethodAccessorImpl.invoke0(Native Method)

at sun.reflect.NativeMethodAccessorImpl.invoke(NativeMethodAccessorImpl.java:62)

at sun.reflect.DelegatingMethodAccessorImpl.invoke(DelegatingMethodAccessorImpl.java:43)

at java.lang.reflect.Method.invoke(Method.java:498)

at org.apache.spark.deploy.SparkSubmit$.org$apache$spark$deploy$SparkSubmit$$runMain(SparkSubmit.scala:775)

at org.apache.spark.deploy.SparkSubmit$.doRunMain$1(SparkSubmit.scala:180)

at org.apache.spark.deploy.SparkSubmit$.submit(SparkSubmit.scala:205)

at org.apache.spark.deploy.SparkSubmit$.main(SparkSubmit.scala:119)

at org.apache.spark.deploy.SparkSubmit.main(SparkSubmit.scala)

Caused by: java.lang.ClassNotFoundException: org.apache.spark.sql.sources.v2.StreamWriteSupport

at java.net.URLClassLoader.findClass(URLClassLoader.java:382)

at java.lang.ClassLoader.loadClass(ClassLoader.java:424)

at java.lang.ClassLoader.loadClass(ClassLoader.java:357)

... 47 more

21/01/18 11:30:26 INFO spark.SparkContext: Invoking stop() from shutdown hook

exchange的使用文档有说明使用限制,请查看spark的版本限制

Exchange使用的Spark版本是2.4.4,该版本的SparkSQL读取json文件时使用DataSourceV2。 DataSourceV2是Spark2.3出的新特性,所以你服务端Spark版本2.2.1不具备DataSourceV2,也就不存在org/apache/spark/sql/sources/v2/StreamWriteSupport 这个类。