- Nebula version: 2.0-ga

- Deployment (distributed / standalone / Docker / DBaaS): distributed, Docker Swarm

- Relevant meta / storage / graph info logs

When importing data with Exchange, the Hive table cannot be found, even though the table is visible in the HDFS warehouse directory.

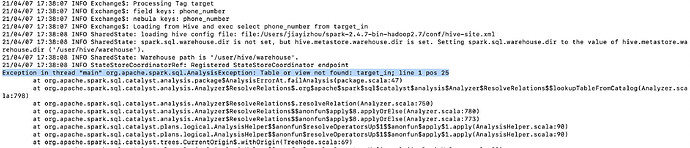

Exchange reports this error:

Exception in thread "main" org.apache.spark.sql.AnalysisException: Table or view not found: target_in

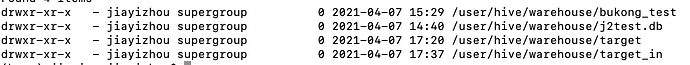
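A possible way to narrow this down, as a rough sketch only: `Table or view not found` can occur when the Spark session is not using the Hive metastore that holds the table definition, in which case the data files exist in HDFS but Spark's catalog has no entry for them. The snippet below compares the tables referenced in an `exec` statement with the tables the catalog reports. The helper names (`referenced_tables`, `missing_tables`, `visible_hive_tables`) and the `default` database are assumptions for illustration, and the regex only handles simple `SELECT ... FROM ...` statements.

```python
import re
from typing import Iterable, List


def referenced_tables(sql: str) -> List[str]:
    """Return the table names that follow FROM or JOIN in a simple SELECT."""
    return re.findall(r"\b(?:from|join)\s+([\w.]+)", sql, flags=re.IGNORECASE)


def missing_tables(sql: str, visible: Iterable[str]) -> List[str]:
    """Return referenced tables that are absent from the visible table names."""
    visible_lower = {name.lower() for name in visible}
    return [
        table
        for table in referenced_tables(sql)
        # Compare on the bare table name so "db.table" also matches "table".
        if table.split(".")[-1].lower() not in visible_lower
    ]


def visible_hive_tables(database: str = "default") -> List[str]:
    """List tables Spark's catalog sees in `database` (needs pyspark and a Hive setup)."""
    from pyspark.sql import SparkSession  # imported lazily; only needed for the live check

    spark = (
        SparkSession.builder
        .appName("hive-visibility-check")  # hypothetical application name
        .enableHiveSupport()
        .getOrCreate()
    )
    return [table.name for table in spark.catalog.listTables(database)]
```

On a machine with the same Spark environment as Exchange, `missing_tables("select phone_number from target_in", visible_hive_tables())` returning `["target_in"]` would suggest that the catalog, rather than HDFS, is where the table is missing.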

In HDFS:

Configuration file:

{

# Spark related configuration

spark: {

app: {

name: Hive Exchange 2.0

}

driver: {

cores: 1

maxResultSize: 1G

}

executor: {

memory:1G

}

cores {

max: 16

}

}

# If Spark and HIVE are deployed in the different clusters,

# configure these parameters for HIVE. Otherwise, ignore them.

hive: {

waredir: "hdfs://localhost:9000/usr/hive/warehouse/"

connectionURL: "jdbc:mysql://localhost:3306/hive_spark?characterEncoding=UTF-8"

connectionDriverName: "com.mysql.jdbc.Driver"

connectionUserName: "jiayizhou"

connectionPassword: "123456"

}

# Nebula Graph related configuration

nebula: {

address:{

# Specifies the IP addresses and ports of the Graph Service and the Meta Service of Nebula Graph

# If multiple servers are used, separate the addresses with commas.

# Format: "ip1:port","ip2:port","ip3:port"

graph:["192.168.5.100:3699"]

meta:["192.168.5.100:45500"]

}

# Specifies an account that has the WriteData privilege in Nebula Graph and its password

user: user

pswd: password

# Specifies a graph space name

space: bukong

connection {

timeout: 3000

retry: 3

}

execution {

retry: 3

}

error: {

max: 32

output: /tmp/errors

}

rate: {

limit: 1024

timeout: 1000

}

}

# Process vertices

tags: [

# Sets for the user tag

{

# Specifies a tag name defined in Nebula Graph

name: target

type: {

# Specifies the data source. hive is used.

source: hive

# Specifies how to import vertex data into Nebula Graph: client or sst.

# For more information about importing sst files, see Import SST files (doc to do).

sink: client

}

# Specifies the SQL statement to read data from the users table in the mooc database

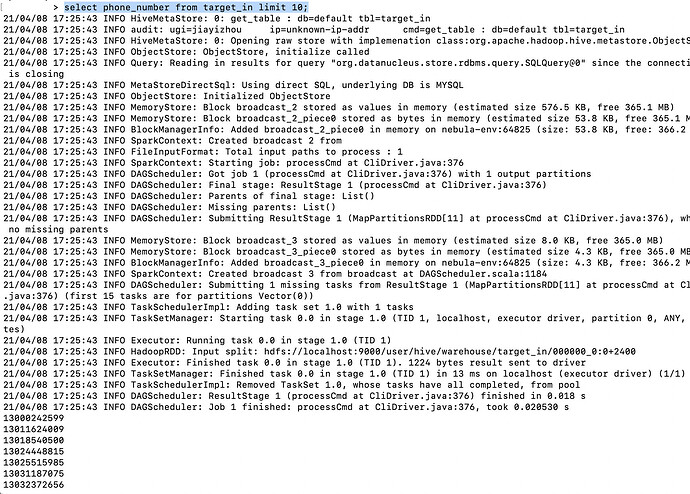

exec: "select phone_number from target_in"

# Specifies the column names from the users table to fields.

# Their values are used as the source of the userId (nebula.fields) property defined in Nebula Graph.

# If more than one column name is specified, separate them with commas.

# fields for the HIVE and nebula.fields for Nebula Graph must have the one-to-one correspondence relationship.

fields: [phone_number] # fields are the column names in the Hive table

nebula.fields: [phone_number] # nebula.fields are the properties of a vertex type (tag) in Nebula

# Specifies a column as the source of VIDs.

# The value of vertex must be one column name in the exec sentence.

# If the values are not of the int type, use vertex.policy to

# set the mapping policy. "hash" is preferred.

# Refer to the configuration of the course tag.

vertex: phone_number # one Hive column is chosen as the vertex's unique key; v2 only supports string vertex IDs, int is no longer supported

# Specifies the maximum number of vertex data to be written into

# Nebula Graph in a single batch.

batch: 256

# Specifies the partition number of Spark.

partition: 32

}

]

# Process edges

edges: [

# Sets for the action edge type

{

# Specifies an edge type name defined in Nebula Graph

name: call

type: {

# Specifies the data source. hive is used.

source: hive

# Specifies how to import vertex data into Nebula Graph: client or sst

# For more information about importing sst files,

# see Import SST files (doc to do).

sink: client

}

# Specifies the SQL statement to read data from the actions table in

# the mooc database.

exec: "select last_time, sender, receiver from bukong_test"

# Specifies the column names from the actions table to fields.

# Their values are used as the source of the properties of

# the action edge type defined in Nebula Graph.

# If more than one column name is specified, separate them with commas.

# fields for the HIVE and nebula.fields for Nebula Graph must

# have the one-to-one correspondence relationship.

fields: [last_time]

nebula.fields: [latest_time]

# source specifies a column as the source of the IDs of

# the source vertex of an edge.

# target specifies a column as the source of the IDs of

# the target vertex of an edge.

# The value of source.field and target.field must be

# column names set in the exec sentence.

source: sender

target: receiver

# For now, only string type VIDs are supported in Nebula Graph v2.x.

# Do not use vertex.policy for mapping.

#target: {

# field: dstid

# policy: "hash"

#}

# Specifies the maximum number of vertex data to be

# written into Nebula Graph in a single batch.

batch: 256

# Specifies the partition number of Spark.

partition: 32

}

]

}
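The comments in the configuration state two rules: `fields` and `nebula.fields` must correspond one-to-one, and the `vertex`, `source`, and `target` values must be columns selected by `exec`. A minimal sketch of checking these rules is shown below. It assumes each tag or edge entry has already been loaded into a plain Python dict with the same keys as the configuration; the function names `selected_columns` and `check_entry` are hypothetical, and the column parsing does not handle aliases or expressions.

```python
import re
from typing import Dict, List


def selected_columns(sql: str) -> List[str]:
    """Return the plain column names listed between SELECT and FROM."""
    match = re.search(r"select\s+(.*?)\s+from\s", sql, flags=re.IGNORECASE | re.DOTALL)
    if match is None:
        return []
    return [column.strip() for column in match.group(1).split(",")]


def check_entry(entry: Dict) -> List[str]:
    """Return a list of rule violations for one tag or edge entry."""
    problems = []
    columns = selected_columns(entry["exec"])
    fields = entry.get("fields", [])
    nebula_fields = entry.get("nebula.fields", [])

    # Rule 1: fields and nebula.fields must map one-to-one.
    if len(fields) != len(nebula_fields):
        problems.append(
            f"fields has {len(fields)} items but nebula.fields has {len(nebula_fields)}"
        )

    # Rule 2: every referenced column must appear in the exec statement.
    for field in fields:
        if field not in columns:
            problems.append(f"field '{field}' is not selected by exec")
    for key in ("vertex", "source", "target"):
        if key in entry and entry[key] not in columns:
            problems.append(f"{key} '{entry[key]}' is not selected by exec")
    return problems
```

For the tag and edge entries above, this check reports no problems, so under these assumptions the column mappings themselves are consistent.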