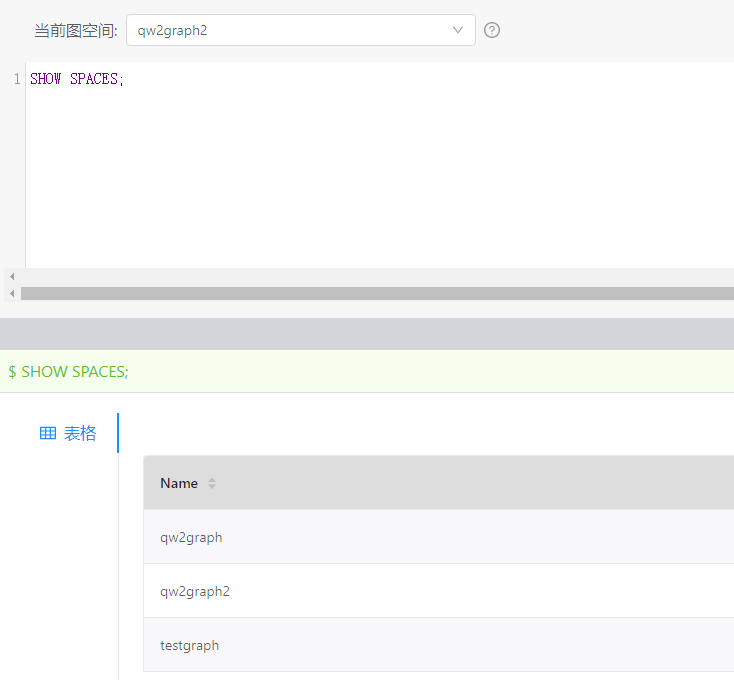

1. Space

2. Exchange log. The errors at the end were caused by my killing the task.

21/05/25 19:37:05 INFO Configs$: Execution Config com.vesoft.nebula.exchange.config.ExecutionConfigEntry@7f9c3944

21/05/25 19:37:05 INFO Configs$: Source Config Hive source exec: select distinct gmsfhm, xm from rzx_dw.sta_0_graph_czrk

21/05/25 19:37:05 INFO Configs$: Sink Config Hive source exec: select distinct gmsfhm, xm from rzx_dw.sta_0_graph_czrk

21/05/25 19:37:05 INFO Configs$: name person batch 256

21/05/25 19:37:05 INFO Configs$: Tag Config: Tag name: person, source: Hive source exec: select distinct gmsfhm, xm from rzx_dw.sta_0_graph_czrk, sink: Nebula sink addresses: [15.48.83.134:9669], vertex field: gmsfhm, vertex policy: None, batch: 256, partition: 32.

21/05/25 19:37:05 INFO Exchange$: Config Configs(com.vesoft.nebula.exchange.config.DataBaseConfigEntry@48ebce90,com.vesoft.nebula.exchange.config.UserConfigEntry@b7df0731,com.vesoft.nebula.exchange.config.ConnectionConfigEntry@c419f174,com.vesoft.nebula.exchange.config.ExecutionConfigEntry@7f9c3944,com.vesoft.nebula.exchange.config.ErrorConfigEntry@55508fa6,com.vesoft.nebula.exchange.config.RateConfigEntry@fc4543af,,List(Tag name: person, source: Hive source exec: select distinct gmsfhm, xm from rzx_dw.sta_0_graph_czrk, sink: Nebula sink addresses: [15.48.83.134:9669], vertex field: gmsfhm, vertex policy: None, batch: 256, partition: 32.),List(),None)

21/05/25 19:37:05 INFO Exchange$: you don't config hive source, so using hive tied with spark.

21/05/25 19:37:05 INFO SparkContext: Running Spark version 2.3.1.3.0.1.0-187

21/05/25 19:37:05 INFO SparkContext: Submitted application: com.vesoft.nebula.exchange.Exchange

21/05/25 19:37:05 INFO SecurityManager: Changing view acls to: root,renzixing

21/05/25 19:37:05 INFO SecurityManager: Changing modify acls to: root,renzixing

21/05/25 19:37:05 INFO SecurityManager: Changing view acls groups to:

21/05/25 19:37:05 INFO SecurityManager: Changing modify acls groups to:

21/05/25 19:37:05 INFO SecurityManager: SecurityManager: authentication disabled; ui acls enabled; users with view permissions: Set(root, renzixing); groups with view permissions: Set(); users with modify permissions: Set(root, renzixing); groups with modify permissions: Set()

21/05/25 19:37:06 INFO Utils: Successfully started service 'sparkDriver' on port 45905.

21/05/25 19:37:06 INFO SparkEnv: Registering MapOutputTracker

21/05/25 19:37:06 INFO SparkEnv: Registering BlockManagerMaster

21/05/25 19:37:06 INFO BlockManagerMasterEndpoint: Using org.apache.spark.storage.DefaultTopologyMapper for getting topology information

21/05/25 19:37:06 INFO BlockManagerMasterEndpoint: BlockManagerMasterEndpoint up

21/05/25 19:37:06 INFO DiskBlockManager: Created local directory at /tmp/blockmgr-a1af8d4d-a183-4a09-988d-3cdfa84dc808

21/05/25 19:37:06 INFO MemoryStore: MemoryStore started with capacity 366.3 MB

21/05/25 19:37:06 INFO SparkEnv: Registering OutputCommitCoordinator

21/05/25 19:37:06 INFO log: Logging initialized @3433ms

21/05/25 19:37:06 INFO Server: jetty-9.3.z-SNAPSHOT, build timestamp: 2018-06-06T01:11:56+08:00, git hash: 84205aa28f11a4f31f2a3b86d1bba2cc8ab69827

21/05/25 19:37:06 INFO Server: Started @3520ms

21/05/25 19:37:06 WARN Utils: Service 'SparkUI' could not bind on port 4040. Attempting port 4041.

21/05/25 19:37:06 WARN Utils: Service 'SparkUI' could not bind on port 4041. Attempting port 4042.

21/05/25 19:37:06 INFO AbstractConnector: Started ServerConnector@2c42b421{HTTP/1.1,[http/1.1]}{0.0.0.0:4042}

21/05/25 19:37:06 INFO Utils: Successfully started service 'SparkUI' on port 4042.

21/05/25 19:37:06 INFO ContextHandler: Started o.s.j.s.ServletContextHandler@4351171a{/jobs,null,AVAILABLE,@Spark}

21/05/25 19:37:06 INFO ContextHandler: Started o.s.j.s.ServletContextHandler@601cbd8c{/jobs/json,null,AVAILABLE,@Spark}

21/05/25 19:37:06 INFO ContextHandler: Started o.s.j.s.ServletContextHandler@7180e701{/jobs/job,null,AVAILABLE,@Spark}

21/05/25 19:37:06 INFO ContextHandler: Started o.s.j.s.ServletContextHandler@5a5c128{/jobs/job/json,null,AVAILABLE,@Spark}

21/05/25 19:37:06 INFO ContextHandler: Started o.s.j.s.ServletContextHandler@73eb8672{/stages,null,AVAILABLE,@Spark}

21/05/25 19:37:06 INFO ContextHandler: Started o.s.j.s.ServletContextHandler@5942ee04{/stages/json,null,AVAILABLE,@Spark}

21/05/25 19:37:06 INFO ContextHandler: Started o.s.j.s.ServletContextHandler@5e76a2bb{/stages/stage,null,AVAILABLE,@Spark}

21/05/25 19:37:06 INFO ContextHandler: Started o.s.j.s.ServletContextHandler@14faa38c{/stages/stage/json,null,AVAILABLE,@Spark}

21/05/25 19:37:06 INFO ContextHandler: Started o.s.j.s.ServletContextHandler@7ff2b8d2{/stages/pool,null,AVAILABLE,@Spark}

21/05/25 19:37:06 INFO ContextHandler: Started o.s.j.s.ServletContextHandler@6dc1484{/stages/pool/json,null,AVAILABLE,@Spark}

21/05/25 19:37:06 INFO ContextHandler: Started o.s.j.s.ServletContextHandler@6e92c6ad{/storage,null,AVAILABLE,@Spark}

21/05/25 19:37:06 INFO ContextHandler: Started o.s.j.s.ServletContextHandler@2fb5fe30{/storage/json,null,AVAILABLE,@Spark}

21/05/25 19:37:06 INFO ContextHandler: Started o.s.j.s.ServletContextHandler@456be73c{/storage/rdd,null,AVAILABLE,@Spark}

21/05/25 19:37:06 INFO ContextHandler: Started o.s.j.s.ServletContextHandler@2375b321{/storage/rdd/json,null,AVAILABLE,@Spark}

21/05/25 19:37:06 INFO ContextHandler: Started o.s.j.s.ServletContextHandler@5baaae4c{/environment,null,AVAILABLE,@Spark}

21/05/25 19:37:06 INFO ContextHandler: Started o.s.j.s.ServletContextHandler@5b6e8f77{/environment/json,null,AVAILABLE,@Spark}

21/05/25 19:37:06 INFO ContextHandler: Started o.s.j.s.ServletContextHandler@41a6d121{/executors,null,AVAILABLE,@Spark}

21/05/25 19:37:06 INFO ContextHandler: Started o.s.j.s.ServletContextHandler@4f449e8f{/executors/json,null,AVAILABLE,@Spark}

21/05/25 19:37:06 INFO ContextHandler: Started o.s.j.s.ServletContextHandler@411291e5{/executors/threadDump,null,AVAILABLE,@Spark}

21/05/25 19:37:06 INFO ContextHandler: Started o.s.j.s.ServletContextHandler@6e28bb87{/executors/threadDump/json,null,AVAILABLE,@Spark}

21/05/25 19:37:06 INFO ContextHandler: Started o.s.j.s.ServletContextHandler@19f040ba{/static,null,AVAILABLE,@Spark}

21/05/25 19:37:06 INFO ContextHandler: Started o.s.j.s.ServletContextHandler@40226788{/,null,AVAILABLE,@Spark}

21/05/25 19:37:06 INFO ContextHandler: Started o.s.j.s.ServletContextHandler@4159e81b{/api,null,AVAILABLE,@Spark}

21/05/25 19:37:06 INFO ContextHandler: Started o.s.j.s.ServletContextHandler@7e97551f{/jobs/job/kill,null,AVAILABLE,@Spark}

21/05/25 19:37:06 INFO ContextHandler: Started o.s.j.s.ServletContextHandler@400d912a{/stages/stage/kill,null,AVAILABLE,@Spark}

21/05/25 19:37:06 INFO SparkUI: Bound SparkUI to 0.0.0.0, and started at http://15.48.83.120:4042

21/05/25 19:37:06 INFO SparkContext: Added JAR file:/apps/nebula/nebula-exchange/nebula-exchange-2.0.1.jar at spark://15.48.83.120:45905/jars/nebula-exchange-2.0.1.jar with timestamp 1621942626499

21/05/25 19:37:07 INFO ConfiguredRMFailoverProxyProvider: Failing over to rm2

21/05/25 19:37:07 INFO Client: Requesting a new application from cluster with 63 NodeManagers

21/05/25 19:37:07 INFO Configuration: found resource resource-types.xml at file:/etc/hadoop/3.0.1.0-187/0/resource-types.xml

21/05/25 19:37:07 INFO Client: Verifying our application has not requested more than the maximum memory capability of the cluster (184320 MB per container)

21/05/25 19:37:07 INFO Client: Will allocate AM container, with 896 MB memory including 384 MB overhead

21/05/25 19:37:07 INFO Client: Setting up container launch context for our AM

21/05/25 19:37:07 INFO Client: Setting up the launch environment for our AM container

21/05/25 19:37:07 INFO Client: Preparing resources for our AM container

21/05/25 19:37:07 INFO HadoopFSDelegationTokenProvider: getting token for: DFS[DFSClient[clientName=DFSClient_NONMAPREDUCE_-1705878868_1, ugi=renzixing@JNWJ (auth:KERBEROS)]]

21/05/25 19:37:07 INFO DFSClient: Created token for renzixing: HDFS_DELEGATION_TOKEN owner=renzixing@JNWJ, renewer=yarn, realUser=, issueDate=1621942644752, maxDate=1622547444752, sequenceNumber=214491, masterKeyId=118 on ha-hdfs:mycluster

21/05/25 19:37:09 INFO Client: Use hdfs cache file as spark.yarn.archive for HDP, hdfsCacheFile:hdfs://mycluster/hdp/apps/3.0.1.0-187/spark2/spark2-hdp-yarn-archive.tar.gz

21/05/25 19:37:09 INFO Client: Source and destination file systems are the same. Not copying hdfs://mycluster/hdp/apps/3.0.1.0-187/spark2/spark2-hdp-yarn-archive.tar.gz

21/05/25 19:37:09 INFO Client: Distribute hdfs cache file as spark.sql.hive.metastore.jars for HDP, hdfsCacheFile:hdfs://mycluster/hdp/apps/3.0.1.0-187/spark2/spark2-hdp-hive-archive.tar.gz

21/05/25 19:37:09 INFO Client: Source and destination file systems are the same. Not copying hdfs://mycluster/hdp/apps/3.0.1.0-187/spark2/spark2-hdp-hive-archive.tar.gz

21/05/25 19:37:09 INFO Client: Uploading resource file:/tmp/spark-dddc41be-298b-4428-bcea-16ba5f2ca63e/__spark_conf__9213861236337451333.zip -> hdfs://mycluster/user/renzixing/.sparkStaging/application_1619435766040_41321/__spark_conf__.zip

21/05/25 19:37:10 INFO SecurityManager: Changing view acls to: root,renzixing

21/05/25 19:37:10 INFO SecurityManager: Changing modify acls to: root,renzixing

21/05/25 19:37:10 INFO SecurityManager: Changing view acls groups to:

21/05/25 19:37:10 INFO SecurityManager: Changing modify acls groups to:

21/05/25 19:37:10 INFO SecurityManager: SecurityManager: authentication disabled; ui acls enabled; users with view permissions: Set(root, renzixing); groups with view permissions: Set(); users with modify permissions: Set(root, renzixing); groups with modify permissions: Set()

21/05/25 19:37:10 INFO Client: Submitting application application_1619435766040_41321 to ResourceManager

21/05/25 19:37:10 INFO YarnClientImpl: Submitted application application_1619435766040_41321

21/05/25 19:37:10 INFO SchedulerExtensionServices: Starting Yarn extension services with app application_1619435766040_41321 and attemptId None

21/05/25 19:37:11 INFO Client: Application report for application_1619435766040_41321 (state: ACCEPTED)

21/05/25 19:37:11 INFO Client:

client token: Token { kind: YARN_CLIENT_TOKEN, service: }

diagnostics: AM container is launched, waiting for AM container to Register with RM

ApplicationMaster host: N/A

ApplicationMaster RPC port: -1

queue: renzixing

start time: 1621942647076

final status: UNDEFINED

tracking URL: http://master4.jnwj.com:8088/proxy/application_1619435766040_41321/

user: renzixing

21/05/25 19:37:12 INFO Client: Application report for application_1619435766040_41321 (state: ACCEPTED)

21/05/25 19:37:13 INFO Client: Application report for application_1619435766040_41321 (state: ACCEPTED)

21/05/25 19:37:14 INFO Client: Application report for application_1619435766040_41321 (state: ACCEPTED)

21/05/25 19:37:15 INFO Client: Application report for application_1619435766040_41321 (state: ACCEPTED)

21/05/25 19:37:16 INFO Client: Application report for application_1619435766040_41321 (state: ACCEPTED)

21/05/25 19:37:16 INFO YarnClientSchedulerBackend: Add WebUI Filter. org.apache.hadoop.yarn.server.webproxy.amfilter.AmIpFilter, Map(PROXY_HOSTS -> master3.jnwj.com,master4.jnwj.com, PROXY_URI_BASES -> http://master3.jnwj.com:8088/proxy/application_1619435766040_41321,http://master4.jnwj.com:8088/proxy/application_1619435766040_41321, RM_HA_URLS -> master3.jnwj.com:8088,master4.jnwj.com:8088), /proxy/application_1619435766040_41321

21/05/25 19:37:16 INFO JettyUtils: Adding filter org.apache.hadoop.yarn.server.webproxy.amfilter.AmIpFilter to /jobs, /jobs/json, /jobs/job, /jobs/job/json, /stages, /stages/json, /stages/stage, /stages/stage/json, /stages/pool, /stages/pool/json, /storage, /storage/json, /storage/rdd, /storage/rdd/json, /environment, /environment/json, /executors, /executors/json, /executors/threadDump, /executors/threadDump/json, /static, /, /api, /jobs/job/kill, /stages/stage/kill.

21/05/25 19:37:17 INFO Client: Application report for application_1619435766040_41321 (state: ACCEPTED)

21/05/25 19:37:18 INFO YarnSchedulerBackend$YarnSchedulerEndpoint: ApplicationMaster registered as NettyRpcEndpointRef(spark-client://YarnAM)

21/05/25 19:37:18 INFO Client: Application report for application_1619435766040_41321 (state: RUNNING)

21/05/25 19:37:18 INFO Client:

client token: Token { kind: YARN_CLIENT_TOKEN, service: }

diagnostics: N/A

ApplicationMaster host: 15.48.91.73

ApplicationMaster RPC port: 0

queue: renzixing

start time: 1621942647076

final status: UNDEFINED

tracking URL: http://master4.jnwj.com:8088/proxy/application_1619435766040_41321/

user: renzixing

21/05/25 19:37:18 INFO YarnClientSchedulerBackend: Application application_1619435766040_41321 has started running.

21/05/25 19:37:18 INFO Utils: Successfully started service 'org.apache.spark.network.netty.NettyBlockTransferService' on port 41896.

21/05/25 19:37:18 INFO NettyBlockTransferService: Server created on 15.48.83.120:41896

21/05/25 19:37:18 INFO BlockManager: Using org.apache.spark.storage.RandomBlockReplicationPolicy for block replication policy

21/05/25 19:37:18 INFO BlockManagerMaster: Registering BlockManager BlockManagerId(driver, 15.48.83.120, 41896, None)

21/05/25 19:37:18 INFO BlockManagerMasterEndpoint: Registering block manager 15.48.83.120:41896 with 366.3 MB RAM, BlockManagerId(driver, 15.48.83.120, 41896, None)

21/05/25 19:37:18 INFO BlockManagerMaster: Registered BlockManager BlockManagerId(driver, 15.48.83.120, 41896, None)

21/05/25 19:37:18 INFO BlockManager: Initialized BlockManager: BlockManagerId(driver, 15.48.83.120, 41896, None)

21/05/25 19:37:18 INFO JettyUtils: Adding filter org.apache.hadoop.yarn.server.webproxy.amfilter.AmIpFilter to /metrics/json.

21/05/25 19:37:18 INFO ContextHandler: Started o.s.j.s.ServletContextHandler@64dfb31d{/metrics/json,null,AVAILABLE,@Spark}

21/05/25 19:37:18 INFO EventLoggingListener: Logging events to hdfs:/spark2-history/application_1619435766040_41321

21/05/25 19:37:23 INFO YarnSchedulerBackend$YarnDriverEndpoint: Registered executor NettyRpcEndpointRef(spark-client://Executor) (15.48.91.102:41386) with ID 1

21/05/25 19:37:23 INFO YarnSchedulerBackend$YarnDriverEndpoint: Registered executor NettyRpcEndpointRef(spark-client://Executor) (15.48.91.106:44396) with ID 2

21/05/25 19:37:23 INFO YarnClientSchedulerBackend: SchedulerBackend is ready for scheduling beginning after reached minRegisteredResourcesRatio: 0.8

21/05/25 19:37:23 INFO BlockManagerMasterEndpoint: Registering block manager hd49.jnwj.com:38845 with 2.5 GB RAM, BlockManagerId(2, hd49.jnwj.com, 38845, None)

21/05/25 19:37:23 INFO BlockManagerMasterEndpoint: Registering block manager hd45.jnwj.com:43397 with 2.5 GB RAM, BlockManagerId(1, hd45.jnwj.com, 43397, None)

21/05/25 19:37:23 INFO Exchange$: Processing Tag person

21/05/25 19:37:23 INFO Exchange$: field keys: gmsfhm, xm

21/05/25 19:37:23 INFO Exchange$: nebula keys: idcard, name

21/05/25 19:37:23 INFO Exchange$: Loading from Hive and exec select distinct gmsfhm, xm from rzx_dw.sta_0_graph_czrk

21/05/25 19:37:23 INFO SharedState: loading hive config file: file:/etc/spark2/3.0.1.0-187/0/hive-site.xml

21/05/25 19:37:23 INFO SharedState: Setting hive.metastore.warehouse.dir ('null') to the value of spark.sql.warehouse.dir ('/apps/spark/warehouse').

21/05/25 19:37:23 INFO SharedState: Warehouse path is '/apps/spark/warehouse'.

21/05/25 19:37:23 INFO JettyUtils: Adding filter org.apache.hadoop.yarn.server.webproxy.amfilter.AmIpFilter to /SQL.

21/05/25 19:37:23 INFO ContextHandler: Started o.s.j.s.ServletContextHandler@3e28dc96{/SQL,null,AVAILABLE,@Spark}

21/05/25 19:37:23 INFO JettyUtils: Adding filter org.apache.hadoop.yarn.server.webproxy.amfilter.AmIpFilter to /SQL/json.

21/05/25 19:37:23 INFO ContextHandler: Started o.s.j.s.ServletContextHandler@31940704{/SQL/json,null,AVAILABLE,@Spark}

21/05/25 19:37:23 INFO JettyUtils: Adding filter org.apache.hadoop.yarn.server.webproxy.amfilter.AmIpFilter to /SQL/execution.

21/05/25 19:37:23 INFO ContextHandler: Started o.s.j.s.ServletContextHandler@49c83262{/SQL/execution,null,AVAILABLE,@Spark}

21/05/25 19:37:23 INFO JettyUtils: Adding filter org.apache.hadoop.yarn.server.webproxy.amfilter.AmIpFilter to /SQL/execution/json.

21/05/25 19:37:23 INFO ContextHandler: Started o.s.j.s.ServletContextHandler@77bb916f{/SQL/execution/json,null,AVAILABLE,@Spark}

21/05/25 19:37:23 INFO JettyUtils: Adding filter org.apache.hadoop.yarn.server.webproxy.amfilter.AmIpFilter to /static/sql.

21/05/25 19:37:23 INFO ContextHandler: Started o.s.j.s.ServletContextHandler@184de357{/static/sql,null,AVAILABLE,@Spark}

21/05/25 19:37:24 INFO StateStoreCoordinatorRef: Registered StateStoreCoordinator endpoint

21/05/25 19:37:25 INFO HiveUtils: Initializing HiveMetastoreConnection version 3.0 using file:/usr/hdp/current/spark2-client/standalone-metastore/standalone-metastore-1.21.2.3.0.1.0-187-hive3.jar:file:/usr/hdp/current/spark2-client/standalone-metastore/standalone-metastore-1.21.2.3.0.1.0-187-hive3.jar

21/05/25 19:37:25 INFO HiveConf: Found configuration file file:/usr/hdp/3.0.1.0-187/hive/conf/hive-site.xml

21/05/25 19:37:25 WARN HiveConf: HiveConf of name hive.heapsize does not exist

21/05/25 19:37:25 WARN HiveConf: HiveConf of name hive.stats.fetch.partition.stats does not exist

Hive Session ID = b03c9115-cd04-450c-9459-7385a69f4fc3

21/05/25 19:37:25 INFO SessionState: Hive Session ID = b03c9115-cd04-450c-9459-7385a69f4fc3

21/05/25 19:37:25 INFO SessionState: Created HDFS directory: /tmp/spark/renzixing/b03c9115-cd04-450c-9459-7385a69f4fc3

21/05/25 19:37:25 INFO SessionState: Created local directory: /tmp/root/b03c9115-cd04-450c-9459-7385a69f4fc3

21/05/25 19:37:25 INFO SessionState: Created HDFS directory: /tmp/spark/renzixing/b03c9115-cd04-450c-9459-7385a69f4fc3/_tmp_space.db

21/05/25 19:37:25 INFO HiveClientImpl: Warehouse location for Hive client (version 3.0.0) is /apps/spark/warehouse

21/05/25 19:37:26 INFO HiveMetaStoreClient: Trying to connect to metastore with URI thrift://master3.jnwj.com:9083

21/05/25 19:37:26 INFO HiveMetaStoreClient: HMSC::open(): Could not find delegation token. Creating KERBEROS-based thrift connection.

21/05/25 19:37:26 INFO HiveMetaStoreClient: Opened a connection to metastore, current connections: 1

21/05/25 19:37:26 INFO HiveMetaStoreClient: Connected to metastore.

21/05/25 19:37:26 INFO RetryingMetaStoreClient: RetryingMetaStoreClient proxy=class org.apache.hadoop.hive.ql.metadata.SessionHiveMetaStoreClient ugi=renzixing@JNWJ (auth:KERBEROS) retries=24 delay=5 lifetime=0

21/05/25 19:37:27 INFO HiveMetastoreCatalog: Inferring case-sensitive schema for table rzx_dw.sta_0_graph_czrk (inference mode: INFER_AND_SAVE)

21/05/25 19:37:27 WARN HiveMetastoreCatalog: Unable to infer schema for table rzx_dw.sta_0_graph_czrk from file format ORC (inference mode: INFER_AND_SAVE). Using metastore schema.

21/05/25 19:37:28 INFO FileSourceStrategy: Pruning directories with:

21/05/25 19:37:28 INFO FileSourceStrategy: Post-Scan Filters:

21/05/25 19:37:28 INFO FileSourceStrategy: Output Data Schema: struct<gmsfhm: string, xm: string>

21/05/25 19:37:28 INFO FileSourceScanExec: Pushed Filters:

21/05/25 19:37:28 INFO FileSourceScanExec: Pushed Filters:

21/05/25 19:37:28 INFO HashAggregateExec: spark.sql.codegen.aggregate.map.twolevel.enabled is set to true, but current version of codegened fast hashmap does not support this aggregate.

21/05/25 19:37:29 INFO CodeGenerator: Code generated in 220.733693 ms

21/05/25 19:37:29 INFO HashAggregateExec: spark.sql.codegen.aggregate.map.twolevel.enabled is set to true, but current version of codegened fast hashmap does not support this aggregate.

21/05/25 19:37:29 INFO CodeGenerator: Code generated in 36.197873 ms

21/05/25 19:37:29 INFO MemoryStore: Block broadcast_0 stored as values in memory (estimated size 447.0 KB, free 365.9 MB)

21/05/25 19:37:29 INFO MemoryStore: Block broadcast_0_piece0 stored as bytes in memory (estimated size 47.2 KB, free 365.8 MB)

21/05/25 19:37:29 INFO BlockManagerInfo: Added broadcast_0_piece0 in memory on 15.48.83.120:41896 (size: 47.2 KB, free: 366.3 MB)

21/05/25 19:37:29 INFO SparkContext: Created broadcast 0 from foreachPartition at VerticesProcessor.scala:274

21/05/25 19:37:29 INFO FileSourceScanExec: Planning scan with bin packing, max size: 4194304 bytes, open cost is considered as scanning 4194304 bytes.

21/05/25 19:37:29 INFO SparkContext: Starting job: foreachPartition at VerticesProcessor.scala:274

21/05/25 19:37:29 INFO DAGScheduler: Registering RDD 2 (foreachPartition at VerticesProcessor.scala:274)

21/05/25 19:37:29 INFO DAGScheduler: Registering RDD 6 (foreachPartition at VerticesProcessor.scala:274)

21/05/25 19:37:29 INFO DAGScheduler: Got job 0 (foreachPartition at VerticesProcessor.scala:274) with 32 output partitions

21/05/25 19:37:29 INFO DAGScheduler: Final stage: ResultStage 2 (foreachPartition at VerticesProcessor.scala:274)

21/05/25 19:37:29 INFO DAGScheduler: Parents of final stage: List(ShuffleMapStage 1)

21/05/25 19:37:29 INFO DAGScheduler: Missing parents: List(ShuffleMapStage 1)

21/05/25 19:37:29 INFO DAGScheduler: Submitting ShuffleMapStage 1 (MapPartitionsRDD[6] at foreachPartition at VerticesProcessor.scala:274), which has no missing parents

21/05/25 19:37:29 INFO ContextCleaner: Cleaned accumulator 4

21/05/25 19:37:29 INFO ContextCleaner: Cleaned accumulator 8

21/05/25 19:37:29 INFO ContextCleaner: Cleaned accumulator 2

21/05/25 19:37:29 INFO ContextCleaner: Cleaned accumulator 5

21/05/25 19:37:29 INFO MemoryStore: Block broadcast_1 stored as values in memory (estimated size 19.8 KB, free 365.8 MB)

21/05/25 19:37:29 INFO MemoryStore: Block broadcast_1_piece0 stored as bytes in memory (estimated size 8.9 KB, free 365.8 MB)

21/05/25 19:37:29 INFO BlockManagerInfo: Added broadcast_1_piece0 in memory on 15.48.83.120:41896 (size: 8.9 KB, free: 366.2 MB)

21/05/25 19:37:29 INFO SparkContext: Created broadcast 1 from broadcast at DAGScheduler.scala:1039

21/05/25 19:37:30 INFO DAGScheduler: Submitting 1 missing tasks from ShuffleMapStage 1 (MapPartitionsRDD[6] at foreachPartition at VerticesProcessor.scala:274) (first 15 tasks are for partitions Vector(0))

21/05/25 19:37:30 INFO YarnScheduler: Adding task set 1.0 with 1 tasks

21/05/25 19:37:30 INFO TaskSetManager: Starting task 0.0 in stage 1.0 (TID 0, hd45.jnwj.com, executor 1, partition 0, PROCESS_LOCAL, 7755 bytes)

21/05/25 19:37:32 INFO BlockManagerInfo: Added broadcast_1_piece0 in memory on hd45.jnwj.com:43397 (size: 8.9 KB, free: 2.5 GB)

21/05/25 19:37:33 INFO MapOutputTrackerMasterEndpoint: Asked to send map output locations for shuffle 0 to 15.48.91.102:41386

21/05/25 19:37:34 INFO TaskSetManager: Finished task 0.0 in stage 1.0 (TID 0) in 4129 ms on hd45.jnwj.com (executor 1) (1/1)

21/05/25 19:37:34 INFO YarnScheduler: Removed TaskSet 1.0, whose tasks have all completed, from pool

21/05/25 19:37:34 INFO DAGScheduler: ShuffleMapStage 1 (foreachPartition at VerticesProcessor.scala:274) finished in 4.298 s

21/05/25 19:37:34 INFO DAGScheduler: looking for newly runnable stages

21/05/25 19:37:34 INFO DAGScheduler: running: Set()

21/05/25 19:37:34 INFO DAGScheduler: waiting: Set(ResultStage 2)

21/05/25 19:37:34 INFO DAGScheduler: failed: Set()

21/05/25 19:37:34 INFO DAGScheduler: Submitting ResultStage 2 (MapPartitionsRDD[10] at foreachPartition at VerticesProcessor.scala:274), which has no missing parents

21/05/25 19:37:34 INFO MemoryStore: Block broadcast_2 stored as values in memory (estimated size 22.8 KB, free 365.8 MB)

21/05/25 19:37:34 INFO MemoryStore: Block broadcast_2_piece0 stored as bytes in memory (estimated size 11.0 KB, free 365.8 MB)

21/05/25 19:37:34 INFO BlockManagerInfo: Added broadcast_2_piece0 in memory on 15.48.83.120:41896 (size: 11.0 KB, free: 366.2 MB)

21/05/25 19:37:34 INFO SparkContext: Created broadcast 2 from broadcast at DAGScheduler.scala:1039

21/05/25 19:37:34 INFO DAGScheduler: Submitting 32 missing tasks from ResultStage 2 (MapPartitionsRDD[10] at foreachPartition at VerticesProcessor.scala:274) (first 15 tasks are for partitions Vector(0, 1, 2, 3, 4, 5, 6, 7, 8, 9, 10, 11, 12, 13, 14))

21/05/25 19:37:34 INFO YarnScheduler: Adding task set 2.0 with 32 tasks

21/05/25 19:37:34 INFO TaskSetManager: Starting task 0.0 in stage 2.0 (TID 1, hd45.jnwj.com, executor 1, partition 0, PROCESS_LOCAL, 7766 bytes)

21/05/25 19:37:34 INFO TaskSetManager: Starting task 1.0 in stage 2.0 (TID 2, hd49.jnwj.com, executor 2, partition 1, PROCESS_LOCAL, 7766 bytes)

21/05/25 19:37:34 INFO BlockManagerInfo: Added broadcast_2_piece0 in memory on hd45.jnwj.com:43397 (size: 11.0 KB, free: 2.5 GB)

21/05/25 19:37:34 INFO MapOutputTrackerMasterEndpoint: Asked to send map output locations for shuffle 1 to 15.48.91.102:41386

21/05/25 19:37:35 INFO BlockManagerInfo: Added broadcast_2_piece0 in memory on hd49.jnwj.com:38845 (size: 11.0 KB, free: 2.5 GB)

21/05/25 19:37:36 INFO TaskSetManager: Starting task 2.0 in stage 2.0 (TID 3, hd45.jnwj.com, executor 1, partition 2, PROCESS_LOCAL, 7766 bytes)

21/05/25 19:37:36 INFO TaskSetManager: Finished task 0.0 in stage 2.0 (TID 1) in 1593 ms on hd45.jnwj.com (executor 1) (1/32)

21/05/25 19:37:36 INFO TaskSetManager: Starting task 3.0 in stage 2.0 (TID 4, hd45.jnwj.com, executor 1, partition 3, PROCESS_LOCAL, 7766 bytes)

21/05/25 19:37:36 INFO TaskSetManager: Finished task 2.0 in stage 2.0 (TID 3) in 54 ms on hd45.jnwj.com (executor 1) (2/32)

21/05/25 19:37:36 INFO TaskSetManager: Starting task 4.0 in stage 2.0 (TID 5, hd45.jnwj.com, executor 1, partition 4, PROCESS_LOCAL, 7766 bytes)

21/05/25 19:37:36 INFO TaskSetManager: Finished task 3.0 in stage 2.0 (TID 4) in 40 ms on hd45.jnwj.com (executor 1) (3/32)

21/05/25 19:37:36 INFO TaskSetManager: Starting task 5.0 in stage 2.0 (TID 6, hd45.jnwj.com, executor 1, partition 5, PROCESS_LOCAL, 7766 bytes)

21/05/25 19:37:36 INFO TaskSetManager: Finished task 4.0 in stage 2.0 (TID 5) in 37 ms on hd45.jnwj.com (executor 1) (4/32)

21/05/25 19:37:36 INFO TaskSetManager: Starting task 6.0 in stage 2.0 (TID 7, hd45.jnwj.com, executor 1, partition 6, PROCESS_LOCAL, 7766 bytes)

21/05/25 19:37:36 INFO TaskSetManager: Finished task 5.0 in stage 2.0 (TID 6) in 31 ms on hd45.jnwj.com (executor 1) (5/32)

21/05/25 19:37:36 INFO TaskSetManager: Starting task 7.0 in stage 2.0 (TID 8, hd45.jnwj.com, executor 1, partition 7, PROCESS_LOCAL, 7766 bytes)

21/05/25 19:37:36 INFO TaskSetManager: Finished task 6.0 in stage 2.0 (TID 7) in 29 ms on hd45.jnwj.com (executor 1) (6/32)

21/05/25 19:37:36 INFO TaskSetManager: Starting task 8.0 in stage 2.0 (TID 9, hd45.jnwj.com, executor 1, partition 8, PROCESS_LOCAL, 7766 bytes)

21/05/25 19:37:36 INFO TaskSetManager: Finished task 7.0 in stage 2.0 (TID 8) in 30 ms on hd45.jnwj.com (executor 1) (7/32)

21/05/25 19:37:36 INFO TaskSetManager: Starting task 9.0 in stage 2.0 (TID 10, hd45.jnwj.com, executor 1, partition 9, PROCESS_LOCAL, 7766 bytes)

21/05/25 19:37:36 INFO TaskSetManager: Finished task 8.0 in stage 2.0 (TID 9) in 27 ms on hd45.jnwj.com (executor 1) (8/32)

21/05/25 19:37:36 INFO TaskSetManager: Starting task 10.0 in stage 2.0 (TID 11, hd45.jnwj.com, executor 1, partition 10, PROCESS_LOCAL, 7766 bytes)

21/05/25 19:37:36 INFO TaskSetManager: Finished task 9.0 in stage 2.0 (TID 10) in 26 ms on hd45.jnwj.com (executor 1) (9/32)

21/05/25 19:37:36 INFO TaskSetManager: Starting task 11.0 in stage 2.0 (TID 12, hd45.jnwj.com, executor 1, partition 11, PROCESS_LOCAL, 7766 bytes)

21/05/25 19:37:36 INFO TaskSetManager: Finished task 10.0 in stage 2.0 (TID 11) in 45 ms on hd45.jnwj.com (executor 1) (10/32)

21/05/25 19:37:36 INFO TaskSetManager: Starting task 12.0 in stage 2.0 (TID 13, hd45.jnwj.com, executor 1, partition 12, PROCESS_LOCAL, 7766 bytes)

21/05/25 19:37:36 INFO TaskSetManager: Finished task 11.0 in stage 2.0 (TID 12) in 27 ms on hd45.jnwj.com (executor 1) (11/32)

21/05/25 19:37:36 INFO TaskSetManager: Starting task 13.0 in stage 2.0 (TID 14, hd45.jnwj.com, executor 1, partition 13, PROCESS_LOCAL, 7766 bytes)

21/05/25 19:37:36 INFO TaskSetManager: Finished task 12.0 in stage 2.0 (TID 13) in 26 ms on hd45.jnwj.com (executor 1) (12/32)

21/05/25 19:37:36 INFO TaskSetManager: Starting task 14.0 in stage 2.0 (TID 15, hd45.jnwj.com, executor 1, partition 14, PROCESS_LOCAL, 7766 bytes)

21/05/25 19:37:36 INFO TaskSetManager: Finished task 13.0 in stage 2.0 (TID 14) in 49 ms on hd45.jnwj.com (executor 1) (13/32)

21/05/25 19:37:36 INFO TaskSetManager: Starting task 15.0 in stage 2.0 (TID 16, hd45.jnwj.com, executor 1, partition 15, PROCESS_LOCAL, 7766 bytes)

21/05/25 19:37:36 INFO TaskSetManager: Finished task 14.0 in stage 2.0 (TID 15) in 25 ms on hd45.jnwj.com (executor 1) (14/32)

21/05/25 19:37:36 INFO TaskSetManager: Starting task 16.0 in stage 2.0 (TID 17, hd45.jnwj.com, executor 1, partition 16, PROCESS_LOCAL, 7766 bytes)

21/05/25 19:37:36 INFO TaskSetManager: Finished task 15.0 in stage 2.0 (TID 16) in 26 ms on hd45.jnwj.com (executor 1) (15/32)

21/05/25 19:37:36 INFO TaskSetManager: Starting task 17.0 in stage 2.0 (TID 18, hd45.jnwj.com, executor 1, partition 17, PROCESS_LOCAL, 7766 bytes)

21/05/25 19:37:36 INFO TaskSetManager: Finished task 16.0 in stage 2.0 (TID 17) in 21 ms on hd45.jnwj.com (executor 1) (16/32)

21/05/25 19:37:36 INFO MapOutputTrackerMasterEndpoint: Asked to send map output locations for shuffle 1 to 15.48.91.106:44396

21/05/25 19:37:36 INFO TaskSetManager: Starting task 18.0 in stage 2.0 (TID 19, hd45.jnwj.com, executor 1, partition 18, PROCESS_LOCAL, 7766 bytes)

21/05/25 19:37:36 INFO TaskSetManager: Finished task 17.0 in stage 2.0 (TID 18) in 21 ms on hd45.jnwj.com (executor 1) (17/32)

21/05/25 19:37:36 INFO TaskSetManager: Starting task 19.0 in stage 2.0 (TID 20, hd45.jnwj.com, executor 1, partition 19, PROCESS_LOCAL, 7766 bytes)

21/05/25 19:37:36 INFO TaskSetManager: Finished task 18.0 in stage 2.0 (TID 19) in 24 ms on hd45.jnwj.com (executor 1) (18/32)

21/05/25 19:37:36 INFO TaskSetManager: Starting task 20.0 in stage 2.0 (TID 21, hd45.jnwj.com, executor 1, partition 20, PROCESS_LOCAL, 7766 bytes)

21/05/25 19:37:36 INFO TaskSetManager: Finished task 19.0 in stage 2.0 (TID 20) in 23 ms on hd45.jnwj.com (executor 1) (19/32)

21/05/25 19:37:36 INFO TaskSetManager: Starting task 21.0 in stage 2.0 (TID 22, hd45.jnwj.com, executor 1, partition 21, PROCESS_LOCAL, 7766 bytes)

21/05/25 19:37:36 INFO TaskSetManager: Finished task 20.0 in stage 2.0 (TID 21) in 22 ms on hd45.jnwj.com (executor 1) (20/32)

21/05/25 19:37:36 INFO TaskSetManager: Starting task 22.0 in stage 2.0 (TID 23, hd45.jnwj.com, executor 1, partition 22, PROCESS_LOCAL, 7766 bytes)

21/05/25 19:37:36 INFO TaskSetManager: Finished task 21.0 in stage 2.0 (TID 22) in 24 ms on hd45.jnwj.com (executor 1) (21/32)

21/05/25 19:37:36 INFO TaskSetManager: Starting task 23.0 in stage 2.0 (TID 24, hd45.jnwj.com, executor 1, partition 23, PROCESS_LOCAL, 7766 bytes)

21/05/25 19:37:36 INFO TaskSetManager: Finished task 22.0 in stage 2.0 (TID 23) in 27 ms on hd45.jnwj.com (executor 1) (22/32)

21/05/25 19:37:36 INFO TaskSetManager: Starting task 24.0 in stage 2.0 (TID 25, hd45.jnwj.com, executor 1, partition 24, PROCESS_LOCAL, 7766 bytes)

21/05/25 19:37:36 INFO TaskSetManager: Finished task 23.0 in stage 2.0 (TID 24) in 26 ms on hd45.jnwj.com (executor 1) (23/32)

21/05/25 19:37:36 INFO TaskSetManager: Starting task 25.0 in stage 2.0 (TID 26, hd45.jnwj.com, executor 1, partition 25, PROCESS_LOCAL, 7766 bytes)

21/05/25 19:37:36 INFO TaskSetManager: Finished task 24.0 in stage 2.0 (TID 25) in 23 ms on hd45.jnwj.com (executor 1) (24/32)

21/05/25 19:37:36 INFO TaskSetManager: Starting task 26.0 in stage 2.0 (TID 27, hd45.jnwj.com, executor 1, partition 26, PROCESS_LOCAL, 7766 bytes)

21/05/25 19:37:36 INFO TaskSetManager: Finished task 25.0 in stage 2.0 (TID 26) in 22 ms on hd45.jnwj.com (executor 1) (25/32)

21/05/25 19:37:36 INFO TaskSetManager: Starting task 27.0 in stage 2.0 (TID 28, hd45.jnwj.com, executor 1, partition 27, PROCESS_LOCAL, 7766 bytes)

21/05/25 19:37:36 INFO TaskSetManager: Finished task 26.0 in stage 2.0 (TID 27) in 25 ms on hd45.jnwj.com (executor 1) (26/32)

21/05/25 19:37:36 INFO TaskSetManager: Starting task 28.0 in stage 2.0 (TID 29, hd45.jnwj.com, executor 1, partition 28, PROCESS_LOCAL, 7766 bytes)

21/05/25 19:37:36 INFO TaskSetManager: Finished task 27.0 in stage 2.0 (TID 28) in 23 ms on hd45.jnwj.com (executor 1) (27/32)

21/05/25 19:37:36 INFO TaskSetManager: Starting task 29.0 in stage 2.0 (TID 30, hd45.jnwj.com, executor 1, partition 29, PROCESS_LOCAL, 7766 bytes)

21/05/25 19:37:36 INFO TaskSetManager: Finished task 28.0 in stage 2.0 (TID 29) in 19 ms on hd45.jnwj.com (executor 1) (28/32)

21/05/25 19:37:36 INFO TaskSetManager: Starting task 30.0 in stage 2.0 (TID 31, hd45.jnwj.com, executor 1, partition 30, PROCESS_LOCAL, 7766 bytes)

21/05/25 19:37:36 INFO TaskSetManager: Finished task 29.0 in stage 2.0 (TID 30) in 19 ms on hd45.jnwj.com (executor 1) (29/32)

21/05/25 19:37:36 INFO TaskSetManager: Starting task 31.0 in stage 2.0 (TID 32, hd45.jnwj.com, executor 1, partition 31, PROCESS_LOCAL, 7766 bytes)

21/05/25 19:37:36 INFO TaskSetManager: Finished task 30.0 in stage 2.0 (TID 31) in 18 ms on hd45.jnwj.com (executor 1) (30/32)

21/05/25 19:37:36 INFO TaskSetManager: Finished task 31.0 in stage 2.0 (TID 32) in 19 ms on hd45.jnwj.com (executor 1) (31/32)

21/05/25 19:37:38 INFO TaskSetManager: Finished task 1.0 in stage 2.0 (TID 2) in 3663 ms on hd49.jnwj.com (executor 2) (32/32)

21/05/25 19:37:38 INFO YarnScheduler: Removed TaskSet 2.0, whose tasks have all completed, from pool

21/05/25 19:37:38 INFO DAGScheduler: ResultStage 2 (foreachPartition at VerticesProcessor.scala:274) finished in 3.710 s

21/05/25 19:37:38 INFO DAGScheduler: Job 0 finished: foreachPartition at VerticesProcessor.scala:274, took 8.276100 s

21/05/25 19:37:38 INFO Exchange$: Client-Import: batchSuccess.person: 0

21/05/25 19:37:38 INFO Exchange$: Client-Import: batchFailure.person: 0

21/05/25 19:37:38 WARN Exchange$: Edge is not defined

21/05/25 19:37:39 INFO FileSourceStrategy: Pruning directories with:

21/05/25 19:37:39 INFO FileSourceStrategy: Post-Scan Filters:

21/05/25 19:37:39 INFO FileSourceStrategy: Output Data Schema: struct<value: string>

21/05/25 19:37:39 INFO FileSourceScanExec: Pushed Filters:

21/05/25 19:37:39 INFO CodeGenerator: Code generated in 20.12698 ms

21/05/25 19:37:39 INFO MemoryStore: Block broadcast_3 stored as values in memory (estimated size 446.9 KB, free 365.3 MB)

21/05/25 19:37:39 INFO MemoryStore: Block broadcast_3_piece0 stored as bytes in memory (estimated size 47.0 KB, free 365.3 MB)

21/05/25 19:37:39 INFO BlockManagerInfo: Added broadcast_3_piece0 in memory on 15.48.83.120:41896 (size: 47.0 KB, free: 366.2 MB)

21/05/25 19:37:39 INFO SparkContext: Created broadcast 3 from foreachPartition at ReloadProcessor.scala:24

21/05/25 19:37:39 INFO FileSourceScanExec: Planning scan with bin packing, max size: 134217728 bytes, open cost is considered as scanning 4194304 bytes.

21/05/25 19:37:39 INFO SparkContext: Starting job: foreachPartition at ReloadProcessor.scala:24

21/05/25 19:37:39 INFO DAGScheduler: Got job 1 (foreachPartition at ReloadProcessor.scala:24) with 788 output partitions

21/05/25 19:37:39 INFO DAGScheduler: Final stage: ResultStage 3 (foreachPartition at ReloadProcessor.scala:24)

21/05/25 19:37:39 INFO DAGScheduler: Parents of final stage: List()

21/05/25 19:37:39 INFO DAGScheduler: Missing parents: List()

21/05/25 19:37:39 INFO DAGScheduler: Submitting ResultStage 3 (MapPartitionsRDD[14] at foreachPartition at ReloadProcessor.scala:24), which has no missing parents

21/05/25 19:37:39 INFO MemoryStore: Block broadcast_4 stored as values in memory (estimated size 17.1 KB, free 365.3 MB)

21/05/25 19:37:39 INFO MemoryStore: Block broadcast_4_piece0 stored as bytes in memory (estimated size 8.8 KB, free 365.2 MB)

21/05/25 19:37:39 INFO BlockManagerInfo: Added broadcast_4_piece0 in memory on 15.48.83.120:41896 (size: 8.8 KB, free: 366.2 MB)

21/05/25 19:37:39 INFO SparkContext: Created broadcast 4 from broadcast at DAGScheduler.scala:1039

21/05/25 19:37:39 INFO DAGScheduler: Submitting 788 missing tasks from ResultStage 3 (MapPartitionsRDD[14] at foreachPartition at ReloadProcessor.scala:24) (first 15 tasks are for partitions Vector(0, 1, 2, 3, 4, 5, 6, 7, 8, 9, 10, 11, 12, 13, 14))

21/05/25 19:37:39 INFO YarnScheduler: Adding task set 3.0 with 788 tasks

21/05/25 19:37:41 INFO ContextCleaner: Cleaned accumulator 3

21/05/25 19:37:41 INFO ContextCleaner: Cleaned accumulator 20

21/05/25 19:37:41 INFO ContextCleaner: Cleaned shuffle 1

21/05/25 19:37:41 INFO ContextCleaner: Cleaned accumulator 68

21/05/25 19:37:41 INFO ContextCleaner: Cleaned accumulator 39

21/05/25 19:37:41 INFO ContextCleaner: Cleaned accumulator 61

21/05/25 19:37:41 INFO ContextCleaner: Cleaned accumulator 33

21/05/25 19:37:41 INFO ContextCleaner: Cleaned accumulator 74

21/05/25 19:37:41 INFO ContextCleaner: Cleaned accumulator 67

21/05/25 19:37:41 INFO ContextCleaner: Cleaned accumulator 23

21/05/25 19:37:41 INFO ContextCleaner: Cleaned accumulator 47

21/05/25 19:37:41 INFO ContextCleaner: Cleaned accumulator 62

21/05/25 19:37:41 INFO ContextCleaner: Cleaned accumulator 59

21/05/25 19:37:41 INFO ContextCleaner: Cleaned accumulator 35

21/05/25 19:37:41 INFO ContextCleaner: Cleaned accumulator 21

21/05/25 19:37:41 INFO ContextCleaner: Cleaned accumulator 49

21/05/25 19:37:41 INFO ContextCleaner: Cleaned accumulator 51

21/05/25 19:37:41 INFO ContextCleaner: Cleaned shuffle 0

21/05/25 19:37:41 INFO ContextCleaner: Cleaned accumulator 65

21/05/25 19:37:41 INFO BlockManagerInfo: Removed broadcast_1_piece0 on 15.48.83.120:41896 in memory (size: 8.9 KB, free: 366.2 MB)

21/05/25 19:37:41 INFO BlockManagerInfo: Removed broadcast_1_piece0 on hd45.jnwj.com:43397 in memory (size: 8.9 KB, free: 2.5 GB)

21/05/25 19:37:41 INFO ContextCleaner: Cleaned accumulator 26

21/05/25 19:37:41 INFO ContextCleaner: Cleaned accumulator 12

21/05/25 19:37:41 INFO ContextCleaner: Cleaned accumulator 28

21/05/25 19:37:41 INFO ContextCleaner: Cleaned accumulator 45

21/05/25 19:37:41 INFO ContextCleaner: Cleaned accumulator 15

21/05/25 19:37:41 INFO ContextCleaner: Cleaned accumulator 30

21/05/25 19:37:41 INFO ContextCleaner: Cleaned accumulator 58

21/05/25 19:37:41 INFO ContextCleaner: Cleaned accumulator 72

21/05/25 19:37:41 INFO ContextCleaner: Cleaned accumulator 60

21/05/25 19:37:41 INFO ContextCleaner: Cleaned accumulator 32

21/05/25 19:37:41 INFO ContextCleaner: Cleaned accumulator 16

21/05/25 19:37:41 INFO ContextCleaner: Cleaned accumulator 38

21/05/25 19:37:41 INFO ContextCleaner: Cleaned accumulator 56

21/05/25 19:37:41 INFO ContextCleaner: Cleaned accumulator 53

21/05/25 19:37:41 INFO ContextCleaner: Cleaned accumulator 57

21/05/25 19:37:41 INFO ContextCleaner: Cleaned accumulator 22

21/05/25 19:37:41 INFO ContextCleaner: Cleaned accumulator 25

21/05/25 19:37:41 INFO ContextCleaner: Cleaned accumulator 52

21/05/25 19:37:41 INFO ContextCleaner: Cleaned accumulator 17

21/05/25 19:37:41 INFO ContextCleaner: Cleaned accumulator 43

21/05/25 19:37:41 INFO ContextCleaner: Cleaned accumulator 46

21/05/25 19:37:41 INFO ContextCleaner: Cleaned accumulator 48

21/05/25 19:37:41 INFO ContextCleaner: Cleaned accumulator 37

21/05/25 19:37:41 INFO BlockManagerInfo: Removed broadcast_0_piece0 on 15.48.83.120:41896 in memory (size: 47.2 KB, free: 366.2 MB)

21/05/25 19:37:41 INFO ContextCleaner: Cleaned accumulator 9

21/05/25 19:37:41 INFO ContextCleaner: Cleaned accumulator 66

21/05/25 19:37:41 INFO ContextCleaner: Cleaned accumulator 69

21/05/25 19:37:41 INFO ContextCleaner: Cleaned accumulator 19

21/05/25 19:37:41 INFO ContextCleaner: Cleaned accumulator 40

21/05/25 19:37:41 INFO ContextCleaner: Cleaned accumulator 31

21/05/25 19:37:41 INFO ContextCleaner: Cleaned accumulator 75

21/05/25 19:37:41 INFO ContextCleaner: Cleaned accumulator 1

21/05/25 19:37:41 INFO ContextCleaner: Cleaned accumulator 64

21/05/25 19:37:41 INFO ContextCleaner: Cleaned accumulator 50

21/05/25 19:37:41 INFO ContextCleaner: Cleaned accumulator 27

21/05/25 19:37:41 INFO BlockManagerInfo: Removed broadcast_2_piece0 on 15.48.83.120:41896 in memory (size: 11.0 KB, free: 366.2 MB)

21/05/25 19:37:41 INFO BlockManagerInfo: Removed broadcast_2_piece0 on hd45.jnwj.com:43397 in memory (size: 11.0 KB, free: 2.5 GB)

21/05/25 19:37:41 INFO BlockManagerInfo: Removed broadcast_2_piece0 on hd49.jnwj.com:38845 in memory (size: 11.0 KB, free: 2.5 GB)

21/05/25 19:37:41 INFO ContextCleaner: Cleaned accumulator 63

21/05/25 19:37:41 INFO ContextCleaner: Cleaned accumulator 44

21/05/25 19:37:41 INFO ContextCleaner: Cleaned accumulator 73

21/05/25 19:37:41 INFO ContextCleaner: Cleaned accumulator 34

21/05/25 19:37:41 INFO ContextCleaner: Cleaned accumulator 0

21/05/25 19:37:41 INFO ContextCleaner: Cleaned accumulator 71

21/05/25 19:37:41 INFO ContextCleaner: Cleaned accumulator 29

21/05/25 19:37:41 INFO ContextCleaner: Cleaned accumulator 11

21/05/25 19:37:41 INFO ContextCleaner: Cleaned accumulator 10

21/05/25 19:37:41 INFO ContextCleaner: Cleaned accumulator 7

21/05/25 19:37:41 INFO ContextCleaner: Cleaned accumulator 55

21/05/25 19:37:41 INFO ContextCleaner: Cleaned accumulator 76

21/05/25 19:37:41 INFO ContextCleaner: Cleaned accumulator 24

21/05/25 19:37:41 INFO ContextCleaner: Cleaned accumulator 36

21/05/25 19:37:41 INFO ContextCleaner: Cleaned accumulator 14

21/05/25 19:37:41 INFO ContextCleaner: Cleaned accumulator 54

21/05/25 19:37:41 INFO ContextCleaner: Cleaned accumulator 6

21/05/25 19:37:41 INFO ContextCleaner: Cleaned accumulator 13

21/05/25 19:37:41 INFO ContextCleaner: Cleaned accumulator 18

21/05/25 19:37:41 INFO ContextCleaner: Cleaned accumulator 70

21/05/25 19:37:41 INFO ContextCleaner: Cleaned accumulator 42

21/05/25 19:37:41 INFO ContextCleaner: Cleaned accumulator 41

21/05/25 19:37:41 INFO TaskSetManager: Starting task 24.0 in stage 3.0 (TID 33, hd49.jnwj.com, executor 2, partition 24, NODE_LOCAL, 8314 bytes)

21/05/25 19:37:41 INFO TaskSetManager: Starting task 117.0 in stage 3.0 (TID 34, hd45.jnwj.com, executor 1, partition 117, NODE_LOCAL, 8315 bytes)

21/05/25 19:37:41 INFO BlockManagerInfo: Added broadcast_4_piece0 in memory on hd45.jnwj.com:43397 (size: 8.8 KB, free: 2.5 GB)

21/05/25 19:37:41 INFO BlockManagerInfo: Added broadcast_4_piece0 in memory on hd49.jnwj.com:38845 (size: 8.8 KB, free: 2.5 GB)

21/05/25 19:37:41 INFO BlockManagerInfo: Added broadcast_3_piece0 in memory on hd45.jnwj.com:43397 (size: 47.0 KB, free: 2.5 GB)

21/05/25 19:37:41 INFO BlockManagerInfo: Added broadcast_3_piece0 in memory on hd49.jnwj.com:38845 (size: 47.0 KB, free: 2.5 GB)

21/05/25 19:37:56 INFO TaskSetManager: Starting task 216.0 in stage 3.0 (TID 35, hd45.jnwj.com, executor 1, partition 216, NODE_LOCAL, 8315 bytes)

21/05/25 19:37:56 INFO TaskSetManager: Finished task 117.0 in stage 3.0 (TID 34) in 14226 ms on hd45.jnwj.com (executor 1) (1/788)

21/05/25 19:38:01 INFO TaskSetManager: Starting task 25.0 in stage 3.0 (TID 36, hd49.jnwj.com, executor 2, partition 25, NODE_LOCAL, 8314 bytes)

21/05/25 19:38:01 INFO TaskSetManager: Finished task 24.0 in stage 3.0 (TID 33) in 19215 ms on hd49.jnwj.com (executor 2) (2/788)

21/05/25 19:38:09 INFO TaskSetManager: Starting task 296.0 in stage 3.0 (TID 37, hd45.jnwj.com, executor 1, partition 296, NODE_LOCAL, 8315 bytes)

21/05/25 19:38:09 INFO TaskSetManager: Finished task 216.0 in stage 3.0 (TID 35) in 13229 ms on hd45.jnwj.com (executor 1) (3/788)

21/05/25 19:38:19 INFO TaskSetManager: Starting task 32.0 in stage 3.0 (TID 38, hd49.jnwj.com, executor 2, partition 32, NODE_LOCAL, 8315 bytes)

21/05/25 19:38:19 INFO TaskSetManager: Finished task 25.0 in stage 3.0 (TID 36) in 18414 ms on hd49.jnwj.com (executor 2) (4/788)

21/05/25 19:38:21 INFO TaskSetManager: Starting task 332.0 in stage 3.0 (TID 39, hd45.jnwj.com, executor 1, partition 332, NODE_LOCAL, 8315 bytes)

21/05/25 19:38:21 INFO TaskSetManager: Finished task 296.0 in stage 3.0 (TID 37) in 12118 ms on hd45.jnwj.com (executor 1) (5/788)

21/05/25 19:38:33 INFO TaskSetManager: Starting task 516.0 in stage 3.0 (TID 40, hd45.jnwj.com, executor 1, partition 516, NODE_LOCAL, 8315 bytes)

21/05/25 19:38:33 INFO TaskSetManager: Finished task 332.0 in stage 3.0 (TID 39) in 11673 ms on hd45.jnwj.com (executor 1) (6/788)

21/05/25 19:38:36 INFO TaskSetManager: Starting task 36.0 in stage 3.0 (TID 41, hd49.jnwj.com, executor 2, partition 36, NODE_LOCAL, 8314 bytes)

21/05/25 19:38:36 INFO TaskSetManager: Finished task 32.0 in stage 3.0 (TID 38) in 16669 ms on hd49.jnwj.com (executor 2) (7/788)

21/05/25 19:38:43 INFO TaskSetManager: Starting task 554.0 in stage 3.0 (TID 42, hd45.jnwj.com, executor 1, partition 554, NODE_LOCAL, 8315 bytes)

21/05/25 19:38:43 INFO TaskSetManager: Finished task 516.0 in stage 3.0 (TID 40) in 10851 ms on hd45.jnwj.com (executor 1) (8/788)

21/05/25 19:38:48 INFO Client: Deleted staging directory hdfs://mycluster/user/renzixing/.sparkStaging/application_1619435766040_41321

21/05/25 19:38:48 ERROR YarnClientSchedulerBackend: Yarn application has already exited with state KILLED!

21/05/25 19:38:48 INFO AbstractConnector: Stopped Spark@2c42b421{HTTP/1.1,[http/1.1]}{0.0.0.0:4042}

21/05/25 19:38:48 INFO SparkUI: Stopped Spark web UI at http://15.48.83.120:4042

21/05/25 19:38:48 INFO DAGScheduler: ResultStage 3 (foreachPartition at ReloadProcessor.scala:24) failed in 69.317 s due to Stage cancelled because SparkContext was shut down

21/05/25 19:38:48 INFO DAGScheduler: Job 1 failed: foreachPartition at ReloadProcessor.scala:24, took 69.395599 s

Exception in thread "main" org.apache.spark.SparkException: Job 1 cancelled because SparkContext was shut down

at org.apache.spark.scheduler.DAGScheduler$$anonfun$cleanUpAfterSchedulerStop$1.apply(DAGScheduler.scala:837)

at org.apache.spark.scheduler.DAGScheduler$$anonfun$cleanUpAfterSchedulerStop$1.apply(DAGScheduler.scala:835)

at scala.collection.mutable.HashSet.foreach(HashSet.scala:78)

at org.apache.spark.scheduler.DAGScheduler.cleanUpAfterSchedulerStop(DAGScheduler.scala:835)

at org.apache.spark.scheduler.DAGSchedulerEventProcessLoop.onStop(DAGScheduler.scala:1848)

at org.apache.spark.util.EventLoop.stop(EventLoop.scala:83)

at org.apache.spark.scheduler.DAGScheduler.stop(DAGScheduler.scala:1761)

at org.apache.spark.SparkContext$$anonfun$stop$8.apply$mcV$sp(SparkContext.scala:1931)

at org.apache.spark.util.Utils$.tryLogNonFatalError(Utils.scala:1361)

at org.apache.spark.SparkContext.stop(SparkContext.scala:1930)

at org.apache.spark.scheduler.cluster.YarnClientSchedulerBackend$MonitorThread.run(YarnClientSchedulerBackend.scala:112)

at org.apache.spark.scheduler.DAGScheduler.runJob(DAGScheduler.scala:642)

at org.apache.spark.SparkContext.runJob(SparkContext.scala:2034)

at org.apache.spark.SparkContext.runJob(SparkContext.scala:2055)

at org.apache.spark.SparkContext.runJob(SparkContext.scala:2074)

at org.apache.spark.SparkContext.runJob(SparkContext.scala:2099)

at org.apache.spark.rdd.RDD$$anonfun$foreachPartition$1.apply(RDD.scala:929)

at org.apache.spark.rdd.RDD$$anonfun$foreachPartition$1.apply(RDD.scala:927)

at org.apache.spark.rdd.RDDOperationScope$.withScope(RDDOperationScope.scala:151)

at org.apache.spark.rdd.RDDOperationScope$.withScope(RDDOperationScope.scala:112)

at org.apache.spark.rdd.RDD.withScope(RDD.scala:363)

at org.apache.spark.rdd.RDD.foreachPartition(RDD.scala:927)

at org.apache.spark.sql.Dataset$$anonfun$foreachPartition$1.apply$mcV$sp(Dataset.scala:2680)

at org.apache.spark.sql.Dataset$$anonfun$foreachPartition$1.apply(Dataset.scala:2680)

at org.apache.spark.sql.Dataset$$anonfun$foreachPartition$1.apply(Dataset.scala:2680)

at org.apache.spark.sql.Dataset$$anonfun$withNewRDDExecutionId$1.apply(Dataset.scala:3244)

at org.apache.spark.sql.execution.SQLExecution$.withNewExecutionId(SQLExecution.scala:77)

at org.apache.spark.sql.Dataset.withNewRDDExecutionId(Dataset.scala:3240)

at org.apache.spark.sql.Dataset.foreachPartition(Dataset.scala:2679)

at com.vesoft.nebula.exchange.processor.ReloadProcessor.process(ReloadProcessor.scala:24)

at com.vesoft.nebula.exchange.Exchange$.main(Exchange.scala:200)

at com.vesoft.nebula.exchange.Exchange.main(Exchange.scala)

at sun.reflect.NativeMethodAccessorImpl.invoke0(Native Method)

at sun.reflect.NativeMethodAccessorImpl.invoke(NativeMethodAccessorImpl.java:62)

at sun.reflect.DelegatingMethodAccessorImpl.invoke(DelegatingMethodAccessorImpl.java:43)

at java.lang.reflect.Method.invoke(Method.java:498)

at org.apache.spark.deploy.JavaMainApplication.start(SparkApplication.scala:52)

at org.apache.spark.deploy.SparkSubmit$.org$apache$spark$deploy$SparkSubmit$$runMain(SparkSubmit.scala:904)

at org.apache.spark.deploy.SparkSubmit$.doRunMain$1(SparkSubmit.scala:198)

at org.apache.spark.deploy.SparkSubmit$.submit(SparkSubmit.scala:228)

at org.apache.spark.deploy.SparkSubmit$.main(SparkSubmit.scala:137)

at org.apache.spark.deploy.SparkSubmit.main(SparkSubmit.scala)

21/05/25 19:38:48 ERROR TransportClient: Failed to send RPC 6557563533105013209 to /15.48.91.73:53674: java.nio.channels.ClosedChannelException

java.nio.channels.ClosedChannelException

at io.netty.channel.AbstractChannel$AbstractUnsafe.write(...)(Unknown Source)

21/05/25 19:38:48 INFO DiskBlockManager: Shutdown hook called

21/05/25 19:38:48 ERROR YarnSchedulerBackend$YarnSchedulerEndpoint: Sending RequestExecutors(0,0,Map(),Set()) to AM was unsuccessful

java.io.IOException: Failed to send RPC 6557563533105013209 to /15.48.91.73:53674: java.nio.channels.ClosedChannelException

at org.apache.spark.network.client.TransportClient.lambda$sendRpc$2(TransportClient.java:237)

at io.netty.util.concurrent.DefaultPromise.notifyListener0(DefaultPromise.java:507)

at io.netty.util.concurrent.DefaultPromise.notifyListenersNow(DefaultPromise.java:481)

at io.netty.util.concurrent.DefaultPromise.notifyListeners(DefaultPromise.java:420)

at io.netty.util.concurrent.DefaultPromise.tryFailure(DefaultPromise.java:122)

at io.netty.channel.AbstractChannel$AbstractUnsafe.safeSetFailure(AbstractChannel.java:987)

at io.netty.channel.AbstractChannel$AbstractUnsafe.write(AbstractChannel.java:869)

at io.netty.channel.DefaultChannelPipeline$HeadContext.write(DefaultChannelPipeline.java:1316)

at io.netty.channel.AbstractChannelHandlerContext.invokeWrite0(AbstractChannelHandlerContext.java:738)

at io.netty.channel.AbstractChannelHandlerContext.invokeWrite(AbstractChannelHandlerContext.java:730)

at io.netty.channel.AbstractChannelHandlerContext.access$1900(AbstractChannelHandlerContext.java:38)

at io.netty.channel.AbstractChannelHandlerContext$AbstractWriteTask.write(AbstractChannelHandlerContext.java:1081)

at io.netty.channel.AbstractChannelHandlerContext$WriteAndFlushTask.write(AbstractChannelHandlerContext.java:1128)

at io.netty.channel.AbstractChannelHandlerContext$AbstractWriteTask.run(AbstractChannelHandlerContext.java:1070)

at io.netty.util.concurrent.AbstractEventExecutor.safeExecute(AbstractEventExecutor.java:163)

at io.netty.util.concurrent.SingleThreadEventExecutor.runAllTasks(SingleThreadEventExecutor.java:403)

at io.netty.channel.nio.NioEventLoop.run(NioEventLoop.java:463)

at io.netty.util.concurrent.SingleThreadEventExecutor$5.run(SingleThreadEventExecutor.java:858)

at io.netty.util.concurrent.DefaultThreadFactory$DefaultRunnableDecorator.run(DefaultThreadFactory.java:138)

at java.lang.Thread.run(Thread.java:745)

Caused by: java.nio.channels.ClosedChannelException

at io.netty.channel.AbstractChannel$AbstractUnsafe.write(...)(Unknown Source)

21/05/25 19:38:48 INFO SchedulerExtensionServices: Stopping SchedulerExtensionServices

(serviceOption=None,

services=List(),

started=false)

21/05/25 19:38:48 ERROR Utils: Uncaught exception in thread Yarn application state monitor

org.apache.spark.SparkException: Exception thrown in awaitResult:

at org.apache.spark.util.ThreadUtils$.awaitResult(ThreadUtils.scala:205)

at org.apache.spark.rpc.RpcTimeout.awaitResult(RpcTimeout.scala:75)

at org.apache.spark.scheduler.cluster.CoarseGrainedSchedulerBackend.requestTotalExecutors(CoarseGrainedSchedulerBackend.scala:565)

at org.apache.spark.scheduler.cluster.YarnSchedulerBackend.stop(YarnSchedulerBackend.scala:95)

at org.apache.spark.scheduler.cluster.YarnClientSchedulerBackend.stop(YarnClientSchedulerBackend.scala:155)

at org.apache.spark.scheduler.TaskSchedulerImpl.stop(TaskSchedulerImpl.scala:508)

at org.apache.spark.scheduler.DAGScheduler.stop(DAGScheduler.scala:1762)

at org.apache.spark.SparkContext$$anonfun$stop$8.apply$mcV$sp(SparkContext.scala:1931)

at org.apache.spark.util.Utils$.tryLogNonFatalError(Utils.scala:1361)

at org.apache.spark.SparkContext.stop(SparkContext.scala:1930)

at org.apache.spark.scheduler.cluster.YarnClientSchedulerBackend$MonitorThread.run(YarnClientSchedulerBackend.scala:112)

Caused by: java.io.IOException: Failed to send RPC 6557563533105013209 to /15.48.91.73:53674: java.nio.channels.ClosedChannelException

at org.apache.spark.network.client.TransportClient.lambda$sendRpc$2(TransportClient.java:237)

at io.netty.util.concurrent.DefaultPromise.notifyListener0(DefaultPromise.java:507)

at io.netty.util.concurrent.DefaultPromise.notifyListenersNow(DefaultPromise.java:481)

at io.netty.util.concurrent.DefaultPromise.notifyListeners(DefaultPromise.java:420)

at io.netty.util.concurrent.DefaultPromise.tryFailure(DefaultPromise.java:122)

at io.netty.channel.AbstractChannel$AbstractUnsafe.safeSetFailure(AbstractChannel.java:987)

at io.netty.channel.AbstractChannel$AbstractUnsafe.write(AbstractChannel.java:869)

at io.netty.channel.DefaultChannelPipeline$HeadContext.write(DefaultChannelPipeline.java:1316)

at io.netty.channel.AbstractChannelHandlerContext.invokeWrite0(AbstractChannelHandlerContext.java:738)

at io.netty.channel.AbstractChannelHandlerContext.invokeWrite(AbstractChannelHandlerContext.java:730)

at io.netty.channel.AbstractChannelHandlerContext.access$1900(AbstractChannelHandlerContext.java:38)

at io.netty.channel.AbstractChannelHandlerContext$AbstractWriteTask.write(AbstractChannelHandlerContext.java:1081)

at io.netty.channel.AbstractChannelHandlerContext$WriteAndFlushTask.write(AbstractChannelHandlerContext.java:1128)

at io.netty.channel.AbstractChannelHandlerContext$AbstractWriteTask.run(AbstractChannelHandlerContext.java:1070)

at io.netty.util.concurrent.AbstractEventExecutor.safeExecute(AbstractEventExecutor.java:163)

at io.netty.util.concurrent.SingleThreadEventExecutor.runAllTasks(SingleThreadEventExecutor.java:403)

at io.netty.channel.nio.NioEventLoop.run(NioEventLoop.java:463)

at io.netty.util.concurrent.SingleThreadEventExecutor$5.run(SingleThreadEventExecutor.java:858)

at io.netty.util.concurrent.DefaultThreadFactory$DefaultRunnableDecorator.run(DefaultThreadFactory.java:138)

at java.lang.Thread.run(Thread.java:745)

Caused by: java.nio.channels.ClosedChannelException

at io.netty.channel.AbstractChannel$AbstractUnsafe.write(...)(Unknown Source)

21/05/25 19:38:48 INFO ShutdownHookManager: Shutdown hook called

21/05/25 19:38:48 INFO ShutdownHookManager: Deleting directory /tmp/spark-dddc41be-298b-4428-bcea-16ba5f2ca63e/userFiles-d6334bdb-d7f1-49d4-8597-477b5ffa3c0b

21/05/25 19:38:48 INFO ShutdownHookManager: Deleting directory /tmp/spark-dddc41be-298b-4428-bcea-16ba5f2ca63e

21/05/25 19:38:48 INFO ShutdownHookManager: Deleting directory /tmp/spark-4238b7c0-dc72-4450-9c3c-fd216632a8bd

21/05/25 19:38:48 INFO MapOutputTrackerMasterEndpoint: MapOutputTrackerMasterEndpoint stopped!

3. graphd log: almost all of the graphd log looks like this, so I only excerpted part of it.

E0525 19:19:08.781213 24324 QueryInstance.cpp:103] SemanticError: Space was not chosen.

E0525 19:19:08.782701 24324 QueryInstance.cpp:103] SemanticError: Space was not chosen.

E0525 19:19:08.784117 24322 QueryInstance.cpp:103] SemanticError: Space was not chosen.

E0525 19:19:08.785037 24324 QueryInstance.cpp:103] SyntaxError: syntax error near `15376405891'

E0525 19:19:08.786082 24324 QueryInstance.cpp:103] SemanticError: Space was not chosen.

E0525 19:19:08.787611 24324 QueryInstance.cpp:103] SemanticError: Space was not chosen.

E0525 19:19:08.788903 24322 QueryInstance.cpp:103] SemanticError: Space was not chosen.

E0525 19:19:08.790503 24324 QueryInstance.cpp:103] SemanticError: Space was not chosen.

E0525 19:19:08.791666 24322 QueryInstance.cpp:103] SemanticError: Space was not chosen.

E0525 19:19:08.793943 24324 QueryInstance.cpp:103] SemanticError: Space was not chosen.

E0525 19:19:08.794353 24322 QueryInstance.cpp:103] SemanticError: Space was not chosen.

E0525 19:19:08.796730 24324 QueryInstance.cpp:103] SyntaxError: syntax error near `17561933518'

E0525 19:19:08.796844 24322 QueryInstance.cpp:103] SemanticError: Space was not chosen.

E0525 19:19:08.799230 24324 QueryInstance.cpp:103] SemanticError: Space was not chosen.

E0525 19:19:08.801036 24322 QueryInstance.cpp:103] SemanticError: Space was not chosen.

E0525 19:19:08.801076 24324 QueryInstance.cpp:103] SemanticError: Space was not chosen.

E0525 19:19:08.803828 24322 QueryInstance.cpp:103] SemanticError: Space was not chosen.

E0525 19:19:08.803889 24324 QueryInstance.cpp:103] SyntaxError: syntax error near `05393705091'

E0525 19:19:08.806629 24324 QueryInstance.cpp:103] SemanticError: Space was not chosen.

E0525 19:19:08.806720 24322 QueryInstance.cpp:103] SemanticError: Space was not chosen.

E0525 19:19:08.809177 24322 QueryInstance.cpp:103] SyntaxError: syntax error near `18562003819'

E0525 19:19:08.809362 24324 QueryInstance.cpp:103] SemanticError: Space was not chosen.

E0525 19:19:08.811933 24322 QueryInstance.cpp:103] SemanticError: Space was not chosen.

E0525 19:19:08.812822 24324 QueryInstance.cpp:103] SyntaxError: syntax error near `13326317471'

E0525 19:19:08.814317 24324 QueryInstance.cpp:103] SemanticError: Space was not chosen.

E0525 19:19:08.814323 24322 QueryInstance.cpp:103] SyntaxError: syntax error near `15665807507'

E0525 19:19:08.816725 24322 QueryInstance.cpp:103] SemanticError: Space was not chosen.

E0525 19:19:08.817937 24324 QueryInstance.cpp:103] SemanticError: Space was not chosen.

E0525 19:19:08.821043 24324 QueryInstance.cpp:103] SemanticError: Space was not chosen.

E0525 19:19:08.821056 24322 QueryInstance.cpp:103] SemanticError: Space was not chosen.

E0525 19:19:08.823621 24324 QueryInstance.cpp:103] SemanticError: Space was not chosen.

E0525 19:19:08.824661 24322 QueryInstance.cpp:103] SemanticError: Space was not chosen.

E0525 19:19:08.826087 24322 QueryInstance.cpp:103] SemanticError: Space was not chosen.

E0525 19:19:08.827024 24322 QueryInstance.cpp:103] SyntaxError: syntax error near `15589503165'

E0525 19:19:08.829061 24322 QueryInstance.cpp:103] SemanticError: Space was not chosen.

E0525 19:19:08.830559 24324 QueryInstance.cpp:103] SyntaxError: syntax error near `15053733905'

E0525 19:19:08.831840 24322 QueryInstance.cpp:103] SemanticError: Space was not chosen.

E0525 19:19:08.832046 24324 QueryInstance.cpp:103] SyntaxError: syntax error near `13376374668'

E0525 19:19:08.834772 24324 QueryInstance.cpp:103] SemanticError: Space was not chosen.

E0525 19:19:08.835785 24322 QueryInstance.cpp:103] SyntaxError: syntax error near `18954807874'

E0525 19:19:08.837450 24322 QueryInstance.cpp:103] SemanticError: Space was not chosen.

E0525 19:19:08.839440 24324 QueryInstance.cpp:103] SemanticError: Space was not chosen.

E0525 19:19:08.839922 24322 QueryInstance.cpp:103] SemanticError: Space was not chosen.

E0525 19:19:08.843144 24324 QueryInstance.cpp:103] SyntaxError: syntax error near `13336387645'

E0525 19:19:08.843868 24322 QueryInstance.cpp:103] SemanticError: Space was not chosen.

E0525 19:19:08.846611 24322 QueryInstance.cpp:103] SemanticError: Space was not chosen.

E0525 19:19:08.846889 24324 QueryInstance.cpp:103] SemanticError: Space was not chosen.

E0525 19:19:08.849915 24324 QueryInstance.cpp:103] SemanticError: Space was not chosen.

E0525 19:19:08.850497 24322 QueryInstance.cpp:103] SemanticError: Space was not chosen.

E0525 19:19:08.852622 24322 QueryInstance.cpp:103] SemanticError: Space was not chosen.

E0525 19:19:08.854949 24322 QueryInstance.cpp:103] SemanticError: Space was not chosen.

E0525 19:19:08.855023 24324 QueryInstance.cpp:103] SemanticError: Space was not chosen.

E0525 19:19:08.857440 24324 QueryInstance.cpp:103] SemanticError: Space was not chosen.

E0525 19:19:08.858532 24324 QueryInstance.cpp:103] SyntaxError: syntax error near `18554306987'

E0525 19:19:08.859875 24324 QueryInstance.cpp:103] SemanticError: Space was not chosen.

E0525 19:19:08.861647 24324 QueryInstance.cpp:103] SemanticError: Space was not chosen.

E0525 19:19:08.862659 24322 QueryInstance.cpp:103] SemanticError: Space was not chosen.

E0525 19:19:08.864928 24324 QueryInstance.cpp:103] SemanticError: Space was not chosen.

E0525 19:19:08.865387 24322 QueryInstance.cpp:103] SemanticError: Space was not chosen.

E0525 19:19:08.867455 24322 QueryInstance.cpp:103] SemanticError: Space was not chosen.

E0525 19:19:08.867779 24324 QueryInstance.cpp:103] SyntaxError: syntax error near `18053097864'

E0525 19:19:08.870184 24324 QueryInstance.cpp:103] SemanticError: Space was not chosen.

E0525 19:19:08.870607 24322 QueryInstance.cpp:103] SyntaxError: syntax error near `17763318289'

E0525 19:19:08.873368 24324 QueryInstance.cpp:103] SyntaxError: syntax error near `13062077161'

E0525 19:19:08.873435 24322 QueryInstance.cpp:103] SemanticError: Space was not chosen.

E0525 19:19:08.875516 24322 QueryInstance.cpp:103] SemanticError: Space was not chosen.

E0525 19:19:08.876510 24324 QueryInstance.cpp:103] SemanticError: Space was not chosen.

E0525 19:19:08.877488 24322 QueryInstance.cpp:103] SemanticError: Space was not chosen.

E0525 19:19:08.878563 24322 QueryInstance.cpp:103] SyntaxError: syntax error near `18653678172'

E0525 19:19:08.879544 24322 QueryInstance.cpp:103] SemanticError: Space was not chosen.

E0525 19:19:08.881227 24322 QueryInstance.cpp:103] SemanticError: Space was not chosen.

E0525 19:19:08.881865 24324 QueryInstance.cpp:103] SemanticError: Space was not chosen.

E0525 19:19:08.884081 24322 QueryInstance.cpp:103] SyntaxError: syntax error near `18553888068'

E0525 19:19:08.884301 24324 QueryInstance.cpp:103] SemanticError: Space was not chosen.

E0525 19:19:08.886404 24322 QueryInstance.cpp:103] SemanticError: Space was not chosen.

E0525 19:19:08.886868 24324 QueryInstance.cpp:103] SemanticError: Space was not chosen.

E0525 19:19:08.888646 24324 QueryInstance.cpp:103] SemanticError: Space was not chosen.

E0525 19:19:08.888854 24322 QueryInstance.cpp:103] SyntaxError: syntax error near `19153940396'

E0525 19:19:08.890980 24324 QueryInstance.cpp:103] SemanticError: Space was not chosen.

E0525 19:19:08.891438 24322 QueryInstance.cpp:103] SemanticError: Space was not chosen.

E0525 19:19:08.892854 24322 QueryInstance.cpp:103] SemanticError: Space was not chosen.

E0525 19:19:08.893833 24324 QueryInstance.cpp:103] SemanticError: Space was not chosen.