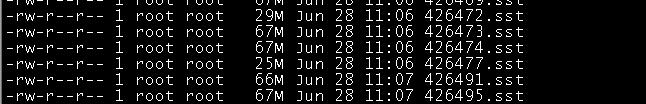

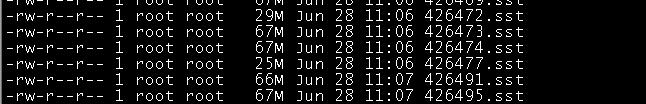

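Question 1: Why didn't the file size change? Are these the wrong two parameters? Also, how does the size of the .sst files affect query and write performance?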

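Question 2: With automatic compaction enabled, do I still need to run a full compaction? Last time, I imported 8.9 billion records with automatic compaction turned off, and the import failed with an error near the end. This time I turned automatic compaction on. So far I have imported 17 billion records and everything is still working normally.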