- Nebula version: v2.2.1

- Deployment (distributed / standalone / Docker / DBaaS): Docker

- Production environment: Y

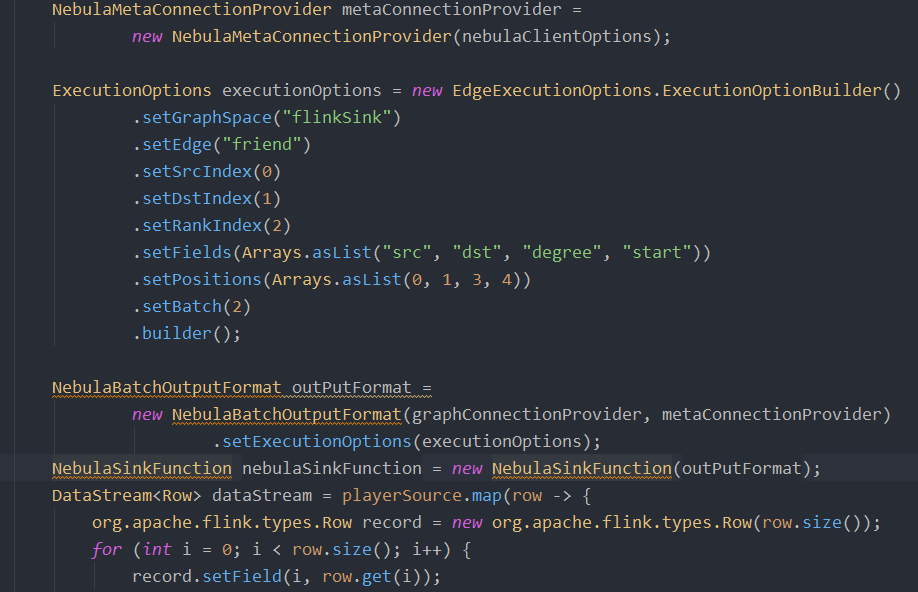

- Problem description: I created a new Java project, added nebula-flink-connector as a Maven dependency, and ran FlinkConnectorExample. It failed with the error shown in the log.

Log:

[2021-08-11T15:32:11.534+0800][INFO ][main][org.apache.flink.runtime.rest.RestServerEndpoint][|||][]Shutting down rest endpoint.

[2021-08-11T15:32:11.536+0800][ERROR][main][com.cmft.FlinkConnectorExample1][|||][]error when write Nebula Graph,

org.apache.flink.util.FlinkException: Could not create the DispatcherResourceManagerComponent.

at org.apache.flink.runtime.entrypoint.component.DefaultDispatcherResourceManagerComponentFactory.create(DefaultDispatcherResourceManagerComponentFactory.java:256)

at org.apache.flink.runtime.minicluster.MiniCluster.createDispatcherResourceManagerComponents(MiniCluster.java:412)

at org.apache.flink.runtime.minicluster.MiniCluster.setupDispatcherResourceManagerComponents(MiniCluster.java:378)

at org.apache.flink.runtime.minicluster.MiniCluster.start(MiniCluster.java:332)

at org.apache.flink.client.program.PerJobMiniClusterFactory.submitJob(PerJobMiniClusterFactory.java:87)

at org.apache.flink.client.deployment.executors.LocalExecutor.execute(LocalExecutor.java:81)

at org.apache.flink.streaming.api.environment.StreamExecutionEnvironment.executeAsync(StreamExecutionEnvironment.java:1818)

at org.apache.flink.streaming.api.environment.StreamExecutionEnvironment.execute(StreamExecutionEnvironment.java:1714)

at org.apache.flink.streaming.api.environment.LocalStreamEnvironment.execute(LocalStreamEnvironment.java:74)

at org.apache.flink.streaming.api.environment.StreamExecutionEnvironment.execute(StreamExecutionEnvironment.java:1700)

at com.cmft.FlinkConnectorExample1.sinkVertexData(FlinkConnectorExample1.java:93)

at com.cmft.FlinkConnectorExample1.main(FlinkConnectorExample1.java:25)

Caused by: org.apache.flink.util.FlinkRuntimeException: REST handler registration overlaps with another registration for: version=V1, method=GET, url=/jars/:jarid/plan.

at org.apache.flink.runtime.rest.RestServerEndpoint.checkAllEndpointsAndHandlersAreUnique(RestServerEndpoint.java:511)

at org.apache.flink.runtime.rest.RestServerEndpoint.start(RestServerEndpoint.java:158)

at org.apache.flink.runtime.entrypoint.component.DefaultDispatcherResourceManagerComponentFactory.create(DefaultDispatcherResourceManagerComponentFactory.java:163)

... 11 more

[2021-08-11T15:32:11.539+0800][INFO ][TransientBlobCache shutdown hook][org.apache.flink.runtime.blob.AbstractBlobCache][|||][]Shutting down BLOB cache

[2021-08-11T15:32:11.539+0800][INFO ][PermanentBlobCache shutdown hook][org.apache.flink.runtime.blob.AbstractBlobCache][|||][]Shutting down BLOB cache

[2021-08-11T15:32:11.539+0800][INFO ][TaskExecutorLocalStateStoresManager shutdown hook][org.apache.flink.runtime.state.TaskExecutorLocalStateStoresManager][|||][]Shutting down TaskExecutorLocalStateStoresManager.

[2021-08-11T15:32:11.545+0800][INFO ][FileCache shutdown hook][org.apache.flink.runtime.filecache.FileCache][|||][]removed file cache directory D:\Users\XIAOSY~1\AppData\Local\Temp\flink-dist-cache-653cfe13-02b1-4332-b632-9fe0e3ccec3e

[2021-08-11T15:32:11.546+0800][INFO ][BlobServer shutdown hook][org.apache.flink.runtime.blob.BlobServer][|||][]Stopped BLOB server at 0.0.0.0:64977

[2021-08-11T15:32:11.546+0800][INFO ][FileChannelManagerImpl-io shutdown hook][org.apache.flink.runtime.io.disk.FileChannelManagerImpl][|||][]FileChannelManager removed spill file directory D:\Users\XIAOSY~1\AppData\Local\Temp\flink-io-450794f3-df45-4a04-bbb0-e07ea1828c58

[2021-08-11T15:32:11.547+0800][INFO ][FileChannelManagerImpl-netty-shuffle shutdown hook][org.apache.flink.runtime.io.disk.FileChannelManagerImpl][|||][]FileChannelManager removed spill file directory D:\Users\XIAOSY~1\AppData\Local\Temp\flink-netty-shuffle-deffd7f6-cdf1-4949-956f-00fc02b89925

Process finished with exit code -1

Any help would be appreciated.