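- Nebula version: 2.6.1

- Deployment: single machine

- Installation method: Docker

- Production environment: N

- Hardware information

- Disk (SSD recommended)

- CPU and memory information

- Detailed description of the problem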

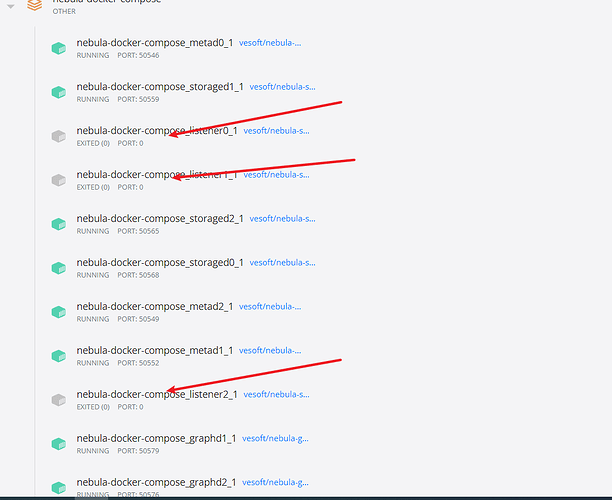

The listener fails to start.

Contents of docker-compose.yml:

    version: '2.2'
    services:
      metad0:
        image: vesoft/nebula-metad:v2.6.0
        environment:
          USER: root
          TZ: "${TZ}"
        command:
          - --meta_server_addrs=metad0:9559,metad1:9559,metad2:9559
          - --local_ip=metad0
          - --ws_ip=metad0
          - --port=9559
          - --ws_http_port=19559
          - --data_path=/data/meta
          - --log_dir=/logs
          - --v=0
          - --minloglevel=0
        healthcheck:
          test: ["CMD", "curl", "-sf", "http://metad0:19559/status"]
          interval: 30s
          timeout: 10s
          retries: 3
          start_period: 20s
        ports:
          - 9559
          - 19559
          - 19560
        volumes:
          - ${MNT}/data/meta0:/data/meta
          - ${MNT}/logs/meta0:/logs
        networks:
          - nebula-net
        restart: on-failure
        cap_add:
          - SYS_PTRACE

      metad1:
        image: vesoft/nebula-metad:v2.6.0
        environment:
          USER: root
          TZ: "${TZ}"
        command:
          - --meta_server_addrs=metad0:9559,metad1:9559,metad2:9559
          - --local_ip=metad1
          - --ws_ip=metad1
          - --port=9559
          - --ws_http_port=19559
          - --data_path=/data/meta
          - --log_dir=/logs
          - --v=0
          - --minloglevel=0
        healthcheck:
          test: ["CMD", "curl", "-sf", "http://metad1:19559/status"]
          interval: 30s
          timeout: 10s
          retries: 3
          start_period: 20s
        ports:
          - 9559
          - 19559
          - 19560
        volumes:
          - ${MNT}/data/meta1:/data/meta
          - ${MNT}/logs/meta1:/logs
        networks:
          - nebula-net
        restart: on-failure
        cap_add:
          - SYS_PTRACE

      metad2:
        image: vesoft/nebula-metad:v2.6.0
        environment:
          USER: root
          TZ: "${TZ}"
        command:
          - --meta_server_addrs=metad0:9559,metad1:9559,metad2:9559
          - --local_ip=metad2
          - --ws_ip=metad2
          - --port=9559
          - --ws_http_port=19559
          - --data_path=/data/meta
          - --log_dir=/logs
          - --v=0
          - --minloglevel=0
        healthcheck:
          test: ["CMD", "curl", "-sf", "http://metad2:19559/status"]
          interval: 30s
          timeout: 10s
          retries: 3
          start_period: 20s
        ports:
          - 9559
          - 19559
          - 19560
        volumes:
          - ${MNT}/data/meta2:/data/meta
          - ${MNT}/logs/meta2:/logs
        networks:
          - nebula-net
        restart: on-failure
        cap_add:
          - SYS_PTRACE

      storaged0:
        image: vesoft/nebula-storaged:v2.6.0
        environment:
          USER: root
          TZ: "${TZ}"
        command:
          - --meta_server_addrs=metad0:9559,metad1:9559,metad2:9559
          - --local_ip=storaged0
          - --ws_ip=storaged0
          - --port=9779
          - --ws_http_port=19779
          - --data_path=/data/storage
          - --log_dir=/logs
          - --v=0
          - --minloglevel=0
        depends_on:
          - metad0
          - metad1
          - metad2
        healthcheck:
          test: ["CMD", "curl", "-sf", "http://storaged0:19779/status"]
          interval: 30s
          timeout: 10s
          retries: 3
          start_period: 20s
        ports:
          - 9779
          - 19779
          - 19780
        volumes:
          - ${MNT}/data/storage0:/data/storage
          - ${MNT}/logs/storage0:/logs
        networks:
          - nebula-net
        restart: on-failure
        cap_add:
          - SYS_PTRACE

      storaged1:
        image: vesoft/nebula-storaged:v2.6.0
        environment:
          USER: root
          TZ: "${TZ}"
        command:
          - --meta_server_addrs=metad0:9559,metad1:9559,metad2:9559
          - --local_ip=storaged1
          - --ws_ip=storaged1
          - --port=9779
          - --ws_http_port=19779
          - --data_path=/data/storage
          - --log_dir=/logs
          - --v=0
          - --minloglevel=0
        depends_on:
          - metad0
          - metad1
          - metad2
        healthcheck:
          test: ["CMD", "curl", "-sf", "http://storaged1:19779/status"]
          interval: 30s
          timeout: 10s
          retries: 3
          start_period: 20s
        ports:
          - 9779
          - 19779
          - 19780
        volumes:
          - ${MNT}/data/storage1:/data/storage
          - ${MNT}/logs/storage1:/logs
        networks:
          - nebula-net
        restart: on-failure
        cap_add:
          - SYS_PTRACE

      storaged2:
        image: vesoft/nebula-storaged:v2.6.0
        environment:
          USER: root
          TZ: "${TZ}"
        command:
          - --meta_server_addrs=metad0:9559,metad1:9559,metad2:9559
          - --local_ip=storaged2
          - --ws_ip=storaged2
          - --port=9779
          - --ws_http_port=19779
          - --data_path=/data/storage
          - --log_dir=/logs
          - --v=0
          - --minloglevel=0
        depends_on:
          - metad0
          - metad1
          - metad2
        healthcheck:
          test: ["CMD", "curl", "-sf", "http://storaged2:19779/status"]
          interval: 30s
          timeout: 10s
          retries: 3
          start_period: 20s
        ports:
          - 9779
          - 19779
          - 19780
        volumes:
          - ${MNT}/data/storage2:/data/storage
          - ${MNT}/logs/storage2:/logs
        networks:
          - nebula-net
        restart: on-failure
        cap_add:
          - SYS_PTRACE

      graphd:
        image: vesoft/nebula-graphd:v2.6.0
        environment:
          USER: root
          TZ: "${TZ}"
        command:
          - --meta_server_addrs=metad0:9559,metad1:9559,metad2:9559
          - --port=9669
          - --local_ip=graphd
          - --ws_ip=graphd
          - --ws_http_port=19669
          - --log_dir=/logs
          - --v=0
          - --minloglevel=0
        depends_on:
          - storaged0
          - storaged1
          - storaged2
        healthcheck:
          test: ["CMD", "curl", "-sf", "http://graphd:19669/status"]
          interval: 30s
          timeout: 10s
          retries: 3
          start_period: 20s
        ports:
          - 9669
          - 19669
          - 19670
        volumes:
          - ${MNT}/logs/graph:/logs
        networks:
          - nebula-net
        restart: on-failure
        cap_add:
          - SYS_PTRACE

      graphd1:
        image: vesoft/nebula-graphd:v2.6.0
        environment:
          USER: root
          TZ: "${TZ}"
        command:
          - --meta_server_addrs=metad0:9559,metad1:9559,metad2:9559
          - --port=9669
          - --local_ip=graphd1
          - --ws_ip=graphd1
          - --ws_http_port=19669
          - --log_dir=/logs
          - --v=0
          - --minloglevel=0
        depends_on:
          - storaged0
          - storaged1
          - storaged2
        healthcheck:
          test: ["CMD", "curl", "-sf", "http://graphd1:19669/status"]
          interval: 30s
          timeout: 10s
          retries: 3
          start_period: 20s
        ports:
          - 9669
          - 19669
          - 19670
        volumes:
          - ${MNT}/logs/graph1:/logs
        networks:
          - nebula-net
        restart: on-failure
        cap_add:
          - SYS_PTRACE

      graphd2:
        image: vesoft/nebula-graphd:v2.6.0
        environment:
          USER: root
          TZ: "${TZ}"
        command:
          - --meta_server_addrs=metad0:9559,metad1:9559,metad2:9559
          - --port=9669
          - --local_ip=graphd2
          - --ws_ip=graphd2
          - --ws_http_port=19669
          - --log_dir=/logs
          - --v=0
          - --minloglevel=0
        depends_on:
          - storaged0
          - storaged1
          - storaged2
        healthcheck:
          test: ["CMD", "curl", "-sf", "http://graphd2:19669/status"]
          interval: 30s
          timeout: 10s
          retries: 3
          start_period: 20s
        ports:
          - 9669
          - 19669
          - 19670
        volumes:
          - ${MNT}/logs/graph2:/logs
        networks:
          - nebula-net
        restart: on-failure
        cap_add:
          - SYS_PTRACE

      listener0:
        image: vesoft/nebula-storaged:v2.6.0
        environment:
          USER: root
          TZ: "${TZ}"
        entrypoint:
          - sh
          - -c
        command:
          - /usr/local/nebula/bin/nebula-storaged --flagfile=/usr/local/nebula/etc/nebula-storaged-listener.conf
        depends_on:
          - metad0
          - metad1
          - metad2
        healthcheck:
          test: ["CMD", "curl", "-sf", "http://listener0:19789/status"]
          interval: 30s
          timeout: 10s
          retries: 3
          start_period: 20s
        ports:
          - 9789
          - 19789
          - 19790
        volumes:
          - ${MNT}/etc/listener0/nebula-storaged-listener.conf:/usr/local/nebula/etc/nebula-storaged-listener.conf
          - ${MNT}/data/listener0:/data/listener
          - ${MNT}/logs/listener0:/logs_listener
        networks:
          - nebula-net
        restart: on-failure
        cap_add:
          - SYS_PTRACE

      listener1:
        image: vesoft/nebula-storaged:v2.6.0
        environment:
          USER: root
          TZ: "${TZ}"
        entrypoint:
          - sh
          - -c
        command:
          - /usr/local/nebula/bin/nebula-storaged --flagfile=/usr/local/nebula/etc/nebula-storaged-listener.conf
        depends_on:
          - metad0
          - metad1
          - metad2
        healthcheck:
          test: ["CMD", "curl", "-sf", "http://listener1:19789/status"]
          interval: 30s
          timeout: 10s
          retries: 3
          start_period: 20s
        ports:
          - 9789
          - 19789
          - 19790
        volumes:
          - ${MNT}/etc/listener1/nebula-storaged-listener.conf:/usr/local/nebula/etc/nebula-storaged-listener.conf
          - ${MNT}/data/listener1:/data/listener
          - ${MNT}/logs/listener1:/logs_listener
        networks:
          - nebula-net
        restart: on-failure
        cap_add:
          - SYS_PTRACE

      listener2:
        image: vesoft/nebula-storaged:v2.6.0
        environment:
          USER: root
          TZ: "${TZ}"
        entrypoint:
          - sh
          - -c
        command:
          - /usr/local/nebula/bin/nebula-storaged --flagfile=/usr/local/nebula/etc/nebula-storaged-listener.conf
        depends_on:
          - metad0
          - metad1
          - metad2
        healthcheck:
          test: ["CMD", "curl", "-sf", "http://listener2:19789/status"]
          interval: 30s
          timeout: 10s
          retries: 3
          start_period: 20s
        ports:
          - 9789
          - 19789
          - 19790
        volumes:
          - ${MNT}/etc/listener2/nebula-storaged-listener.conf:/usr/local/nebula/etc/nebula-storaged-listener.conf
          - ${MNT}/data/listener2:/data/listener
          - ${MNT}/logs/listener2:/logs_listener
        networks:
          - nebula-net
        restart: on-failure
        cap_add:
          - SYS_PTRACE

    networks:
      nebula-net:

Contents of nebula-storaged-listener.conf (one of the listener configs):

    ########## nebula-storaged-listener ###########
    ########## basics ##########
    # Whether to run as a daemon process
    --daemonize=true
    # The file to host the process id
    --pid_file=pids_listener/nebula-storaged.pid
    # Whether to use the configuration obtained from the configuration file
    --local_config=true

    ########## logging ##########
    # The directory to host logging files
    --log_dir=/logs_listener
    # Log level, 0, 1, 2, 3 for INFO, WARNING, ERROR, FATAL respectively
    --minloglevel=0
    # Verbose log level, 1, 2, 3, 4, the higher of the level, the more verbose of the logging
    --v=0
    # Maximum seconds to buffer the log messages
    --logbufsecs=0
    # Whether to redirect stdout and stderr to separate output files
    --redirect_stdout=true
    # Destination filename of stdout and stderr, which will also reside in log_dir.
    --stdout_log_file=storaged-stdout.log
    --stderr_log_file=storaged-stderr.log
    # Copy log messages at or above this level to stderr in addition to logfiles. The numbers of severity levels INFO, WARNING, ERROR, and FATAL are 0, 1, 2, and 3, respectively.
    --stderrthreshold=2

    ########## networking ##########
    # Meta server address
    --meta_server_addrs=metad0:9559,metad1:9559,metad2:9559
    # Local ip
    --local_ip=listener2
    # Storage daemon listening port
    --port=9789
    # HTTP service ip
    --ws_ip=listener2
    # HTTP service port
    --ws_http_port=19789
    # HTTP2 service port
    --ws_h2_port=19790
    # heartbeat with meta service
    --heartbeat_interval_secs=10

    ########## storage ##########
    # Listener wal directory. only one path is allowed.
    --listener_path=/data/listener
    # This parameter can be ignored for compatibility. let's fill A default value of "data"
    --data_path=/data/storage
    # The type of part manager, [memory | meta]
    --part_man_type=memory
    # The default reserved bytes for one batch operation
    --rocksdb_batch_size=4096
    # The default block cache size used in BlockBasedTable.
    # The unit is MB.
    --rocksdb_block_cache=4
    # The type of storage engine, `rocksdb', `memory', etc.
    --engine_type=rocksdb
    # The type of part, `simple', `consensus'...
    --part_type=simple
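Since each listener service mounts its own copy of this file, one thing that can be checked mechanically is whether the `--local_ip`, `--ws_ip` and `--ws_http_port` values in a given copy agree with the service name and healthcheck port used in docker-compose.yml. Below is a minimal Python sketch of such a check. The function names `parse_flagfile` and `check_listener` are hypothetical helpers of my own, not part of Nebula, and the sample text is just a few lines copied from the file above.

```python
def parse_flagfile(text):
    """Parse '--name=value' lines, skipping blank lines and '#' comments."""
    flags = {}
    for raw in text.splitlines():
        line = raw.strip()
        if not line or line.startswith("#"):
            continue
        if line.startswith("--") and "=" in line:
            name, value = line[2:].split("=", 1)
            flags[name] = value
    return flags


def check_listener(flags, service_name, status_port):
    """Return a list of mismatches between the flagfile and the compose service."""
    problems = []
    for key in ("local_ip", "ws_ip"):
        if flags.get(key) != service_name:
            problems.append(f"{key}={flags.get(key)} but the service is {service_name}")
    if flags.get("ws_http_port") != str(status_port):
        problems.append(
            f"ws_http_port={flags.get('ws_http_port')} but the healthcheck uses {status_port}"
        )
    return problems


# A few lines copied from the listener config shown in this post.
SAMPLE = """\
# Local ip
--local_ip=listener2
--port=9789
# HTTP service ip
--ws_ip=listener2
--ws_http_port=19789
"""

flags = parse_flagfile(SAMPLE)
print(check_listener(flags, "listener2", 19789))  # the copy mounted into listener2
print(check_listener(flags, "listener0", 19789))  # the same copy mounted into listener0
```

This only compares names and ports; it does not start or probe any service.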

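Any help would be appreciated.

storaged ports:

- 9779

- 19779

- 19780

listener ports:

- 9789

- 19789

- 19790

What is the difference between these two sets of ports? (I ask mainly because I have read several documents and they configure the ports differently.)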
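To make the comparison concrete, here is a small Python sketch that lines up the two port sets from this post. The role labels (`service`, `ws_http`, `ws_h2`) are my own naming, inferred from the `--port`, `--ws_http_port` and `--ws_h2_port` flags in the listener config. For storaged, the `ws_h2` label on 19780 is an assumption, since the compose file does not set that flag explicitly.

```python
# Port numbers copied from this post; the role labels are assumptions (see above).
STORAGED_PORTS = {"service": 9779, "ws_http": 19779, "ws_h2": 19780}
LISTENER_PORTS = {"service": 9789, "ws_http": 19789, "ws_h2": 19790}


def port_offsets(base, other):
    """Return other[role] - base[role] for every role in base."""
    return {role: other[role] - base[role] for role in base}


offsets = port_offsets(STORAGED_PORTS, LISTENER_PORTS)
for role, delta in offsets.items():
    print(
        f"{role}: storaged={STORAGED_PORTS[role]} "
        f"listener={LISTENER_PORTS[role]} offset={delta:+d}"
    )
```

In the values I have, every listener port is the matching storaged port shifted by the same amount. That still does not tell me whether the shift is required or just a convention in the documents I read.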