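Wake up, we don't have a Scala client. What are you actually using? Also, please add the Nebula and client version numbers. Since this is your first post, please don't delete the template; that saves us from going back and forth asking for information that should have been in the question body.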

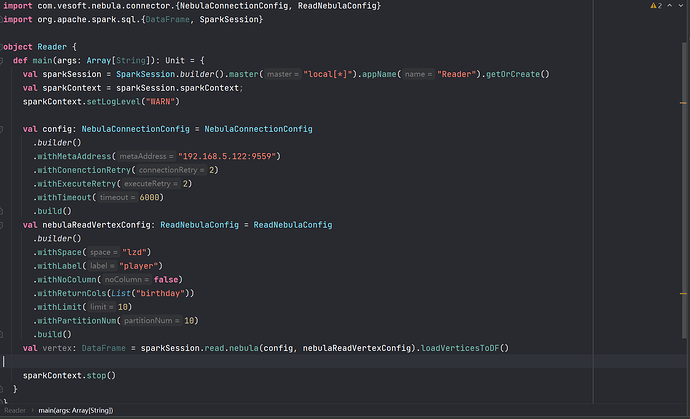

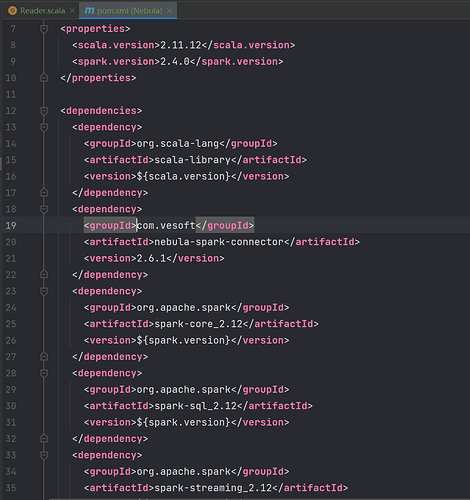

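Spark is 2.4.0, the connector is 2.6.1, and Nebula is 2.6.1. Java reports the same error too. I'll take another look at how to import the dependency with sbt; downloading the connector keeps failing and says it can't be fetched. Ugh.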

So you're using the Spark Connector, right?

Yes. It can't connect, and I haven't figured out why yet.

Try this approach. It looks like the same issue as yours.

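After restarting the server, the "connection refused" error seems to be gone. Now I'm getting this error about a missing jar. What's causing it?

Goyokki, could you paste it as text?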

WARNING: An illegal reflective access operation has occurred

WARNING: Illegal reflective access by org.apache.spark.unsafe.Platform (file:/D:/conf/repository/org/apache/spark/spark-unsafe_2.11/2.4.4/spark-unsafe_2.11-2.4.4.jar) to method java.nio.Bits.unaligned()

WARNING: Please consider reporting this to the maintainers of org.apache.spark.unsafe.Platform

WARNING: Use --illegal-access=warn to enable warnings of further illegal reflective access operations

WARNING: All illegal access operations will be denied in a future release

Using Spark's default log4j profile: org/apache/spark/log4j-defaults.properties

22/01/27 11:58:36 INFO SparkContext: Running Spark version 2.4.4

22/01/27 11:58:37 INFO SparkContext: Submitted application: Reader

22/01/27 11:58:37 INFO SecurityManager: Changing view acls to: Goyokki,root

22/01/27 11:58:37 INFO SecurityManager: Changing modify acls to: Goyokki,root

22/01/27 11:58:37 INFO SecurityManager: Changing view acls groups to:

22/01/27 11:58:37 INFO SecurityManager: Changing modify acls groups to:

22/01/27 11:58:37 INFO SecurityManager: SecurityManager: authentication disabled; ui acls disabled; users with view permissions: Set(Goyokki, root); groups with view permissions: Set(); users with modify permissions: Set(Goyokki, root); groups with modify permissions: Set()

22/01/27 11:58:38 INFO Utils: Successfully started service 'sparkDriver' on port 8852.

22/01/27 11:58:38 INFO SparkEnv: Registering MapOutputTracker

22/01/27 11:58:38 INFO SparkEnv: Registering BlockManagerMaster

22/01/27 11:58:38 INFO BlockManagerMasterEndpoint: Using org.apache.spark.storage.DefaultTopologyMapper for getting topology information

22/01/27 11:58:38 INFO BlockManagerMasterEndpoint: BlockManagerMasterEndpoint up

22/01/27 11:58:38 INFO DiskBlockManager: Created local directory at C:\Users\Goyokki\AppData\Local\Temp\blockmgr-e53f645c-3565-4c64-8919-ee05de270bdf

22/01/27 11:58:38 INFO MemoryStore: MemoryStore started with capacity 2.2 GB

22/01/27 11:58:38 INFO SparkEnv: Registering OutputCommitCoordinator

22/01/27 11:58:38 INFO Utils: Successfully started service 'SparkUI' on port 4040.

22/01/27 11:58:38 INFO SparkUI: Bound SparkUI to 0.0.0.0, and started at http://DESKTOP-U1T3QJ9:4040

22/01/27 11:58:38 INFO Executor: Starting executor ID driver on host localhost

22/01/27 11:58:38 INFO Utils: Successfully started service 'org.apache.spark.network.netty.NettyBlockTransferService' on port 8893.

22/01/27 11:58:38 INFO NettyBlockTransferService: Server created on DESKTOP-U1T3QJ9:8893

22/01/27 11:58:38 INFO BlockManager: Using org.apache.spark.storage.RandomBlockReplicationPolicy for block replication policy

22/01/27 11:58:38 INFO BlockManagerMaster: Registering BlockManager BlockManagerId(driver, DESKTOP-U1T3QJ9, 8893, None)

22/01/27 11:58:38 INFO BlockManagerMasterEndpoint: Registering block manager DESKTOP-U1T3QJ9:8893 with 2.2 GB RAM, BlockManagerId(driver, DESKTOP-U1T3QJ9, 8893, None)

22/01/27 11:58:38 INFO BlockManagerMaster: Registered BlockManager BlockManagerId(driver, DESKTOP-U1T3QJ9, 8893, None)

22/01/27 11:58:38 INFO BlockManager: Initialized BlockManager: BlockManagerId(driver, DESKTOP-U1T3QJ9, 8893, None)

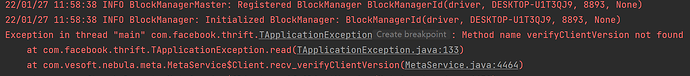

Exception in thread "main" com.facebook.thrift.TApplicationException: Method name verifyClientVersion not found

at com.facebook.thrift.TApplicationException.read(TApplicationException.java:133)

at com.vesoft.nebula.meta.MetaService$Client.recv_verifyClientVersion(MetaService.java:4464)

at com.vesoft.nebula.meta.MetaService$Client.verifyClientVersion(MetaService.java:4439)

at com.vesoft.nebula.client.meta.MetaClient.getClient(MetaClient.java:153)

at com.vesoft.nebula.client.meta.MetaClient.doConnect(MetaClient.java:124)

at com.vesoft.nebula.client.meta.MetaClient.connect(MetaClient.java:113)

at com.vesoft.nebula.connector.nebula.MetaProvider.<init>(MetaProvider.scala:52)

at com.vesoft.nebula.connector.reader.NebulaSourceReader.getSchema(NebulaSourceReader.scala:44)

at com.vesoft.nebula.connector.reader.NebulaSourceReader.readSchema(NebulaSourceReader.scala:30)

at org.apache.spark.sql.execution.datasources.v2.DataSourceV2Relation$.create(DataSourceV2Relation.scala:175)

at org.apache.spark.sql.DataFrameReader.load(DataFrameReader.scala:204)

at org.apache.spark.sql.DataFrameReader.load(DataFrameReader.scala:167)

at com.vesoft.nebula.connector.connector.package$NebulaDataFrameReader.loadVerticesToDF(package.scala:135)

at com.nebula.Reader$.main(Reader.scala:34)

at com.nebula.Reader.main(Reader.scala)

Process finished with exit code 1

Above is the execution log.

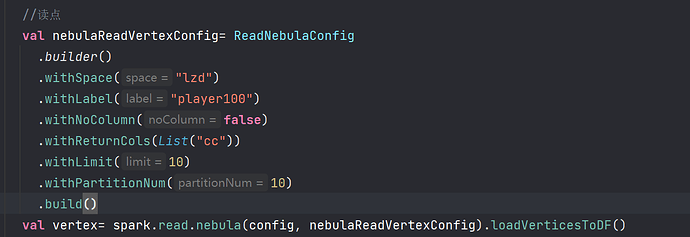

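The error points to the last line of the code in the screenshot.

This is the package that the nebula import refers to.

I don't know what's wrong. Could you take a look? Thanks.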

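It looks like a version mismatch.

Which version? The connector I'm using is 2.6.1, imported through Maven, with Spark 2.4.0 and Scala 2.11.12. Which one is incompatible?

Which version is it?

Take a look.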

If the versions are fine, then the address or port is wrong. Check whether you're actually connecting to the Meta address.

Please take a look.
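A quick way to sanity-check the address before digging further is to look at the port. The sketch below is only an illustration: the class `NebulaPortHint` and its methods are hypothetical helpers, not part of any Nebula client, and it assumes the deployment still uses the stock Nebula Graph 2.x ports (9559 for metad, 9669 for graphd, 9779 for storaged). If the ports were changed in the config files, the hint does not apply.

```java
import java.util.Map;

public class NebulaPortHint {
    // Assumption: stock Nebula Graph 2.x ports; a customized deployment may differ.
    private static final Map<Integer, String> DEFAULT_PORTS = Map.of(
            9559, "META",
            9669, "GRAPH",
            9779, "STORAGE");

    /** Returns the service that listens on this port by default, or "UNKNOWN". */
    public static String roleForPort(int port) {
        return DEFAULT_PORTS.getOrDefault(port, "UNKNOWN");
    }

    /** True if the "host:port" string ends with the default metad port. */
    public static boolean looksLikeMetaAddress(String address) {
        int idx = address.lastIndexOf(':');
        if (idx < 0 || idx == address.length() - 1) {
            return false; // no port part at all
        }
        try {
            int port = Integer.parseInt(address.substring(idx + 1).trim());
            return "META".equals(roleForPort(port));
        } catch (NumberFormatException e) {
            return false; // port part is not a number
        }
    }

    public static void main(String[] args) {
        // Example addresses only; replace with the address passed to the connector.
        for (String addr : new String[] {"127.0.0.1:9559", "127.0.0.1:9669"}) {
            System.out.println(addr + " -> looks like a meta address: "
                    + looksLikeMetaAddress(addr));
        }
    }
}
```

If the address handed to the connector ends in 9669, it is probably the graphd address rather than the Meta address.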

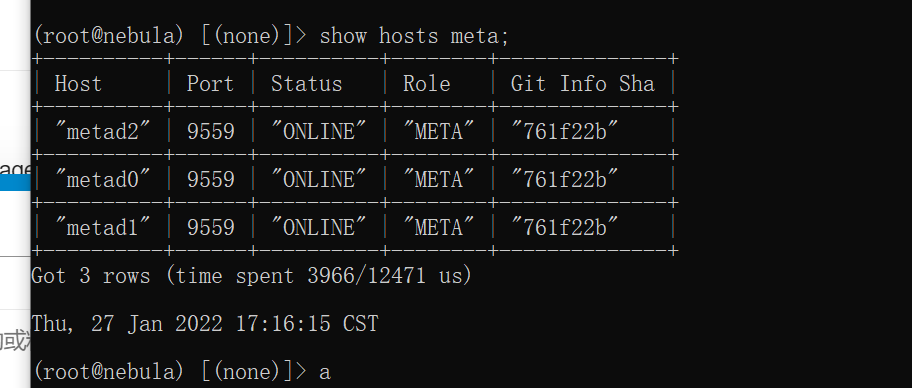

Could you run show hosts meta and share the output? That will confirm the Nebula Graph version.

(root@nebula) [basketballplayer]> show hosts meta

+-------------+------+----------+--------+--------------+----------------------+

| Host | Port | Status | Role | Git Info Sha | Version |

+-------------+------+----------+--------+--------------+----------------------+

| "127.0.0.1" | 9559 | "ONLINE" | "META" | "1e75ef8" | "2022.01.26-nightly" |

+-------------+------+----------+--------+--------------+----------------------+

Got 1 rows (time spent 867/46006 us)

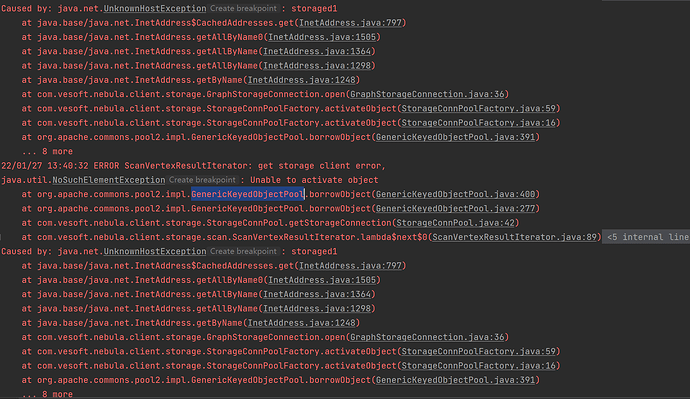

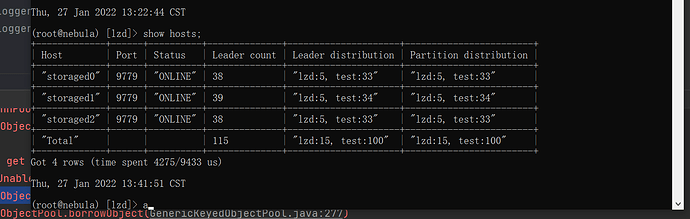

Now it's obvious. This is the cause: your storage address is storaged0, which can't be reached from outside.
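To confirm this kind of problem from the machine that runs the Spark job, a plain TCP probe is usually enough. The following is a minimal sketch using only the Java standard library; the class name `AddressCheck` is made up for illustration, and the port 9779 for storaged0 is an assumption based on the stock storaged port, since the thread does not state it.

```java
import java.io.IOException;
import java.net.InetAddress;
import java.net.InetSocketAddress;
import java.net.Socket;
import java.net.UnknownHostException;

public class AddressCheck {
    /** Outcome of probing one host:port pair. */
    public enum Status { REACHABLE, UNRESOLVED_HOST, CONNECT_FAILED }

    /** Resolves the host name, then tries a TCP connect with a timeout. */
    public static Status probe(String host, int port, int timeoutMillis) {
        InetAddress resolved;
        try {
            resolved = InetAddress.getByName(host);
        } catch (UnknownHostException e) {
            // The name (for example a container hostname) is unknown on this machine.
            return Status.UNRESOLVED_HOST;
        }
        try (Socket socket = new Socket()) {
            socket.connect(new InetSocketAddress(resolved, port), timeoutMillis);
            return Status.REACHABLE;
        } catch (IOException e) {
            // Resolved, but nothing accepted the connection in time.
            return Status.CONNECT_FAILED;
        }
    }

    public static void main(String[] args) {
        // Example targets: the meta address from the thread and the storage
        // hostname with an assumed port.
        String[][] targets = {{"127.0.0.1", "9559"}, {"storaged0", "9779"}};
        for (String[] t : targets) {
            Status s = probe(t[0], Integer.parseInt(t[1]), 2000);
            System.out.println(t[0] + ":" + t[1] + " -> " + s);
        }
    }
}
```

If storaged0 comes back as UNRESOLVED_HOST or CONNECT_FAILED on the client machine while the meta address is REACHABLE, that matches the diagnosis above: the client gets the storage address from the meta service and then cannot reach it.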