Spark: 2.4.0

Scala: 2.11.12

Nebula: 2.6.0

Nebula Spark Connector: 2.6.0

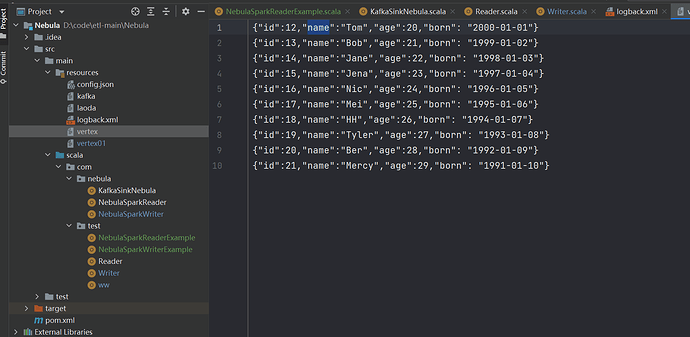

Configuration:

pom file:

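`<modelVersion>4.0.0</modelVersion>`

`<groupId>com</groupId>`

`<artifactId>Nebula</artifactId>`

`<version>1.0-SNAPSHOT</version>`

`<inceptionYear>2008</inceptionYear>`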

<scala.version>2.11.12</scala.version>

<spark.version>2.4.4</spark.version>

<nebula.version>2.6.0</nebula.version>

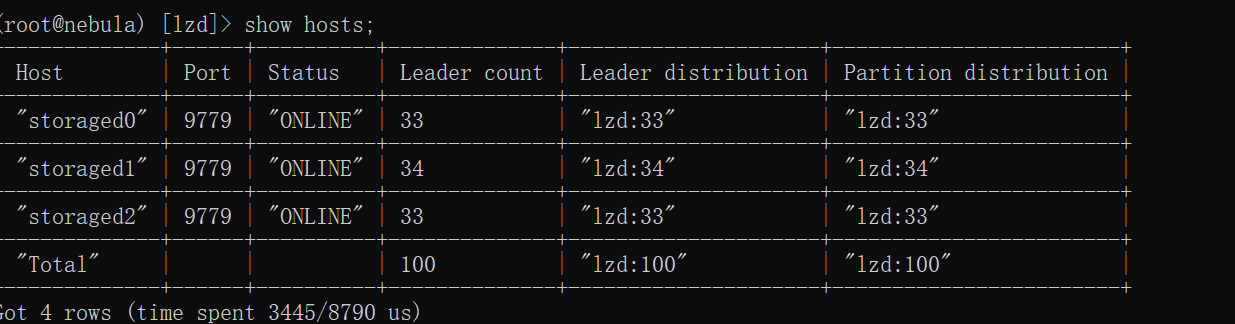

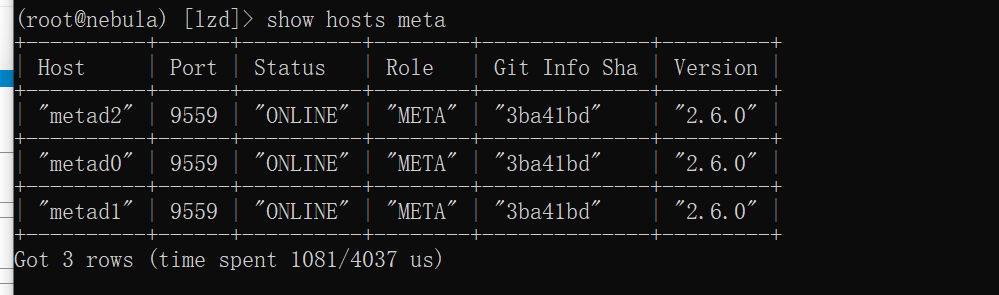

The error is as follows:

Exception in thread "main" com.facebook.thrift.transport.TTransportException: java.net.UnknownHostException: metad2

at com.facebook.thrift.transport.TSocket.open(TSocket.java:206)

at com.vesoft.nebula.client.meta.MetaClient.getClient(MetaClient.java:110)

at com.vesoft.nebula.client.meta.MetaClient.freshClient(MetaClient.java:128)

at com.vesoft.nebula.client.meta.MetaClient.getSpace(MetaClient.java:190)

at com.vesoft.nebula.client.meta.MetaClient.getTag(MetaClient.java:255)

at com.vesoft.nebula.connector.nebula.MetaProvider.getTag(MetaProvider.scala:37)

at com.vesoft.nebula.connector.reader.NebulaSourceReader.getSchema(NebulaSourceReader.scala:71)

at com.vesoft.nebula.connector.reader.NebulaSourceReader.readSchema(NebulaSourceReader.scala:31)

at org.apache.spark.sql.execution.datasources.v2.DataSourceV2Relation$.create(DataSourceV2Relation.scala:175)

at org.apache.spark.sql.DataFrameReader.load(DataFrameReader.scala:204)

at org.apache.spark.sql.DataFrameReader.load(DataFrameReader.scala:167)

at com.vesoft.nebula.connector.connector.package$NebulaDataFrameReader.loadVerticesToDF(package.scala:122)

at com.test.NebulaSparkReaderExample$.readVertex(NebulaSparkReaderExample.scala:53)

at com.test.NebulaSparkReaderExample$.main(NebulaSparkReaderExample.scala:27)

at com.test.NebulaSparkReaderExample.main(NebulaSparkReaderExample.scala)

Caused by: java.net.UnknownHostException: metad2

at java.base/java.net.AbstractPlainSocketImpl.connect(AbstractPlainSocketImpl.java:220)

at java.base/java.net.SocksSocketImpl.connect(SocksSocketImpl.java:403)

at java.base/java.net.Socket.connect(Socket.java:608)

at com.facebook.thrift.transport.TSocket.open(TSocket.java:201)

… 14 more

22/01/29 11:50:42 INFO SparkContext: Invoking stop() from shutdown hook

22/01/29 11:50:42 INFO SparkUI: Stopped Spark web UI at http://DESKTOP-U1T3QJ9:4040

22/01/29 11:50:42 INFO MapOutputTrackerMasterEndpoint: MapOutputTrackerMasterEndpoint stopped!

22/01/29 11:50:42 INFO MemoryStore: MemoryStore cleared

22/01/29 11:50:42 INFO BlockManager: BlockManager stopped

22/01/29 11:50:42 INFO BlockManagerMaster: BlockManagerMaster stopped

22/01/29 11:50:42 INFO OutputCommitCoordinator$OutputCommitCoordinatorEndpoint: OutputCommitCoordinator stopped!

22/01/29 11:50:42 INFO SparkContext: Successfully stopped SparkContext

22/01/29 11:50:42 INFO ShutdownHookManager: Shutdown hook called

22/01/29 11:50:42 INFO ShutdownHookManager: Deleting directory C:\Users\Goyokki\AppData\Local\Temp\spark-08c4b80c-df23-40ef-a0a1-7af5a0276a24
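The root cause in the trace is `java.net.UnknownHostException: metad2`: the meta client tries to open a socket to a host named `metad2`, and the JVM on this machine cannot resolve that name. A minimal sketch, assuming you only want to check from the same machine which host names the JVM can resolve; the object `HostResolutionCheck` and its `resolve` method are hypothetical helpers, and only the name `metad2` comes from the error above.

```scala
import java.net.{InetAddress, UnknownHostException}

// Hypothetical diagnostic helper (not part of Nebula or the connector):
// checks whether each host name resolves in this JVM, which is the step
// that fails with "java.net.UnknownHostException: metad2" in the trace.
object HostResolutionCheck {

  // Right(ip address) when the name resolves, Left(error message) otherwise.
  def resolve(host: String): Either[String, String] =
    try Right(InetAddress.getByName(host).getHostAddress)
    catch {
      case e: UnknownHostException => Left(s"cannot resolve '$host': ${e.getMessage}")
    }

  def main(args: Array[String]): Unit = {
    // "metad2" is taken from the error; pass other host names as arguments.
    val hosts = if (args.nonEmpty) args.toSeq else Seq("metad2")
    hosts.foreach { h =>
      resolve(h) match {
        case Right(ip) => println(s"$h -> $ip")
        case Left(msg) => println(msg)
      }
    }
  }
}
```

Running it with `metad2` (and any other meta host names your deployment uses) as arguments shows which names fail; a name reported as `cannot resolve` here would most likely fail the same way inside `MetaClient`.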

This is urgent. Could someone experienced please take a look?