My apps_v1alpha1_nebulacluster.yaml file is the sample configuration from the official site, with only the replicas property changed:

    apiVersion: apps.nebula-graph.io/v1alpha1
    kind: NebulaCluster
    metadata:
      name: nebula
    spec:
      graphd:
        resources:
          requests:
            cpu: "500m"
            memory: "500Mi"
          limits:
            cpu: "1"
            memory: "1Gi"
        replicas: 3
        image: vesoft/nebula-graphd
        version: v2.6.2
        service:
          type: NodePort
          externalTrafficPolicy: Local
        logVolumeClaim:
          resources:
            requests:
              storage: 2Gi
          storageClassName: gp2
      metad:
        resources:
          requests:
            cpu: "500m"
            memory: "500Mi"
          limits:
            cpu: "1"
            memory: "1Gi"
        replicas: 3
        image: vesoft/nebula-metad
        version: v2.6.2
        dataVolumeClaim:
          resources:
            requests:
              storage: 2Gi
          storageClassName: gp2
        logVolumeClaim:
          resources:
            requests:
              storage: 2Gi
          storageClassName: gp2
      storaged:
        resources:
          requests:
            cpu: "500m"
            memory: "500Mi"
          limits:
            cpu: "1"
            memory: "1Gi"
        replicas: 3
        image: vesoft/nebula-storaged
        version: v2.6.2
        dataVolumeClaim:
          resources:
            requests:
              storage: 2Gi
          storageClassName: gp2
        logVolumeClaim:
          resources:
            requests:
              storage: 2Gi
          storageClassName: gp2
      reference:
        name: statefulsets.apps
        version: v1
      schedulerName: default-scheduler
      imagePullPolicy: Always

Here is the describe output for one of the pods stuck in Pending: kubectl describe pod nebula-metad-0

    Name:           nebula-metad-0
    Namespace:      default
    Priority:       0
    Node:           <none>
    Labels:         app.kubernetes.io/cluster=nebula
                    app.kubernetes.io/component=metad
                    app.kubernetes.io/managed-by=nebula-operator
                    app.kubernetes.io/name=nebula-graph
                    controller-revision-hash=nebula-metad-6fbfd9f5c6
                    statefulset.kubernetes.io/pod-name=nebula-metad-0
    Annotations:    nebula-graph.io/cm-hash: bf057776a3ae4f4c
    Status:         Pending
    IP:
    IPs:            <none>
    Controlled By:  StatefulSet/nebula-metad
    Containers:
      metad:
        Image:       vesoft/nebula-metad:v2.6.2
        Ports:       9559/TCP, 19559/TCP, 19560/TCP
        Host Ports:  0/TCP, 0/TCP, 0/TCP
        Command:
          /bin/bash
          -ecx
          exec /usr/local/nebula/bin/nebula-metad --flagfile=/usr/local/nebula/etc/nebula-metad.conf --meta_server_addrs=nebula-metad-0.nebula-metad-headless.default.svc.cluster.local:9559 --local_ip=$(hostname).nebula-metad-headless.default.svc.cluster.local --ws_ip=$(hostname).nebula-metad-headless.default.svc.cluster.local --daemonize=false
        Limits:
          cpu:     1
          memory:  1Gi
        Requests:
          cpu:        500m
          memory:     500Mi
        Readiness:    http-get http://:19559/status delay=10s timeout=5s period=10s #success=1 #failure=3
        Environment:  <none>
        Mounts:
          /usr/local/nebula/data from metad-data (rw,path="data")
          /usr/local/nebula/etc from nebula-metad (rw)
          /usr/local/nebula/logs from metad-log (rw,path="logs")
          /var/run/secrets/kubernetes.io/serviceaccount from default-token-fd5q9 (ro)
    Conditions:
      Type           Status
      PodScheduled   False
    Volumes:
      metad-log:
        Type:       PersistentVolumeClaim (a reference to a PersistentVolumeClaim in the same namespace)
        ClaimName:  metad-log-nebula-metad-0
        ReadOnly:   false
      metad-data:
        Type:       PersistentVolumeClaim (a reference to a PersistentVolumeClaim in the same namespace)
        ClaimName:  metad-data-nebula-metad-0
        ReadOnly:   false
      nebula-metad:
        Type:      ConfigMap (a volume populated by a ConfigMap)
        Name:      nebula-metad
        Optional:  false
      default-token-fd5q9:
        Type:        Secret (a volume populated by a Secret)
        SecretName:  default-token-fd5q9
        Optional:    false
    QoS Class:       Burstable
    Node-Selectors:  <none>
    Tolerations:     node.kubernetes.io/not-ready:NoExecute for 300s
                     node.kubernetes.io/unreachable:NoExecute for 300s
    Events:
      Type     Reason            Age   From               Message
      ----     ------            ----  ----               -------
      Warning  FailedScheduling  24m   default-scheduler  0/4 nodes are available: 1 node(s) had taint {node-role.kubernetes.io/master: }, that the pod didn't tolerate, 3 pod has unbound immediate PersistentVolumeClaims.
      Warning  FailedScheduling  24m   default-scheduler  0/4 nodes are available: 1 node(s) had taint {node-role.kubernetes.io/master: }, that the pod didn't tolerate, 3 pod has unbound immediate PersistentVolumeClaims.

You need to change the storageClass to one that exists in your own deployment environment; gp2 here is only an example.
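To make the change concrete: in the manifest posted above, storageClassName appears five times (graphd's logVolumeClaim, plus the dataVolumeClaim and logVolumeClaim of both metad and storaged), and all of them need the new value. The fragment below is only a sketch of the metad part; the name `local-storage` is a placeholder assumption, not something the cluster in this thread is known to provide.

```yaml
# Fragment of spec.metad from the manifest above; only storageClassName changes.
# "local-storage" is a placeholder: use a class name that exists in your cluster.
metad:
  dataVolumeClaim:
    resources:
      requests:
        storage: 2Gi
    storageClassName: local-storage
  logVolumeClaim:
    resources:
      requests:
        storage: 2Gi
    storageClassName: local-storage
```

As long as the claims name a class that cannot supply volumes, they stay unbound and the scheduler keeps reporting "pod has unbound immediate PersistentVolumeClaims", as in the events shown earlier.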

What options are there for storageClassName? How should I fill in storageClassName?

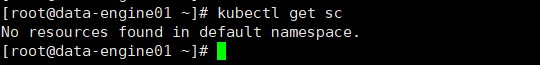

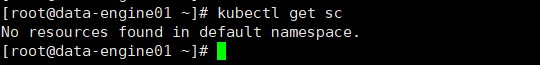

Run kubectl get sc to see what is available, then change the storageClassName value in the helm charts accordingly.

Here is the situation: my servers are on Alibaba Cloud, but kubectl get sc returns nothing at all.

Can storageClassName be set to local-storage?

storageClass does support local storage; take a look at the local-storage type.
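For readers in the same position, here is a minimal sketch of what a local-storage setup typically looks like in Kubernetes. The PersistentVolume name `nebula-local-pv-0`, the path `/mnt/disks/nebula-0`, and the node name `worker-node-1` are all hypothetical and must be replaced with values from your own cluster; the directory has to exist on that node before a pod can mount it.

```yaml
# StorageClass for statically provisioned local volumes.
# kubernetes.io/no-provisioner means nothing creates volumes automatically:
# every PersistentVolume has to be created by hand.
apiVersion: storage.k8s.io/v1
kind: StorageClass
metadata:
  name: local-storage
provisioner: kubernetes.io/no-provisioner
# Delay binding until a pod is scheduled, so the claim binds to a volume
# on the node where the pod will actually run.
volumeBindingMode: WaitForFirstConsumer
---
# One local PersistentVolume (hypothetical name, path, and node).
apiVersion: v1
kind: PersistentVolume
metadata:
  name: nebula-local-pv-0
spec:
  capacity:
    storage: 2Gi            # matches the 2Gi requested by each claim
  volumeMode: Filesystem
  accessModes:
    - ReadWriteOnce
  persistentVolumeReclaimPolicy: Retain
  storageClassName: local-storage
  local:
    path: /mnt/disks/nebula-0   # assumption: must already exist on the node
  nodeAffinity:
    required:
      nodeSelectorTerms:
        - matchExpressions:
            - key: kubernetes.io/hostname
              operator: In
              values:
                - worker-node-1   # assumption: replace with a real node name
```

Because this class has no provisioner, one PersistentVolume is needed per claim. With the replica counts posted above, that is 3 graphd log claims, 6 metad claims (data and log for each of 3 replicas), and 6 storaged claims, so 15 volumes of at least 2Gi spread across the worker nodes.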

I tried many approaches and never managed to get a storageClass installed. Neither the link you gave nor this post, https://www.jianshu.com/p/d35fba102643, worked for me.

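I spent the last two days trying the operator installation, and the barrier for users is still too high: the documentation is too terse, and there are many pitfalls for first-time installers.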
