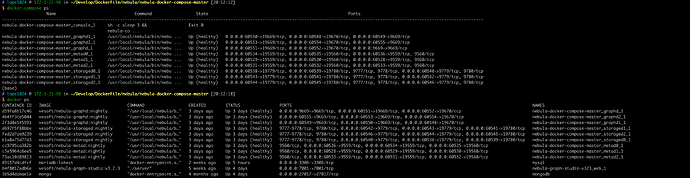

- Nebula version: 3.0.2
- Deployment: single node
- Installation: Docker
- Production environment: No
- Hardware:
  - Disk: SSD
  - CPU: M1 Pro
  - Memory: 32 GB
- Spark version: 2.4
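The list above does not include the JDK version, which matters here: Spark 2.4.x officially supports Java 8 only (Java 11 support arrived in Spark 3.0). A minimal bash sketch for checking it, assuming `spark-submit` picks up the same `java` as the shell (Spark's launcher prefers `$JAVA_HOME/bin/java` when `JAVA_HOME` is set). The function names are hypothetical:

```bash
#!/usr/bin/env bash
# Hypothetical helper: extract the Java feature release from `java -version`
# output read on stdin. Handles the legacy "1.8.0_292" scheme (-> 8) and the
# newer "11.0.15" scheme (-> 11).
java_major() {
  local version
  # `java -version` prints e.g.: openjdk version "11.0.15" 2022-04-19
  version=$(sed -n 's/.*version "\([^"]*\)".*/\1/p' | head -n 1)
  case "$version" in
    1.*) echo "$version" | cut -d. -f2 ;;                 # 1.8.0_292 -> 8
    *)   echo "$version" | cut -d. -f1 | cut -d- -f1 ;;   # 11.0.15 -> 11, 17-ea -> 17
  esac
}

# Hypothetical check: succeed only when the detected release is Java 8,
# the only Java version Spark 2.4.x officially supports.
check_java_for_spark24() {
  local major
  major=$(java_major)
  if [ "$major" = "8" ]; then
    echo "OK: Java $major"
  else
    echo "WARN: Java ${major:-unknown} detected; Spark 2.4 expects Java 8" >&2
    return 1
  fi
}

# Usage (java -version writes to stderr, hence 2>&1):
#   java -version 2>&1 | check_java_for_spark24
```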

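I used the official Exchange jar and followed the official steps, but the import fails. My conf file and the error output are below. Any help would be appreciated.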

The configuration is as follows:

{

# Spark settings

spark: {

app: {

name: Nebula Exchange 3.0.0

}

driver: {

cores: 1

maxResultSize: 1G

}

cores: {

max: 16

}

}

# Nebula Graph settings

nebula: {

address:{

# IP addresses and ports of the machines running the Nebula Graph Graph and Meta services.

# If there are multiple addresses, use the format "ip1:port","ip2:port","ip3:port".

# Separate addresses with commas (,).

graph:["172.1.21.98:9669"]

meta:["172.1.21.98:60536","172.1.21.98:60528","172.1.21.98:60533"]

}

# The account must have write permission on the corresponding Nebula Graph space.

user: root

pswd: nebula

# Name of the Nebula Graph space to write data into.

space: basketballplayer

connection: {

timeout: 3000

retry: 3

}

execution: {

retry: 3

}

error: {

max: 32

output: /tmp/errors

}

rate: {

limit: 1024

timeout: 1000

}

}

# Process vertices

tags: [

# Settings for Tag player.

{

# Name of the corresponding Tag in Nebula Graph.

name: player

type: {

# Data source type; set to MySQL.

source: mysql

# How vertex data is written to Nebula Graph: Client or SST.

sink: client

}

host:127.0.0.1

port:3306

database:"basketballplayer"

table:"player"

user:"root"

password:"123456"

sentence:"select playerid, age, name from player order by playerid"

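# In fields, list column names of the player table; their values become the specified Nebula Graph properties.

# The entries in fields and nebula.fields must correspond one to one.

# To specify multiple column names, separate them with commas (,).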

fields: [age,name]

nebula.fields: [age,name]

# Use one column of the table as the source of the vertex VID in Nebula Graph.

vertex: {

field:playerid

}

# Number of records written to Nebula Graph per batch.

batch: 256

# Number of Spark partitions

partition: 32

}

# Settings for Tag team.

{

name: team

type: {

source: mysql

sink: client

}

host:127.0.0.1

port:3306

database:"basketballplayer"

table:"team"

user:"root"

password:"123456"

sentence:"select teamid, name from team order by teamid"

fields: [name]

nebula.fields: [name]

vertex: {

field: teamid

}

batch: 256

partition: 32

}

]

# Process edges

edges: [

# Settings for Edge type follow

{

# Name of the corresponding Edge type in Nebula Graph.

name: follow

type: {

# Data source type; set to MySQL.

source: mysql

# How edge data is written to Nebula Graph: Client or SST.

sink: client

}

host:127.0.0.1

port:3306

database:"basketballplayer"

table:"follow"

user:"root"

password:"123456"

sentence:"select src_player,dst_player,degree from follow order by src_player"

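# In fields, list column names of the follow table; their values become the specified Nebula Graph properties.

# The entries in fields and nebula.fields must correspond one to one.

# To specify multiple column names, separate them with commas (,).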

fields: [degree]

nebula.fields: [degree]

# In source, use one column of the follow table as the edge's source vertex.

# In target, use one column of the follow table as the edge's destination vertex.

source: {

field: src_player

}

target: {

field: dst_player

}

# Column to use as the rank source (optional).

#ranking: rank

# Number of records written to Nebula Graph per batch.

batch: 256

# Number of Spark partitions

partition: 32

}

# Settings for Edge type serve

{

name: serve

type: {

source: mysql

sink: client

}

host:127.0.0.1

port:3306

database:"basketballplayer"

table:"serve"

user:"root"

password:"123456"

sentence:"select playerid,teamid,start_year,end_year from serve order by playerid"

fields: [start_year,end_year]

nebula.fields: [start_year,end_year]

source: {

field: playerid

}

target: {

field: teamid

}

# Column to use as the rank source (optional).

#ranking: rank

batch: 256

partition: 32

}

]

}
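The config comments require `fields` and `nebula.fields` to correspond one to one. A rough bash sketch for checking that the item counts match in each block, assuming every list is written on a single line as in the config above. It is a plain text check, not a HOCON parser, and `check_field_pairs` is a hypothetical name:

```bash
#!/usr/bin/env bash
# Hypothetical helper: for each `fields: [...]` line, compare its item count
# with the next `nebula.fields: [...]` line. Comment lines (starting with #)
# are ignored because they do not match either pattern.
check_field_pairs() {
  awk '
    function count(line,   body) {
      body = substr(line, index(line, "[") + 1)     # text after "["
      body = substr(body, 1, index(body, "]") - 1)  # text before "]"
      gsub(/[ \t]/, "", body)
      if (body == "") return 0
      return gsub(/,/, ",", body) + 1               # commas + 1 = items
    }
    /^[ \t]*fields[ \t]*:/ { n = count($0); have = 1; next }
    /^[ \t]*nebula\.fields[ \t]*:/ {
      m = count($0)
      if (!have || n != m) {
        printf "line %d: fields=%d nebula.fields=%d\n", NR, n, m
        bad = 1
      }
      have = 0; n = 0
    }
    END { exit bad }
  ' "$1"
}

# Usage:
#   check_field_pairs application.conf && echo "field lists line up"
```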

The error output from the run is as follows:

$ ~/Develop/spark-2.4.8-bin-hadoop2.7/bin/spark-submit --master "local" --class com.vesoft.nebula.exchange.Exchange nebula-exchange_spark_2.4-3.0.0.jar -c application.conf

WARNING: An illegal reflective access operation has occurred

WARNING: Illegal reflective access by org.apache.spark.unsafe.Platform (file:/Users/lope1024/Develop/spark-2.4.8-bin-hadoop2.7/jars/spark-unsafe_2.11-2.4.8.jar) to method java.nio.Bits.unaligned()

WARNING: Please consider reporting this to the maintainers of org.apache.spark.unsafe.Platform

WARNING: Use --illegal-access=warn to enable warnings of further illegal reflective access operations

WARNING: All illegal access operations will be denied in a future release

22/05/30 17:31:44 WARN NativeCodeLoader: Unable to load native-hadoop library for your platform... using builtin-java classes where applicable

log4j:WARN No appenders could be found for logger (com.vesoft.exchange.common.config.Configs$).

log4j:WARN Please initialize the log4j system properly.

log4j:WARN See http://logging.apache.org/log4j/1.2/faq.html#noconfig for more info.

Using Spark's default log4j profile: org/apache/spark/log4j-defaults.properties

22/05/30 17:31:44 INFO SparkContext: Running Spark version 2.4.8

22/05/30 17:31:44 INFO SparkContext: Submitted application: com.vesoft.nebula.exchange.Exchange

22/05/30 17:31:44 INFO SecurityManager: Changing view acls to: lope1024

22/05/30 17:31:44 INFO SecurityManager: Changing modify acls to: lope1024

22/05/30 17:31:44 INFO SecurityManager: Changing view acls groups to:

22/05/30 17:31:44 INFO SecurityManager: Changing modify acls groups to:

22/05/30 17:31:44 INFO SecurityManager: SecurityManager: authentication disabled; ui acls disabled; users with view permissions: Set(lope1024); groups with view permissions: Set(); users with modify permissions: Set(lope1024); groups with modify permissions: Set()

22/05/30 17:31:44 INFO Utils: Successfully started service 'sparkDriver' on port 56528.

22/05/30 17:31:44 INFO SparkEnv: Registering MapOutputTracker

22/05/30 17:31:44 INFO SparkEnv: Registering BlockManagerMaster

22/05/30 17:31:44 INFO BlockManagerMasterEndpoint: Using org.apache.spark.storage.DefaultTopologyMapper for getting topology information

22/05/30 17:31:44 INFO BlockManagerMasterEndpoint: BlockManagerMasterEndpoint up

22/05/30 17:31:44 INFO DiskBlockManager: Created local directory at /private/var/folders/g2/zxv2vx110nq1vf953g9s1n400000gn/T/blockmgr-c660473e-87d4-4d91-ad18-7c79030bbf65

22/05/30 17:31:44 INFO MemoryStore: MemoryStore started with capacity 434.4 MB

22/05/30 17:31:44 INFO SparkEnv: Registering OutputCommitCoordinator

22/05/30 17:31:44 INFO Utils: Successfully started service 'SparkUI' on port 4040.

22/05/30 17:31:44 INFO SparkUI: Bound SparkUI to 0.0.0.0, and started at http://172-1-21-98.lightspeed.frsnca.sbcglobal.net:4040

22/05/30 17:31:44 INFO SparkContext: Added JAR file:/Users/lope1024/Develop/DockerFile/nebula/nebula-exchange/nebula-exchange_spark_2.4-3.0.0.jar at spark://172-1-21-98.lightspeed.frsnca.sbcglobal.net:56528/jars/nebula-exchange_spark_2.4-3.0.0.jar with timestamp 1653903104590

22/05/30 17:31:44 INFO Executor: Starting executor ID driver on host localhost

22/05/30 17:31:44 INFO Utils: Successfully started service 'org.apache.spark.network.netty.NettyBlockTransferService' on port 56529.

22/05/30 17:31:44 INFO NettyBlockTransferService: Server created on 172-1-21-98.lightspeed.frsnca.sbcglobal.net:56529

22/05/30 17:31:44 INFO BlockManager: Using org.apache.spark.storage.RandomBlockReplicationPolicy for block replication policy

22/05/30 17:31:44 INFO BlockManagerMaster: Registering BlockManager BlockManagerId(driver, 172-1-21-98.lightspeed.frsnca.sbcglobal.net, 56529, None)

22/05/30 17:31:44 INFO BlockManagerMasterEndpoint: Registering block manager 172-1-21-98.lightspeed.frsnca.sbcglobal.net:56529 with 434.4 MB RAM, BlockManagerId(driver, 172-1-21-98.lightspeed.frsnca.sbcglobal.net, 56529, None)

22/05/30 17:31:44 INFO BlockManagerMaster: Registered BlockManager BlockManagerId(driver, 172-1-21-98.lightspeed.frsnca.sbcglobal.net, 56529, None)

22/05/30 17:31:44 INFO BlockManager: Initialized BlockManager: BlockManagerId(driver, 172-1-21-98.lightspeed.frsnca.sbcglobal.net, 56529, None)

22/05/30 17:31:44 INFO Exchange$: Processing Tag player

22/05/30 17:31:44 INFO Exchange$: field keys: age, name

22/05/30 17:31:44 INFO Exchange$: nebula keys: age, name

22/05/30 17:31:44 INFO Exchange$: Loading from mysql com.vesoft.exchange.common.config: MySql source host: 127.0.0.1, port: 3306, database: basketballplayer, table: player, user: root, password: 123456, sentence: select playerid, age, name from player order by playerid

22/05/30 17:31:44 INFO SharedState: Setting hive.metastore.warehouse.dir ('null') to the value of spark.sql.warehouse.dir ('file:/Users/lope1024/Develop/DockerFile/nebula/nebula-exchange/spark-warehouse/').

22/05/30 17:31:44 INFO SharedState: Warehouse path is 'file:/Users/lope1024/Develop/DockerFile/nebula/nebula-exchange/spark-warehouse/'.

22/05/30 17:31:44 INFO StateStoreCoordinatorRef: Registered StateStoreCoordinator endpoint

22/05/30 17:31:45 INFO ClickHouseDriver: Driver registered

22/05/30 17:31:46 INFO CodeGenerator: Code generated in 94.753709 ms

22/05/30 17:31:46 INFO CodeGenerator: Code generated in 6.8715 ms

22/05/30 17:31:46 INFO CodeGenerator: Code generated in 6.167083 ms

Exception in thread "main" org.apache.spark.sql.catalyst.errors.package$TreeNodeException: execute, tree:

Exchange RoundRobinPartitioning(32)

+- *(2) Sort [playerid#0 ASC NULLS FIRST], true, 0

+- Exchange rangepartitioning(playerid#0 ASC NULLS FIRST, 200)

+- *(1) Scan JDBCRelation(player) [numPartitions=1] [playerid#0,age#1,name#2] PushedFilters: [], ReadSchema: struct<playerid:string,age:int,name:string>

at org.apache.spark.sql.catalyst.errors.package$.attachTree(package.scala:56)

at org.apache.spark.sql.execution.exchange.ShuffleExchangeExec.doExecute(ShuffleExchangeExec.scala:119)

at org.apache.spark.sql.execution.SparkPlan$$anonfun$execute$1.apply(SparkPlan.scala:136)

at org.apache.spark.sql.execution.SparkPlan$$anonfun$execute$1.apply(SparkPlan.scala:132)

at org.apache.spark.sql.execution.SparkPlan$$anonfun$executeQuery$1.apply(SparkPlan.scala:160)

at org.apache.spark.rdd.RDDOperationScope$.withScope(RDDOperationScope.scala:151)

at org.apache.spark.sql.execution.SparkPlan.executeQuery(SparkPlan.scala:157)

at org.apache.spark.sql.execution.SparkPlan.execute(SparkPlan.scala:132)

at org.apache.spark.sql.execution.DeserializeToObjectExec.doExecute(objects.scala:89)

at org.apache.spark.sql.execution.SparkPlan$$anonfun$execute$1.apply(SparkPlan.scala:136)

at org.apache.spark.sql.execution.SparkPlan$$anonfun$execute$1.apply(SparkPlan.scala:132)

at org.apache.spark.sql.execution.SparkPlan$$anonfun$executeQuery$1.apply(SparkPlan.scala:160)

at org.apache.spark.rdd.RDDOperationScope$.withScope(RDDOperationScope.scala:151)

at org.apache.spark.sql.execution.SparkPlan.executeQuery(SparkPlan.scala:157)

at org.apache.spark.sql.execution.SparkPlan.execute(SparkPlan.scala:132)

at org.apache.spark.sql.execution.FilterExec.doExecute(basicPhysicalOperators.scala:219)

at org.apache.spark.sql.execution.SparkPlan$$anonfun$execute$1.apply(SparkPlan.scala:136)

at org.apache.spark.sql.execution.SparkPlan$$anonfun$execute$1.apply(SparkPlan.scala:132)

at org.apache.spark.sql.execution.SparkPlan$$anonfun$executeQuery$1.apply(SparkPlan.scala:160)

at org.apache.spark.rdd.RDDOperationScope$.withScope(RDDOperationScope.scala:151)

at org.apache.spark.sql.execution.SparkPlan.executeQuery(SparkPlan.scala:157)

at org.apache.spark.sql.execution.SparkPlan.execute(SparkPlan.scala:132)

at org.apache.spark.sql.execution.MapElementsExec.doExecute(objects.scala:234)

at org.apache.spark.sql.execution.SparkPlan$$anonfun$execute$1.apply(SparkPlan.scala:136)

at org.apache.spark.sql.execution.SparkPlan$$anonfun$execute$1.apply(SparkPlan.scala:132)

at org.apache.spark.sql.execution.SparkPlan$$anonfun$executeQuery$1.apply(SparkPlan.scala:160)

at org.apache.spark.rdd.RDDOperationScope$.withScope(RDDOperationScope.scala:151)

at org.apache.spark.sql.execution.SparkPlan.executeQuery(SparkPlan.scala:157)

at org.apache.spark.sql.execution.SparkPlan.execute(SparkPlan.scala:132)

at org.apache.spark.sql.execution.QueryExecution.toRdd$lzycompute(QueryExecution.scala:83)

at org.apache.spark.sql.execution.QueryExecution.toRdd(QueryExecution.scala:81)

at org.apache.spark.sql.Dataset.rdd$lzycompute(Dataset.scala:3043)

at org.apache.spark.sql.Dataset.rdd(Dataset.scala:3041)

at org.apache.spark.sql.Dataset$$anonfun$foreachPartition$1.apply$mcV$sp(Dataset.scala:2741)

at org.apache.spark.sql.Dataset$$anonfun$foreachPartition$1.apply(Dataset.scala:2741)

at org.apache.spark.sql.Dataset$$anonfun$foreachPartition$1.apply(Dataset.scala:2741)

at org.apache.spark.sql.Dataset$$anonfun$withNewRDDExecutionId$1.apply(Dataset.scala:3354)

at org.apache.spark.sql.execution.SQLExecution$$anonfun$withNewExecutionId$1.apply(SQLExecution.scala:80)

at org.apache.spark.sql.execution.SQLExecution$.withSQLConfPropagated(SQLExecution.scala:127)

at org.apache.spark.sql.execution.SQLExecution$.withNewExecutionId(SQLExecution.scala:75)

at org.apache.spark.sql.Dataset.withNewRDDExecutionId(Dataset.scala:3350)

at org.apache.spark.sql.Dataset.foreachPartition(Dataset.scala:2740)

at com.vesoft.nebula.exchange.processor.VerticesProcessor.process(VerticesProcessor.scala:180)

at com.vesoft.nebula.exchange.Exchange$$anonfun$main$2.apply(Exchange.scala:123)

at com.vesoft.nebula.exchange.Exchange$$anonfun$main$2.apply(Exchange.scala:95)

at scala.collection.immutable.List.foreach(List.scala:392)

at com.vesoft.nebula.exchange.Exchange$.main(Exchange.scala:95)

at com.vesoft.nebula.exchange.Exchange.main(Exchange.scala)

at java.base/jdk.internal.reflect.NativeMethodAccessorImpl.invoke0(Native Method)

at java.base/jdk.internal.reflect.NativeMethodAccessorImpl.invoke(NativeMethodAccessorImpl.java:62)

at java.base/jdk.internal.reflect.DelegatingMethodAccessorImpl.invoke(DelegatingMethodAccessorImpl.java:43)

at java.base/java.lang.reflect.Method.invoke(Method.java:566)

at org.apache.spark.deploy.JavaMainApplication.start(SparkApplication.scala:52)

at org.apache.spark.deploy.SparkSubmit.org$apache$spark$deploy$SparkSubmit$$runMain(SparkSubmit.scala:855)

at org.apache.spark.deploy.SparkSubmit.doRunMain$1(SparkSubmit.scala:161)

at org.apache.spark.deploy.SparkSubmit.submit(SparkSubmit.scala:184)

at org.apache.spark.deploy.SparkSubmit.doSubmit(SparkSubmit.scala:86)

at org.apache.spark.deploy.SparkSubmit$$anon$2.doSubmit(SparkSubmit.scala:930)

at org.apache.spark.deploy.SparkSubmit$.main(SparkSubmit.scala:939)

at org.apache.spark.deploy.SparkSubmit.main(SparkSubmit.scala)

Caused by: org.apache.spark.sql.catalyst.errors.package$TreeNodeException: execute, tree:

Exchange rangepartitioning(playerid#0 ASC NULLS FIRST, 200)

+- *(1) Scan JDBCRelation(player) [numPartitions=1] [playerid#0,age#1,name#2] PushedFilters: [], ReadSchema: struct<playerid:string,age:int,name:string>

at org.apache.spark.sql.catalyst.errors.package$.attachTree(package.scala:56)

at org.apache.spark.sql.execution.exchange.ShuffleExchangeExec.doExecute(ShuffleExchangeExec.scala:119)

at org.apache.spark.sql.execution.SparkPlan$$anonfun$execute$1.apply(SparkPlan.scala:136)

at org.apache.spark.sql.execution.SparkPlan$$anonfun$execute$1.apply(SparkPlan.scala:132)

at org.apache.spark.sql.execution.SparkPlan$$anonfun$executeQuery$1.apply(SparkPlan.scala:160)

at org.apache.spark.rdd.RDDOperationScope$.withScope(RDDOperationScope.scala:151)

at org.apache.spark.sql.execution.SparkPlan.executeQuery(SparkPlan.scala:157)

at org.apache.spark.sql.execution.SparkPlan.execute(SparkPlan.scala:132)

at org.apache.spark.sql.execution.InputAdapter.inputRDDs(WholeStageCodegenExec.scala:391)

at org.apache.spark.sql.execution.SortExec.inputRDDs(SortExec.scala:129)

at org.apache.spark.sql.execution.WholeStageCodegenExec.doExecute(WholeStageCodegenExec.scala:627)

at org.apache.spark.sql.execution.SparkPlan$$anonfun$execute$1.apply(SparkPlan.scala:136)

at org.apache.spark.sql.execution.SparkPlan$$anonfun$execute$1.apply(SparkPlan.scala:132)

at org.apache.spark.sql.execution.SparkPlan$$anonfun$executeQuery$1.apply(SparkPlan.scala:160)

at org.apache.spark.rdd.RDDOperationScope$.withScope(RDDOperationScope.scala:151)

at org.apache.spark.sql.execution.SparkPlan.executeQuery(SparkPlan.scala:157)

at org.apache.spark.sql.execution.SparkPlan.execute(SparkPlan.scala:132)

at org.apache.spark.sql.execution.exchange.ShuffleExchangeExec.prepareShuffleDependency(ShuffleExchangeExec.scala:92)

at org.apache.spark.sql.execution.exchange.ShuffleExchangeExec$$anonfun$doExecute$1.apply(ShuffleExchangeExec.scala:128)

at org.apache.spark.sql.execution.exchange.ShuffleExchangeExec$$anonfun$doExecute$1.apply(ShuffleExchangeExec.scala:119)

at org.apache.spark.sql.catalyst.errors.package$.attachTree(package.scala:52)

... 59 more

Caused by: java.lang.IllegalArgumentException: Unsupported class file major version 55

at org.apache.xbean.asm6.ClassReader.<init>(ClassReader.java:166)

at org.apache.xbean.asm6.ClassReader.<init>(ClassReader.java:148)

at org.apache.xbean.asm6.ClassReader.<init>(ClassReader.java:136)

at org.apache.xbean.asm6.ClassReader.<init>(ClassReader.java:237)

at org.apache.spark.util.ClosureCleaner$.getClassReader(ClosureCleaner.scala:50)

at org.apache.spark.util.FieldAccessFinder$$anon$4$$anonfun$visitMethodInsn$7.apply(ClosureCleaner.scala:845)

at org.apache.spark.util.FieldAccessFinder$$anon$4$$anonfun$visitMethodInsn$7.apply(ClosureCleaner.scala:828)

at scala.collection.TraversableLike$WithFilter$$anonfun$foreach$1.apply(TraversableLike.scala:733)

at scala.collection.mutable.HashMap$$anon$1$$anonfun$foreach$2.apply(HashMap.scala:134)

at scala.collection.mutable.HashMap$$anon$1$$anonfun$foreach$2.apply(HashMap.scala:134)

at scala.collection.mutable.HashTable$class.foreachEntry(HashTable.scala:236)

at scala.collection.mutable.HashMap.foreachEntry(HashMap.scala:40)

at scala.collection.mutable.HashMap$$anon$1.foreach(HashMap.scala:134)

at scala.collection.TraversableLike$WithFilter.foreach(TraversableLike.scala:732)

at org.apache.spark.util.FieldAccessFinder$$anon$4.visitMethodInsn(ClosureCleaner.scala:828)

at org.apache.xbean.asm6.ClassReader.readCode(ClassReader.java:2175)

at org.apache.xbean.asm6.ClassReader.readMethod(ClassReader.java:1238)

at org.apache.xbean.asm6.ClassReader.accept(ClassReader.java:631)

at org.apache.xbean.asm6.ClassReader.accept(ClassReader.java:355)

at org.apache.spark.util.ClosureCleaner$$anonfun$org$apache$spark$util$ClosureCleaner$$clean$14.apply(ClosureCleaner.scala:272)

at org.apache.spark.util.ClosureCleaner$$anonfun$org$apache$spark$util$ClosureCleaner$$clean$14.apply(ClosureCleaner.scala:271)

at scala.collection.immutable.List.foreach(List.scala:392)

at org.apache.spark.util.ClosureCleaner$.org$apache$spark$util$ClosureCleaner$$clean(ClosureCleaner.scala:271)

at org.apache.spark.util.ClosureCleaner$.clean(ClosureCleaner.scala:163)

at org.apache.spark.SparkContext.clean(SparkContext.scala:2332)

at org.apache.spark.SparkContext.runJob(SparkContext.scala:2106)

at org.apache.spark.SparkContext.runJob(SparkContext.scala:2132)

at org.apache.spark.rdd.RDD$$anonfun$collect$1.apply(RDD.scala:990)

at org.apache.spark.rdd.RDDOperationScope$.withScope(RDDOperationScope.scala:151)

at org.apache.spark.rdd.RDDOperationScope$.withScope(RDDOperationScope.scala:112)

at org.apache.spark.rdd.RDD.withScope(RDD.scala:385)

at org.apache.spark.rdd.RDD.collect(RDD.scala:989)

at org.apache.spark.RangePartitioner$.sketch(Partitioner.scala:309)

at org.apache.spark.RangePartitioner.<init>(Partitioner.scala:171)

at org.apache.spark.sql.execution.exchange.ShuffleExchangeExec$.prepareShuffleDependency(ShuffleExchangeExec.scala:224)

at org.apache.spark.sql.execution.exchange.ShuffleExchangeExec.prepareShuffleDependency(ShuffleExchangeExec.scala:91)

at org.apache.spark.sql.execution.exchange.ShuffleExchangeExec$$anonfun$doExecute$1.apply(ShuffleExchangeExec.scala:128)

at org.apache.spark.sql.execution.exchange.ShuffleExchangeExec$$anonfun$doExecute$1.apply(ShuffleExchangeExec.scala:119)

at org.apache.spark.sql.catalyst.errors.package$.attachTree(package.scala:52)

... 79 more

22/05/30 17:31:46 INFO SparkContext: Invoking stop() from shutdown hook

22/05/30 17:31:46 INFO SparkUI: Stopped Spark web UI at http://172-1-21-98.lightspeed.frsnca.sbcglobal.net:4040

22/05/30 17:31:46 INFO MapOutputTrackerMasterEndpoint: MapOutputTrackerMasterEndpoint stopped!

22/05/30 17:31:46 INFO MemoryStore: MemoryStore cleared

22/05/30 17:31:46 INFO BlockManager: BlockManager stopped

22/05/30 17:31:46 INFO BlockManagerMaster: BlockManagerMaster stopped

22/05/30 17:31:46 INFO OutputCommitCoordinator$OutputCommitCoordinatorEndpoint: OutputCommitCoordinator stopped!

22/05/30 17:31:46 INFO SparkContext: Successfully stopped SparkContext

22/05/30 17:31:46 INFO ShutdownHookManager: Shutdown hook called

22/05/30 17:31:46 INFO ShutdownHookManager: Deleting directory /private/var/folders/g2/zxv2vx110nq1vf953g9s1n400000gn/T/spark-8a842c2a-b3b4-4f46-aaf4-1a431b61f113

22/05/30 17:31:46 INFO ShutdownHookManager: Deleting directory /private/var/folders/g2/zxv2vx110nq1vf953g9s1n400000gn/T/spark-70efa882-9409-4b5a-bb97-54a20325f009
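The innermost exception in the trace is `java.lang.IllegalArgumentException: Unsupported class file major version 55`, thrown from Spark's `ClosureCleaner` via the bundled `org.apache.xbean.asm6.ClassReader`. A minimal bash sketch for decoding such a number: from Java 5 (major 49) on, the release equals the major version minus 44, so 55 corresponds to Java 11 and 52 to Java 8. The helper names are hypothetical, and the header reader assumes the standard `.class` layout (magic `0xCAFEBABE`, then a 2-byte minor and a 2-byte major version, big-endian):

```bash
#!/usr/bin/env bash
# Hypothetical helper: map a class-file major version to its Java release.
# From Java 5 (major 49) onwards, release = major - 44.
java_release_for_major() {
  local major=$1
  if [ "$major" -ge 49 ]; then
    echo $((major - 44))
  else
    echo "pre-Java-5 (major $major)"
    return 1
  fi
}

# Hypothetical helper: read the major version from a .class file header.
# Bytes 0-3: magic 0xCAFEBABE; bytes 4-5: minor; bytes 6-7: major (big-endian).
class_major_version() {
  local hi lo
  # Skip 6 bytes, read 2, print them as unsigned decimal bytes.
  read -r hi lo < <(od -An -tu1 -j6 -N2 "$1")
  echo $((hi * 256 + lo))
}

# Usage:
#   java_release_for_major 55          # prints 11
#   class_major_version Some.class     # prints the file's major version
```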