Background

Backing up a single-node deployment with nebula-br fails with an error:

$ ./bin/br backup full --meta "127.0.0.1:9559" --storage "local:///Users/jermey/projects/nebula-backup"

{"level":"info","meta address":"127.0.0.1:9559","msg":"Try to connect meta service.","time":"2022-07-22T16:48:33.974Z"}

{"level":"info","meta address":"127.0.0.1:9559","msg":"Connect meta server successfully.","time":"2022-07-22T16:48:33.981Z"}

Error: parse cluster response failed: response is not successful, code is E_LIST_CLUSTER_NO_AGENT_FAILURE

Environment

nebula-br

My nebula-br version:

$ ./bin/br version

Nebula Backup And Restore Utility Tool,V-0.6.1

GitSha: 5aca40c

GitRef: master

please run "help" subcommand for more infomation.%

nebula services

nebula is a single-node deployment started with docker-compose:

> docker-compose ps

Name Command State Ports

------------------------------------------------------------------------------------------------------------------------------------------------------

graphd /usr/local/nebula/bin/nebu ... Up (healthy) 0.0.0.0:19669->19669/tcp,:::19669->19669/tcp, 0.0.0.0:19670->19670/tcp,:::19670->19670/tcp,

0.0.0.0:9669->9669/tcp,:::9669->9669/tcp

metad /usr/local/nebula/bin/nebu ... Up (healthy) 0.0.0.0:19559->19559/tcp,:::19559->19559/tcp, 0.0.0.0:19560->19560/tcp,:::19560->19560/tcp,

0.0.0.0:9559->9559/tcp,:::9559->9559/tcp, 9560/tcp

storaged /usr/local/nebula/bin/nebu ... Up (healthy) 0.0.0.0:19779->19779/tcp,:::19779->19779/tcp, 0.0.0.0:19780->19780/tcp,:::19780->19780/tcp,

9777/tcp, 9778/tcp, 0.0.0.0:9779->9779/tcp,:::9779->9779/tcp, 9780/tcp

studio ./server Up 0.0.0.0:7001->7001/tcp,:::7001->7001/tcp

nebula graphd/metad/storaged are all v3.1.0.

Below is the metad configuration file:

########## basics ##########

# Whether to run as a daemon process

--daemonize=true

# The file to host the process id

--pid_file=pids/nebula-metad.pid

########## logging ##########

# The directory to host logging files

--log_dir=logs

# Log level, 0, 1, 2, 3 for INFO, WARNING, ERROR, FATAL respectively

--minloglevel=0

# Verbose log level, 1, 2, 3, 4, the higher of the level, the more verbose of the logging

--v=0

# Maximum seconds to buffer the log messages

--logbufsecs=0

# Whether to redirect stdout and stderr to separate output files

--redirect_stdout=true

# Destination filename of stdout and stderr, which will also reside in log_dir.

--stdout_log_file=metad-stdout.log

--stderr_log_file=metad-stderr.log

# Copy log messages at or above this level to stderr in addition to logfiles. The numbers of severity levels INFO, WARNING, ERROR, and FATAL are 0, 1, 2, and 3, respectively.

--stderrthreshold=2

# Whether logging file names contain a timestamp. If using logrotate to rotate logging files, this should be set to true.

--timestamp_in_logfile_name=true

########## networking ##########

# Comma separated Meta Server addresses

--meta_server_addrs=127.0.0.1:9559

# Local IP used to identify the nebula-metad process.

# Change it to an address other than loopback if the service is distributed or

# will be accessed remotely.

--local_ip=127.0.0.1

# Meta daemon listening port

--port=9559

# HTTP service ip

--ws_ip=0.0.0.0

# HTTP service port

--ws_http_port=19559

# Port to listen on Storage with HTTP protocol, it corresponds to ws_http_port in storage's configuration file

--ws_storage_http_port=19779

########## storage ##########

# Root data path, here should be only single path for metad

--data_path=data/meta

########## Misc #########

# The default number of parts when a space is created

--default_parts_num=100

# The default replica factor when a space is created

--default_replica_factor=1

--heartbeat_interval_secs=10

--agent_heartbeat_interval_secs=60

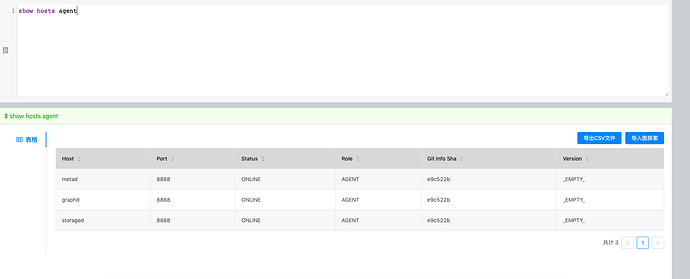

nebula-agent

> ./agent --agent="127.0.0.1:8888" --meta="127.0.0.1:9559"

{"file":"command-line-arguments/agent.go:31","func":"main.main","level":"info","msg":"Start agent server...","time":"2022-07-22T16:59:49.120Z","version":"96646b8"}

{"file":"github.com/vesoft-inc/nebula-agent/internal/clients/meta.go:75","func":"github.com/vesoft-inc/nebula-agent/internal/clients.connect","level":"info","meta address":"127.0.0.1:9559","msg":"try to connect meta service","time":"2022-07-22T16:59:49.121Z"}

{"file":"github.com/vesoft-inc/nebula-agent/internal/clients/meta.go:102","func":"github.com/vesoft-inc/nebula-agent/internal/clients.connect","level":"info","meta address":"127.0.0.1:9559","msg":"connect meta server successfully","time":"2022-07-22T16:59:49.126Z"}

The agent log shows that it connected to metad successfully.

But when I run the backup command:

> ./bin/br backup full --meta "127.0.0.1:9559" --storage "local:///Users/jermey/projects/nebula-backup"

{"level":"info","meta address":"127.0.0.1:9559","msg":"Try to connect meta service.","time":"2022-07-22T17:01:02.004Z"}

{"level":"info","meta address":"127.0.0.1:9559","msg":"Connect meta server successfully.","time":"2022-07-22T17:01:02.009Z"}

Error: parse cluster response failed: response is not successful, code is E_LIST_CLUSTER_NO_AGENT_FAILURE

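From the error message, it looks as if the connection to the metad service failed.

However, the IP in meta_server_addrs in the metad configuration file is also 127.0.0.1, and telnet to that port succeeds: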

telnet 127.0.0.1 9559

Trying 127.0.0.1...

Connected to localhost.

Escape character is '^]'.

I also tried using the machine's real IP instead of 127.0.0.1, and the same error is reported.

Could anyone help me figure out the cause?

Best wishes!