
+----------------+------------------+------------+----------------------------+----------------------------+-------------------+

| Job Id(TaskId) | Command(Dest)    | Status     | Start Time                 | Stop Time                  | Error Code        |

+----------------+------------------+------------+----------------------------+----------------------------+-------------------+

| 6              | "COMPACT"        | "RUNNING"  | 2023-01-14T00:18:32.000000 |                            | "E_JOB_SUBMITTED" |

| 0              | "172.18.103.120" | "FAILED"   | 2023-01-14T00:18:32.000000 | 2023-01-14T06:22:42.000000 | ""                |

| 1              | "172.18.103.125" | "FAILED"   | 2023-01-14T00:18:32.000000 | 2023-01-14T17:22:08.000000 | ""                |

| 2              | "172.18.103.113" | "RUNNING"  | 2023-01-14T00:18:32.000000 |                            | ""                |

| "Total:3"      | "Succeeded:0"    | "Failed:2" | "In Progress:1"            | ""                         | ""                |

+----------------+------------------+------------+----------------------------+----------------------------+-------------------+

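The compaction has been running for several days. The tasks on the other two hosts failed, but the meta leader still reports the job as running and it never finishes. Is there any way to fix this?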

P.S. This cluster runs on HDDs, so I/O performance is not great.

This node (172.18.103.113) has been offline for a long time, but the COMPACT job still has not finished:

(root@nebula) [(none)]> show hosts

+------------------+------+-----------+-----------+--------------+----------------------+------------------------+---------+

| Host             | Port | HTTP port | Status    | Leader count | Leader distribution  | Partition distribution | Version |

+------------------+------+-----------+-----------+--------------+----------------------+------------------------+---------+

| "172.18.103.113" | 9779 | 19779     | "OFFLINE" | 0            | "No valid partition" | "Unified:30"           | "3.3.0" |

| "172.18.103.120" | 9779 | 19779     | "ONLINE"  | 13           | "Unified:13"         | "Unified:30"           | "3.3.0" |

| "172.18.103.125" | 9779 | 19779     | "ONLINE"  | 17           | "Unified:17"         | "Unified:30"           | "3.3.0" |

+------------------+------+-----------+-----------+--------------+----------------------+------------------------+---------+

Got 3 rows (time spent 5.88ms/8.067512ms)

Tue, 17 Jan 2023 17:53:29 CST

(root@nebula) [(none)]> use Unified

Execution succeeded (time spent 2.488ms/3.123339ms)

Tue, 17 Jan 2023 17:53:35 CST

(root@nebula) [Unified]> show jobs

+--------+------------------+------------+----------------------------+----------------------------+

| Job Id | Command          | Status     | Start Time                 | Stop Time                  |

+--------+------------------+------------+----------------------------+----------------------------+

| 7      | "LEADER_BALANCE" | "QUEUE"    |                            |                            |

| 6      | "COMPACT"        | "RUNNING"  | 2023-01-14T00:18:32.000000 |                            |

| 5      | "COMPACT"        | "FINISHED" | 2023-01-13T03:10:26.000000 | 2023-01-13T07:11:16.000000 |

| 4      | "COMPACT"        | "FINISHED" | 2023-01-11T11:51:45.000000 | 2023-01-11T16:37:11.000000 |

| 3      | "LEADER_BALANCE" | "FINISHED" | 2023-01-11T01:50:48.000000 | 2023-01-11T01:50:54.000000 |

+--------+------------------+------------+----------------------------+----------------------------+

Got 5 rows (time spent 23.884ms/24.97749ms)

Tue, 17 Jan 2023 17:53:36 CST

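Once the host goes offline, the job indeed cannot complete: it is only marked as finished after the storage service reports that its task is done.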
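To make this concrete, here is a minimal Python model of how a job's status could be rolled up from its per-host tasks. It is an illustrative sketch based only on the rule stated in the reply (a task leaves RUNNING only when its storage host reports back), not metad's actual code; the names TaskStatus, job_status and summarize are hypothetical, and treating STOPPED as a terminal state is an assumption. Fed the three tasks of job 6, it reproduces both the RUNNING job status and the summary row from the question.

```python
from enum import Enum
from typing import Dict, List


class TaskStatus(Enum):
    # Status names modelled on SHOW JOB / SHOW JOBS output; STOPPED is an assumption.
    QUEUE = "QUEUE"
    RUNNING = "RUNNING"
    FINISHED = "FINISHED"
    FAILED = "FAILED"
    STOPPED = "STOPPED"


# Assumption: a task is in one of these states only after its storage host reported back.
TERMINAL = {TaskStatus.FINISHED, TaskStatus.FAILED, TaskStatus.STOPPED}


def job_status(tasks: List[TaskStatus]) -> TaskStatus:
    """Hypothetical roll-up: the job stays RUNNING until every task is terminal."""
    if any(t not in TERMINAL for t in tasks):
        return TaskStatus.RUNNING
    if all(t is TaskStatus.FINISHED for t in tasks):
        return TaskStatus.FINISHED
    return TaskStatus.FAILED


def summarize(tasks: List[TaskStatus]) -> Dict[str, int]:
    """Counts shaped like the summary row printed by SHOW JOB."""
    return {
        "Total": len(tasks),
        "Succeeded": sum(t is TaskStatus.FINISHED for t in tasks),
        "Failed": sum(t is TaskStatus.FAILED for t in tasks),
        "In Progress": sum(t not in TERMINAL for t in tasks),
    }


# Job 6: the tasks on .120 and .125 failed; the task on .113 never reported back.
job6 = [TaskStatus.FAILED, TaskStatus.FAILED, TaskStatus.RUNNING]
print(job_status(job6).value)  # RUNNING
print(summarize(job6))  # {'Total': 3, 'Succeeded': 0, 'Failed': 2, 'In Progress': 1}
```

Under this model, a single task that never reports back is enough to pin the whole job at RUNNING, no matter how the other tasks ended.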


The storaged that was offline has since recovered. Will the job gradually complete now? The node now shows as ONLINE, but its task is still RUNNING.

spw


After a restart, there is basically nothing to do but wait for it to fail. Is it still RUNNING now? You can check with show job 6.

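Here is the information for node 113. As far as I can see, there is enough disk space for now.

I restarted the storaged service on 113 this morning:

Log: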

I20230128 09:50:07.366894 5015 NebulaStore.cpp:92] Scan path "/data/nebula330storage/nebula/1"

I20230128 09:50:07.367002 5015 RocksEngineConfig.cpp:366] Emplace rocksdb option max_background_jobs=8

I20230128 09:50:07.367030 5015 RocksEngineConfig.cpp:366] Emplace rocksdb option max_subcompactions=8

I20230128 09:50:07.367213 5015 RocksEngineConfig.cpp:366] Emplace rocksdb option max_bytes_for_level_base=268435456

I20230128 09:50:07.367237 5015 RocksEngineConfig.cpp:366] Emplace rocksdb option max_write_buffer_number=4

I20230128 09:50:07.367271 5015 RocksEngineConfig.cpp:366] Emplace rocksdb option write_buffer_size=67108864

I20230128 09:50:07.367287 5015 RocksEngineConfig.cpp:366] Emplace rocksdb option disable_auto_compactions=true

I20230128 09:50:07.376287 5015 RocksEngineConfig.cpp:366] Emplace rocksdb option block_size=8192

I20230128 09:50:07.487393 5015 RocksEngine.cpp:97] open rocksdb on /data/nebula330storage/nebula/1/data

I20230128 09:50:07.487450 5015 RocksEngine.h:196] Release rocksdb on /data/nebula330storage/nebula/1

I20230128 09:50:07.489181 5015 NebulaStore.cpp:271] Init data from partManager for "172.18.103.113":9779

I20230128 09:50:07.489264 5015 NebulaStore.cpp:369] Data space 1 has existed!

I20230128 09:50:07.489316 5015 RocksEngineConfig.cpp:366] Emplace rocksdb option max_background_jobs=8

I20230128 09:50:07.489328 5015 RocksEngineConfig.cpp:366] Emplace rocksdb option max_subcompactions=8

I20230128 09:50:07.489435 5015 RocksEngineConfig.cpp:366] Emplace rocksdb option max_bytes_for_level_base=268435456

I20230128 09:50:07.489446 5015 RocksEngineConfig.cpp:366] Emplace rocksdb option max_write_buffer_number=4

I20230128 09:50:07.489456 5015 RocksEngineConfig.cpp:366] Emplace rocksdb option write_buffer_size=67108864

I20230128 09:50:07.489466 5015 RocksEngineConfig.cpp:366] Emplace rocksdb option disable_auto_compactions=true

I20230128 09:50:07.489630 5015 RocksEngineConfig.cpp:366] Emplace rocksdb option block_size=8192

I20230128 09:50:07.553908 5015 RocksEngine.cpp:97] open rocksdb on /data/nebula330storage/nebula/1/data

I20230128 09:50:07.554031 5015 NebulaStore.cpp:430] [Space: 1, Part: 1] has existed!

I20230128 09:50:07.554078 5015 NebulaStore.cpp:430] [Space: 1, Part: 2] has existed!

I20230128 09:50:07.554093 5015 NebulaStore.cpp:430] [Space: 1, Part: 3] has existed!

I20230128 09:50:07.554107 5015 NebulaStore.cpp:430] [Space: 1, Part: 4] has existed!

I20230128 09:50:07.554121 5015 NebulaStore.cpp:430] [Space: 1, Part: 5] has existed!

I20230128 09:50:07.554133 5015 NebulaStore.cpp:430] [Space: 1, Part: 6] has existed!

I20230128 09:50:07.554147 5015 NebulaStore.cpp:430] [Space: 1, Part: 7] has existed!

I20230128 09:50:07.554160 5015 NebulaStore.cpp:430] [Space: 1, Part: 8] has existed!

I20230128 09:50:07.554183 5015 NebulaStore.cpp:430] [Space: 1, Part: 9] has existed!

I20230128 09:50:07.554195 5015 NebulaStore.cpp:430] [Space: 1, Part: 10] has existed!

I20230128 09:50:07.554208 5015 NebulaStore.cpp:430] [Space: 1, Part: 11] has existed!

I20230128 09:50:07.554222 5015 NebulaStore.cpp:430] [Space: 1, Part: 12] has existed!

I20230128 09:50:07.554235 5015 NebulaStore.cpp:430] [Space: 1, Part: 13] has existed!

I20230128 09:50:07.554250 5015 NebulaStore.cpp:430] [Space: 1, Part: 14] has existed!

I20230128 09:50:07.554262 5015 NebulaStore.cpp:430] [Space: 1, Part: 15] has existed!

I20230128 09:50:07.554275 5015 NebulaStore.cpp:430] [Space: 1, Part: 16] has existed!

I20230128 09:50:07.554289 5015 NebulaStore.cpp:430] [Space: 1, Part: 17] has existed!

I20230128 09:50:07.554302 5015 NebulaStore.cpp:430] [Space: 1, Part: 18] has existed!

I20230128 09:50:07.554315 5015 NebulaStore.cpp:430] [Space: 1, Part: 19] has existed!

I20230128 09:50:07.554328 5015 NebulaStore.cpp:430] [Space: 1, Part: 20] has existed!

I20230128 09:50:07.554347 5015 NebulaStore.cpp:430] [Space: 1, Part: 21] has existed!

I20230128 09:50:07.554360 5015 NebulaStore.cpp:430] [Space: 1, Part: 22] has existed!

I20230128 09:50:07.554378 5015 NebulaStore.cpp:430] [Space: 1, Part: 23] has existed!

I20230128 09:50:07.554397 5015 NebulaStore.cpp:430] [Space: 1, Part: 24] has existed!

I20230128 09:50:07.554414 5015 NebulaStore.cpp:430] [Space: 1, Part: 25] has existed!

I20230128 09:50:07.554430 5015 NebulaStore.cpp:430] [Space: 1, Part: 26] has existed!

I20230128 09:50:07.554442 5015 NebulaStore.cpp:430] [Space: 1, Part: 27] has existed!

I20230128 09:50:07.554455 5015 NebulaStore.cpp:430] [Space: 1, Part: 28] has existed!

I20230128 09:50:07.554468 5015 NebulaStore.cpp:430] [Space: 1, Part: 29] has existed!

I20230128 09:50:07.554481 5015 NebulaStore.cpp:430] [Space: 1, Part: 30] has existed!

I20230128 09:50:07.554563 5015 NebulaStore.cpp:78] Register handler...

I20230128 09:50:07.554576 5015 StorageServer.cpp:228] Init LogMonitor

I20230128 09:50:07.554723 5015 StorageServer.cpp:96] Starting Storage HTTP Service

I20230128 09:50:07.555509 5015 StorageServer.cpp:100] Http Thread Pool started

I20230128 09:50:07.560770 5179 WebService.cpp:124] Web service started on HTTP[19779]

I20230128 09:50:07.560891 5015 TransactionManager.cpp:24] TransactionManager ctor()

I20230128 09:50:07.561961 5015 RocksEngineConfig.cpp:366] Emplace rocksdb option max_background_jobs=8

I20230128 09:50:07.561991 5015 RocksEngineConfig.cpp:366] Emplace rocksdb option max_subcompactions=8

I20230128 09:50:07.562110 5015 RocksEngineConfig.cpp:366] Emplace rocksdb option max_bytes_for_level_base=268435456

I20230128 09:50:07.562124 5015 RocksEngineConfig.cpp:366] Emplace rocksdb option max_write_buffer_number=4

I20230128 09:50:07.562136 5015 RocksEngineConfig.cpp:366] Emplace rocksdb option write_buffer_size=67108864

I20230128 09:50:07.562150 5015 RocksEngineConfig.cpp:366] Emplace rocksdb option disable_auto_compactions=true

I20230128 09:50:07.562343 5015 RocksEngineConfig.cpp:366] Emplace rocksdb option block_size=8192

I20230128 09:50:07.641964 5015 RocksEngine.cpp:97] open rocksdb on /mnt/nebula330/data/storage/nebula/0/data

I20230128 09:50:07.642197 5015 AdminTaskManager.cpp:22] max concurrent subtasks: 10

I20230128 09:50:07.642505 5015 AdminTaskManager.cpp:40] exit AdminTaskManager::init()

I20230128 09:50:07.642700 5200 AdminTaskManager.cpp:227] waiting for incoming task

I20230128 09:50:28.812856 5081 MetaClient.cpp:3108] Load leader of "172.18.103.113":9779 in 0 space

I20230128 09:50:28.812965 5081 MetaClient.cpp:3108] Load leader of "172.18.103.120":9779 in 1 space

I20230128 09:50:28.812988 5081 MetaClient.cpp:3108] Load leader of "172.18.103.125":9779 in 1 space

I20230128 09:50:28.812997 5081 MetaClient.cpp:3114] Load leader ok

(root@nebula) [Unified]> show job 6

+----------------+------------------+------------+----------------------------+----------------------------+-------------------+

| Job Id(TaskId) | Command(Dest)    | Status     | Start Time                 | Stop Time                  | Error Code        |

+----------------+------------------+------------+----------------------------+----------------------------+-------------------+

| 6              | "COMPACT"        | "RUNNING"  | 2023-01-14T00:18:32.000000 |                            | "E_JOB_SUBMITTED" |

| 0              | "172.18.103.120" | "FAILED"   | 2023-01-14T00:18:32.000000 | 2023-01-14T06:22:42.000000 | ""                |

| 1              | "172.18.103.125" | "FAILED"   | 2023-01-14T00:18:32.000000 | 2023-01-14T17:22:08.000000 | ""                |

| 2              | "172.18.103.113" | "RUNNING"  | 2023-01-14T00:18:32.000000 |                            | ""                |

| "Total:3"      | "Succeeded:0"    | "Failed:2" | "In Progress:1"            | ""                         | ""                |

+----------------+------------------+------------+----------------------------+----------------------------+-------------------+

(root@nebula) [(none)]> show hosts

+------------------+------+-----------+----------+--------------+----------------------+------------------------+---------+

| Host             | Port | HTTP port | Status   | Leader count | Leader distribution  | Partition distribution | Version |

+------------------+------+-----------+----------+--------------+----------------------+------------------------+---------+

| "172.18.103.113" | 9779 | 19779     | "ONLINE" | 0            | "No valid partition" | "Unified:30"           | "3.3.0" |

| "172.18.103.120" | 9779 | 19779     | "ONLINE" | 13           | "Unified:13"         | "Unified:30"           | "3.3.0" |

| "172.18.103.125" | 9779 | 19779     | "ONLINE" | 17           | "Unified:17"         | "Unified:30"           | "3.3.0" |

+------------------+------+-----------+----------+--------------+----------------------+------------------------+---------+

spw


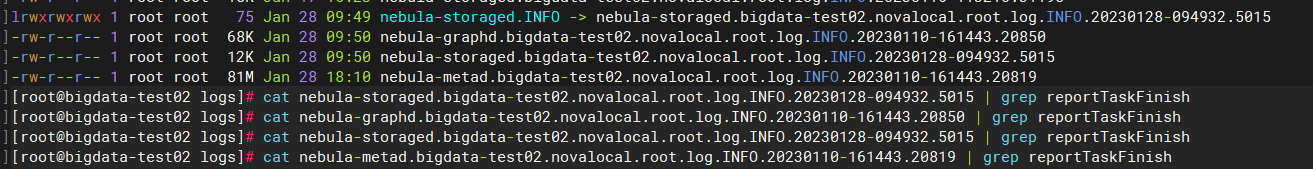

After 172.18.103.113 was restarted, are there any log lines containing reportTaskFinish()? If so, please post them.

spw


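That's a bit odd. Please check the following:

1. Look through all of the storaged logs on the 113 machine.

2. Has the data folder on this machine been touched?

3. You can stop the job. From the code's point of view, this compact job has failed no matter what.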

spw


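I checked with a colleague, and this is indeed a bug. It is fixed in this PR: https://github.com/vesoft-inc/nebula/pull/5195

You can simply leave this dead job where it is. If it gets in the way of jobs you submit later, restart metad.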


Reid00


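It has been more than an hour since I restarted metad. The subsequent LEADER_BALANCE job is indeed RUNNING now, but this one (job 6) still never finishes.

I checked the metad logs: there are only normal heartbeat messages, nothing else.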

(root@nebula) [(none)]> show hosts

+------------------+------+-----------+----------+--------------+----------------------+------------------------+---------+

| Host             | Port | HTTP port | Status   | Leader count | Leader distribution  | Partition distribution | Version |

+------------------+------+-----------+----------+--------------+----------------------+------------------------+---------+

| "172.18.103.113" | 9779 | 19779     | "ONLINE" | 0            | "No valid partition" | "Unified:30"           | "3.3.0" |

| "172.18.103.120" | 9779 | 19779     | "ONLINE" | 13           | "Unified:13"         | "Unified:30"           | "3.3.0" |

| "172.18.103.125" | 9779 | 19779     | "ONLINE" | 17           | "Unified:17"         | "Unified:30"           | "3.3.0" |

+------------------+------+-----------+----------+--------------+----------------------+------------------------+---------+

Got 3 rows (time spent 4.422ms/6.104329ms)

Sun, 29 Jan 2023 15:57:48 CST

(root@nebula) [(none)]> use Unified

Execution succeeded (time spent 2.106ms/3.05725ms)

Sun, 29 Jan 2023 15:57:52 CST

(root@nebula) [Unified]> show jobs

+--------+------------------+-----------+----------------------------+-----------+

| Job Id | Command          | Status    | Start Time                 | Stop Time |

+--------+------------------+-----------+----------------------------+-----------+

| 7      | "LEADER_BALANCE" | "RUNNING" | 2023-01-29T05:47:43.000000 |           |

| 6      | "COMPACT"        | "RUNNING" | 2023-01-14T00:18:32.000000 |           |

+--------+------------------+-----------+----------------------------+-----------+

Got 2 rows (time spent 23.079ms/24.300296ms)

Sun, 29 Jan 2023 15:57:53 CST

spw


Right. Because of the bug mentioned above, this status can no longer be changed. You can ignore it; it does not affect other jobs.

Reid00


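I have another question:

I added a new data_path to storaged, and I want to use BALANCE DATA to spread the data evenly across the two directories.

However, BALANCE DATA turns out to be disabled now.

I saw this PR. How should I go about balancing the data across the two directories?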

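That PR only moves the data balance switch under the experimental features, to keep it separate from the TOSS feature. To use it, turn on both of these flags at the same time:

--enable_experimental_feature=true

--enable_data_balance=true

After that, data balance is available again.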

Reid00


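In storaged, --data_path was originally left at its default value, --data_path=data/storage.

Later I ran out of disk space, so I added a new disk and changed the setting to --data_path=data/storage,/data/nebula330storage.

After enabling the flags, I found that BALANCE DATA does not rebalance the existing data onto the new directory. How can I achieve that?

Data balance moves partitions between hosts, so it cannot do what you want here. You have two options: change the data path and move one storage host's data onto the new disk yourself, or add a new host backed by the new disk and then run data balance.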
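The difference between host-level and directory-level balancing can be illustrated with a simplified Python sketch. It is not NebulaGraph's balancing algorithm: it only evens out the number of partition replicas per host and ignores real placement rules, such as keeping the replicas of one partition on different hosts. The point it shows is that the data paths inside a host are not an input at all. For the SHOW HOSTS output above, where every host already holds Unified:30, it plans no moves. With a hypothetical fourth host, here called new-host, backed by the new disk, it plans moves onto that host, which matches the second option in the reply.

```python
from typing import Dict, List, Tuple


def plan_moves(replicas_per_host: Dict[str, int]) -> List[Tuple[str, str]]:
    """Plan (source_host, dest_host) moves that even out replica counts per host.

    Simplified illustration: only the number of replicas on each host is
    considered. Which data path a replica lives in is not an input, so a
    plan like this can never move data between directories on one host.
    """
    hosts = sorted(replicas_per_host)
    total = sum(replicas_per_host.values())
    base, extra = divmod(total, len(hosts))
    # The first `extra` hosts (in sorted order) may keep one replica more.
    target = {h: base + (1 if i < extra else 0) for i, h in enumerate(hosts)}
    sources: List[str] = []
    dests: List[str] = []
    for h in hosts:
        diff = replicas_per_host[h] - target[h]
        sources.extend([h] * max(diff, 0))   # one entry per surplus replica
        dests.extend([h] * max(-diff, 0))    # one entry per missing replica
    # Surpluses and deficits add up to the same number, so zip pairs them all.
    return list(zip(sources, dests))


# Partition distribution from SHOW HOSTS: every host holds Unified:30.
current = {"172.18.103.113": 30, "172.18.103.120": 30, "172.18.103.125": 30}
print(plan_moves(current))  # []

# Hypothetical: an extra, empty host backed by the new disk.
with_new_host = {**current, "new-host": 0}
print(len(plan_moves(with_new_host)))  # 22
```

Because each of the three existing hosts already holds all 30 replicas, there is nothing for host-level balancing to even out, which is consistent with BALANCE DATA leaving the new directory empty.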