Still not working: no SST files were generated, and the command line reports errors. The data used is still the JSON data posted above.

(base) [consumer@localhost nebula-docker-compose-v2.0]$ $SPARK_HOME/bin/spark-submit --class com.vesoft.nebula.exchange.Exchange --master spark://localhost:7077 nebula-spark-utils/nebula-exchange/target/nebula-exchange-2.0.0.jar -c nebula_application_sst.conf

21/01/21 14:50:09 WARN util.Utils: Your hostname, localhost resolves to a loopback address: 127.0.0.1; using 192.168.100.72 instead (on interface em3)

21/01/21 14:50:09 WARN util.Utils: Set SPARK_LOCAL_IP if you need to bind to another address

21/01/21 14:50:09 WARN util.NativeCodeLoader: Unable to load native-hadoop library for your platform... using builtin-java classes where applicable

21/01/21 14:50:09 INFO config.Configs$: DataBase Config com.vesoft.nebula.exchange.config.DataBaseConfigEntry@eac734d9

21/01/21 14:50:09 INFO config.Configs$: User Config com.vesoft.nebula.exchange.config.UserConfigEntry@3ac82b6c

21/01/21 14:50:10 INFO config.Configs$: Connection Config Some(Config(SimpleConfigObject({"retry":3,"timeout":3000})))

21/01/21 14:50:10 INFO config.Configs$: Execution Config com.vesoft.nebula.exchange.config.ExecutionConfigEntry@7f9c3944

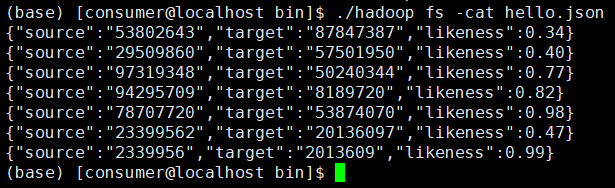

21/01/21 14:50:10 INFO config.Configs$: Source Config File source path: hdfs://127.0.0.1:9000/user/consumer/hello.json, separator: None, header: None

21/01/21 14:50:10 INFO config.Configs$: Sink Config File source path: hdfs://127.0.0.1:9000/user/consumer/hello.json, separator: None, header: None

21/01/21 14:50:10 INFO config.Configs$: name source batch 256

21/01/21 14:50:10 INFO config.Configs$: Tag Config: Tag name: source, source: File source path: hdfs://127.0.0.1:9000/user/consumer/hello.json, separator: None, header: None, sink: File sink: from /home/consumer/nebula-docker-compose-v2.0/sst to hdfs://127.0.0.1:9000/user/consumer/sst/, vertex field: source, vertex policy: None, batch: 256, partition: 32.

21/01/21 14:50:10 INFO config.Configs$: Source Config File source path: hdfs://127.0.0.1:9000/user/consumer/hello.json, separator: None, header: None

21/01/21 14:50:10 INFO config.Configs$: Sink Config File source path: hdfs://127.0.0.1:9000/user/consumer/hello.json, separator: None, header: None

21/01/21 14:50:10 INFO config.Configs$: name target batch 256

21/01/21 14:50:10 INFO config.Configs$: Tag Config: Tag name: target, source: File source path: hdfs://127.0.0.1:9000/user/consumer/hello.json, separator: None, header: None, sink: File sink: from /home/consumer/nebula-docker-compose-v2.0/sst to hdfs://127.0.0.1:9000/user/consumer/sst/, vertex field: target, vertex policy: None, batch: 256, partition: 32.

21/01/21 14:50:10 INFO config.Configs$: Source Config File source path: hdfs://127.0.0.1:9000/user/consumer/hello.json, separator: None, header: None

21/01/21 14:50:10 INFO config.Configs$: Sink Config File source path: hdfs://127.0.0.1:9000/user/consumer/hello.json, separator: None, header: None

21/01/21 14:50:10 INFO config.Configs$: Edge Config: Edge name: like, source: File source path: hdfs://127.0.0.1:9000/user/consumer/hello.json, separator: None, header: None, sink: File sink: from /home/consumer/nebula-docker-compose-v2.0/sst to hdfs://127.0.0.1:9000/user/consumer/sst/, source field: source, source policy: None, ranking: None, target field: target, target policy: None, batch: 256, partition: 32.

21/01/21 14:50:10 INFO exchange.Exchange$: Config Configs(com.vesoft.nebula.exchange.config.DataBaseConfigEntry@eac734d9,com.vesoft.nebula.exchange.config.UserConfigEntry@3ac82b6c,com.vesoft.nebula.exchange.config.ConnectionConfigEntry@c419f174,com.vesoft.nebula.exchange.config.ExecutionConfigEntry@7f9c3944,com.vesoft.nebula.exchange.config.ErrorConfigEntry@55508fa6,com.vesoft.nebula.exchange.config.RateConfigEntry@fc4543af,,List(Tag name: source, source: File source path: hdfs://127.0.0.1:9000/user/consumer/hello.json, separator: None, header: None, sink: File sink: from /home/consumer/nebula-docker-compose-v2.0/sst to hdfs://127.0.0.1:9000/user/consumer/sst/, vertex field: source, vertex policy: None, batch: 256, partition: 32., Tag name: target, source: File source path: hdfs://127.0.0.1:9000/user/consumer/hello.json, separator: None, header: None, sink: File sink: from /home/consumer/nebula-docker-compose-v2.0/sst to hdfs://127.0.0.1:9000/user/consumer/sst/, vertex field: target, vertex policy: None, batch: 256, partition: 32.),List(Edge name: like, source: File source path: hdfs://127.0.0.1:9000/user/consumer/hello.json, separator: None, header: None, sink: File sink: from /home/consumer/nebula-docker-compose-v2.0/sst to hdfs://127.0.0.1:9000/user/consumer/sst/, source field: source, source policy: None, ranking: None, target field: target, target policy: None, batch: 256, partition: 32.),None)

21/01/21 14:50:10 INFO spark.SparkContext: Running Spark version 2.4.7

21/01/21 14:50:10 INFO spark.SparkContext: Submitted application: Nebula Exchange 2.0

21/01/21 14:50:10 INFO spark.SecurityManager: Changing view acls to: consumer

21/01/21 14:50:10 INFO spark.SecurityManager: Changing modify acls to: consumer

21/01/21 14:50:10 INFO spark.SecurityManager: Changing view acls groups to:

21/01/21 14:50:10 INFO spark.SecurityManager: Changing modify acls groups to:

21/01/21 14:50:10 INFO spark.SecurityManager: SecurityManager: authentication disabled; ui acls disabled; users with view permissions: Set(consumer); groups with view permissions: Set(); users with modify permissions: Set(consumer); groups with modify permissions: Set()

21/01/21 14:50:10 INFO util.Utils: Successfully started service 'sparkDriver' on port 46248.

21/01/21 14:50:10 INFO spark.SparkEnv: Registering MapOutputTracker

21/01/21 14:50:10 INFO spark.SparkEnv: Registering BlockManagerMaster

21/01/21 14:50:10 INFO storage.BlockManagerMasterEndpoint: Using org.apache.spark.storage.DefaultTopologyMapper for getting topology information

21/01/21 14:50:10 INFO storage.BlockManagerMasterEndpoint: BlockManagerMasterEndpoint up

21/01/21 14:50:10 INFO storage.DiskBlockManager: Created local directory at /home/consumer/nebula-docker-compose-v2.0/spark-2.4.7/blockmgr-3ae6e52d-6031-4aea-9d8c-8e85d9d1a404

21/01/21 14:50:10 INFO memory.MemoryStore: MemoryStore started with capacity 93.3 MB

21/01/21 14:50:10 INFO spark.SparkEnv: Registering OutputCommitCoordinator

21/01/21 14:50:10 INFO util.log: Logging initialized @1801ms

21/01/21 14:50:10 INFO server.Server: jetty-9.3.z-SNAPSHOT, build timestamp: unknown, git hash: unknown

21/01/21 14:50:10 INFO server.Server: Started @1873ms

21/01/21 14:50:10 INFO server.AbstractConnector: Started ServerConnector@60e949e1{HTTP/1.1,[http/1.1]}{0.0.0.0:4040}

21/01/21 14:50:10 INFO util.Utils: Successfully started service 'SparkUI' on port 4040.

21/01/21 14:50:10 INFO handler.ContextHandler: Started o.s.j.s.ServletContextHandler@56f2bbea{/jobs,null,AVAILABLE,@Spark}

21/01/21 14:50:10 INFO handler.ContextHandler: Started o.s.j.s.ServletContextHandler@3935e9a8{/jobs/json,null,AVAILABLE,@Spark}

21/01/21 14:50:10 INFO handler.ContextHandler: Started o.s.j.s.ServletContextHandler@288a4658{/jobs/job,null,AVAILABLE,@Spark}

21/01/21 14:50:10 INFO handler.ContextHandler: Started o.s.j.s.ServletContextHandler@452c8a40{/jobs/job/json,null,AVAILABLE,@Spark}

21/01/21 14:50:10 INFO handler.ContextHandler: Started o.s.j.s.ServletContextHandler@534243e4{/stages,null,AVAILABLE,@Spark}

21/01/21 14:50:10 INFO handler.ContextHandler: Started o.s.j.s.ServletContextHandler@29006752{/stages/json,null,AVAILABLE,@Spark}

21/01/21 14:50:10 INFO handler.ContextHandler: Started o.s.j.s.ServletContextHandler@470a9030{/stages/stage,null,AVAILABLE,@Spark}

21/01/21 14:50:10 INFO handler.ContextHandler: Started o.s.j.s.ServletContextHandler@d59970a{/stages/stage/json,null,AVAILABLE,@Spark}

21/01/21 14:50:10 INFO handler.ContextHandler: Started o.s.j.s.ServletContextHandler@1e411d81{/stages/pool,null,AVAILABLE,@Spark}

21/01/21 14:50:10 INFO handler.ContextHandler: Started o.s.j.s.ServletContextHandler@53b98ff6{/stages/pool/json,null,AVAILABLE,@Spark}

21/01/21 14:50:10 INFO handler.ContextHandler: Started o.s.j.s.ServletContextHandler@3e6fd0b9{/storage,null,AVAILABLE,@Spark}

21/01/21 14:50:10 INFO handler.ContextHandler: Started o.s.j.s.ServletContextHandler@7fcff1b9{/storage/json,null,AVAILABLE,@Spark}

21/01/21 14:50:10 INFO handler.ContextHandler: Started o.s.j.s.ServletContextHandler@697446d4{/storage/rdd,null,AVAILABLE,@Spark}

21/01/21 14:50:10 INFO handler.ContextHandler: Started o.s.j.s.ServletContextHandler@76adb233{/storage/rdd/json,null,AVAILABLE,@Spark}

21/01/21 14:50:10 INFO handler.ContextHandler: Started o.s.j.s.ServletContextHandler@36074e47{/environment,null,AVAILABLE,@Spark}

21/01/21 14:50:10 INFO handler.ContextHandler: Started o.s.j.s.ServletContextHandler@36453307{/environment/json,null,AVAILABLE,@Spark}

21/01/21 14:50:10 INFO handler.ContextHandler: Started o.s.j.s.ServletContextHandler@7dcc91fd{/executors,null,AVAILABLE,@Spark}

21/01/21 14:50:10 INFO handler.ContextHandler: Started o.s.j.s.ServletContextHandler@66eb985d{/executors/json,null,AVAILABLE,@Spark}

21/01/21 14:50:10 INFO handler.ContextHandler: Started o.s.j.s.ServletContextHandler@6a9287b1{/executors/threadDump,null,AVAILABLE,@Spark}

21/01/21 14:50:10 INFO handler.ContextHandler: Started o.s.j.s.ServletContextHandler@75504cef{/executors/threadDump/json,null,AVAILABLE,@Spark}

21/01/21 14:50:10 INFO handler.ContextHandler: Started o.s.j.s.ServletContextHandler@6c8a68c1{/static,null,AVAILABLE,@Spark}

21/01/21 14:50:10 INFO handler.ContextHandler: Started o.s.j.s.ServletContextHandler@111610e6{/,null,AVAILABLE,@Spark}

21/01/21 14:50:10 INFO handler.ContextHandler: Started o.s.j.s.ServletContextHandler@4ad4936c{/api,null,AVAILABLE,@Spark}

21/01/21 14:50:10 INFO handler.ContextHandler: Started o.s.j.s.ServletContextHandler@4beddc56{/jobs/job/kill,null,AVAILABLE,@Spark}

21/01/21 14:50:10 INFO handler.ContextHandler: Started o.s.j.s.ServletContextHandler@79b663b3{/stages/stage/kill,null,AVAILABLE,@Spark}

21/01/21 14:50:10 INFO ui.SparkUI: Bound SparkUI to 0.0.0.0, and started at http://localhost:4040

21/01/21 14:50:10 INFO spark.SparkContext: Added JAR file:/home/consumer/nebula-docker-compose-v2.0/nebula-spark-utils/nebula-exchange/target/nebula-exchange-2.0.0.jar at spark://localhost:46248/jars/nebula-exchange-2.0.0.jar with timestamp 1611211810697

21/01/21 14:50:10 INFO client.StandaloneAppClient$ClientEndpoint: Connecting to master spark://localhost:7077...

21/01/21 14:50:10 INFO client.TransportClientFactory: Successfully created connection to localhost/127.0.0.1:7077 after 32 ms (0 ms spent in bootstraps)

21/01/21 14:50:10 INFO cluster.StandaloneSchedulerBackend: Connected to Spark cluster with app ID app-20210121145010-0029

21/01/21 14:50:10 INFO client.StandaloneAppClient$ClientEndpoint: Executor added: app-20210121145010-0029/0 on worker-20210120171427-192.168.100.72-36431 (192.168.100.72:36431) with 16 core(s)

21/01/21 14:50:10 INFO cluster.StandaloneSchedulerBackend: Granted executor ID app-20210121145010-0029/0 on hostPort 192.168.100.72:36431 with 16 core(s), 1024.0 MB RAM

21/01/21 14:50:10 INFO client.StandaloneAppClient$ClientEndpoint: Executor updated: app-20210121145010-0029/0 is now RUNNING

21/01/21 14:50:10 INFO util.Utils: Successfully started service 'org.apache.spark.network.netty.NettyBlockTransferService' on port 42920.

21/01/21 14:50:10 INFO netty.NettyBlockTransferService: Server created on localhost:42920

21/01/21 14:50:10 INFO storage.BlockManager: Using org.apache.spark.storage.RandomBlockReplicationPolicy for block replication policy

21/01/21 14:50:10 INFO storage.BlockManagerMaster: Registering BlockManager BlockManagerId(driver, localhost, 42920, None)

21/01/21 14:50:10 INFO storage.BlockManagerMasterEndpoint: Registering block manager localhost:42920 with 93.3 MB RAM, BlockManagerId(driver, localhost, 42920, None)

21/01/21 14:50:10 INFO storage.BlockManagerMaster: Registered BlockManager BlockManagerId(driver, localhost, 42920, None)

21/01/21 14:50:10 INFO storage.BlockManager: Initialized BlockManager: BlockManagerId(driver, localhost, 42920, None)

21/01/21 14:50:11 INFO handler.ContextHandler: Started o.s.j.s.ServletContextHandler@2b4786dd{/metrics/json,null,AVAILABLE,@Spark}

21/01/21 14:50:11 INFO cluster.StandaloneSchedulerBackend: SchedulerBackend is ready for scheduling beginning after reached minRegisteredResourcesRatio: 0.0

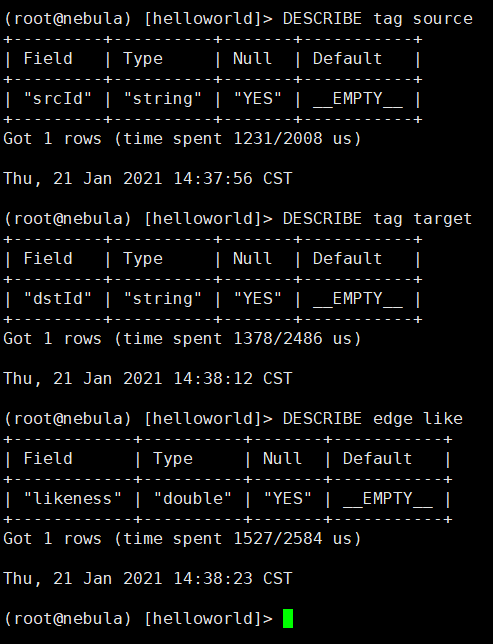

21/01/21 14:50:11 INFO exchange.Exchange$: Processing Tag source

21/01/21 14:50:11 INFO exchange.Exchange$: field keys: source

21/01/21 14:50:11 INFO exchange.Exchange$: nebula keys: srcId

21/01/21 14:50:11 INFO exchange.Exchange$: Loading JSON files from hdfs://127.0.0.1:9000/user/consumer/hello.json

21/01/21 14:50:11 INFO internal.SharedState: Setting hive.metastore.warehouse.dir ('null') to the value of spark.sql.warehouse.dir ('file:/home/consumer/nebula-docker-compose-v2.0/spark-warehouse').

21/01/21 14:50:11 INFO internal.SharedState: Warehouse path is 'file:/home/consumer/nebula-docker-compose-v2.0/spark-warehouse'.

21/01/21 14:50:11 INFO handler.ContextHandler: Started o.s.j.s.ServletContextHandler@1a28b346{/SQL,null,AVAILABLE,@Spark}

21/01/21 14:50:11 INFO handler.ContextHandler: Started o.s.j.s.ServletContextHandler@25e49cb2{/SQL/json,null,AVAILABLE,@Spark}

21/01/21 14:50:11 INFO handler.ContextHandler: Started o.s.j.s.ServletContextHandler@7d5508e0{/SQL/execution,null,AVAILABLE,@Spark}

21/01/21 14:50:11 INFO handler.ContextHandler: Started o.s.j.s.ServletContextHandler@554cd74a{/SQL/execution/json,null,AVAILABLE,@Spark}

21/01/21 14:50:11 INFO handler.ContextHandler: Started o.s.j.s.ServletContextHandler@5a82ebf8{/static/sql,null,AVAILABLE,@Spark}

21/01/21 14:50:12 INFO state.StateStoreCoordinatorRef: Registered StateStoreCoordinator endpoint

21/01/21 14:50:13 INFO datasources.InMemoryFileIndex: It took 380 ms to list leaf files for 1 paths.

21/01/21 14:50:13 INFO datasources.InMemoryFileIndex: It took 4 ms to list leaf files for 1 paths.

21/01/21 14:50:13 INFO cluster.CoarseGrainedSchedulerBackend$DriverEndpoint: Registered executor NettyRpcEndpointRef(spark-client://Executor) (127.0.0.1:35585) with ID 0

21/01/21 14:50:13 INFO storage.BlockManagerMasterEndpoint: Registering block manager 192.168.100.72:36562 with 366.3 MB RAM, BlockManagerId(0, 192.168.100.72, 36562, None)

21/01/21 14:50:14 INFO datasources.FileSourceStrategy: Pruning directories with:

21/01/21 14:50:14 INFO datasources.FileSourceStrategy: Post-Scan Filters:

21/01/21 14:50:14 INFO datasources.FileSourceStrategy: Output Data Schema: struct<value: string>

21/01/21 14:50:14 INFO execution.FileSourceScanExec: Pushed Filters:

21/01/21 14:50:15 INFO codegen.CodeGenerator: Code generated in 167.649553 ms

21/01/21 14:50:15 INFO memory.MemoryStore: Block broadcast_0 stored as values in memory (estimated size 278.8 KB, free 93.0 MB)

21/01/21 14:50:15 INFO memory.MemoryStore: Block broadcast_0_piece0 stored as bytes in memory (estimated size 23.6 KB, free 93.0 MB)

21/01/21 14:50:15 INFO storage.BlockManagerInfo: Added broadcast_0_piece0 in memory on localhost:42920 (size: 23.6 KB, free: 93.3 MB)

21/01/21 14:50:15 INFO spark.SparkContext: Created broadcast 0 from json at FileBaseReader.scala:67

21/01/21 14:50:15 INFO execution.FileSourceScanExec: Planning scan with bin packing, max size: 4194304 bytes, open cost is considered as scanning 4194304 bytes.

21/01/21 14:50:15 INFO spark.SparkContext: Starting job: json at FileBaseReader.scala:67

21/01/21 14:50:15 INFO scheduler.DAGScheduler: Got job 0 (json at FileBaseReader.scala:67) with 1 output partitions

21/01/21 14:50:15 INFO scheduler.DAGScheduler: Final stage: ResultStage 0 (json at FileBaseReader.scala:67)

21/01/21 14:50:15 INFO scheduler.DAGScheduler: Parents of final stage: List()

21/01/21 14:50:15 INFO scheduler.DAGScheduler: Missing parents: List()

21/01/21 14:50:15 INFO scheduler.DAGScheduler: Submitting ResultStage 0 (MapPartitionsRDD[3] at json at FileBaseReader.scala:67), which has no missing parents

21/01/21 14:50:15 INFO memory.MemoryStore: Block broadcast_1 stored as values in memory (estimated size 10.9 KB, free 93.0 MB)

21/01/21 14:50:15 INFO memory.MemoryStore: Block broadcast_1_piece0 stored as bytes in memory (estimated size 6.1 KB, free 93.0 MB)

21/01/21 14:50:15 INFO storage.BlockManagerInfo: Added broadcast_1_piece0 in memory on localhost:42920 (size: 6.1 KB, free: 93.3 MB)

21/01/21 14:50:15 INFO spark.SparkContext: Created broadcast 1 from broadcast at DAGScheduler.scala:1184

21/01/21 14:50:15 INFO scheduler.DAGScheduler: Submitting 1 missing tasks from ResultStage 0 (MapPartitionsRDD[3] at json at FileBaseReader.scala:67) (first 15 tasks are for partitions Vector(0))

21/01/21 14:50:15 INFO scheduler.TaskSchedulerImpl: Adding task set 0.0 with 1 tasks

21/01/21 14:50:15 INFO scheduler.TaskSetManager: Starting task 0.0 in stage 0.0 (TID 0, 192.168.100.72, executor 0, partition 0, PROCESS_LOCAL, 8266 bytes)

21/01/21 14:50:16 INFO storage.BlockManagerInfo: Added broadcast_1_piece0 in memory on 192.168.100.72:36562 (size: 6.1 KB, free: 366.3 MB)

21/01/21 14:50:17 INFO storage.BlockManagerInfo: Added broadcast_0_piece0 in memory on 192.168.100.72:36562 (size: 23.6 KB, free: 366.3 MB)

21/01/21 14:50:18 INFO scheduler.TaskSetManager: Finished task 0.0 in stage 0.0 (TID 0) in 2831 ms on 192.168.100.72 (executor 0) (1/1)

21/01/21 14:50:18 INFO scheduler.TaskSchedulerImpl: Removed TaskSet 0.0, whose tasks have all completed, from pool

21/01/21 14:50:18 INFO scheduler.DAGScheduler: ResultStage 0 (json at FileBaseReader.scala:67) finished in 2.957 s

21/01/21 14:50:18 INFO scheduler.DAGScheduler: Job 0 finished: json at FileBaseReader.scala:67, took 3.017872 s

21/01/21 14:50:18 INFO storage.BlockManagerInfo: Removed broadcast_1_piece0 on localhost:42920 in memory (size: 6.1 KB, free: 93.3 MB)

21/01/21 14:50:18 INFO datasources.FileSourceStrategy: Pruning directories with:

21/01/21 14:50:18 INFO datasources.FileSourceStrategy: Post-Scan Filters:

21/01/21 14:50:18 INFO datasources.FileSourceStrategy: Output Data Schema: struct<likeness: double, source: string, target: string ... 1 more fields>

21/01/21 14:50:18 INFO execution.FileSourceScanExec: Pushed Filters:

21/01/21 14:50:18 INFO storage.BlockManagerInfo: Removed broadcast_1_piece0 on 192.168.100.72:36562 in memory (size: 6.1 KB, free: 366.3 MB)

21/01/21 14:50:18 INFO codegen.CodeGenerator: Code generated in 32.122587 ms

21/01/21 14:50:18 INFO memory.MemoryStore: Block broadcast_2 stored as values in memory (estimated size 278.6 KB, free 92.7 MB)

21/01/21 14:50:18 INFO memory.MemoryStore: Block broadcast_2_piece0 stored as bytes in memory (estimated size 23.6 KB, free 92.7 MB)

21/01/21 14:50:18 INFO storage.BlockManagerInfo: Added broadcast_2_piece0 in memory on localhost:42920 (size: 23.6 KB, free: 93.3 MB)

21/01/21 14:50:18 INFO spark.SparkContext: Created broadcast 2 from foreachPartition at VerticesProcessor.scala:153

21/01/21 14:50:18 INFO execution.FileSourceScanExec: Planning scan with bin packing, max size: 4194304 bytes, open cost is considered as scanning 4194304 bytes.

21/01/21 14:50:18 INFO spark.SparkContext: Starting job: foreachPartition at VerticesProcessor.scala:153

21/01/21 14:50:18 INFO scheduler.DAGScheduler: Registering RDD 7 (foreachPartition at VerticesProcessor.scala:153) as input to shuffle 0

21/01/21 14:50:18 INFO scheduler.DAGScheduler: Got job 1 (foreachPartition at VerticesProcessor.scala:153) with 32 output partitions

21/01/21 14:50:18 INFO scheduler.DAGScheduler: Final stage: ResultStage 2 (foreachPartition at VerticesProcessor.scala:153)

21/01/21 14:50:18 INFO scheduler.DAGScheduler: Parents of final stage: List(ShuffleMapStage 1)

21/01/21 14:50:18 INFO scheduler.DAGScheduler: Missing parents: List(ShuffleMapStage 1)

21/01/21 14:50:18 INFO scheduler.DAGScheduler: Submitting ShuffleMapStage 1 (MapPartitionsRDD[7] at foreachPartition at VerticesProcessor.scala:153), which has no missing parents

21/01/21 14:50:18 INFO memory.MemoryStore: Block broadcast_3 stored as values in memory (estimated size 11.6 KB, free 92.7 MB)

21/01/21 14:50:18 INFO memory.MemoryStore: Block broadcast_3_piece0 stored as bytes in memory (estimated size 6.3 KB, free 92.7 MB)

21/01/21 14:50:18 INFO storage.BlockManagerInfo: Added broadcast_3_piece0 in memory on localhost:42920 (size: 6.3 KB, free: 93.2 MB)

21/01/21 14:50:18 INFO spark.SparkContext: Created broadcast 3 from broadcast at DAGScheduler.scala:1184

21/01/21 14:50:18 INFO scheduler.DAGScheduler: Submitting 1 missing tasks from ShuffleMapStage 1 (MapPartitionsRDD[7] at foreachPartition at VerticesProcessor.scala:153) (first 15 tasks are for partitions Vector(0))

21/01/21 14:50:18 INFO scheduler.TaskSchedulerImpl: Adding task set 1.0 with 1 tasks

21/01/21 14:50:18 INFO scheduler.TaskSetManager: Starting task 0.0 in stage 1.0 (TID 1, 192.168.100.72, executor 0, partition 0, PROCESS_LOCAL, 8255 bytes)

21/01/21 14:50:18 INFO storage.BlockManagerInfo: Added broadcast_3_piece0 in memory on 192.168.100.72:36562 (size: 6.3 KB, free: 366.3 MB)

21/01/21 14:50:19 INFO storage.BlockManagerInfo: Added broadcast_2_piece0 in memory on 192.168.100.72:36562 (size: 23.6 KB, free: 366.2 MB)

21/01/21 14:50:19 INFO scheduler.TaskSetManager: Finished task 0.0 in stage 1.0 (TID 1) in 218 ms on 192.168.100.72 (executor 0) (1/1)

21/01/21 14:50:19 INFO scheduler.TaskSchedulerImpl: Removed TaskSet 1.0, whose tasks have all completed, from pool

21/01/21 14:50:19 INFO scheduler.DAGScheduler: ShuffleMapStage 1 (foreachPartition at VerticesProcessor.scala:153) finished in 0.239 s

21/01/21 14:50:19 INFO scheduler.DAGScheduler: looking for newly runnable stages

21/01/21 14:50:19 INFO scheduler.DAGScheduler: running: Set()

21/01/21 14:50:19 INFO scheduler.DAGScheduler: waiting: Set(ResultStage 2)

21/01/21 14:50:19 INFO scheduler.DAGScheduler: failed: Set()

21/01/21 14:50:19 INFO scheduler.DAGScheduler: Submitting ResultStage 2 (MapPartitionsRDD[14] at foreachPartition at VerticesProcessor.scala:153), which has no missing parents

21/01/21 14:50:19 INFO memory.MemoryStore: Block broadcast_4 stored as values in memory (estimated size 32.4 KB, free 92.7 MB)

21/01/21 14:50:19 INFO memory.MemoryStore: Block broadcast_4_piece0 stored as bytes in memory (estimated size 15.8 KB, free 92.6 MB)

21/01/21 14:50:19 INFO storage.BlockManagerInfo: Added broadcast_4_piece0 in memory on localhost:42920 (size: 15.8 KB, free: 93.2 MB)

21/01/21 14:50:19 INFO spark.SparkContext: Created broadcast 4 from broadcast at DAGScheduler.scala:1184

21/01/21 14:50:19 INFO scheduler.DAGScheduler: Submitting 32 missing tasks from ResultStage 2 (MapPartitionsRDD[14] at foreachPartition at VerticesProcessor.scala:153) (first 15 tasks are for partitions Vector(0, 1, 2, 3, 4, 5, 6, 7, 8, 9, 10, 11, 12, 13, 14))

21/01/21 14:50:19 INFO scheduler.TaskSchedulerImpl: Adding task set 2.0 with 32 tasks

21/01/21 14:50:19 INFO scheduler.TaskSetManager: Starting task 24.0 in stage 2.0 (TID 2, 192.168.100.72, executor 0, partition 24, NODE_LOCAL, 7771 bytes)

21/01/21 14:50:19 INFO scheduler.TaskSetManager: Starting task 25.0 in stage 2.0 (TID 3, 192.168.100.72, executor 0, partition 25, NODE_LOCAL, 7771 bytes)

21/01/21 14:50:19 INFO scheduler.TaskSetManager: Starting task 26.0 in stage 2.0 (TID 4, 192.168.100.72, executor 0, partition 26, NODE_LOCAL, 7771 bytes)

21/01/21 14:50:19 INFO scheduler.TaskSetManager: Starting task 27.0 in stage 2.0 (TID 5, 192.168.100.72, executor 0, partition 27, NODE_LOCAL, 7771 bytes)

21/01/21 14:50:19 INFO scheduler.TaskSetManager: Starting task 28.0 in stage 2.0 (TID 6, 192.168.100.72, executor 0, partition 28, NODE_LOCAL, 7771 bytes)

21/01/21 14:50:19 INFO scheduler.TaskSetManager: Starting task 29.0 in stage 2.0 (TID 7, 192.168.100.72, executor 0, partition 29, NODE_LOCAL, 7771 bytes)

21/01/21 14:50:19 INFO scheduler.TaskSetManager: Starting task 30.0 in stage 2.0 (TID 8, 192.168.100.72, executor 0, partition 30, NODE_LOCAL, 7771 bytes)

21/01/21 14:50:19 INFO scheduler.TaskSetManager: Starting task 0.0 in stage 2.0 (TID 9, 192.168.100.72, executor 0, partition 0, PROCESS_LOCAL, 7771 bytes)

21/01/21 14:50:19 INFO scheduler.TaskSetManager: Starting task 1.0 in stage 2.0 (TID 10, 192.168.100.72, executor 0, partition 1, PROCESS_LOCAL, 7771 bytes)

21/01/21 14:50:19 INFO scheduler.TaskSetManager: Starting task 2.0 in stage 2.0 (TID 11, 192.168.100.72, executor 0, partition 2, PROCESS_LOCAL, 7771 bytes)

21/01/21 14:50:19 INFO scheduler.TaskSetManager: Starting task 3.0 in stage 2.0 (TID 12, 192.168.100.72, executor 0, partition 3, PROCESS_LOCAL, 7771 bytes)

21/01/21 14:50:19 INFO scheduler.TaskSetManager: Starting task 4.0 in stage 2.0 (TID 13, 192.168.100.72, executor 0, partition 4, PROCESS_LOCAL, 7771 bytes)

21/01/21 14:50:19 INFO scheduler.TaskSetManager: Starting task 5.0 in stage 2.0 (TID 14, 192.168.100.72, executor 0, partition 5, PROCESS_LOCAL, 7771 bytes)

21/01/21 14:50:19 INFO scheduler.TaskSetManager: Starting task 6.0 in stage 2.0 (TID 15, 192.168.100.72, executor 0, partition 6, PROCESS_LOCAL, 7771 bytes)

21/01/21 14:50:19 INFO scheduler.TaskSetManager: Starting task 7.0 in stage 2.0 (TID 16, 192.168.100.72, executor 0, partition 7, PROCESS_LOCAL, 7771 bytes)

21/01/21 14:50:19 INFO scheduler.TaskSetManager: Starting task 8.0 in stage 2.0 (TID 17, 192.168.100.72, executor 0, partition 8, PROCESS_LOCAL, 7771 bytes)

21/01/21 14:50:19 INFO storage.BlockManagerInfo: Added broadcast_4_piece0 in memory on 192.168.100.72:36562 (size: 15.8 KB, free: 366.2 MB)

21/01/21 14:50:19 INFO spark.MapOutputTrackerMasterEndpoint: Asked to send map output locations for shuffle 0 to 127.0.0.1:35585

21/01/21 14:50:20 INFO scheduler.TaskSetManager: Starting task 9.0 in stage 2.0 (TID 18, 192.168.100.72, executor 0, partition 9, PROCESS_LOCAL, 7771 bytes)

21/01/21 14:50:20 INFO scheduler.TaskSetManager: Starting task 10.0 in stage 2.0 (TID 19, 192.168.100.72, executor 0, partition 10, PROCESS_LOCAL, 7771 bytes)

21/01/21 14:50:20 INFO scheduler.TaskSetManager: Starting task 11.0 in stage 2.0 (TID 20, 192.168.100.72, executor 0, partition 11, PROCESS_LOCAL, 7771 bytes)

21/01/21 14:50:20 INFO scheduler.TaskSetManager: Finished task 7.0 in stage 2.0 (TID 16) in 1555 ms on 192.168.100.72 (executor 0) (1/32)

21/01/21 14:50:20 INFO scheduler.TaskSetManager: Starting task 12.0 in stage 2.0 (TID 21, 192.168.100.72, executor 0, partition 12, PROCESS_LOCAL, 7771 bytes)

21/01/21 14:50:20 INFO scheduler.TaskSetManager: Finished task 5.0 in stage 2.0 (TID 14) in 1557 ms on 192.168.100.72 (executor 0) (2/32)

21/01/21 14:50:20 INFO scheduler.TaskSetManager: Finished task 1.0 in stage 2.0 (TID 10) in 1560 ms on 192.168.100.72 (executor 0) (3/32)

21/01/21 14:50:20 INFO scheduler.TaskSetManager: Starting task 13.0 in stage 2.0 (TID 22, 192.168.100.72, executor 0, partition 13, PROCESS_LOCAL, 7771 bytes)

21/01/21 14:50:20 INFO scheduler.TaskSetManager: Finished task 3.0 in stage 2.0 (TID 12) in 1559 ms on 192.168.100.72 (executor 0) (4/32)

21/01/21 14:50:20 INFO scheduler.TaskSetManager: Starting task 14.0 in stage 2.0 (TID 23, 192.168.100.72, executor 0, partition 14, PROCESS_LOCAL, 7771 bytes)

21/01/21 14:50:20 INFO scheduler.TaskSetManager: Finished task 4.0 in stage 2.0 (TID 13) in 1560 ms on 192.168.100.72 (executor 0) (5/32)

21/01/21 14:50:20 INFO scheduler.TaskSetManager: Finished task 6.0 in stage 2.0 (TID 15) in 1559 ms on 192.168.100.72 (executor 0) (6/32)

21/01/21 14:50:20 INFO scheduler.TaskSetManager: Starting task 15.0 in stage 2.0 (TID 24, 192.168.100.72, executor 0, partition 15, PROCESS_LOCAL, 7771 bytes)

21/01/21 14:50:20 INFO scheduler.TaskSetManager: Finished task 2.0 in stage 2.0 (TID 11) in 1563 ms on 192.168.100.72 (executor 0) (7/32)

21/01/21 14:50:20 INFO scheduler.TaskSetManager: Starting task 16.0 in stage 2.0 (TID 25, 192.168.100.72, executor 0, partition 16, PROCESS_LOCAL, 7771 bytes)

21/01/21 14:50:20 INFO scheduler.TaskSetManager: Starting task 17.0 in stage 2.0 (TID 26, 192.168.100.72, executor 0, partition 17, PROCESS_LOCAL, 7771 bytes)

21/01/21 14:50:20 INFO scheduler.TaskSetManager: Finished task 0.0 in stage 2.0 (TID 9) in 1566 ms on 192.168.100.72 (executor 0) (8/32)

21/01/21 14:50:20 INFO scheduler.TaskSetManager: Finished task 8.0 in stage 2.0 (TID 17) in 1562 ms on 192.168.100.72 (executor 0) (9/32)

21/01/21 14:50:20 INFO scheduler.TaskSetManager: Starting task 18.0 in stage 2.0 (TID 27, 192.168.100.72, executor 0, partition 18, PROCESS_LOCAL, 7771 bytes)

21/01/21 14:50:20 INFO scheduler.TaskSetManager: Finished task 9.0 in stage 2.0 (TID 18) in 37 ms on 192.168.100.72 (executor 0) (10/32)

21/01/21 14:50:20 INFO scheduler.TaskSetManager: Starting task 19.0 in stage 2.0 (TID 28, 192.168.100.72, executor 0, partition 19, PROCESS_LOCAL, 7771 bytes)

21/01/21 14:50:20 INFO scheduler.TaskSetManager: Starting task 20.0 in stage 2.0 (TID 29, 192.168.100.72, executor 0, partition 20, PROCESS_LOCAL, 7771 bytes)

21/01/21 14:50:20 INFO scheduler.TaskSetManager: Starting task 21.0 in stage 2.0 (TID 30, 192.168.100.72, executor 0, partition 21, PROCESS_LOCAL, 7771 bytes)

21/01/21 14:50:20 INFO scheduler.TaskSetManager: Starting task 22.0 in stage 2.0 (TID 31, 192.168.100.72, executor 0, partition 22, PROCESS_LOCAL, 7771 bytes)

21/01/21 14:50:20 INFO scheduler.TaskSetManager: Starting task 23.0 in stage 2.0 (TID 32, 192.168.100.72, executor 0, partition 23, PROCESS_LOCAL, 7771 bytes)

21/01/21 14:50:20 INFO scheduler.TaskSetManager: Starting task 31.0 in stage 2.0 (TID 33, 192.168.100.72, executor 0, partition 31, PROCESS_LOCAL, 7771 bytes)

21/01/21 14:50:20 WARN scheduler.TaskSetManager: Lost task 30.0 in stage 2.0 (TID 8, 192.168.100.72, executor 0): java.lang.NullPointerException

at com.vesoft.nebula.client.meta.MetaManager.fillMetaInfo(MetaManager.java:93)

at com.vesoft.nebula.client.meta.MetaManager.getSpace(MetaManager.java:162)

at com.vesoft.nebula.encoder.NebulaCodecImpl.getSpaceVidLen(NebulaCodecImpl.java:54)

at com.vesoft.nebula.encoder.NebulaCodecImpl.vertexKey(NebulaCodecImpl.java:75)

at com.vesoft.nebula.exchange.processor.VerticesProcessor$$anonfun$process$1$$anonfun$apply$1.apply(VerticesProcessor.scala:141)

at com.vesoft.nebula.exchange.processor.VerticesProcessor$$anonfun$process$1$$anonfun$apply$1.apply(VerticesProcessor.scala:117)

at scala.collection.Iterator$$anon$11.next(Iterator.scala:410)

at scala.collection.Iterator$$anon$11.next(Iterator.scala:410)

at org.apache.spark.sql.catalyst.expressions.GeneratedClass$GeneratedIteratorForCodegenStage2.sort_addToSorter_0$(Unknown Source)

at org.apache.spark.sql.catalyst.expressions.GeneratedClass$GeneratedIteratorForCodegenStage2.processNext(Unknown Source)

at org.apache.spark.sql.execution.BufferedRowIterator.hasNext(BufferedRowIterator.java:43)

at org.apache.spark.sql.execution.WholeStageCodegenExec$$anonfun$13$$anon$1.hasNext(WholeStageCodegenExec.scala:636)

at scala.collection.Iterator$$anon$11.hasNext(Iterator.scala:409)

at scala.collection.Iterator$$anon$11.hasNext(Iterator.scala:409)

at scala.collection.Iterator$class.foreach(Iterator.scala:891)

at scala.collection.AbstractIterator.foreach(Iterator.scala:1334)

at com.vesoft.nebula.exchange.processor.VerticesProcessor$$anonfun$process$2.apply(VerticesProcessor.scala:160)

at com.vesoft.nebula.exchange.processor.VerticesProcessor$$anonfun$process$2.apply(VerticesProcessor.scala:153)

at org.apache.spark.rdd.RDD$$anonfun$foreachPartition$1$$anonfun$apply$28.apply(RDD.scala:980)

at org.apache.spark.rdd.RDD$$anonfun$foreachPartition$1$$anonfun$apply$28.apply(RDD.scala:980)

at org.apache.spark.SparkContext$$anonfun$runJob$5.apply(SparkContext.scala:2101)

at org.apache.spark.SparkContext$$anonfun$runJob$5.apply(SparkContext.scala:2101)

at org.apache.spark.scheduler.ResultTask.runTask(ResultTask.scala:90)

at org.apache.spark.scheduler.Task.run(Task.scala:123)

at org.apache.spark.executor.Executor$TaskRunner$$anonfun$10.apply(Executor.scala:408)

at org.apache.spark.util.Utils$.tryWithSafeFinally(Utils.scala:1360)

at org.apache.spark.executor.Executor$TaskRunner.run(Executor.scala:414)

at java.util.concurrent.ThreadPoolExecutor.runWorker(ThreadPoolExecutor.java:1149)

at java.util.concurrent.ThreadPoolExecutor$Worker.run(ThreadPoolExecutor.java:624)

at java.lang.Thread.run(Thread.java:748)

21/01/21 14:50:20 INFO scheduler.TaskSetManager: Lost task 26.0 in stage 2.0 (TID 4) on 192.168.100.72, executor 0: java.lang.NullPointerException (null) [duplicate 1]

21/01/21 14:50:20 INFO scheduler.TaskSetManager: Lost task 27.0 in stage 2.0 (TID 5) on 192.168.100.72, executor 0: java.lang.NullPointerException (null) [duplicate 2]

21/01/21 14:50:20 INFO scheduler.TaskSetManager: Lost task 28.0 in stage 2.0 (TID 6) on 192.168.100.72, executor 0: java.lang.NullPointerException (null) [duplicate 3]

21/01/21 14:50:20 INFO scheduler.TaskSetManager: Starting task 28.1 in stage 2.0 (TID 34, 192.168.100.72, executor 0, partition 28, NODE_LOCAL, 7771 bytes)

21/01/21 14:50:20 INFO scheduler.TaskSetManager: Starting task 27.1 in stage 2.0 (TID 35, 192.168.100.72, executor 0, partition 27, NODE_LOCAL, 7771 bytes)

21/01/21 14:50:20 INFO scheduler.TaskSetManager: Starting task 26.1 in stage 2.0 (TID 36, 192.168.100.72, executor 0, partition 26, NODE_LOCAL, 7771 bytes)

21/01/21 14:50:20 INFO scheduler.TaskSetManager: Finished task 12.0 in stage 2.0 (TID 21) in 81 ms on 192.168.100.72 (executor 0) (11/32)

21/01/21 14:50:20 INFO scheduler.TaskSetManager: Finished task 16.0 in stage 2.0 (TID 25) in 78 ms on 192.168.100.72 (executor 0) (12/32)

21/01/21 14:50:20 INFO scheduler.TaskSetManager: Finished task 10.0 in stage 2.0 (TID 19) in 87 ms on 192.168.100.72 (executor 0) (13/32)

21/01/21 14:50:20 INFO scheduler.TaskSetManager: Finished task 11.0 in stage 2.0 (TID 20) in 85 ms on 192.168.100.72 (executor 0) (14/32)

21/01/21 14:50:20 INFO scheduler.TaskSetManager: Finished task 14.0 in stage 2.0 (TID 23) in 81 ms on 192.168.100.72 (executor 0) (15/32)

21/01/21 14:50:20 INFO scheduler.TaskSetManager: Starting task 30.1 in stage 2.0 (TID 37, 192.168.100.72, executor 0, partition 30, NODE_LOCAL, 7771 bytes)

21/01/21 14:50:20 INFO scheduler.TaskSetManager: Finished task 15.0 in stage 2.0 (TID 24) in 82 ms on 192.168.100.72 (executor 0) (16/32)

21/01/21 14:50:20 INFO scheduler.TaskSetManager: Finished task 13.0 in stage 2.0 (TID 22) in 86 ms on 192.168.100.72 (executor 0) (17/32)

21/01/21 14:50:20 INFO scheduler.TaskSetManager: Finished task 20.0 in stage 2.0 (TID 29) in 35 ms on 192.168.100.72 (executor 0) (18/32)

21/01/21 14:50:20 INFO scheduler.TaskSetManager: Finished task 21.0 in stage 2.0 (TID 30) in 38 ms on 192.168.100.72 (executor 0) (19/32)

21/01/21 14:50:20 INFO scheduler.TaskSetManager: Finished task 19.0 in stage 2.0 (TID 28) in 43 ms on 192.168.100.72 (executor 0) (20/32)

21/01/21 14:50:20 INFO scheduler.TaskSetManager: Finished task 18.0 in stage 2.0 (TID 27) in 67 ms on 192.168.100.72 (executor 0) (21/32)

21/01/21 14:50:20 INFO scheduler.TaskSetManager: Finished task 17.0 in stage 2.0 (TID 26) in 91 ms on 192.168.100.72 (executor 0) (22/32)

21/01/21 14:50:20 INFO scheduler.TaskSetManager: Finished task 31.0 in stage 2.0 (TID 33) in 36 ms on 192.168.100.72 (executor 0) (23/32)

21/01/21 14:50:20 INFO scheduler.TaskSetManager: Finished task 22.0 in stage 2.0 (TID 31) in 44 ms on 192.168.100.72 (executor 0) (24/32)

21/01/21 14:50:20 INFO scheduler.TaskSetManager: Finished task 23.0 in stage 2.0 (TID 32) in 49 ms on 192.168.100.72 (executor 0) (25/32)

21/01/21 14:50:20 WARN scheduler.TaskSetManager: Lost task 24.0 in stage 2.0 (TID 2, 192.168.100.72, executor 0): java.lang.RuntimeException: Value: 2339956's type is unexpected

at com.vesoft.nebula.encoder.RowWriterImpl.write(RowWriterImpl.java:504)

at com.vesoft.nebula.encoder.RowWriterImpl.setValue(RowWriterImpl.java:616)

at com.vesoft.nebula.encoder.RowWriterImpl.setValue(RowWriterImpl.java:599)

at com.vesoft.nebula.encoder.NebulaCodecImpl.encode(NebulaCodecImpl.java:236)

at com.vesoft.nebula.exchange.processor.VerticesProcessor$$anonfun$process$1$$anonfun$apply$1.apply(VerticesProcessor.scala:147)

at com.vesoft.nebula.exchange.processor.VerticesProcessor$$anonfun$process$1$$anonfun$apply$1.apply(VerticesProcessor.scala:117)

at scala.collection.Iterator$$anon$11.next(Iterator.scala:410)

at scala.collection.Iterator$$anon$11.next(Iterator.scala:410)

at org.apache.spark.sql.catalyst.expressions.GeneratedClass$GeneratedIteratorForCodegenStage2.sort_addToSorter_0$(Unknown Source)

at org.apache.spark.sql.catalyst.expressions.GeneratedClass$GeneratedIteratorForCodegenStage2.processNext(Unknown Source)

at org.apache.spark.sql.execution.BufferedRowIterator.hasNext(BufferedRowIterator.java:43)

at org.apache.spark.sql.execution.WholeStageCodegenExec$$anonfun$13$$anon$1.hasNext(WholeStageCodegenExec.scala:636)

at scala.collection.Iterator$$anon$11.hasNext(Iterator.scala:409)

at scala.collection.Iterator$$anon$11.hasNext(Iterator.scala:409)

at scala.collection.Iterator$class.foreach(Iterator.scala:891)

at scala.collection.AbstractIterator.foreach(Iterator.scala:1334)

at com.vesoft.nebula.exchange.processor.VerticesProcessor$$anonfun$process$2.apply(VerticesProcessor.scala:160)

at com.vesoft.nebula.exchange.processor.VerticesProcessor$$anonfun$process$2.apply(VerticesProcessor.scala:153)

at org.apache.spark.rdd.RDD$$anonfun$foreachPartition$1$$anonfun$apply$28.apply(RDD.scala:980)

at org.apache.spark.rdd.RDD$$anonfun$foreachPartition$1$$anonfun$apply$28.apply(RDD.scala:980)

at org.apache.spark.SparkContext$$anonfun$runJob$5.apply(SparkContext.scala:2101)

at org.apache.spark.SparkContext$$anonfun$runJob$5.apply(SparkContext.scala:2101)

at org.apache.spark.scheduler.ResultTask.runTask(ResultTask.scala:90)

at org.apache.spark.scheduler.Task.run(Task.scala:123)

at org.apache.spark.executor.Executor$TaskRunner$$anonfun$10.apply(Executor.scala:408)

at org.apache.spark.util.Utils$.tryWithSafeFinally(Utils.scala:1360)

at org.apache.spark.executor.Executor$TaskRunner.run(Executor.scala:414)

at java.util.concurrent.ThreadPoolExecutor.runWorker(ThreadPoolExecutor.java:1149)

at java.util.concurrent.ThreadPoolExecutor$Worker.run(ThreadPoolExecutor.java:624)

at java.lang.Thread.run(Thread.java:748)

21/01/21 14:50:20 INFO scheduler.TaskSetManager: Starting task 24.1 in stage 2.0 (TID 38, 192.168.100.72, executor 0, partition 24, NODE_LOCAL, 7771 bytes)

21/01/21 14:50:20 WARN scheduler.TaskSetManager: Lost task 30.1 in stage 2.0 (TID 37, 192.168.100.72, executor 0): java.lang.RuntimeException: Value: 94295709's type is unexpected

21/01/21 14:50:20 INFO scheduler.TaskSetManager: Starting task 30.2 in stage 2.0 (TID 39, 192.168.100.72, executor 0, partition 30, NODE_LOCAL, 7771 bytes)

21/01/21 14:50:20 WARN scheduler.TaskSetManager: Lost task 25.0 in stage 2.0 (TID 3, 192.168.100.72, executor 0): java.lang.RuntimeException: Value: 78707720's type is unexpected

21/01/21 14:50:20 INFO scheduler.TaskSetManager: Starting task 25.1 in stage 2.0 (TID 40, 192.168.100.72, executor 0, partition 25, NODE_LOCAL, 7771 bytes)

21/01/21 14:50:20 WARN scheduler.TaskSetManager: Lost task 27.1 in stage 2.0 (TID 35, 192.168.100.72, executor 0): java.lang.RuntimeException: Value: 53802643's type is unexpected

21/01/21 14:50:20 WARN scheduler.TaskSetManager: Lost task 26.1 in stage 2.0 (TID 36, 192.168.100.72, executor 0): java.lang.RuntimeException: Value: 29509860's type is unexpected

21/01/21 14:50:20 WARN scheduler.TaskSetManager: Lost task 28.1 in stage 2.0 (TID 34, 192.168.100.72, executor 0): java.lang.RuntimeException: Value: 97319348's type is unexpected

21/01/21 14:50:20 WARN scheduler.TaskSetManager: Lost task 29.0 in stage 2.0 (TID 7, 192.168.100.72, executor 0): java.lang.RuntimeException: Value: 23399562's type is unexpected

21/01/21 14:50:20 INFO scheduler.TaskSetManager: Starting task 29.1 in stage 2.0 (TID 41, 192.168.100.72, executor 0, partition 29, NODE_LOCAL, 7771 bytes)

21/01/21 14:50:20 INFO scheduler.TaskSetManager: Starting task 28.2 in stage 2.0 (TID 42, 192.168.100.72, executor 0, partition 28, NODE_LOCAL, 7771 bytes)

21/01/21 14:50:20 INFO scheduler.TaskSetManager: Starting task 26.2 in stage 2.0 (TID 43, 192.168.100.72, executor 0, partition 26, NODE_LOCAL, 7771 bytes)

21/01/21 14:50:20 INFO scheduler.TaskSetManager: Starting task 27.2 in stage 2.0 (TID 44, 192.168.100.72, executor 0, partition 27, NODE_LOCAL, 7771 bytes)

21/01/21 14:50:20 INFO storage.BlockManagerInfo: Removed broadcast_3_piece0 on localhost:42920 in memory (size: 6.3 KB, free: 93.2 MB)

21/01/21 14:50:20 INFO scheduler.TaskSetManager: Lost task 24.1 in stage 2.0 (TID 38) on 192.168.100.72, executor 0: java.lang.RuntimeException (Value: 2339956's type is unexpected) [duplicate 1]

21/01/21 14:50:20 INFO scheduler.TaskSetManager: Lost task 30.2 in stage 2.0 (TID 39) on 192.168.100.72, executor 0: java.lang.RuntimeException (Value: 94295709's type is unexpected) [duplicate 1]

21/01/21 14:50:20 INFO scheduler.TaskSetManager: Lost task 25.1 in stage 2.0 (TID 40) on 192.168.100.72, executor 0: java.lang.RuntimeException (Value: 78707720's type is unexpected) [duplicate 1]

21/01/21 14:50:20 INFO storage.BlockManagerInfo: Removed broadcast_3_piece0 on 192.168.100.72:36562 in memory (size: 6.3 KB, free: 366.2 MB)

21/01/21 14:50:20 INFO scheduler.TaskSetManager: Starting task 25.2 in stage 2.0 (TID 45, 192.168.100.72, executor 0, partition 25, NODE_LOCAL, 7771 bytes)

21/01/21 14:50:20 INFO scheduler.TaskSetManager: Starting task 30.3 in stage 2.0 (TID 46, 192.168.100.72, executor 0, partition 30, NODE_LOCAL, 7771 bytes)

21/01/21 14:50:20 INFO scheduler.TaskSetManager: Starting task 24.2 in stage 2.0 (TID 47, 192.168.100.72, executor 0, partition 24, NODE_LOCAL, 7771 bytes)

21/01/21 14:50:20 INFO spark.ContextCleaner: Cleaned accumulator 32

21/01/21 14:50:20 INFO scheduler.TaskSetManager: Lost task 26.2 in stage 2.0 (TID 43) on 192.168.100.72, executor 0: java.lang.RuntimeException (Value: 29509860's type is unexpected) [duplicate 1]

21/01/21 14:50:20 INFO scheduler.TaskSetManager: Starting task 26.3 in stage 2.0 (TID 48, 192.168.100.72, executor 0, partition 26, NODE_LOCAL, 7771 bytes)

21/01/21 14:50:20 INFO scheduler.TaskSetManager: Lost task 27.2 in stage 2.0 (TID 44) on 192.168.100.72, executor 0: java.lang.RuntimeException (Value: 53802643's type is unexpected) [duplicate 1]

21/01/21 14:50:20 INFO scheduler.TaskSetManager: Starting task 27.3 in stage 2.0 (TID 49, 192.168.100.72, executor 0, partition 27, NODE_LOCAL, 7771 bytes)

21/01/21 14:50:20 INFO scheduler.TaskSetManager: Lost task 30.3 in stage 2.0 (TID 46) on 192.168.100.72, executor 0: java.lang.RuntimeException (Value: 94295709's type is unexpected) [duplicate 2]

21/01/21 14:50:20 ERROR scheduler.TaskSetManager: Task 30 in stage 2.0 failed 4 times; aborting job

21/01/21 14:50:21 INFO scheduler.TaskSetManager: Lost task 29.1 in stage 2.0 (TID 41) on 192.168.100.72, executor 0: java.lang.RuntimeException (Value: 23399562's type is unexpected) [duplicate 1]

21/01/21 14:50:21 INFO scheduler.TaskSetManager: Lost task 28.2 in stage 2.0 (TID 42) on 192.168.100.72, executor 0: java.lang.RuntimeException (Value: 97319348's type is unexpected) [duplicate 1]

21/01/21 14:50:21 INFO scheduler.TaskSetManager: Lost task 24.2 in stage 2.0 (TID 47) on 192.168.100.72, executor 0: java.lang.RuntimeException (Value: 2339956's type is unexpected) [duplicate 2]

21/01/21 14:50:21 INFO scheduler.TaskSetManager: Lost task 25.2 in stage 2.0 (TID 45) on 192.168.100.72, executor 0: java.lang.RuntimeException (Value: 78707720's type is unexpected) [duplicate 2]

21/01/21 14:50:21 INFO scheduler.TaskSchedulerImpl: Cancelling stage 2

21/01/21 14:50:21 INFO scheduler.TaskSchedulerImpl: Killing all running tasks in stage 2: Stage cancelled

21/01/21 14:50:21 INFO scheduler.TaskSchedulerImpl: Stage 2 was cancelled

21/01/21 14:50:21 INFO scheduler.TaskSetManager: Lost task 26.3 in stage 2.0 (TID 48) on 192.168.100.72, executor 0: java.lang.RuntimeException (Value: 29509860's type is unexpected) [duplicate 2]

21/01/21 14:50:21 INFO scheduler.DAGScheduler: ResultStage 2 (foreachPartition at VerticesProcessor.scala:153) failed in 1.841 s due to Job aborted due to stage failure: Task 30 in stage 2.0 failed 4 times, most recent failure: Lost task 30.3 in stage 2.0 (TID 46, 192.168.100.72, executor 0): java.lang.RuntimeException: Value: 94295709's type is unexpected

Driver stacktrace:

21/01/21 14:50:21 INFO scheduler.DAGScheduler: Job 1 failed: foreachPartition at VerticesProcessor.scala:153, took 2.119603 s

21/01/21 14:50:21 INFO scheduler.TaskSetManager: Lost task 27.3 in stage 2.0 (TID 49) on 192.168.100.72, executor 0: java.lang.RuntimeException (Value: 53802643's type is unexpected) [duplicate 2]

21/01/21 14:50:21 INFO scheduler.TaskSchedulerImpl: Removed TaskSet 2.0, whose tasks have all completed, from pool

Exception in thread "main" org.apache.spark.SparkException: Job aborted due to stage failure: Task 30 in stage 2.0 failed 4 times, most recent failure: Lost task 30.3 in stage 2.0 (TID 46, 192.168.100.72, executor 0): java.lang.RuntimeException: Value: 94295709's type is unexpected

at com.vesoft.nebula.encoder.RowWriterImpl.write(RowWriterImpl.java:504)

at com.vesoft.nebula.encoder.RowWriterImpl.setValue(RowWriterImpl.java:616)

at com.vesoft.nebula.encoder.RowWriterImpl.setValue(RowWriterImpl.java:599)

at com.vesoft.nebula.encoder.NebulaCodecImpl.encode(NebulaCodecImpl.java:236)

at com.vesoft.nebula.exchange.processor.VerticesProcessor$$anonfun$process$1$$anonfun$apply$1.apply(VerticesProcessor.scala:147)

at com.vesoft.nebula.exchange.processor.VerticesProcessor$$anonfun$process$1$$anonfun$apply$1.apply(VerticesProcessor.scala:117)

at scala.collection.Iterator$$anon$11.next(Iterator.scala:410)

at scala.collection.Iterator$$anon$11.next(Iterator.scala:410)

at org.apache.spark.sql.catalyst.expressions.GeneratedClass$GeneratedIteratorForCodegenStage2.sort_addToSorter_0$(Unknown Source)

at org.apache.spark.sql.catalyst.expressions.GeneratedClass$GeneratedIteratorForCodegenStage2.processNext(Unknown Source)

at org.apache.spark.sql.execution.BufferedRowIterator.hasNext(BufferedRowIterator.java:43)

at org.apache.spark.sql.execution.WholeStageCodegenExec$$anonfun$13$$anon$1.hasNext(WholeStageCodegenExec.scala:636)

at scala.collection.Iterator$$anon$11.hasNext(Iterator.scala:409)

at scala.collection.Iterator$$anon$11.hasNext(Iterator.scala:409)

at scala.collection.Iterator$class.foreach(Iterator.scala:891)

at scala.collection.AbstractIterator.foreach(Iterator.scala:1334)

at com.vesoft.nebula.exchange.processor.VerticesProcessor$$anonfun$process$2.apply(VerticesProcessor.scala:160)

at com.vesoft.nebula.exchange.processor.VerticesProcessor$$anonfun$process$2.apply(VerticesProcessor.scala:153)

at org.apache.spark.rdd.RDD$$anonfun$foreachPartition$1$$anonfun$apply$28.apply(RDD.scala:980)

at org.apache.spark.rdd.RDD$$anonfun$foreachPartition$1$$anonfun$apply$28.apply(RDD.scala:980)

at org.apache.spark.SparkContext$$anonfun$runJob$5.apply(SparkContext.scala:2101)

at org.apache.spark.SparkContext$$anonfun$runJob$5.apply(SparkContext.scala:2101)

at org.apache.spark.scheduler.ResultTask.runTask(ResultTask.scala:90)

at org.apache.spark.scheduler.Task.run(Task.scala:123)

at org.apache.spark.executor.Executor$TaskRunner$$anonfun$10.apply(Executor.scala:408)

at org.apache.spark.util.Utils$.tryWithSafeFinally(Utils.scala:1360)

at org.apache.spark.executor.Executor$TaskRunner.run(Executor.scala:414)

at java.util.concurrent.ThreadPoolExecutor.runWorker(ThreadPoolExecutor.java:1149)

at java.util.concurrent.ThreadPoolExecutor$Worker.run(ThreadPoolExecutor.java:624)

at java.lang.Thread.run(Thread.java:748)

Driver stacktrace:

at org.apache.spark.scheduler.DAGScheduler.org$apache$spark$scheduler$DAGScheduler$$failJobAndIndependentStages(DAGScheduler.scala:1925)

at org.apache.spark.scheduler.DAGScheduler$$anonfun$abortStage$1.apply(DAGScheduler.scala:1913)

at org.apache.spark.scheduler.DAGScheduler$$anonfun$abortStage$1.apply(DAGScheduler.scala:1912)

at scala.collection.mutable.ResizableArray$class.foreach(ResizableArray.scala:59)

at scala.collection.mutable.ArrayBuffer.foreach(ArrayBuffer.scala:48)

at org.apache.spark.scheduler.DAGScheduler.abortStage(DAGScheduler.scala:1912)

at org.apache.spark.scheduler.DAGScheduler$$anonfun$handleTaskSetFailed$1.apply(DAGScheduler.scala:948)

at org.apache.spark.scheduler.DAGScheduler$$anonfun$handleTaskSetFailed$1.apply(DAGScheduler.scala:948)

at scala.Option.foreach(Option.scala:257)

at org.apache.spark.scheduler.DAGScheduler.handleTaskSetFailed(DAGScheduler.scala:948)

at org.apache.spark.scheduler.DAGSchedulerEventProcessLoop.doOnReceive(DAGScheduler.scala:2146)

at org.apache.spark.scheduler.DAGSchedulerEventProcessLoop.onReceive(DAGScheduler.scala:2095)

at org.apache.spark.scheduler.DAGSchedulerEventProcessLoop.onReceive(DAGScheduler.scala:2084)

at org.apache.spark.util.EventLoop$$anon$1.run(EventLoop.scala:49)

at org.apache.spark.scheduler.DAGScheduler.runJob(DAGScheduler.scala:759)

at org.apache.spark.SparkContext.runJob(SparkContext.scala:2061)

at org.apache.spark.SparkContext.runJob(SparkContext.scala:2082)

at org.apache.spark.SparkContext.runJob(SparkContext.scala:2101)

at org.apache.spark.SparkContext.runJob(SparkContext.scala:2126)

at org.apache.spark.rdd.RDD$$anonfun$foreachPartition$1.apply(RDD.scala:980)

at org.apache.spark.rdd.RDD$$anonfun$foreachPartition$1.apply(RDD.scala:978)

at org.apache.spark.rdd.RDDOperationScope$.withScope(RDDOperationScope.scala:151)

at org.apache.spark.rdd.RDDOperationScope$.withScope(RDDOperationScope.scala:112)

at org.apache.spark.rdd.RDD.withScope(RDD.scala:385)

at org.apache.spark.rdd.RDD.foreachPartition(RDD.scala:978)

at org.apache.spark.sql.Dataset$$anonfun$foreachPartition$1.apply$mcV$sp(Dataset.scala:2741)

at org.apache.spark.sql.Dataset$$anonfun$foreachPartition$1.apply(Dataset.scala:2741)

at org.apache.spark.sql.Dataset$$anonfun$foreachPartition$1.apply(Dataset.scala:2741)

at org.apache.spark.sql.Dataset$$anonfun$withNewRDDExecutionId$1.apply(Dataset.scala:3355)

at org.apache.spark.sql.execution.SQLExecution$$anonfun$withNewExecutionId$1.apply(SQLExecution.scala:80)

at org.apache.spark.sql.execution.SQLExecution$.withSQLConfPropagated(SQLExecution.scala:127)

at org.apache.spark.sql.execution.SQLExecution$.withNewExecutionId(SQLExecution.scala:75)

at org.apache.spark.sql.Dataset.withNewRDDExecutionId(Dataset.scala:3351)

at org.apache.spark.sql.Dataset.foreachPartition(Dataset.scala:2740)

at com.vesoft.nebula.exchange.processor.VerticesProcessor.process(VerticesProcessor.scala:153)

at com.vesoft.nebula.exchange.Exchange$$anonfun$main$2.apply(Exchange.scala:139)

at com.vesoft.nebula.exchange.Exchange$$anonfun$main$2.apply(Exchange.scala:116)

at scala.collection.immutable.List.foreach(List.scala:392)

at com.vesoft.nebula.exchange.Exchange$.main(Exchange.scala:116)

at com.vesoft.nebula.exchange.Exchange.main(Exchange.scala)

at sun.reflect.NativeMethodAccessorImpl.invoke0(Native Method)

at sun.reflect.NativeMethodAccessorImpl.invoke(NativeMethodAccessorImpl.java:62)

at sun.reflect.DelegatingMethodAccessorImpl.invoke(DelegatingMethodAccessorImpl.java:43)

at java.lang.reflect.Method.invoke(Method.java:498)

at org.apache.spark.deploy.JavaMainApplication.start(SparkApplication.scala:52)

at org.apache.spark.deploy.SparkSubmit.org$apache$spark$deploy$SparkSubmit$$runMain(SparkSubmit.scala:845)

at org.apache.spark.deploy.SparkSubmit.doRunMain$1(SparkSubmit.scala:161)

at org.apache.spark.deploy.SparkSubmit.submit(SparkSubmit.scala:184)

at org.apache.spark.deploy.SparkSubmit.doSubmit(SparkSubmit.scala:86)

at org.apache.spark.deploy.SparkSubmit$$anon$2.doSubmit(SparkSubmit.scala:920)

at org.apache.spark.deploy.SparkSubmit$.main(SparkSubmit.scala:929)

at org.apache.spark.deploy.SparkSubmit.main(SparkSubmit.scala)

Caused by: java.lang.RuntimeException: Value: 94295709's type is unexpected

at com.vesoft.nebula.encoder.RowWriterImpl.write(RowWriterImpl.java:504)

at com.vesoft.nebula.encoder.RowWriterImpl.setValue(RowWriterImpl.java:616)

at com.vesoft.nebula.encoder.RowWriterImpl.setValue(RowWriterImpl.java:599)

at com.vesoft.nebula.encoder.NebulaCodecImpl.encode(NebulaCodecImpl.java:236)

at com.vesoft.nebula.exchange.processor.VerticesProcessor$$anonfun$process$1$$anonfun$apply$1.apply(VerticesProcessor.scala:147)

at com.vesoft.nebula.exchange.processor.VerticesProcessor$$anonfun$process$1$$anonfun$apply$1.apply(VerticesProcessor.scala:117)

at scala.collection.Iterator$$anon$11.next(Iterator.scala:410)

at scala.collection.Iterator$$anon$11.next(Iterator.scala:410)

at org.apache.spark.sql.catalyst.expressions.GeneratedClass$GeneratedIteratorForCodegenStage2.sort_addToSorter_0$(Unknown Source)

at org.apache.spark.sql.catalyst.expressions.GeneratedClass$GeneratedIteratorForCodegenStage2.processNext(Unknown Source)

at org.apache.spark.sql.execution.BufferedRowIterator.hasNext(BufferedRowIterator.java:43)

at org.apache.spark.sql.execution.WholeStageCodegenExec$$anonfun$13$$anon$1.hasNext(WholeStageCodegenExec.scala:636)

at scala.collection.Iterator$$anon$11.hasNext(Iterator.scala:409)

at scala.collection.Iterator$$anon$11.hasNext(Iterator.scala:409)

at scala.collection.Iterator$class.foreach(Iterator.scala:891)

at scala.collection.AbstractIterator.foreach(Iterator.scala:1334)

at com.vesoft.nebula.exchange.processor.VerticesProcessor$$anonfun$process$2.apply(VerticesProcessor.scala:160)

at com.vesoft.nebula.exchange.processor.VerticesProcessor$$anonfun$process$2.apply(VerticesProcessor.scala:153)

at org.apache.spark.rdd.RDD$$anonfun$foreachPartition$1$$anonfun$apply$28.apply(RDD.scala:980)

at org.apache.spark.rdd.RDD$$anonfun$foreachPartition$1$$anonfun$apply$28.apply(RDD.scala:980)

at org.apache.spark.SparkContext$$anonfun$runJob$5.apply(SparkContext.scala:2101)

at org.apache.spark.SparkContext$$anonfun$runJob$5.apply(SparkContext.scala:2101)

at org.apache.spark.scheduler.ResultTask.runTask(ResultTask.scala:90)

at org.apache.spark.scheduler.Task.run(Task.scala:123)

at org.apache.spark.executor.Executor$TaskRunner$$anonfun$10.apply(Executor.scala:408)

at org.apache.spark.util.Utils$.tryWithSafeFinally(Utils.scala:1360)

at org.apache.spark.executor.Executor$TaskRunner.run(Executor.scala:414)

at java.util.concurrent.ThreadPoolExecutor.runWorker(ThreadPoolExecutor.java:1149)

at java.util.concurrent.ThreadPoolExecutor$Worker.run(ThreadPoolExecutor.java:624)

at java.lang.Thread.run(Thread.java:748)

21/01/21 14:50:21 INFO spark.SparkContext: Invoking stop() from shutdown hook

21/01/21 14:50:21 INFO server.AbstractConnector: Stopped Spark@60e949e1{HTTP/1.1,[http/1.1]}{0.0.0.0:4040}

21/01/21 14:50:21 INFO ui.SparkUI: Stopped Spark web UI at http://localhost:4040

21/01/21 14:50:21 INFO cluster.StandaloneSchedulerBackend: Shutting down all executors

21/01/21 14:50:21 INFO cluster.CoarseGrainedSchedulerBackend$DriverEndpoint: Asking each executor to shut down

21/01/21 14:50:21 INFO spark.MapOutputTrackerMasterEndpoint: MapOutputTrackerMasterEndpoint stopped!

21/01/21 14:50:21 INFO memory.MemoryStore: MemoryStore cleared

21/01/21 14:50:21 INFO storage.BlockManager: BlockManager stopped

21/01/21 14:50:21 INFO storage.BlockManagerMaster: BlockManagerMaster stopped

21/01/21 14:50:21 INFO scheduler.OutputCommitCoordinator$OutputCommitCoordinatorEndpoint: OutputCommitCoordinator stopped!

21/01/21 14:50:21 INFO spark.SparkContext: Successfully stopped SparkContext

21/01/21 14:50:21 INFO util.ShutdownHookManager: Shutdown hook called

21/01/21 14:50:21 INFO util.ShutdownHookManager: Deleting directory /home/consumer/nebula-docker-compose-v2.0/spark-2.4.7/spark-36a07b28-1278-4098-aeda-b6f793dc5852

21/01/21 14:50:21 INFO util.ShutdownHookManager: Deleting directory /tmp/spark-31c7818f-e103-4c18-bc80-f3ab9be230b9

(base) [consumer@localhost nebula-docker-compose-v2.0]$

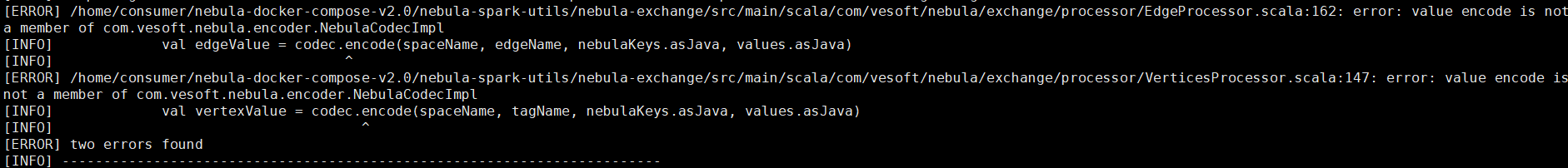

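The client interface was updated yesterday afternoon, and the corresponding Exchange update has not been merged yet. You can pull the code from this PR.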
