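Question template:

- Nebula version: 1.2

- Deployment (distributed / standalone / Docker / DBaaS): distributed

- Hardware info

- Disk (SSD recommended): SSD

- CPU and memory info

- Detailed description of the problem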

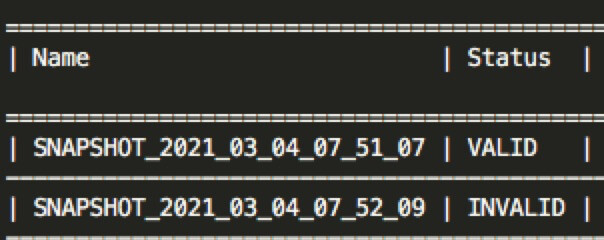

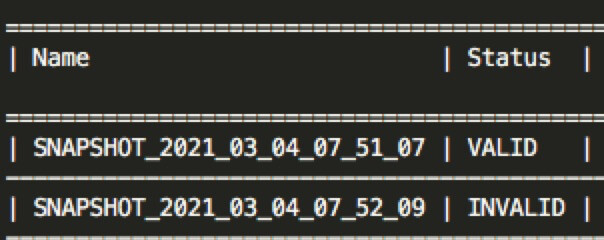

CREATE SNAPSHOT fails.

However, SHOW SNAPSHOTS shows that the snapshot exists.

Is this built from the latest master branch?

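The first error, 409, means a leader change happened during the checkpoint, which made the checkpoint fail.

However, the fact that snapshots are being created automatically needs investigating; it looks like one snapshot per minute. Has the application side configured a scheduled checkpoint?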
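To check the "one snapshot per minute" observation, one can sort the snapshot names reported by SHOW SNAPSHOTS and look at the gaps between consecutive ones: evenly spaced gaps would suggest something on a timer, irregular ones something else. A minimal Python sketch, assuming the snapshot names embed their creation time as `SNAPSHOT_YYYY_MM_DD_HH_MM_SS` (an assumption; adjust the regex to the names your cluster actually lists). The sample names are hypothetical.

```python
import re
from datetime import datetime

# Assumed name format: SNAPSHOT_YYYY_MM_DD_HH_MM_SS. Check it against SHOW SNAPSHOTS.
NAME_RE = re.compile(r"SNAPSHOT_(\d{4})_(\d{2})_(\d{2})_(\d{2})_(\d{2})_(\d{2})")


def snapshot_times(names):
    """Parse the creation time embedded in each snapshot name; skip names that don't match."""
    times = []
    for name in names:
        m = NAME_RE.search(name)
        if m:
            times.append(datetime(*(int(g) for g in m.groups())))
    return sorted(times)


def gaps_in_seconds(names):
    """Seconds between consecutive snapshots, oldest first."""
    times = snapshot_times(names)
    return [(later - earlier).total_seconds() for earlier, later in zip(times, times[1:])]


if __name__ == "__main__":
    # Hypothetical names, for illustration only.
    sample = [
        "SNAPSHOT_2021_03_09_10_02_00",
        "SNAPSHOT_2021_03_09_10_00_00",
        "SNAPSHOT_2021_03_09_10_01_00",
    ]
    print(gaps_in_seconds(sample))  # [60.0, 60.0]
```

Names that do not match the pattern are skipped rather than rejected, so the function can be fed the raw SHOW SNAPSHOTS output line by line.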

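No BALANCE LEADER was run during the checkpoint, and the application side has no scheduled checkpoint. It looks as though the operation is retried automatically after it fails.

It was deployed with Docker Swarm using the image vesoft/nebula-graphd:v1.2.0. The deployment is more than a month old, so it is probably not the latest.

Which is the latest Docker image?

I updated the image. It succeeded a few times, but now it fails again:

Error: [ERROR (-8)]: RPC failure in MetaClient: N6apache6thrift9transport19TTransportExceptionE: Timed Out

I ran it only once, but it produced 3 snapshots:

storaged log: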

E0309 09:59:53.399646 29 NebulaStore.cpp:815] Part sync failed. space : 739 Part : 267

E0309 09:59:53.450421 29 NebulaStore.cpp:815] Part sync failed. space : 739 Part : 267

E0309 09:59:53.501212 29 NebulaStore.cpp:815] Part sync failed. space : 739 Part : 267

E0309 09:59:53.551978 29 NebulaStore.cpp:815] Part sync failed. space : 739 Part : 267

E0309 09:59:53.602739 29 NebulaStore.cpp:815] Part sync failed. space : 739 Part : 267

E0309 09:59:53.653492 29 NebulaStore.cpp:815] Part sync failed. space : 739 Part : 267

E0309 09:59:53.704360 29 NebulaStore.cpp:815] Part sync failed. space : 739 Part : 267

E0309 09:59:53.755228 23 NebulaStore.cpp:815] Part sync failed. space : 739 Part : 267

E0309 09:59:53.806111 23 NebulaStore.cpp:815] Part sync failed. space : 739 Part : 267

E0309 09:59:53.856842 23 NebulaStore.cpp:815] Part sync failed. space : 739 Part : 267

E0309 09:59:53.907537 23 NebulaStore.cpp:815] Part sync failed. space : 739 Part : 267

E0309 09:59:53.958381 23 NebulaStore.cpp:815] Part sync failed. space : 739 Part : 267

E0309 09:59:54.009222 23 NebulaStore.cpp:815] Part sync failed. space : 739 Part : 267

E0309 09:59:54.060096 23 NebulaStore.cpp:815] Part sync failed. space : 739 Part : 267

E0309 09:59:54.111002 26 NebulaStore.cpp:815] Part sync failed. space : 739 Part : 267

E0309 09:59:54.161890 29 NebulaStore.cpp:815] Part sync failed. space : 739 Part : 267

E0309 09:59:54.212622 26 NebulaStore.cpp:815] Part sync failed. space : 739 Part : 267

E0309 09:59:54.263267 40 NebulaStore.cpp:815] Part sync failed. space : 739 Part : 267

E0309 09:59:54.314007 40 NebulaStore.cpp:815] Part sync failed. space : 739 Part : 267

E0309 09:59:54.364724 40 NebulaStore.cpp:815] Part sync failed. space : 739 Part : 267

E0309 09:59:54.415467 40 NebulaStore.cpp:815] Part sync failed. space : 739 Part : 267

E0309 09:59:54.466233 40 NebulaStore.cpp:815] Part sync failed. space : 739 Part : 267

E0309 09:59:54.516908 40 NebulaStore.cpp:815] Part sync failed. space : 739 Part : 267

E0309 09:59:54.567560 40 NebulaStore.cpp:815] Part sync failed. space : 739 Part : 267

E0309 09:59:54.618185 40 NebulaStore.cpp:815] Part sync failed. space : 739 Part : 267

E0309 09:59:54.668829 40 NebulaStore.cpp:815] Part sync failed. space : 739 Part : 267
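All 26 storaged lines above refer to the same part (space 739, part 267) and arrive roughly every 50 ms. For a longer log, a minimal Python sketch can produce that summary; it assumes the standard glog prefix seen above (`E0309 09:59:53.399646 29 NebulaStore.cpp:815] ...`) and, because glog omits the year, takes it as a parameter. `parse_glog` and `summarize_part_sync` are hypothetical helpers, not Nebula tooling.

```python
import re
from collections import Counter
from datetime import datetime

# glog prefix: <severity><MMDD> <HH:MM:SS.micros> <thread id> <file:line>] <message>
GLOG_RE = re.compile(
    r"^([IWEF])(\d{4}) (\d{2}:\d{2}:\d{2}\.\d{6})\s+(\d+)\s+([^\]]+)\] (.*)$"
)
PART_RE = re.compile(r"space : (\d+) Part : (\d+)")


def parse_glog(line, year=2021):
    """Split one glog line into (timestamp, severity, thread, source, message).

    glog omits the year, so it is passed in (2021 matches the dates in this thread).
    Returns None for lines that are not glog records.
    """
    m = GLOG_RE.match(line.strip())
    if not m:
        return None
    sev, mmdd, hms, thread, src, msg = m.groups()
    ts = datetime.strptime(f"{year}{mmdd} {hms}", "%Y%m%d %H:%M:%S.%f")
    return ts, sev, int(thread), src, msg


def summarize_part_sync(lines, year=2021):
    """Count 'Part sync failed' records per (space, part) and the mean gap between them in ms.

    Gaps are measured across all matching records, so the mean is most meaningful
    when a single part dominates, as in the excerpt above.
    """
    counts = Counter()
    stamps = []
    for line in lines:
        rec = parse_glog(line, year)
        if rec is None or "Part sync failed" not in rec[4]:
            continue
        m = PART_RE.search(rec[4])
        if m:
            counts[(int(m.group(1)), int(m.group(2)))] += 1
            stamps.append(rec[0])
    gaps = [(b - a).total_seconds() * 1000 for a, b in zip(stamps, stamps[1:])]
    mean_gap_ms = sum(gaps) / len(gaps) if gaps else None
    return counts, mean_gap_ms
```

Fed the 26 lines above, this should report the single key (739, 267) and a mean gap of about 50.8 ms (1.269 s from first to last record over 25 intervals).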

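Please post your cluster configuration and architecture, and describe the steps to reproduce.

I could not reproduce this. Judging from the code logic, a failed snapshot is unlikely to be retried, and it is also unlikely to produce three snapshots with different names.

The images I am using now are: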

vesoft/nebula-graphd:v1.2.0

vesoft/nebula-metad:v1.2.0

vesoft/nebula-storaged:v1.2.0

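It turns out these images are 3 months old.

I'd like to try the latest Docker image first. I'm on version 1.0; which of the following images should I use?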

1.

vesoft/nebula-graphd:nightly

vesoft/nebula-metad:nightly

vesoft/nebula-storaged:nightly

2.

vesoft/nebula-graphd:latest

vesoft/nebula-metad:latest

vesoft/nebula-storaged:latest

It may be because you are not running the latest code.

The cluster has 15 machines, deployed with Docker Swarm: 5 metad, 15 storaged, and 15 graphd.

Configuration:

meta:

- --meta_server_addrs=

- --local_ip=

- --ws_ip=

- --port=45500

- --data_path=/data/meta

- --log_dir=/logs

- --v=0

- --minloglevel=2

- --heartbeat_interval_secs=10

storaged:

- --meta_server_addrs=

- --local_ip=

- --ws_ip=

- --port=44500

- --rocksdb_block_cache=32768

- --rocksdb_batch_size=4096

- --data_path=/data/storage0,/data/storage1,/data/storage2,/data/storage3,/data/storage4,/data/storage5,/data/storage6

- --log_dir=/logs

- --v=0

- --minloglevel=2

- --auto_remove_invalid_space=true

graphd:

- --meta_server_addrs=

- --port=3699

- --ws_ip=

- --log_dir=/logs

- --v=0

- --minloglevel=2

- --enable_authorize=true
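Comparing the three flag lists above by eye is error-prone. A minimal Python sketch that parses the `- --key=value` lines as pasted and reports flags set in more than one service with different values; `parse_flags` and `diff_shared` are hypothetical helpers, not Nebula tooling. Flags that appear blank in the post, such as `--meta_server_addrs=`, are treated as empty strings.

```python
def parse_flags(lines):
    """Turn flag lines such as '- --port=44500' or '--v=0' into {'port': '44500', 'v': '0'}."""
    flags = {}
    for line in lines:
        line = line.strip()
        if line.startswith("- "):  # YAML list item, as in the post
            line = line[2:].strip()
        if not line.startswith("--"):
            continue
        key, _, value = line[2:].partition("=")
        flags[key] = value
    return flags


def diff_shared(services):
    """Flags present in two or more services whose values differ, keyed by flag name."""
    parsed = {name: parse_flags(lines) for name, lines in services.items()}
    keys = sorted(set().union(*(f.keys() for f in parsed.values())))
    report = {}
    for key in keys:
        values = {name: f[key] for name, f in parsed.items() if key in f}
        if len(values) > 1 and len(set(values.values())) > 1:
            report[key] = values
    return report


if __name__ == "__main__":
    # A subset of the flags posted above.
    services = {
        "metad": ["- --port=45500", "- --log_dir=/logs", "- --heartbeat_interval_secs=10"],
        "storaged": ["- --port=44500", "- --log_dir=/logs"],
        "graphd": ["- --port=3699", "- --log_dir=/logs"],
    }
    print(diff_shared(services))
    # {'port': {'metad': '45500', 'storaged': '44500', 'graphd': '3699'}}
```

Flags that only one service sets (here `heartbeat_interval_secs` on metad) are left out on purpose; the report only covers settings the services actually share.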

Other configuration:

(root@nebula) [nba]> show configs

| module | name | type | mode | value |
|---|---|---|---|---|
| GRAPH | v | INT64 | MUTABLE | 0 |
| GRAPH | minloglevel | INT64 | MUTABLE | 2 |
| GRAPH | slow_op_threshhold_ms | INT64 | MUTABLE | 50 |
| GRAPH | heartbeat_interval_secs | INT64 | MUTABLE | 3 |
| GRAPH | meta_client_retry_times | INT64 | MUTABLE | 3 |
| STORAGE | v | INT64 | MUTABLE | 0 |
| STORAGE | wal_ttl | INT64 | MUTABLE | 3600 |
| STORAGE | minloglevel | INT64 | MUTABLE | 2 |
| STORAGE | rocksdb_db_options | NESTED | MUTABLE | {"max_background_jobs": "1", "max_subcompactions": "1"} |
| STORAGE | enable_multi_versions | BOOL | MUTABLE | False |
| STORAGE | slow_op_threshhold_ms | INT64 | MUTABLE | 50 |
| STORAGE | clean_wal_interval_secs | INT64 | MUTABLE | 600 |
| STORAGE | heartbeat_interval_secs | INT64 | MUTABLE | 10 |
| STORAGE | meta_client_retry_times | INT64 | MUTABLE | 3 |
| STORAGE | enable_reservoir_sampling | BOOL | MUTABLE | False |
| STORAGE | custom_filter_interval_secs | INT64 | MUTABLE | 86400 |
| STORAGE | max_edge_returned_per_vertex | INT64 | MUTABLE | 2147483647 |
| STORAGE | rocksdb_column_family_options | NESTED | MUTABLE | {"max_bytes_for_level_base": "268435456", "max_write_buffer_number": "4", "disable_auto_compactions": "false", "write_buffer_size": "67108864"} |
| STORAGE | rocksdb_block_based_table_options | NESTED | MUTABLE | {"block_size": "8192"} |

Got 19 rows (Time spent: 12.493/14.063 ms)

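Steps to reproduce:

It's simple: just run CREATE SNAPSHOT.

I suspect the meta service has gotten into an inconsistent state. Could you try keeping only one metad?

I kept only one metad: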

(root@nebula) [(none)]> create snapshot

[ERROR (-8)]: RPC failure in MetaClient: N6apache6thrift9transport19TTransportExceptionE: AsyncSocketException: connect failed, type = Socket not open, errno = 111 (Connection refused): Connection refused

[root@node-01 logs]# docker service list

| ID | NAME | MODE | REPLICAS | IMAGE | PORTS |
|---|---|---|---|---|---|
| pomfudrdtd40 | nebula_graphd0 | replicated | 1/1 | mirror.jd.com/9n/vesoft/nebula-graphd:v1.2.0 | |
| 58084hcew9ve | nebula_graphd1 | replicated | 1/1 | mirror.jd.com/9n/vesoft/nebula-graphd:v1.2.0 | |
| 80ajief2nraj | nebula_graphd2 | replicated | 1/1 | mirror.jd.com/9n/vesoft/nebula-graphd:v1.2.0 | |
| iis745y1s1wy | nebula_graphd3 | replicated | 1/1 | mirror.jd.com/9n/vesoft/nebula-graphd:v1.2.0 | |
| twffk519r71n | nebula_graphd4 | replicated | 1/1 | mirror.jd.com/9n/vesoft/nebula-graphd:v1.2.0 | |
| 1hd8y2euljs2 | nebula_graphd5 | replicated | 1/1 | mirror.jd.com/9n/vesoft/nebula-graphd:v1.2.0 | |
| lqmz7zy0kl6p | nebula_graphd6 | replicated | 1/1 | mirror.jd.com/9n/vesoft/nebula-graphd:v1.2.0 | |
| motj0hbdl4il | nebula_graphd7 | replicated | 1/1 | mirror.jd.com/9n/vesoft/nebula-graphd:v1.2.0 | |
| oc8fqv1oib4r | nebula_graphd8 | replicated | 1/1 | mirror.jd.com/9n/vesoft/nebula-graphd:v1.2.0 | |
| 80y21eh0quaa | nebula_graphd9 | replicated | 1/1 | mirror.jd.com/9n/vesoft/nebula-graphd:v1.2.0 | |
| vwmsya6ljlhj | nebula_graphd10 | replicated | 1/1 | mirror.jd.com/9n/vesoft/nebula-graphd:v1.2.0 | |
| u59h2dsev827 | nebula_graphd11 | replicated | 1/1 | mirror.jd.com/9n/vesoft/nebula-graphd:v1.2.0 | |
| ym42o54ywcji | nebula_graphd12 | replicated | 1/1 | mirror.jd.com/9n/vesoft/nebula-graphd:v1.2.0 | |
| us1ntt8g0b2p | nebula_graphd13 | replicated | 1/1 | mirror.jd.com/9n/vesoft/nebula-graphd:v1.2.0 | |
| cyyjwkkqbawq | nebula_graphd14 | replicated | 1/1 | mirror.jd.com/9n/vesoft/nebula-graphd:v1.2.0 | |
| 9jrexp96ol88 | nebula_metad0 | replicated | 1/1 | mirror.jd.com/9n/vesoft/nebula-metad:v1.2.0 | |
| 0z1eu5vub7cz | nebula_metad1 | replicated | 0/0 | mirror.jd.com/9n/vesoft/nebula-metad:v1.2.0 | |
| to2umpv44jbm | nebula_metad2 | replicated | 0/0 | mirror.jd.com/9n/vesoft/nebula-metad:v1.2.0 | |
| x6e6e0ls3m4t | nebula_metad3 | replicated | 0/0 | mirror.jd.com/9n/vesoft/nebula-metad:v1.2.0 | |
| l6vs48mv2oq3 | nebula_metad4 | replicated | 0/0 | mirror.jd.com/9n/vesoft/nebula-metad:v1.2.0 | |
| 33lixqtz7nzh | nebula_storaged0 | replicated | 1/1 | mirror.jd.com/9n/vesoft/nebula-storaged:v1.2.0 | |
| 59an08nml659 | nebula_storaged1 | replicated | 1/1 | mirror.jd.com/9n/vesoft/nebula-storaged:v1.2.0 | |
| 6y6hmwb3rfiv | nebula_storaged2 | replicated | 1/1 | mirror.jd.com/9n/vesoft/nebula-storaged:v1.2.0 | |
| pg0smiwhk2hg | nebula_storaged3 | replicated | 1/1 | mirror.jd.com/9n/vesoft/nebula-storaged:v1.2.0 | |
| lcwkevlbtjox | nebula_storaged4 | replicated | 1/1 | mirror.jd.com/9n/vesoft/nebula-storaged:v1.2.0 | |
| p9l7knsjn6fe | nebula_storaged5 | replicated | 1/1 | mirror.jd.com/9n/vesoft/nebula-storaged:v1.2.0 | |
| d34t2cdsp973 | nebula_storaged6 | replicated | 1/1 | mirror.jd.com/9n/vesoft/nebula-storaged:v1.2.0 | |
| ssakb491sico | nebula_storaged7 | replicated | 1/1 | mirror.jd.com/9n/vesoft/nebula-storaged:v1.2.0 | |
| 72q5pvg90w9m | nebula_storaged8 | replicated | 1/1 | mirror.jd.com/9n/vesoft/nebula-storaged:v1.2.0 | |
| z82jc8afjah3 | nebula_storaged9 | replicated | 1/1 | mirror.jd.com/9n/vesoft/nebula-storaged:v1.2.0 | |
| pflxngmarl6f | nebula_storaged10 | replicated | 1/1 | mirror.jd.com/9n/vesoft/nebula-storaged:v1.2.0 | |
| 2pcdbv0kv6g2 | nebula_storaged11 | replicated | 1/1 | mirror.jd.com/9n/vesoft/nebula-storaged:v1.2.0 | |
| nr4duju058kl | nebula_storaged12 | replicated | 1/1 | mirror.jd.com/9n/vesoft/nebula-storaged:v1.2.0 | |
| 7yr3s2u6i90j | nebula_storaged13 | replicated | 1/1 | mirror.jd.com/9n/vesoft/nebula-storaged:v1.2.0 | |
| k6gn22ocpnum | nebula_storaged14 | replicated | 1/1 | mirror.jd.com/9n/vesoft/nebula-storaged:v1.2.0 | |
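In this listing, nebula_metad0 is at 1/1 while nebula_metad1 through nebula_metad4 are scaled to 0/0. On a larger swarm, a minimal Python sketch can pick such services out of the raw output; it assumes the default `docker service ls` column layout (ID, NAME, MODE, REPLICAS, IMAGE, PORTS) with a plain `running/desired` REPLICAS value. The helper names are hypothetical.

```python
def parse_service_ls(text):
    """Parse default `docker service ls` output into (name, running, desired, image) tuples.

    Assumes plain 'running/desired' REPLICAS values; annotations such as
    '(max 1 per node)' would shift the columns and are not handled here.
    """
    rows = []
    for line in text.strip().splitlines():
        cols = line.split()
        if len(cols) < 5 or cols[0] == "ID":
            continue  # skip blank/short lines and the header row
        _id, name, _mode, replicas, image = cols[:5]
        running, _, desired = replicas.partition("/")
        rows.append((name, int(running), int(desired), image))
    return rows


def scaled_to_zero(rows):
    """Services whose desired replica count is 0, i.e. deliberately stopped."""
    return [name for name, _running, desired, _image in rows if desired == 0]


def not_converged(rows):
    """Services whose running count differs from the desired count."""
    return [name for name, running, desired, _image in rows if running != desired]


if __name__ == "__main__":
    sample = """ID NAME MODE REPLICAS IMAGE PORTS
9jrexp96ol88 nebula_metad0 replicated 1/1 mirror.jd.com/9n/vesoft/nebula-metad:v1.2.0
0z1eu5vub7cz nebula_metad1 replicated 0/0 mirror.jd.com/9n/vesoft/nebula-metad:v1.2.0
"""
    rows = parse_service_ls(sample)
    print(scaled_to_zero(rows))  # ['nebula_metad1']
    print(not_converged(rows))  # []
```

Splitting on whitespace is enough here because none of the default columns contain spaces; a `--format` template would be the sturdier choice if the output ever carries extra annotations.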

metad log:

E0309 11:20:09.176964 147 RaftPart.cpp:365] [Port: 45501, Space: 0, Part: 0] The partition is not a leader

E0309 11:20:09.177827 147 RaftPart.cpp:635] [Port: 45501, Space: 0, Part: 0] Cannot append logs, clean the buffer

E0309 11:22:56.943444 44 RaftPart.cpp:773] [Port: 45501, Space: 0, Part: 0] Replicate logs failed

E0309 11:24:16.970481 143 CreateSnapshotProcessor.cpp:54] Write snapshot meta error

E0309 11:24:18.973976 143 CreateSnapshotProcessor.cpp:54] Write snapshot meta error

E0309 11:24:31.523420 143 RaftPart.cpp:365] [Port: 45501, Space: 0, Part: 0] The partition is not a leader

E0309 11:24:31.523509 143 RaftPart.cpp:635] [Port: 45501, Space: 0, Part: 0] Cannot append logs, clean the buffer

E0309 11:25:09.061215 143 CreateSnapshotProcessor.cpp:54] Write snapshot meta error

E0309 11:26:13.871280 143 RaftPart.cpp:365] [Port: 45501, Space: 0, Part: 0] The partition is not a leader

E0309 11:26:13.871376 143 RaftPart.cpp:635] [Port: 45501, Space: 0, Part: 0] Cannot append logs, clean the buffer

storaged log:

E0309 11:27:20.739928 64 MetaClient.cpp:110] Heartbeat failed, status:Unknown error(409): Leader changed!

E0309 11:27:33.754613 63 MetaClient.cpp:524] Send request to [172.18.154.38:45500], exceed retry limit

E0309 11:27:33.754868 64 MetaClient.cpp:110] Heartbeat failed, status:RPC failure in MetaClient: N6apache6thrift9transport19TTransportExceptionE: AsyncSocketException: connect failed, type = Socket not open, errno = 111 (Connection refused): Connection refused

E0309 11:27:46.768954 48 MetaClient.cpp:524] Send request to [172.18.154.38:45500], exceed retry limit

E0309 11:27:46.769156 64 MetaClient.cpp:110] Heartbeat failed, status:RPC failure in MetaClient: N6apache6thrift9transport19TTransportExceptionE: AsyncSocketException: connect failed, type = Socket not open, errno = 111 (Connection refused): Connection refused

E0309 11:27:59.784420 57 MetaClient.cpp:524] Send request to [172.18.153.39:45500], exceed retry limit

E0309 11:27:59.784773 64 MetaClient.cpp:110] Heartbeat failed, status:RPC failure in MetaClient: N6apache6thrift9transport19TTransportExceptionE: AsyncSocketException: connect failed, type = Socket not open, errno = 111 (Connection refused): Connection refused

E0309 11:28:12.793458 64 MetaClient.cpp:110] Heartbeat failed, status:Unknown error(409): Leader changed!
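In this storaged log, "exceed retry limit" appears for two different meta addresses, 172.18.154.38:45500 and 172.18.153.39:45500, even though only one metad is running. That would be consistent with the storaged's `--meta_server_addrs` still listing metads that were scaled to 0/0, although the log alone does not prove it. A minimal Python sketch to tally these lines over a longer log; `meta_targets` and `heartbeat_failure_kinds` are hypothetical helpers keyed on the message text shown above.

```python
import re
from collections import Counter

TARGET_RE = re.compile(r"Send request to \[([\d.]+:\d+)\], exceed retry limit")


def meta_targets(lines):
    """Count how often each meta address hit the retry limit in a storaged/graphd log."""
    counts = Counter()
    for line in lines:
        m = TARGET_RE.search(line)
        if m:
            counts[m.group(1)] += 1
    return counts


def heartbeat_failure_kinds(lines):
    """Bucket 'Heartbeat failed' lines by cause, based on the message text."""
    kinds = Counter()
    for line in lines:
        if "Heartbeat failed" not in line:
            continue
        if "Leader changed" in line:
            kinds["leader_changed"] += 1
        elif "Connection refused" in line:
            kinds["connection_refused"] += 1
        else:
            kinds["other"] += 1
    return kinds
```

Over the eight lines above, this should give two retry-limit hits on 172.18.154.38:45500 and one on 172.18.153.39:45500, plus two "Leader changed" and three "Connection refused" heartbeat failures.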

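If the meta service is in an inconsistent state, how should I fix it?

After keeping only one metad, every Leader count is 0.

Is the metad you kept listed in the --meta_server_addrs flag of graphd and storaged?

My guess is that the metad you kept is not the master that was actually serving requests.

You can try changing the --meta_server_addrs flag of graphd and storaged so that it lists only that one metad, and then restart everything (all storaged, all graphd, and the one metad).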
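The change suggested above amounts to editing one flag in every graphd and storaged service definition. A minimal Python sketch of that edit on a service's argument list, which also answers the earlier question by refusing an address that is not already configured; the addresses in the usage lines are hypothetical placeholders, and writing the result back to the stack file and redeploying is left to your Swarm tooling.

```python
def meta_addrs(args):
    """Return the host:port entries of the --meta_server_addrs flag, or [] if it is absent."""
    for arg in args:
        if arg.startswith("--meta_server_addrs="):
            value = arg.split("=", 1)[1]
            return [a.strip() for a in value.split(",") if a.strip()]
    return []


def keep_single_meta(args, keep):
    """Copy `args` with --meta_server_addrs reduced to the single address `keep`.

    Raises ValueError if `keep` is not one of the addresses already configured,
    i.e. the kept metad is not one the service was pointing at.
    """
    current = meta_addrs(args)
    if keep not in current:
        raise ValueError(f"{keep} is not in --meta_server_addrs={','.join(current)}")
    return [
        f"--meta_server_addrs={keep}" if a.startswith("--meta_server_addrs=") else a
        for a in args
    ]


if __name__ == "__main__":
    # Hypothetical addresses; substitute the real metad host:port values.
    storaged_args = ["--meta_server_addrs=10.0.0.1:45500,10.0.0.2:45500", "--port=44500"]
    print(keep_single_meta(storaged_args, "10.0.0.1:45500"))
    # ['--meta_server_addrs=10.0.0.1:45500', '--port=44500']
```

Returning a new list instead of editing in place keeps the original arguments available for rollback if the single-metad experiment does not help.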

[ERROR (-8)]: RPC failure in MetaClient: N6apache6thrift9transport19TTransportExceptionE: Timed Out

Tue Mar 9 11:14:24 2021

E0309 11:14:47.508344 144 SnapShot.cpp:68] Send blocking sign error on host : 172.18.154.38:44500

E0309 11:14:49.157444 144 SnapShot.cpp:68] Send blocking sign error on host : 172.18.155.101:44500

E0309 11:14:50.782269 144 SnapShot.cpp:68] Send blocking sign error on host : 172.18.155.102:44500

E0309 11:14:52.918222 144 SnapShot.cpp:68] Send blocking sign error on host : 172.18.153.31:44500

E0309 11:14:55.065866 144 SnapShot.cpp:68] Send blocking sign error on host : 172.18.153.33:44500

E0309 11:14:57.278834 144 SnapShot.cpp:68] Send blocking sign error on host : 172.18.153.39:44500

E0309 11:14:59.217269 144 SnapShot.cpp:68] Send blocking sign error on host : 172.18.153.40:44500

E0309 11:15:00.958760 144 SnapShot.cpp:68] Send blocking sign error on host : 172.18.153.42:44500

E0309 11:15:02.733294 144 SnapShot.cpp:68] Send blocking sign error on host : 172.18.153.44:44500

E0309 11:15:04.362973 144 SnapShot.cpp:68] Send blocking sign error on host : 172.18.153.46:44500

E0309 11:15:06.031448 144 SnapShot.cpp:68] Send blocking sign error on host : 172.18.153.48:44500

E0309 11:15:07.661350 144 SnapShot.cpp:68] Send blocking sign error on host : 172.18.153.55:44500

E0309 11:15:10.424243 144 SnapShot.cpp:68] Send blocking sign error on host : 172.18.153.57:44500

E0309 11:15:12.092144 144 SnapShot.cpp:68] Send blocking sign error on host : 172.18.153.59:44500

E0309 11:15:13.716800 144 SnapShot.cpp:68] Send blocking sign error on host : 172.18.153.61:44500

E0309 11:15:15.579665 144 SnapShot.cpp:68] Send blocking sign error on host : 172.18.154.38:44500

E0309 11:15:17.234797 144 SnapShot.cpp:68] Send blocking sign error on host : 172.18.155.101:44500

E0309 11:15:19.420120 144 SnapShot.cpp:68] Send blocking sign error on host : 172.18.155.102:44500

E0309 11:15:21.591212 144 SnapShot.cpp:68] Send blocking sign error on host : 172.18.153.31:44500

E0309 11:15:23.432749 144 SnapShot.cpp:68] Send blocking sign error on host : 172.18.153.33:44500

E0309 11:15:25.347010 144 SnapShot.cpp:68] Send blocking sign error on host : 172.18.153.39:44500

E0309 11:15:27.279222 144 SnapShot.cpp:68] Send blocking sign error on host : 172.18.153.40:44500

E0309 11:15:29.606353 144 SnapShot.cpp:68] Send blocking sign error on host : 172.18.153.42:44500

E0309 11:15:31.310940 144 SnapShot.cpp:68] Send blocking sign error on host : 172.18.153.44:44500

E0309 11:15:32.941073 144 SnapShot.cpp:68] Send blocking sign error on host : 172.18.153.46:44500

E0309 11:15:34.608043 144 SnapShot.cpp:68] Send blocking sign error on host : 172.18.153.48:44500

E0309 11:15:36.234722 144 SnapShot.cpp:68] Send blocking sign error on host : 172.18.153.55:44500

E0309 11:15:39.189229 144 SnapShot.cpp:68] Send blocking sign error on host : 172.18.153.57:44500
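In the metad log above, the same 15 storaged hosts fail one after another, roughly 1.6 to 3 s apart, and the sequence then restarts at 172.18.154.38 (11:15:15). One pass over the cluster therefore took about 26 s (11:14:47.5 to 11:15:13.7). A pass that long could plausibly outlast the client's RPC timeout, which would be consistent with the `Timed Out` error above; that is a reading of the timestamps, not a confirmed cause. A minimal Python sketch that splits such lines into rounds, assuming a round ends when a host repeats; `blocking_sign_rounds` is a hypothetical helper.

```python
import re
from datetime import datetime

SIGN_RE = re.compile(
    r"^E(\d{4}) (\d{2}:\d{2}:\d{2}\.\d{6}).*Send blocking sign error on host : (\S+)$"
)


def blocking_sign_rounds(lines, year=2021):
    """Group 'Send blocking sign error' lines into rounds; a round ends when a host repeats.

    glog omits the year, so it is passed in. Returns a list of
    (hosts_in_round, seconds_from_first_to_last_failure_in_round).
    """
    rounds, current, seen = [], [], set()
    for line in lines:
        m = SIGN_RE.match(line.strip())
        if not m:
            continue
        ts = datetime.strptime(f"{year}{m.group(1)} {m.group(2)}", "%Y%m%d %H:%M:%S.%f")
        host = m.group(3)
        if host in seen:  # host already failed in this round: start a new round
            rounds.append(current)
            current, seen = [], set()
        current.append((ts, host))
        seen.add(host)
    if current:
        rounds.append(current)
    return [([h for _, h in r], (r[-1][0] - r[0][0]).total_seconds()) for r in rounds]
```

Run over the 28 lines above, this should yield a first round of all 15 storaged hosts spanning about 26.2 s, and a second round of 13 hosts that is cut off where the excerpt ends.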